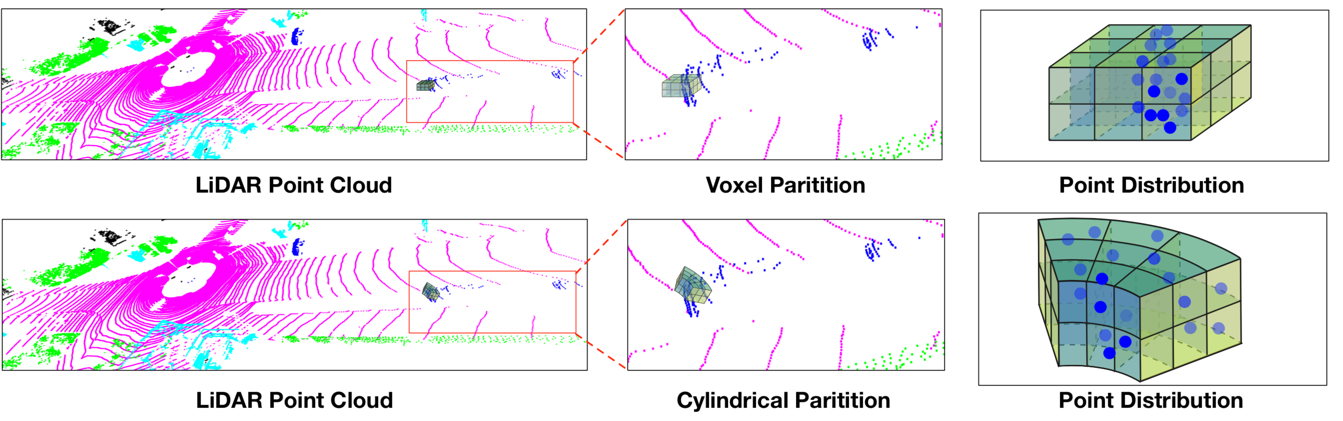

This repository contains the source code of our work "Cylindrical and Asymmetrical 3D Convolution Networks for LiDAR Segmentation".

- 2021-03 [NEW:fire:] Cylinder3D has been accepted to CVPR 2021 as an oral presentation.

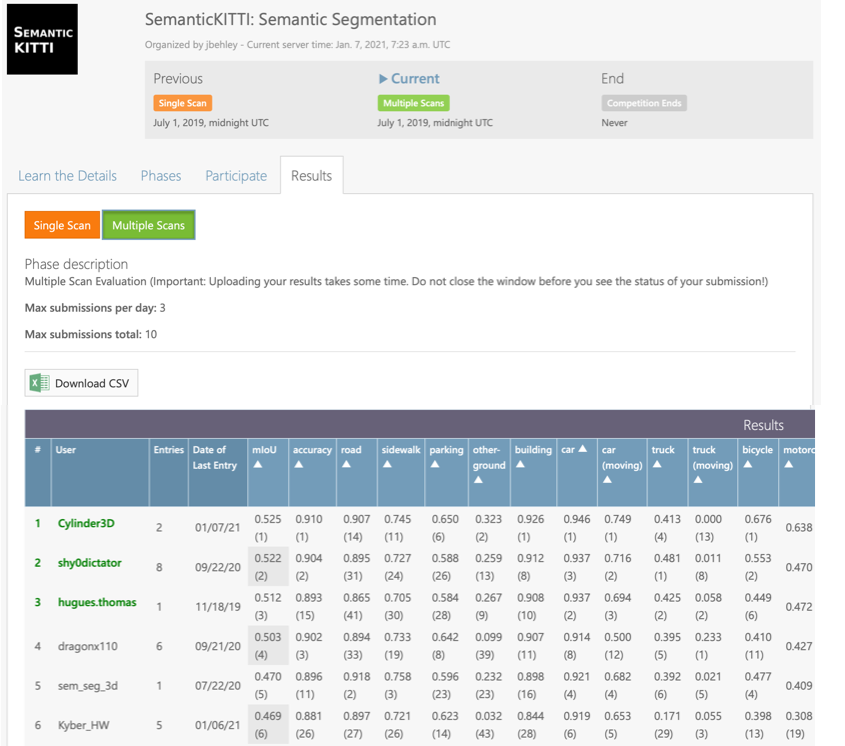

- 2021-01 [NEW:fire:] Cylinder3D ranks 1st on the SemanticKITTI multi-scan semantic segmentation leaderboard.

- 2020-12 [NEW:fire:] Cylinder3D ranks 2nd in the nuScenes LiDAR segmentation challenge, with mIoU=0.779, fwIoU=0.899 and a speed of 10 FPS.

- 2020-12 We released a new version of Cylinder3D with nuScenes dataset support.

- 2020-11 We released a preliminary version, Cylinder3D v0.1, supporting LiDAR semantic segmentation on SemanticKITTI and nuScenes.

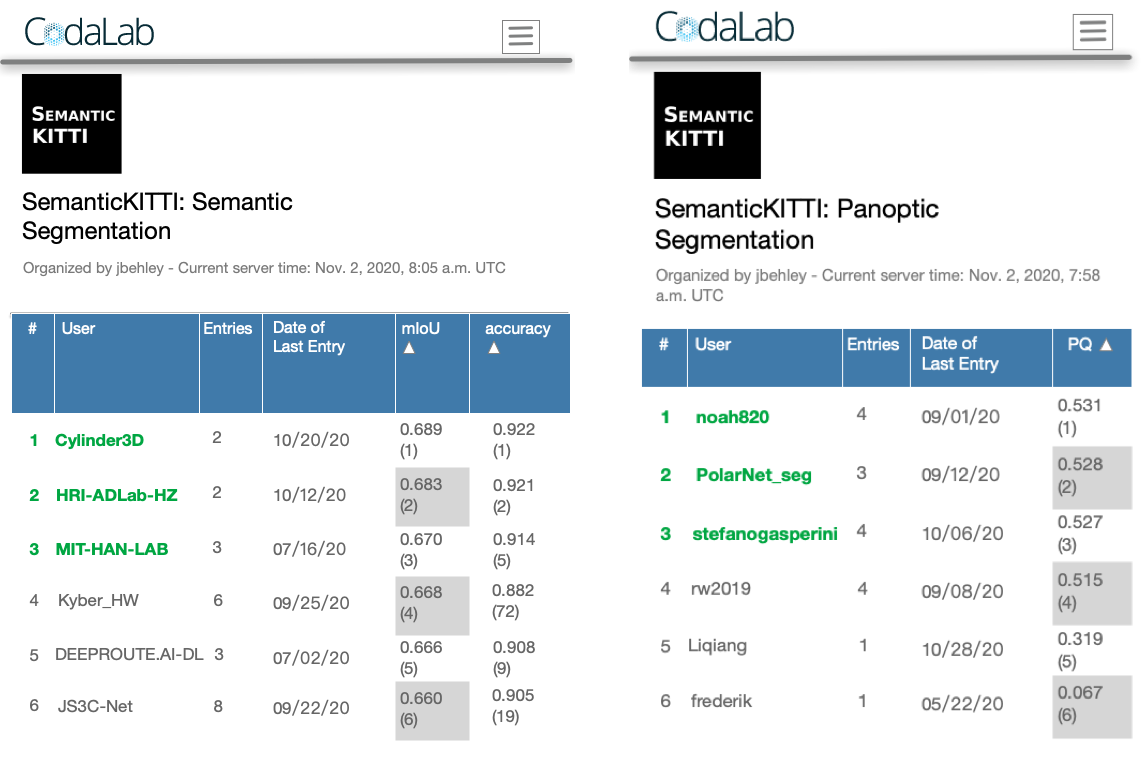

- 2020-11 Our work ranks 1st on the SemanticKITTI semantic segmentation leaderboard (as of the CVPR 2021 submission deadline, and it still ranks 1st in terms of accuracy), and, based on the proposed method, we also rank 1st on the SemanticKITTI panoptic segmentation leaderboard.

Requirements:

- PyTorch >= 1.2

- yaml

- Cython

- torch-scatter

- nuScenes-devkit (optional for nuScenes)

- spconv (tested with spconv==1.2.1 and CUDA 10.2)
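A quick way to see which of these packages are already available is sketched below. It is a hypothetical helper, not part of this repository: the import names (`torch`, `yaml`, `Cython`, `torch_scatter`, `spconv`, `nuscenes`) are assumed, and it does not check the spconv or CUDA versions. Versions are compared as integer tuples rather than strings, because as strings `"1.10" >= "1.2"` is false.

```python
import importlib.util
import re

# Assumed import names for the requirements listed above.
REQUIRED = {
    "PyTorch": "torch",
    "yaml": "yaml",
    "Cython": "Cython",
    "torch-scatter": "torch_scatter",
    "spconv": "spconv",
}
OPTIONAL = {"nuScenes-devkit": "nuscenes"}


def parse_version(version):
    """Turn a version string such as '1.10.0+cu102' into (1, 10, 0)."""
    numbers = []
    for piece in version.split("+")[0].split("."):
        match = re.match(r"\d+", piece)
        if match is None:
            break
        numbers.append(int(match.group()))
    return tuple(numbers)


def meets_min_version(version, minimum):
    """Compare numerically, so '1.10' is correctly treated as newer than '1.2'."""
    return parse_version(version) >= tuple(minimum)


def check_requirements():
    """Map each requirement to True if its module can be found, without importing it."""
    modules = {**REQUIRED, **OPTIONAL}
    return {name: importlib.util.find_spec(mod) is not None for name, mod in modules.items()}


if __name__ == "__main__":
    for name, found in check_requirements().items():
        note = " (optional)" if name in OPTIONAL else ""
        print(f"{name}{note}: {'found' if found else 'missing'}")
    try:
        import torch
    except ImportError:
        torch = None
    if torch is not None:
        print("PyTorch >= 1.2:", meets_min_version(torch.__version__, (1, 2)))
```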

For SemanticKITTI, the data should be organized as follows:

    ./
    ├──
    ├── ...
    └── path_to_data_shown_in_config/
        └── sequences
            ├── 00/
            │   ├── velodyne/
            │   │   ├── 000000.bin
            │   │   ├── 000001.bin
            │   │   └── ...
            │   └── labels/
            │       ├── 000000.label
            │       ├── 000001.label
            │       └── ...
            ├── 08/ # for validation
            ├── 11/ # 11-21 for testing
            └── 21/
                └── ...
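Each frame has a `.bin` scan under `velodyne/` and a `.label` file with the same index under `labels/`. The reader below is a minimal sketch assuming the standard SemanticKITTI binary format, which this README does not describe: scans are flat `float32` arrays of `x, y, z, remission`, and each label is a `uint32` whose lower 16 bits hold the semantic class and upper 16 bits the instance id. The function names and the `root` argument (standing for `path_to_data_shown_in_config`) are hypothetical.

```python
import os

import numpy as np

# 08 is for validation and 11-21 for testing (see the tree above); training on
# the remaining 00-10 sequences is the usual SemanticKITTI split (assumed here).
SPLITS = {
    "train": [0, 1, 2, 3, 4, 5, 6, 7, 9, 10],
    "val": [8],
    "test": list(range(11, 22)),
}


def frame_paths(root, sequence, frame):
    """Return the (.bin, .label) paths of one frame under the data root."""
    seq_dir = os.path.join(root, "sequences", f"{sequence:02d}")
    name = f"{frame:06d}"
    return (os.path.join(seq_dir, "velodyne", name + ".bin"),
            os.path.join(seq_dir, "labels", name + ".label"))


def load_scan(bin_path):
    """Read a scan as an (N, 4) float32 array of x, y, z, remission."""
    return np.fromfile(bin_path, dtype=np.float32).reshape(-1, 4)


def load_labels(label_path):
    """Split packed uint32 labels into semantic (low 16 bits) and instance (high 16 bits) ids."""
    raw = np.fromfile(label_path, dtype=np.uint32)
    return raw & 0xFFFF, raw >> 16
```

Points and labels line up by index, so `load_scan(bin_path)[semantic == c]` selects the points whose semantic id is `c`.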

For nuScenes, the data should be organized as follows:

    ./
    ├──
    ├── ...
    └── path_to_data_shown_in_config/
        ├── v1.0-trainval
        ├── v1.0-test
        ├── samples
        ├── sweeps
        └── maps
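Before training, a hypothetical stdlib check like the one below can confirm that the nuScenes directories listed above are in place. The helper name is illustrative, and it only tests that each entry exists as a directory, not what it contains.

```python
from pathlib import Path

# Entries expected under path_to_data_shown_in_config, per the layout above.
NUSCENES_ENTRIES = ("v1.0-trainval", "v1.0-test", "samples", "sweeps", "maps")


def missing_nuscenes_entries(data_root):
    """Return the expected sub-directories that are absent from data_root."""
    root = Path(data_root)
    return [name for name in NUSCENES_ENTRIES if not (root / name).is_dir()]


if __name__ == "__main__":
    import sys

    missing = missing_nuscenes_entries(sys.argv[1] if len(sys.argv) > 1 else ".")
    print("layout OK" if not missing else f"missing: {', '.join(missing)}")
```

For reading the metadata itself, the nuScenes-devkit provides `nuscenes.nuscenes.NuScenes(version="v1.0-trainval", dataroot=..., verbose=True)`, whose `dataroot` is this same directory.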

To train on SemanticKITTI:

- Modify `config/semantickitti.yaml` with your custom settings. We provide a sample YAML file for SemanticKITTI.

- Train the network by running `sh train.sh`.
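For scripted experiments, the YAML settings can also be changed programmatically instead of by hand. The sketch below uses PyYAML (`yaml.safe_load` / `yaml.safe_dump`); the helper names are hypothetical, and it rejects keys that are not already present so that a typo fails loudly instead of silently adding a new setting. PyYAML does not preserve comments, so the written file loses any comments in the sample config.

```python
def set_by_path(config, dotted_key, value):
    """Set config['a']['b'] for dotted_key 'a.b', refusing keys that do not already exist."""
    *parents, leaf = dotted_key.split(".")
    node = config
    for key in parents:
        node = node[key]  # KeyError on a misspelled section name
    if leaf not in node:
        raise KeyError(f"unknown config key: {dotted_key}")
    node[leaf] = value


def write_custom_config(src, dst, overrides):
    """Load a YAML config, apply {dotted_key: value} overrides and save it to dst."""
    import yaml  # PyYAML, imported lazily so set_by_path has no dependency on it

    with open(src) as f:
        config = yaml.safe_load(f)
    for dotted_key, value in overrides.items():
        set_by_path(config, dotted_key, value)
    with open(dst, "w") as f:
        yaml.safe_dump(config, f, sort_keys=False)
    return config
```

For example, `write_custom_config("config/semantickitti.yaml", "config/semantickitti.yaml", {"train_data_loader.data_path": "/path/to/sequences/"})` overwrites the sample file in place (keep a backup if you want its comments). The dotted key is a placeholder; check the actual key names in the sample YAML first.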

For training on nuScenes, please refer to NUSCENES-GUIDE.

Pretrained models:

- We provide a pretrained model for SemanticKITTI: LINK1 or LINK2 (access code: xqmi).

- For the nuScenes dataset, please refer to NUSCENES-GUIDE.

To run semantic segmentation on a folder of LiDAR scans:

    python demo_folder.py --demo-folder YOUR_FOLDER --save-folder YOUR_SAVE_FOLDER

The `--demo-label-folder` argument is optional. If you want to validate on your own dataset, you need to provide labels through it:

    python demo_folder.py --demo-folder YOUR_FOLDER --save-folder YOUR_SAVE_FOLDER --demo-label-folder YOUR_LABEL_FOLDER
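When labels are provided, predictions can be scored per class with the intersection over union, IoU_c = TP_c / (TP_c + FP_c + FN_c). The sketch below is a generic NumPy implementation of the mIoU and fwIoU metrics quoted in the news above, computed from a confusion matrix; it is not necessarily how `demo_folder.py` evaluates, and the function names and the `ignore_index` convention (for example, for an unlabeled class) are assumptions. mIoU averages IoU over classes, while fwIoU weights each class by its share of ground-truth points.

```python
import numpy as np


def confusion_matrix(pred, label, num_classes, ignore_index=None):
    """Count (label, prediction) pairs; rows are labels, columns are predictions.

    Assumes predictions are integers in [0, num_classes).
    """
    pred = np.asarray(pred, dtype=np.int64).ravel()
    label = np.asarray(label, dtype=np.int64).ravel()
    mask = (label >= 0) & (label < num_classes)
    if ignore_index is not None:
        mask &= label != ignore_index  # drop points whose label is ignored
    flat = num_classes * label[mask] + pred[mask]
    counts = np.bincount(flat, minlength=num_classes ** 2)
    return counts.reshape(num_classes, num_classes)


def iou_scores(conf, ignore_index=None):
    """Return per-class IoU, mIoU and frequency-weighted IoU from a confusion matrix."""
    tp = np.diag(conf).astype(np.float64)
    fp = conf.sum(axis=0) - tp  # predicted as c but labelled otherwise
    fn = conf.sum(axis=1) - tp  # labelled c but predicted otherwise
    denom = tp + fp + fn
    # Classes absent from both labels and predictions get NaN and are skipped.
    iou = np.divide(tp, denom, out=np.full_like(tp, np.nan), where=denom > 0)
    if ignore_index is not None:
        iou[ignore_index] = np.nan
    miou = float(np.nanmean(iou))
    freq = conf.sum(axis=1) / conf.sum()  # ground-truth share of each class
    fwiou = float(np.nansum(freq * iou))
    return iou, miou, fwiou
```

For a whole dataset, sum the confusion matrices of all scans before calling `iou_scores`, rather than averaging per-scan mIoU values, so that large and small scans are weighted by their point counts.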

TODO:

- Release pretrained model for nuScenes.

- Support multi-scan semantic segmentation.

- Support more models, including PolarNet, RandLA, SqueezeSegV3, etc.

- Integrate LiDAR panoptic segmentation into the codebase.

If you find our work useful in your research, please consider citing our paper:

    @article{zhu2020cylindrical,
      title={Cylindrical and Asymmetrical 3D Convolution Networks for LiDAR Segmentation},
      author={Zhu, Xinge and Zhou, Hui and Wang, Tai and Hong, Fangzhou and Ma, Yuexin and Li, Wei and Li, Hongsheng and Lin, Dahua},
      journal={arXiv preprint arXiv:2011.10033},
      year={2020}
    }

    # for LiDAR panoptic segmentation
    @article{hong2020lidar,
      title={LiDAR-based Panoptic Segmentation via Dynamic Shifting Network},
      author={Hong, Fangzhou and Zhou, Hui and Zhu, Xinge and Li, Hongsheng and Liu, Ziwei},
      journal={arXiv preprint arXiv:2011.11964},
      year={2020}
    }

We thank the authors of the open-source codebases PolarSeg and spconv.

The SenseTime LiDAR Segmentation team is now hiring. If you are interested in internship, researcher, or software engineer positions related to LiDAR segmentation or deep learning, feel free to send an email to zhouhui@sensetime.com.