Our paper is now available online: A Survey and an Empirical Evaluation of Multi-view Clustering Approaches.

This repository is a collection of state-of-the-art (SOTA) and novel incomplete and complete multi-view clustering resources (papers, code, and datasets). If you run into any problems, please contact lhzhou@ynu.edu.cn, dugking@mail.ynu.edu.cn, or lu983760699@gmail.com. If you find this repository useful for your research or work, a star would be much appreciated. ❤️

- PRELIMINARIES

- Survey papers

- Papers

- The information fusion strategy

- The clustering routine

- The weighting strategy

- COMPLETE MULTI-VIEW CLUSTERING

- Spectral clustering-based approaches

- Co-regularization and co-training spectral clustering

- Constrained spectral clustering

- Fast spectral clustering

- NMF-based approaches

- Fast NMF

- Deep NMF

- Multiple kernel learning

- Graph learning

- Embedding learning

- Alignment learning

- Subspace learning

- Self-paced learning

- Co-Clustering-based approaches

- Multi-task-based approaches

- Incomplete Multi-view clustering

- Code

- Benchmark Datasets

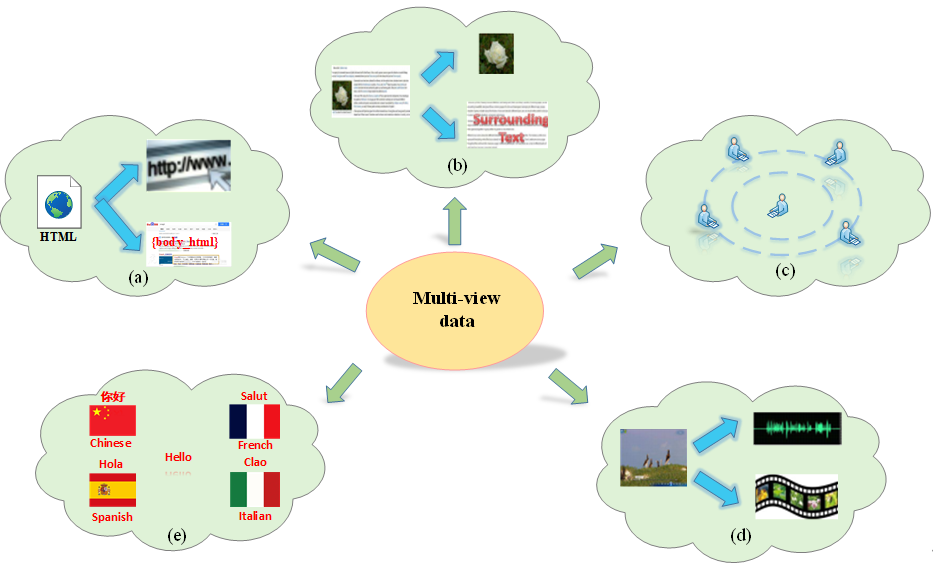

Multi-view data describes the same sample from different perspectives; each perspective captures one class of features of the sample and is called a view. In other words, the same sample can be represented by multiple heterogeneous features, and each feature representation corresponds to a view. Xu, Tao et al. 2013 provided intuitive examples: a) a web document is represented by its URL and the words on the page; b) a web image is depicted by its surrounding text, separate from the visual information; c) images of a 3D sample are taken from different viewpoints; d) video clips are combinations of audio signals and visual frames; e) multilingual documents have one view in each language.

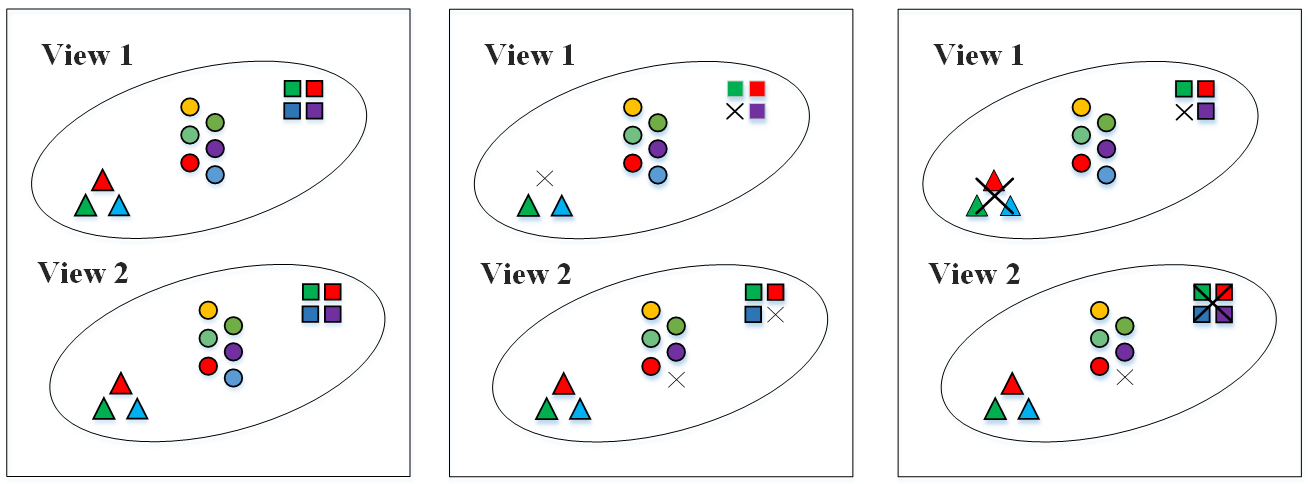

Multi-view data may be complete or incomplete. Complete multi-view data means that every feature has been collected and every sample appears in every view, while incomplete multi-view data means that some samples lack observations in some views (i.e., missing samples) or are available only with part of their features (i.e., missing features). (Zhao, Lyu et al. 2022) gave several specific examples: in multilingual document clustering, documents are translated into different languages to form different views, but many documents have only one or two language versions because it is difficult to obtain every document in every language; in social multimedia, some samples may lack visual or audio information due to sensor failure; in health informatics, some patients may not take certain lab tests, which causes missing views or missing values; in video surveillance, some views are missing because the corresponding cameras are out of action or suffer from occlusions. (Zong, Miao et al. 2021) also considered the case of missing clusters, i.e., some clusters may be missing in some views. Figure 2 illustrates the cases of missing samples and missing clusters: samples in the same cluster share the same shape but are distinguished by color, and the marker “×” denotes missing samples and missing clusters. In Figure 2(a), clusters and instances are complete; in Figure 2(b), clusters are complete but four samples are missing; in Figure 2(c), two clusters and two samples are missing.
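As a minimal sketch of the distinction between missing samples and missing features, incomplete multi-view data can be held as one feature matrix per view plus a boolean indicator of which sample was observed in which view. The toy sizes, the name `observed`, and the use of NaN as a missing-value marker are illustrative assumptions, not a storage format prescribed by any method listed here.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical toy data: 6 samples described by two views (4 and 3 features).
n_samples = 6
views = [rng.normal(size=(n_samples, 4)), rng.normal(size=(n_samples, 3))]

# observed[i, v] is True when sample i was collected in view v.
observed = np.ones((n_samples, len(views)), dtype=bool)
observed[[1, 4], 0] = False   # samples 1 and 4 are missing in view 0
observed[2, 1] = False        # sample 2 is missing in view 1

# Missing samples: the whole row of the affected view is unavailable.
for v, X in enumerate(views):
    X[~observed[:, v]] = np.nan

# Missing features: a single entry of an otherwise observed row is unavailable.
views[1][0, 2] = np.nan

is_complete = bool(observed.all())
fully_observed = np.flatnonzero(observed.all(axis=1))
print(is_complete)       # False
print(fully_observed)    # [0 3 5]
```

Keeping the indicator separate from the feature matrices lets a method restrict itself to the observed instances of each view, which is the setting the incomplete multi-view clustering papers below start from.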

Multi-view clustering (MVC) aims to group samples (objects/instances/points) with similar structures or patterns into the same group (cluster) and samples with dissimilar ones into different groups by combining the available feature information of different views and searching for consistent clusters across different views.

These multi-view clustering methods commonly require that all views of the data are complete. However, this requirement often cannot be satisfied because some views of samples are frequently missing in real-world applications, especially in disease diagnosis and webpage clustering. This view incompleteness causes conventional multi-view methods to fail. The problem of clustering incomplete multi-view data is known as incomplete multi-view clustering (IMVC) (or partial multi-view clustering) (Hu and Chen 2019). The purpose of IMVC is to group multi-view data points with incomplete feature views into different clusters by using the observed data instances in different views. IMVC consists of missing multi-view clustering, uncertain multi-view clustering, and incremental multi-view clustering.

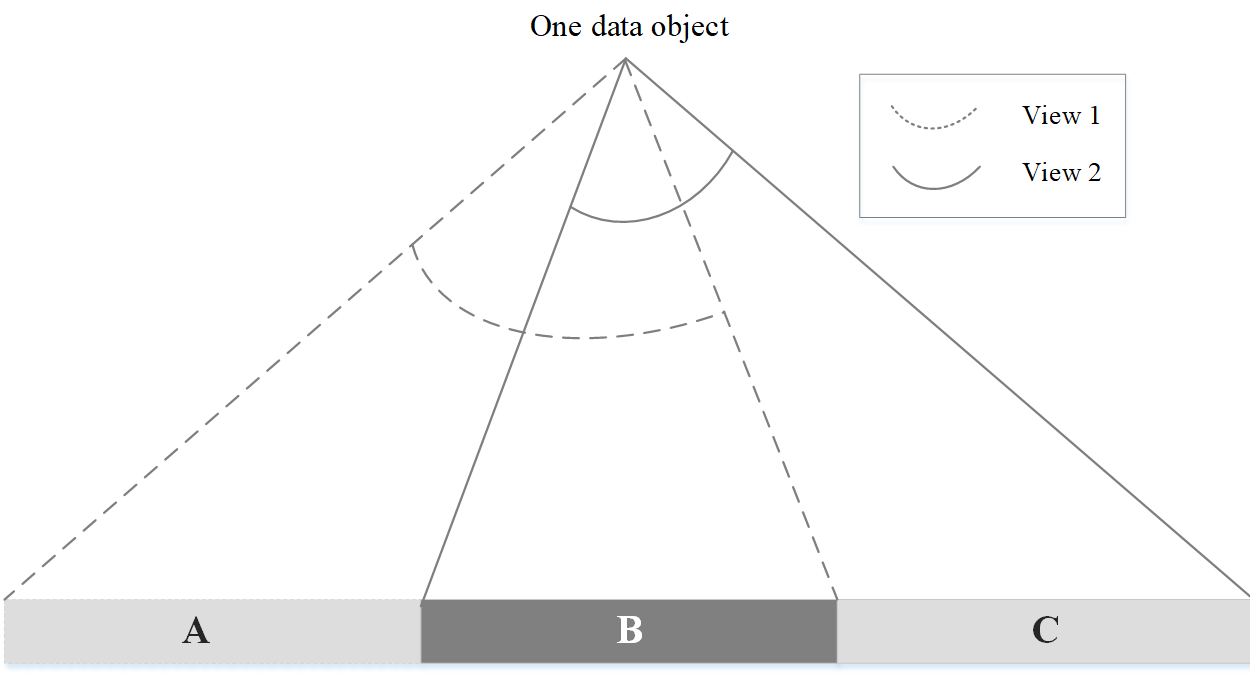

Two significant principles ensure the effectiveness of MVC: the consensus and complementary principles (Xu, Tao et al. 2013). The consistency of multi-view data means that there is some common knowledge across different views (e.g., two pictures of dogs both have contour and facial features), while the complementarity of multi-view data refers to unique knowledge contained in one view that is not available in other views (e.g., one view shows the side of a dog and the other shows its front, so together the two views give a more complete depiction of the dog). Therefore, the consensus principle aims to maximize the agreement across multiple distinct views to improve the understanding of what the observed samples have in common, while the complementary principle states that, in a multi-view context, each view of the data may contain particular knowledge that other views do not have, and that this knowledge can complement the other views. (Yang and Wang 2018) illustrated the complementary and consensus principles intuitively by mapping a data sample with two views into a latent data space, where part A and part C exist in view 1 and view 2 respectively, indicating the complementarity of the two views, while part B is shared by both views, showing the consensus between them.

- A survey on multi-view clustering [paper]

- A survey of multi-view representation learning [paper]

- A survey of multi-view machine learning [paper]

- A Survey on Multi-view Learning [paper]

- Multi-view clustering: A survey [paper]

- Representation Learning in Multi-view Clustering: A Literature Review [paper]

- Survey on deep multi-modal data analytics: Collaboration, rivalry, and fusion [paper]

- A Comprehensive Survey on Multi-view Clustering [paper]

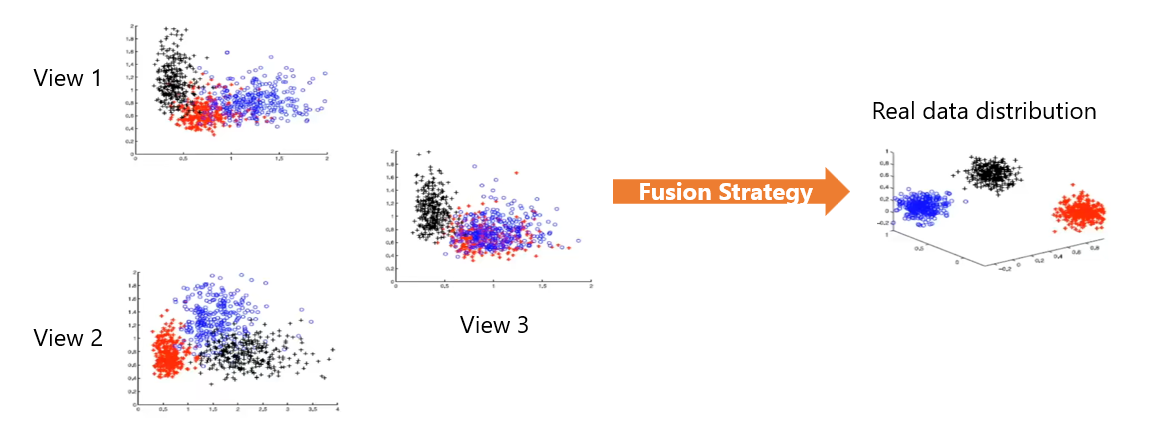

The strategies for fusing information from multiple views can be divided into three categories: direct-fusion, early-fusion, and late-fusion according to the fusion stage. They are also called data level, feature level, and decision level fusion respectively, i.e. fusion in the data, fusion in the projected features, and fusion in the results. The direct-fusion approaches directly incorporate multi-view data into the clustering process through optimizing some particular loss functions.

Early fusion fuses multiple features or graph structure representations of multi-view data into a single representation or a consensus affinity graph across multiple views; any known single-view clustering algorithm (such as k-means) can then be applied to partition the data samples.
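The following is a minimal sketch of early fusion under simple assumptions: the views are fused by standardizing each one and concatenating the features, and a plain k-means is run once on the fused matrix. The toy data, the helper names `kmeans` and `zscore`, and the choice of concatenation as the fusion operator are all illustrative; the surveyed methods typically learn the fused representation or consensus graph instead.

```python
import numpy as np


def kmeans(X, k, n_iter=50):
    """Plain Lloyd's k-means with a deterministic farthest-first start."""
    # First center: the point farthest from the data mean; each further
    # center: the point farthest from all centers chosen so far.
    centers = [X[((X - X.mean(axis=0)) ** 2).sum(axis=1).argmax()]]
    while len(centers) < k:
        d = np.min([((X - c) ** 2).sum(axis=1) for c in centers], axis=0)
        centers.append(X[d.argmax()])
    centers = np.array(centers, dtype=float)
    for _ in range(n_iter):
        dist = ((X[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        labels = dist.argmin(axis=1)
        for j in range(k):
            if np.any(labels == j):  # an empty cluster keeps its old center
                centers[j] = X[labels == j].mean(axis=0)
    return labels


def zscore(X):
    """Standardize each feature so that no view dominates by scale alone."""
    return (X - X.mean(axis=0)) / (X.std(axis=0) + 1e-12)


rng = np.random.default_rng(0)
truth = np.repeat([0, 1], 20)                      # toy ground truth, 2 clusters
view1 = rng.normal(size=(40, 3)) + 5.0 * truth[:, None]
view2 = rng.normal(size=(40, 2)) - 5.0 * truth[:, None]

# Early fusion: build ONE representation from all views, then cluster once.
fused = np.hstack([zscore(view1), zscore(view2)])  # shape (40, 5)
labels = kmeans(fused, k=2)

# Cluster ids are arbitrary, so compare up to a swap of the two labels.
agreement = max(np.mean(labels == truth), np.mean(labels != truth))
print(fused.shape, agreement)
```

Standardizing before concatenation is a design choice made here so that a view with many or large-valued features does not swamp the others; weighting the views is the more refined alternative covered by the weighting-strategy papers.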

Late-fusion approaches first cluster the data in each view and then fuse the results of all views to obtain the final clustering result according to consensus. Late fusion can be further divided into integrated learning and collaborative training. The input to the integrated clustering algorithm is the set of clustering results corresponding to the multiple views.
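A minimal sketch of late fusion, assuming one specific and deliberately simple fusion rule: each view is clustered on its own, the resulting partitions are summarized in a co-association matrix (the fraction of views that put two samples together), and samples are grouped when they co-occur in more than half of the views. The co-association rule, the greedy grouping, the toy data, and all helper names are assumptions for illustration and are not taken from a particular paper in this list.

```python
import numpy as np


def kmeans(X, k, n_iter=50):
    """Plain Lloyd's k-means with a deterministic farthest-first start."""
    centers = [X[((X - X.mean(axis=0)) ** 2).sum(axis=1).argmax()]]
    while len(centers) < k:
        d = np.min([((X - c) ** 2).sum(axis=1) for c in centers], axis=0)
        centers.append(X[d.argmax()])
    centers = np.array(centers, dtype=float)
    for _ in range(n_iter):
        dist = ((X[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        labels = dist.argmin(axis=1)
        for j in range(k):
            if np.any(labels == j):  # an empty cluster keeps its old center
                centers[j] = X[labels == j].mean(axis=0)
    return labels


def coassociation(partitions):
    """Fraction of base partitions that put samples i and j together."""
    P = np.asarray(partitions)                       # (n_views, n_samples)
    return (P[:, :, None] == P[:, None, :]).mean(axis=0)


def greedy_consensus(C, threshold=0.5):
    """Group each unlabeled sample with the unlabeled samples it co-occurs
    with in more than `threshold` of the base partitions."""
    labels = np.full(len(C), -1)
    next_label = 0
    for i in range(len(C)):
        if labels[i] == -1:
            labels[(C[i] > threshold) & (labels == -1)] = next_label
            next_label += 1
    return labels


rng = np.random.default_rng(0)
truth = np.repeat([0, 1], 20)
views = [
    rng.normal(size=(40, 2)) + 8.0 * truth[:, None],   # informative view
    rng.normal(size=(40, 3)) - 8.0 * truth[:, None],   # informative view
    rng.normal(size=(40, 2)),                          # pure-noise view
]

# Late fusion: cluster every view on its own, then fuse the partitions.
partitions = [kmeans(X, k=2) for X in views]
C = coassociation(partitions)
consensus = greedy_consensus(C)

agreement = max(np.mean(consensus == truth), np.mean(consensus != truth))
print(agreement)
```

Because the co-association matrix only asks whether two samples share a cluster, it is unaffected by the arbitrary numbering of clusters in each view, and in this toy setup the two informative views outvote the noise view.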

The one-step routine integrates representation learning and the clustering task into a unified framework, which simultaneously learns a graph for each view, a partition for each view, and a consensus partition. Based on an iterative optimization strategy, high-quality consensus clustering results can be obtained directly and are used to guide the graph construction and the updating of the basic partitions, which in turn contribute to a new consensus partition. The joint optimization co-trains clustering together with representation learning, leveraging the inherent interactions between the two tasks so that they benefit each other. In the one-step routine, the cluster label of each data point is assigned directly without any post-processing, which reduces the instability in clustering performance induced by the uncertainty of post-processing operations.

The two-step routine first extracts a low-dimensional representation of the multi-view data and then uses traditional clustering approaches (such as k-means) to process the obtained representation. That is to say, the two-step routine usually needs a post-processing step, i.e., applying a simple clustering method to the learned representation or carrying out a fusion operation on the clustering results of individual views, to produce the final clustering results.
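The two-step routine can be sketched as follows, assuming a spectral-style pipeline: step 1 averages one Gaussian affinity graph per view and takes the leading eigenvectors of the normalized graph as the low-dimensional representation, and step 2 post-processes that representation with k-means. Averaging the graphs with equal weights, the median bandwidth heuristic, the toy data, and the helper names are assumptions made for illustration only.

```python
import numpy as np


def kmeans(X, k, n_iter=50):
    """Plain Lloyd's k-means with a deterministic farthest-first start."""
    centers = [X[((X - X.mean(axis=0)) ** 2).sum(axis=1).argmax()]]
    while len(centers) < k:
        d = np.min([((X - c) ** 2).sum(axis=1) for c in centers], axis=0)
        centers.append(X[d.argmax()])
    centers = np.array(centers, dtype=float)
    for _ in range(n_iter):
        dist = ((X[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        labels = dist.argmin(axis=1)
        for j in range(k):
            if np.any(labels == j):  # an empty cluster keeps its old center
                centers[j] = X[labels == j].mean(axis=0)
    return labels


def gaussian_affinity(X):
    """Dense Gaussian-kernel graph; bandwidth from the median heuristic."""
    sq = ((X[:, None, :] - X[None, :, :]) ** 2).sum(axis=2)
    sigma2 = np.median(sq[sq > 0])
    W = np.exp(-sq / (2.0 * sigma2))
    np.fill_diagonal(W, 0.0)             # no self-loops
    return W


def spectral_embedding(W, dim):
    """Unit-length rows of the top-`dim` eigenvectors of D^{-1/2} W D^{-1/2}."""
    d = W.sum(axis=1)
    S = W / np.sqrt(np.outer(d, d))
    _, vecs = np.linalg.eigh(S)          # eigenvalues in ascending order
    U = vecs[:, -dim:]
    return U / np.linalg.norm(U, axis=1, keepdims=True)


rng = np.random.default_rng(0)
truth = np.repeat([0, 1], 20)
views = [
    rng.normal(size=(40, 2)) + 8.0 * truth[:, None],
    rng.normal(size=(40, 3)) - 8.0 * truth[:, None],
]

# Step 1: learn a low-dimensional representation shared by all views.
W = np.mean([gaussian_affinity(X) for X in views], axis=0)
Z = spectral_embedding(W, dim=2)

# Step 2: post-process the representation with a traditional clusterer.
labels = kmeans(Z, k=2)

agreement = max(np.mean(labels == truth), np.mean(labels != truth))
print(Z.shape, agreement)
```

The final partition depends on the post-processing clusterer as well as on the learned representation, which is the source of instability that the one-step routine is designed to avoid.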

- Self-weighted Multiview Clustering with Multiple Graphs [paper|code]

- Multi-view content-context information bottleneck for image clustering [paper|[code]]

- Parameter-free auto-weighted multiple graph learning: a framework for multiview clustering and semi-supervised classification [paper|code]

- Multi-view clustering based on two-level weights (基于两级权重的多视角聚类) [paper|[code]]

- Confidence level auto-weighting robust multi-view subspace clustering [paper|[code]]

- Weighted multi-view clustering with feature selection [paper|[code]]

- Two-level weighted collaborative k-means for multi-view clustering [paper|code]

- Weighted multi-view co-clustering (WMVCC) for sparse data [paper|[code]]

- A cluster-weighted kernel K-means method for multi-view clustering [paper|[code]]

- Multi-graph fusion for multi-view spectral clustering [paper|code]

- A doubly weighted multi-view clustering method (一种双重加权的多视角聚类方法) [paper|[code]]

- View-Wise Versus Cluster-Wise Weight: Which Is Better for Multi-View Clustering? [paper|[code]]

- Multi-view clustering via canonical correlation analysis [paper|[code]]

- Multi-view kernel spectral clustering [paper|[code]]

- Correlational spectral clustering [paper|[code]]

- (TKDE, 2020): Multi-view spectral clustering with high-order optimal neighborhood laplacian matrix. [paper|code]

- (KBS, 2020): Multi-view spectral clustering by simultaneous consensus graph learning and discretization. [paper|code]

- A co-training approach for multi-view spectral clustering [paper|[code]]

- Combining labeled and unlabeled data with co-training [paper|[code]]

- Heterogeneous image feature integration via multi-modal spectral clustering [paper|[code]]

- Robust multi-view spectral clustering via low-rank and sparse decomposition [paper|[code]]

- Multiview clustering via adaptively weighted procrustes [paper|[code]]

- One-step multi-view spectral clustering [paper|[code]]

- Multi-graph fusion for multi-view spectral clustering [paper|code]

- Multi-view spectral clustering with adaptive graph learning and tensor schatten p-norm [paper|[code]]

- Multi-view spectral clustering via integrating nonnegative embedding and spectral embedding [paper|code]

- Multi-view spectral clustering via constrained nonnegative embedding [paper|[code]]

- Low-rank tensor constrained co-regularized multi-view spectral clustering [paper|[code]]

- Large-scale multi-view spectral clustering via bipartite graph [paper|code]

- Refining a k-nearest neighbor graph for a computationally efficient spectral clustering [paper|[code]]

- Multi-view clustering based on generalized low rank approximation [paper|[code]]

- Multi-view spectral clustering by simultaneous consensus graph learning and discretization [paper|code]

- Multi-view clustering via joint nonnegative matrix factorization [paper|code]

- Multi-view clustering via concept factorization with local manifold regularization [paper|code]

- Multi-view clustering via multi-manifold regularized non-negative matrix factorization [paper|[code]]

- Semi-supervised multi-view clustering with graph-regularized partially shared non-negative matrix factorization [paper|code]

- Semi-supervised multi-view clustering based on constrained nonnegative matrix factorization [paper|[code]]

- Semi-supervised multi-view clustering based on orthonormality-constrained nonnegative matrix factorization [paper|[code]]

- Multi-view clustering by non-negative matrix factorization with co-orthogonal constraints [paper|code]

- Dual regularized multi-view non-negative matrix factorization for clustering [paper|[code]]

- A network-based sparse and multi-manifold regularized multiple non-negative matrix factorization for multi-view clustering [paper|[code]]

- Multi-view clustering with the cooperation of visible and hidden views [paper|[code]]

- Fast Multi-View Clustering via Nonnegative and Orthogonal Factorization [paper|[code]]

- Multi-View Clustering via Deep Matrix Factorization [paper|code]

- Multi-view clustering via deep concept factorization [paper|code]

- Deep Multi-View Concept Learning [paper|[code]]

- Deep graph regularized non-negative matrix factorization for multi-view clustering [paper|code]

- Multi-view clustering via deep matrix factorization and partition alignment [paper|[code]]

- Deep multiple non-negative matrix factorization for multi-view clustering [paper|[code]]

- Auto-weighted multi-view clustering via kernelized graph learning [paper|code]

- Multiple kernel subspace clustering with local structural graph and low-rank consensus kernel learning [paper|[code]]

- Jointly Learning Kernel Representation Tensor and Affinity Matrix for Multi-View Clustering [paper|[code]]

- Kernelized Multi-view Subspace Clustering via Auto-weighted Graph Learning [paper|[code]]

- Refining a k-nearest neighbor graph for a computationally efficient spectral clustering [paper|[code]]

- Robust unsupervised feature selection via dual self representation and manifold regularization [paper|[code]]

- Robust graph learning from noisy data [paper|[code]]

- Multi-view projected clustering with graph learning [paper|code]

- Parameter-Free Weighted Multi-View Projected Clustering with Structured Graph Learning [paper|[code]]

- Learning robust affinity graph representation for multi-view clustering [paper|[code]]

- A study of graph-based system for multi-view clustering [paper|code]

- Multi-view Clustering with Latent Low-rank Proxy Graph Learning [paper|[code]]

- Learning latent low-rank and sparse embedding for robust image feature Extraction [paper|[code]]

- Robust multi-view graph clustering in latent energy-preserving embedding space [paper|[code]]

- Robust multi-view data clustering with multi-view capped-norm k-means [paper|[code]]

- COMIC: Multi-view clustering without parameter selection [paper|code]

- Relaxed multi-view clustering in latent embedding space [paper|code]

- Auto-weighted multi-view clustering via spectral embedding [paper|[code]]

- Robust graph-based multi-view clustering in latent embedding space [paper|[code]]

- Efficient correntropy-based multi-view clustering with anchor graph embedding [paper|[code]]

- Self-supervised discriminative feature learning for multi-view clustering [paper|code]

- Deep Multiple Auto-Encoder-Based Multi-view Clustering [paper|[code]]

- Joint deep multi-view learning for image clustering [paper|[code]]

- Deep embedded multi-view clustering with collaborative training [paper|[code]]

- Trio-based collaborative multi-view graph clustering with multiple constraints [paper|[code]]

- Multi-view graph embedding clustering network: Joint self-supervision and block diagonal representation [paper|[code]]

- Multi-view fuzzy clustering of deep random walk and sparse low-rank embedding [paper|[code]]

- Differentiable Bi-Sparse Multi-View Co-Clustering [paper|[code]]

- Multi-view Clustering via Late Fusion Alignment Maximization [paper|code]

- End-to-end adversarial-attention network for multi-modal clustering [paper|code]

- Reconsidering representation alignment for multi-view clustering [paper|code]

- Multiview Subspace Clustering With Multilevel Representations and Adversarial Regularization [[paper]|code]

- Consistent and diverse multi-View subspace clustering with structure constraint [paper|[code]]

- Consistent and specific multi-view subspace clustering [paper|code]

- Flexible Multi-View Representation Learning for Subspace Clustering [paper|code]

- Learning a joint affinity graph for multiview subspace clustering [paper|[code]]

- Exclusivity-consistency regularized multi-view subspace clustering [paper|code]

- Multi-view subspace clustering with intactness-aware similarity [paper|code]

- Diversity-induced multi-view subspace clustering [paper|code]

- Split multiplicative multi-view subspace clustering [paper|code]

- Learning a consensus affinity matrix for multi-view clustering via subspaces merging on Grassmann manifold [paper|[code]]

- Clustering on multi-layer graphs via subspace analysis on Grassmann manifolds [paper|[code]]

- Deep multi-view subspace clustering with unified and discriminative learning [paper|[code]]

- Attentive multi-view deep subspace clustering net [paper|[code]]

- Dual shared-specific multiview subspace clustering [paper|code]

- Multi-view subspace clustering with consistent and view-specific latent factors and coefficient matrices [paper|[code]]

- Robust low-rank kernel multi-view subspace clustering based on the schatten p-norm and correntropy [paper|[code]]

- Multiple kernel low-rank representation-based robust multi-view subspace clustering [paper|[code]]

- One-step kernel multi-view subspace clustering [paper|[code]]

- Deep low-rank subspace ensemble for multi-view clustering [paper|[code]]

- Multi-view subspace clustering with adaptive locally consistent graph regularization [paper|[code]]

- Multi-view subspace clustering networks with local and global graph information [paper|[code]]

- Multi-view Deep Subspace Clustering Networks [paper|code|pytorch]

- Multiview subspace clustering via tensorial t-product representation [paper|[code]]

- Latent complete row space recovery for multi-view subspace clustering [paper|[code]]

- Fast Parameter-Free Multi-View Subspace Clustering With Consensus Anchor Guidance [paper|[code]]

- Multi-view subspace clustering via partition fusion (Information Sciences) [[paper]|[code]]

- Semi-Supervised Structured Subspace Learning for Multi-View Clustering [paper|[code]]

- Doubly weighted multi-view subspace clustering algorithm (双加权多视角子空间聚类算法) [paper|[code]]

- Fast Self-guided Multi-view Subspace Clustering [paper|code]

- Self-paced learning for latent variable models [paper|[code]]

- Multi-view self-paced learning for clustering [paper|[code]]

- Self-paced and auto-weighted multi-view clustering [paper|[code]]

- Dual self-paced multi-view clustering [paper|[code]]

- A generalized maximum entropy approach to bregman co-clustering and matrix approximation [paper|[code]]

- Multi-view information-theoretic co-clustering for co-occurrence data [paper|[code]]

- Dynamic auto-weighted multi-view co-clustering [paper|[code]]

- Auto-weighted multi-view co-clustering with bipartite graphs [paper|[code]]

- Auto-weighted multi-view co-clustering via fast matrix factorization [paper|[code]]

- Differentiable Bi-Sparse Multi-View Co-Clustering [paper|[code]]

- Weighted multi-view co-clustering (WMVCC) for sparse data [paper|[code]]

- Multi-task multi-view clustering for non-negative data [paper|[code]]

- A Multi-task Multi-view based Multi-objective Clustering Algorithm [paper|[code]]

- Multi-task multi-view clustering [paper|[code]]

- Co-clustering documents and words using bipartite spectral graph partitioning [paper|[code]]

- Self-paced multi-task multi-view capped-norm clustering [paper|[code]]

- Learning task-driving affinity matrix for accurate multi-view clustering through tensor subspace learning [paper|[code]]

- Doubly aligned incomplete multi-view clustering [paper|[code]]

- Incomplete multiview spectral clustering with adaptive graph learning [paper|[code]]

- Late fusion incomplete multi-view clustering [paper|[code]]

- Consensus graph learning for incomplete multi-view clustering [paper|[code]]

- Multi-view kernel completion [paper|[code]]

- Unified embedding alignment with missing views inferring for incomplete multi-view clustering [paper|[code]]

- One-Stage Incomplete Multi-view Clustering via Late Fusion [paper|[code]]

- Spectral perturbation meets incomplete multi-view data [paper|[code]]

- Efficient and effective regularized incomplete multi-view clustering [paper|[code]]

- Adaptive partial graph learning and fusion for incomplete multi-view clustering [paper|[code]]

- Unified tensor framework for incomplete multi-view clustering and missing-view inferring [paper|[code]]

- Incomplete multi-view clustering with cosine similarity [paper|[code]]

- COMPLETER: Incomplete Multi-view Clustering via Contrastive Prediction [paper|code]

- Learning Disentangled View-Common and View-Peculiar Visual Representations for Multi-View Clustering [paper|code]

- Partial multi-view clustering via consistent GAN [paper|[code]]

- One-step multi-view subspace clustering with incomplete views [paper|[code]]

- Consensus guided incomplete multi-view spectral clustering [paper|[code]]

- Incomplete multi-view subspace clustering with adaptive instance-sample mapping and deep feature fusion [paper|[code]]

- Dual Alignment Self-Supervised Incomplete Multi-View Subspace Clustering Network [paper|[code]]

- Structural Deep Incomplete Multi-view Clustering Network [paper|[code]]

- One-Step Graph-Based Incomplete Multi-View Clustering [paper|code]

- Structured anchor-inferred graph learning for universal incomplete multi-view clustering [paper|code]

- Complete/incomplete multi-view subspace clustering via soft block-diagonal-induced regulariser [paper|[code]]

- A novel consensus learning approach to incomplete multi-view clustering [paper|[code]]

- Adaptive graph completion based incomplete multi-view clustering [paper|[code]]

- Incomplete multi-view clustering via contrastive prediction [paper|[code]]

- Outlier-robust multi-view clustering for uncertain data [paper|[code]]

- Multi-view spectral clustering for uncertain objects [paper|[code]]

- Incremental multi-view spectral clustering with sparse and connected graph learning [paper|[code]]

- Incremental multi-view spectral clustering [paper|[code]]

- Multi-graph fusion for multi-view spectral clustering [paper|[code]]

- Incremental learning through deep adaptation [paper|[code]]

- Clustering-Induced Adaptive Structure Enhancing Network for Incomplete Multi-View Data [paper|[code]]

- "Desc.md" records which papers use the current dataset

We have also collected some datasets and uploaded them to Baidu Yun: address (extraction code: f3n4). Further dataset links are available at Multi-view-Datasets.

The text datasets consist of news datasets (3Sources, BBC, BBCSport, Newsgroup), multilingual document datasets (Reuters, Reuters-21578), a citation dataset (Citeseer), the WebKB webpage datasets (Cornell, Texas, Washington, and Wisconsin), an article dataset (Wikipedia), and a disease dataset (Derm).

| Dataset | #views | #classes | #instances | F-Type(#View1) | F-Type(#View2) | F-Type(#View3) | F-Type(#View4) | F-Type(#View5) | F-Type(#View6) |
|---|---|---|---|---|---|---|---|---|---|
| 3Sources | 3 | 6 | 169 | BBC(3560) | Reuters(3631) | Guardian(3068) | | | |
| BBC | 4 | 5 | 685 | seg1(4659) | seg2(4633) | seg3(4665) | seg4(4684) | | |
| BBCSport | 3 | 5 | 544/282 | seg1(3183/2582) | seg2(3203/2544) | / seg3(2465) | | | |
| Newsgroup | 3 | 5 | 500 | (2000) | (2000) | (2000) | | | |
| Reuters | 5 | 6 | 600/1200 | English(9749/2000) | French(9109/2000) | German(7774/2000) | / Italian(2000) | / Spanish(2000) | |
| Reuters-21578 | 5 | 6 | 1500 | English(21531) | French(24892) | German(34251) | Italian(15506) | Spanish(11547) | |
| Citeseer | 2 | 6 | 3312 | citations(4732) | word vector(3703) | | | | |
| Cornell | 2 | 5 | 195 | Citation(195) | Content(1703) | | | | |
| Texas | 2 | 5 | 187 | Citation(187) | Content(1398) | | | | |
| Washington | 2 | 5 | 230 | Citation(230) | Content(2000) | | | | |
| Wisconsin | 2 | 5 | 265 | Citation(265) | Content(1703) | | | | |
| Wikipedia | 2 | 10 | 693 | | | | | | |
| Derm | 2 | 6 | 366 | Clinical(11) | Histopathological(22) | | | | |

The image datasets consist of facial image datasets (Yale, Yale-B, Extended-Yale, VIS/NIR, ORL, Notting-Hill, YouTube Faces), handwritten digit datasets (UCI, Digits, HW2sources, Handwritten, MNIST-USPS, MNIST-10000, Noisy MNIST-Rotated MNIST), object image datasets (NUS-WIDE, MSRC, MSRCv1, COIL-20, Caltech101), the Microsoft Research Asia Internet Multimedia Dataset 2.0 (MSRA-MM2.0), natural scene datasets (Scene, Scene-15, Out-Scene, Indoor), a plant species dataset (100leaves), Animals with Attributes (AWA), a multi-temporal remote sensing dataset (Forest), a fashion dataset covering items such as T-shirts, dresses, and coats (Fashion-10K), a sports event dataset (Event), and general image datasets (ALOI, ImageNet, Corel, Cifar-10, SUN1k, Sun397).

| Dataset | #views | #classes | #instances | F-Type(#View1) | F-Type(#View2) | F-Type(#View3) | F-Type(#View4) | F-Type(#View5) | F-Type(#View6) | url |
|---|---|---|---|---|---|---|---|---|---|---|
| Yale | 3 | 15 | 165 | Intensity(4096) | LBP(3304) | Gabor(6750) | | | | |
| Yale-B | 3 | 10 | 650 | Intensity(2500) | LBP(3304) | Gabor(6750) | | | | |
| Extended-Yale | 2 | 28 | 1774 | LBP(900) | COV(45) | | | | | |
| VIS/NIR | 2 | 22 | 1056 | VL(10000) | NIRI(10000) | | | | | |
| ORL | 3 | 40 | 400 | Intensity(4096) | LBP(3304) | Gabor(6750) | | | | |
| Notting-Hill | 3 | 5 | 550 | Intensity(2000) | LBP(3304) | Gabor(6750) | | | | |
| YouTube Faces | 3 | 66 | 152549 | CH(768) | GIST(1024) | HOG(1152) | | | | |
| Digits | 3 | 10 | 2000 | FAC(216) | FOU(76) | KAR(64) | | | | |
| HW2sources | 2 | 10 | 2000 | FOU(76) | PIX(240) | | | | | |
| Handwritten | 6 | 10 | 2000 | FOU(76) | FAC(216) | KAR(64) | PIX(240) | ZER(47) | MOR(6) | |
| MNIST-USPS | 2 | 10 | 5000 | MNIST(28×28) | USPS(16×16) | | | | | |
| MNIST-10000 | 2 | 10 | 10000 | VGG16 FC1(4096) | Resnet50(2048) | | | | | |
| MNIST-10000 | 3 | 10 | 10000 | ISO(30) | LDA(9) | NPE(30) | | | | |
| Noisy MNIST-Rotated MNIST | 2 | 10 | 70000 | Noisy MNIST(28×28) | Rotated | | | | | |
| NUS-WIDE-Obj | 5 | 31 | 30000 | CH(65) | CM(226) | CORR(145) | ED(74) | WT(129) | | |
| NUSWIDE | 6 | 12 | 2400 | CH(64) | CC(144) | EDH(73) | WAV(128) | BCM(255) | SIFT(500) | |
| MSRC | 5 | 7 | 210 | CM(48) | LBP(256) | HOG(100) | SIFT(200) | GIST(512) | | |
| MSRCv1 | 5 | 7 | 210 | CM(24) | HOG(576) | GIST(512) | LBP(256) | GENT(254) | | |
| COIL-20 | 3 | 20 | 1440 | Intensity(1024) | LBP(3304) | Gabor(6750) | | | | |
| Caltech101-7/20/102 | 6 | 7/20/102 | 1474/2386/9144 | Gabor(48) | WM(40) | Centrist(254) | HOG(1984) | GIST(512) | LBP(928) | |
| MSRA-MM2.0 | 4 | 25 | 5000 | HSV-CH(64) | CORRH(144) | EDH(75) | WT(128) | | | |
| Scene | 4 | 8 | 2688 | GIST(512) | CM(432) | HOG(256) | LBP(48) | | | |
| Scene-15 | 3 | 15 | 4485 | GIST(1800) | PHOG(1180) | LBP(1240) | | | | |
| Out-Scene | 4 | 8 | 2688 | GIST(512) | LBP(48) | HOG(256) | CM(432) | | | |
| Indoor | 6 | 5 | 621 | SURF(200) | SIFT(200) | GIST(512) | HOG(680) | WT(32) | | |
| 100leaves | 3 | 100 | 1600 | TH(64) | FSM(64) | SD(64) | | | | |
| Animals with Attributes | 6 | 50 | 4000/30475 | CH(2688) | LSS(2000) | PHOG(252) | SIFT(2000) | RGSIFT(2000) | (2000) | |
| Forest | 2 | 4 | 524 | RS(9) | GWSV(18) | | | | | |
| Fashion-10K | 2 | 10 | 70000 | Test set(28×28) | sampled set(28×28) | | | | | |
| Event | 6 | 8 | 1579 | SURF(500) | SIFT(500) | GIST(512) | HOG(680) | WT(32) | LBP(256) | |
| ALOI | 4 | 100 | 110250 | RGB-CH(77) | HSV-CH(13) | CS(64) | Haralick(64) | | | |
| ImageNet | 3 | 50 | 12000 | HSV-CH(64) | GIST(512) | SIFT(1000) | | | | |
| Corel | 3 | 50 | 5000 | CH(9) | EDH(18) | WT(9) | | | | |
| Cifar-10 | 3 | 10 | 60000 | CH(768) | GIST(1024) | HOG(1152) | | | | |
| SUN1k | 3 | 10 | 1000 | SIFT(6300) | HOG(6300) | TH(10752) | | | | |
| Sun397 | 3 | 397 | 108754 | CH(768) | GIST(1024) | HOG(1152) | | | | |

The prokaryotic species dataset (Prok) is a text-gene dataset consisting of 551 prokaryotic samples belonging to 4 classes. The species are represented by 1 textual view and 2 genomic views. The textual descriptions are summarized into a document-term matrix that records the TF-IDF re-weighted word frequencies. The genomic views are the proteome composition and the gene repertoire.

The image-text datasets consist of Wikipedia’s featured articles dataset (Wikipedia), a drosophila embryos dataset (BDGP), the NBA-NASCAR Sport dataset (NNSpt), an indoor scenes dataset (SentencesNYU v2 (RGB-D)), the Pascal dataset (VOC), an object dataset (NUS-WIDE-C5), and a photographic image dataset (MIR Flickr 1M).

The video datasets consist of a passenger action dataset (DTHC), a pedestrian video shot dataset (Lab), a body motion sequence dataset (CMU Mobo), face video sequence datasets (YouTubeFace_sel, Honda/UCSD), and the Columbia Consumer Video dataset (CCV).

| Dataset | #views | #classes | #instances | F-Type(#View1) | F-Type(#View2) | F-Type(#View3) | F-Type(#View4) | F-Type(#View5) |
|---|---|---|---|---|---|---|---|---|
| Prokaryotic | 3 | 4 | 551 | 438 | 3 | 393 | | |
| Wikipedia | 2 | 10 | 693/2866 | image | article | | | |
| BDGP | 2 | 5 | 2500 | Visual(1750) | Textual(79) | | | |
| NNSpt | 2 | 2 | 840 | image(1024) | TF-IDF(296) | | | |
| SentencesNYU v2 (RGB-D) | 2 | 13 | 1449 | image(2048) | text(300) | | | |
| VOC | 2 | 20 | 5649 | Image: Gist(512) | Text(399) | | | |
| NUS-WIDE-C5 | 2 | 5 | 4000 | visual codeword vector(500) | annotation vector(1000) | | | |
| MIR Flickr 1M | 4 | 10 | 2000 | HOG(300) | LBP(50) | HSV CORRH(114) | TF-IDF(60) | |
| DTHC | 3 cameras | Dispersing from the center quickly | 3 video sequences | 151 frames/video | resolution 135 × 240 | | | |
| Lab | 4 cameras | 4 people | 16 video sequences | 3915 frames/video | resolution 144 × 180 | | | |
| CMU Mobo(CAGL) | 4 videos | 24 objects | 96 video sequences | about 300 frames/video | resolution 40 × 40 | | | |
| Honda/UCSD(CAGL) | at least 2 videos/person | 20 objects | 59 video sequences | 12 to 645 frames/video | resolution 20 × 20 | | | |
| YouTubeFace_sel | 5 | 31 | 101499 | 64 | 512 | 64 | 647 | 838 |
| CCV | 3 | 20 | 6773 YouTube videos | SIFT(5000) | STIP(5000) | MFCC(4000) | | |