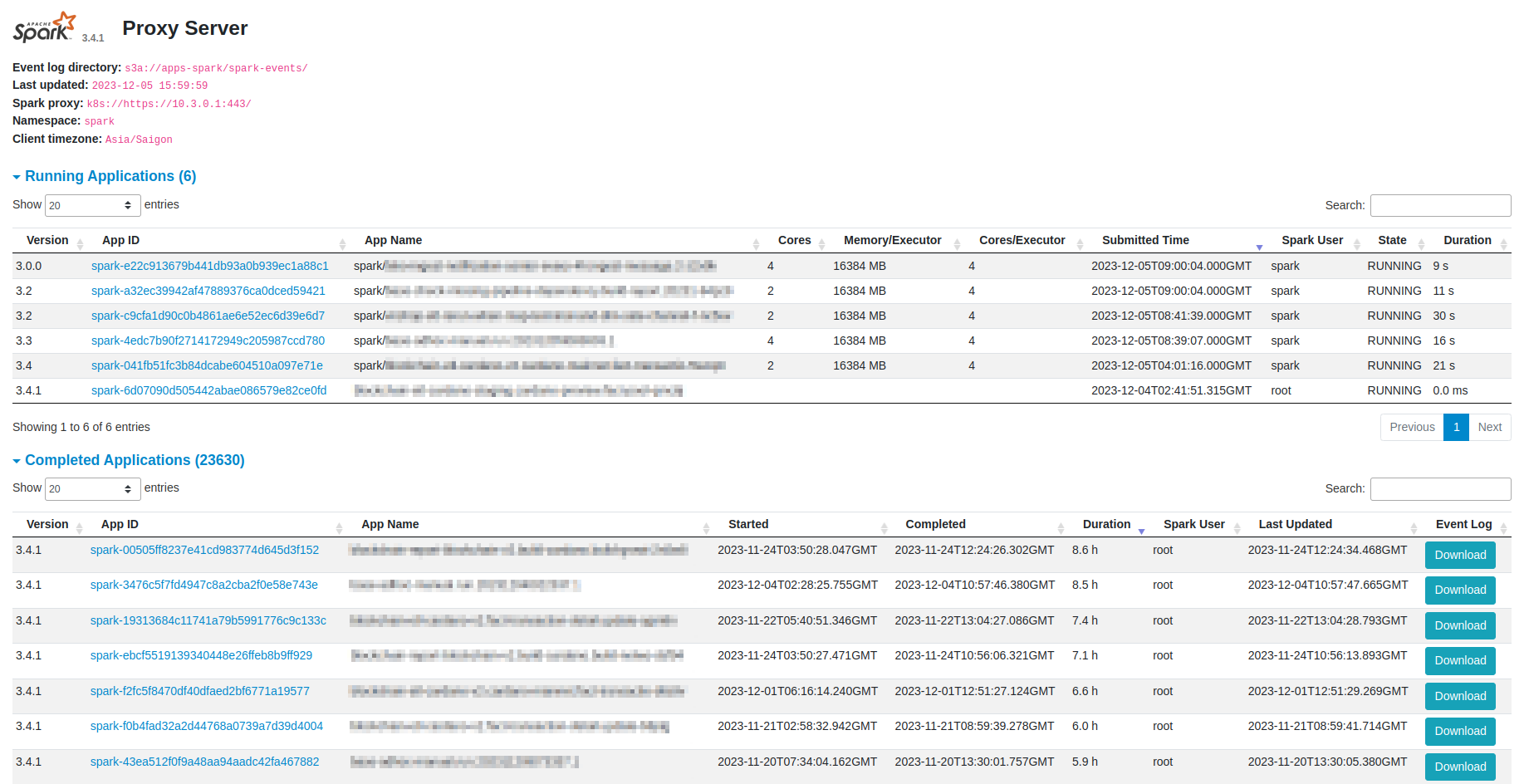

The Spark Proxy server extends the Spark History Server: in addition to serving application history from event logs stored in the file system, it proxies live, running SparkApps on Kubernetes.

```shell
helm install spark-proxy oci://ghcr.io/dungdm93/helm/spark-proxy:1.0.0 -f <values.yaml>
```

- `spark-proxy` accepts any Spark configuration in the `sparkConfig` field, e.g.:

  ```yaml
  sparkConfig:
    spark.history.ui.port: 18080
    spark.history.fs.logDirectory: hdfs://apps/spark/spark-events/
    spark.history.fs.cleaner.enabled: true
    spark.history.fs.cleaner.interval: 1d
    spark.history.fs.cleaner.maxAge: 15d
    spark.history.store.serializer: PROTOBUF
    spark.history.store.hybridStore.enabled: true
    spark.history.store.hybridStore.diskBackend: ROCKSDB
    spark.history.store.maxDiskUsage: 8g
  ```

- The configuration settings for `spark-proxy` are located within the `sparkProxyConfig` section. Currently, only the `kubernetes` provider is supported. By default, `spark-proxy` monitors SparkApps across all namespaces. To limit monitoring to SparkApps in a specific namespace, set the `sparkProxyConfig.kubernetes.namespace` property.
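As a rough illustration of the namespace restriction described above, a values file might contain a fragment like the one below. The nesting is inferred from the property path `sparkProxyConfig.kubernetes.namespace`, and `spark-jobs` is a hypothetical namespace name; check the chart's default values for the exact schema.

```yaml
# Sketch only: structure assumed from the property path
# sparkProxyConfig.kubernetes.namespace
sparkProxyConfig:
  kubernetes:
    # Hypothetical namespace; omit this key to monitor all namespaces
    namespace: spark-jobs
```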

The `spark-proxy` build produces only two JAR files, which you can add to your own custom Docker image.

For simplicity, just set the `BASE_IMAGE` build argument as below.

```shell
docker build -f deploy/Dockerfile -t <your-image> --build-arg BASE_IMAGE=<your-base-image> .
```