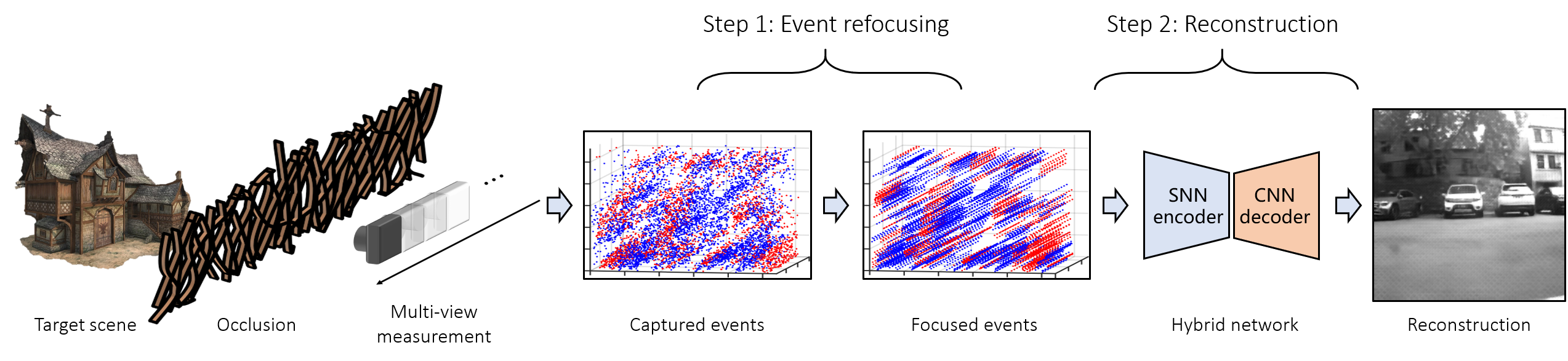

Although synthetic aperture imaging (SAI) can achieve a seeing-through effect by blurring out off-focus foreground occlusions while recovering in-focus occluded scenes from multi-view images, its performance often degrades under very dense occlusions and extreme lighting conditions. To address this problem, this paper presents an Event-based SAI (E-SAI) method that relies on the asynchronous events, with extremely low latency and high dynamic range, acquired by an event camera. Specifically, the collected events are first refocused by a Refocus-Net module, which aligns in-focus events while scattering out off-focus ones. A hybrid network composed of spiking neural networks (SNNs) and convolutional neural networks (CNNs) then encodes the spatio-temporal information of the refocused events and reconstructs a visual image of the occluded targets.
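The refocusing idea can be made concrete with its classical, hand-crafted form. Assume a pinhole camera translating horizontally at constant speed v. A scene point at depth d then drifts by f * v * (t - t_ref) / d pixels between times t_ref and t, where f is the focal length in pixels. Undoing this shift stacks events from the chosen focal plane onto the same pixels and spreads out events from other depths. The sketch below implements only that geometric warp. It is not the learned Refocus-Net, and the function names, the sign convention, and the synthetic example are illustrative assumptions.

```python
import numpy as np

def refocus_events(x, y, t, p, t_ref, f_px, v, depth, width):
    """Warp events to the viewpoint at time t_ref (geometric SAI refocusing).

    Assumes a pure horizontal camera translation at constant speed v (m/s),
    a pinhole camera with focal length f_px (pixels), and a fronto-parallel
    target plane at `depth` (m). The sign convention is an assumption.
    """
    # Image-plane displacement that a point at `depth` undergoes between t_ref and t.
    shift = f_px * v * (t - t_ref) / depth
    x_ref = np.round(x + shift).astype(np.int64)
    # Drop events that fall outside the sensor after warping.
    keep = (x_ref >= 0) & (x_ref < width)
    return x_ref[keep], y[keep], t[keep], p[keep]

def accumulate(x, y, width, height):
    """Count events per pixel to visualise how well they are aligned."""
    img = np.zeros((height, width), dtype=np.int64)
    np.add.at(img, (y, x), 1)
    return img

# Synthetic check: one scene point at depth 1 m, observed while the camera moves.
t = np.arange(11) * 0.01                      # timestamps in seconds
x = np.round(50 - 300 * (t - 0.05)).astype(np.int64)
y = np.full(11, 10)
p = np.ones(11, dtype=np.int8)

xr, yr, _, _ = refocus_events(x, y, t, p, t_ref=0.05, f_px=300.0, v=1.0, depth=1.0, width=100)
print(accumulate(xr, yr, 100, 20)[10, 50])    # prints 11: all events land on one pixel
```

Refocusing with a wrong depth (for example `depth=2.0`) leaves the same events spread over several columns. This spread is the scattering of off-focus content that SAI relies on.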

Extensive experiments demonstrate that the proposed E-SAI method performs remarkably well under very dense occlusions and extreme lighting conditions, and produces high-quality images from pure event data.

A previous version of this work, "Event-based Synthetic Aperture Imaging with a Hybrid Network", was published at CVPR 2021 and selected as one of the Best Paper Candidates.

- Python 3.6

- PyTorch 1.6.0

- torchvision 0.7.0

- opencv-python 4.4.0

- NVIDIA GPU + CUDA

- numpy, argparse, matplotlib

- sewar, lpips (for evaluation, optional)

You can create a new Anaconda environment with the above dependencies as follows.

Please make sure to adapt the CUDA toolkit version according to your setup when installing torch and torchvision.

```shell
conda create -n esai python=3.6
conda activate esai
pip install torch==1.6.0+cu101 torchvision==0.7.0+cu101 -f https://download.pytorch.org/whl/torch_stable.html
pip install -r requirements.txt
```
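To confirm that the new environment matches the versions listed above, a small check script can help. It is a sketch and not part of the repository. It only imports each module and reads its `__version__` attribute (OpenCV is imported as `cv2`), then asks PyTorch whether CUDA is usable. The names `EXPECTED`, `report_versions`, and `cuda_available` are hypothetical.

```python
import importlib

# Versions listed in this README; None means no specific pin.
EXPECTED = {"torch": "1.6.0", "torchvision": "0.7.0", "cv2": "4.4.0", "numpy": None}

def report_versions(modules):
    """Map each module name to its __version__, "unknown", or None if missing."""
    found = {}
    for name in modules:
        try:
            module = importlib.import_module(name)
        except ImportError:
            found[name] = None
        else:
            found[name] = getattr(module, "__version__", "unknown")
    return found

def cuda_available():
    """True/False from torch.cuda.is_available(), or None if torch is missing."""
    try:
        import torch
    except ImportError:
        return None
    return torch.cuda.is_available()

if __name__ == "__main__":
    for name, version in report_versions(EXPECTED).items():
        wanted = EXPECTED[name] or "any"
        print(f"{name}: {version or 'NOT INSTALLED'} (expected {wanted})")
    print("CUDA available:", cuda_available())
```

With the CUDA wheels above, PyTorch reports a version such as `1.6.0+cu101`. The local suffix names the CUDA build and is expected.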

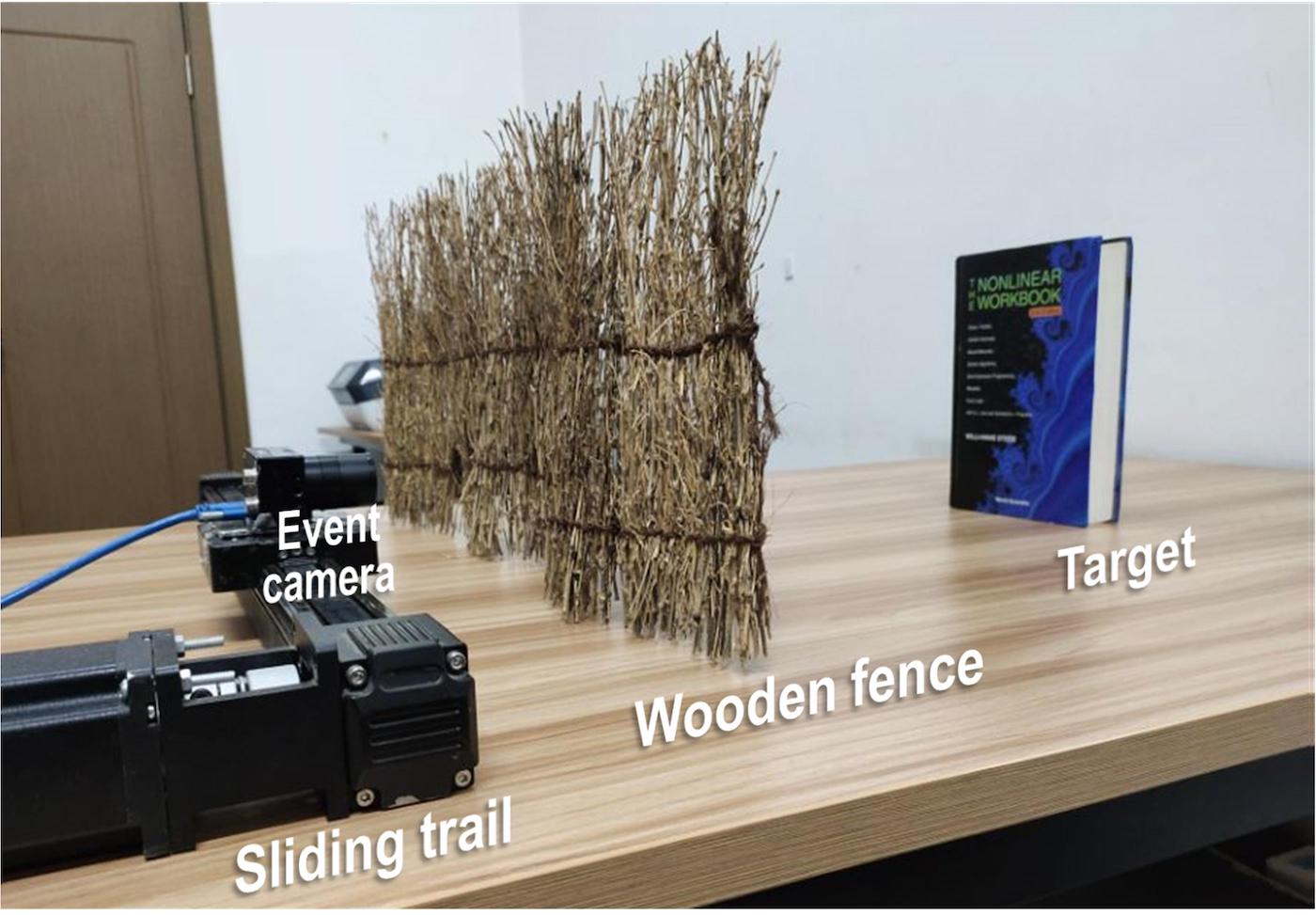

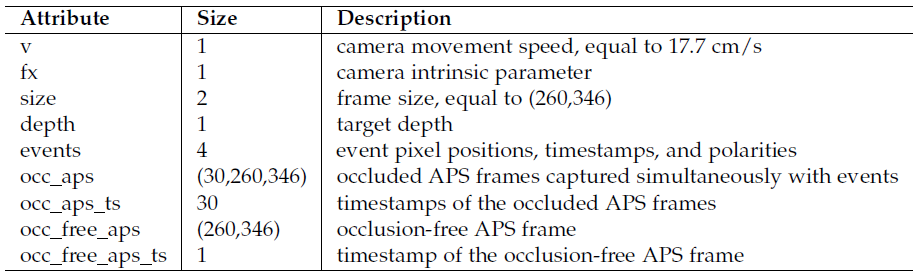

We construct a new SAI dataset containing 588 pairs of data in both indoor and outdoor environments. We mount a DAVIS346 camera on a programmable slide rail and use a wooden fence to imitate densely occluded scenes. As the camera moves linearly along the rail, events are triggered from different viewpoints, and the occluded frames are captured simultaneously by the same DAVIS346 camera. For each pair of data, an occlusion-free frame is also provided as the ground-truth image.

All the data are released as NumPy (.npy) files, and each pair of data contains the following information:

Feel free to download our SAI dataset (3.46 GB). You are also welcome to check out our EF-SAI dataset via OneDrive or Baidu Netdisk.
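Before running the pipeline, it can help to inspect what a downloaded .npy file contains. The sketch below assumes that a file stores either a plain array or a pickled Python dict. NumPy saves a dict as a 0-d object array, so that case is unwrapped with `.item()`. The helper names are hypothetical, and the actual keys of the SAI files are not assumed here. `allow_pickle=True` executes pickled data, so use it only on files from a trusted source.

```python
import numpy as np

def load_sai_file(path):
    """Load one .npy file from the SAI dataset.

    Assumption: a file holds either a plain array or a pickled Python dict
    (stored by NumPy as a 0-d object array); the dict case is unwrapped.
    """
    data = np.load(path, allow_pickle=True)  # pickles: only load trusted files
    if isinstance(data, np.ndarray) and data.dtype == object and data.shape == ():
        data = data.item()
    return data

def summarize(data):
    """Shape (or type name) of each entry, to see what a file contains."""
    if isinstance(data, dict):
        return {key: getattr(value, "shape", type(value).__name__)
                for key, value in data.items()}
    return getattr(data, "shape", type(data).__name__)
```

For example, `print(summarize(load_sai_file(path)))` lists each stored field with its array shape. Here `path` points to any downloaded file.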

The pretrained models can be downloaded from Google Drive. Note that the network structure differs slightly from the model in our CVPR paper.

We provide some example data from the SAI dataset here for a quick start.

Change the working directory to './codes/':

```shell
cd codes
```

- Create directories

```shell
mkdir -p PreTraining Example_data/Raw
```

- Copy the pretrained models (Hybrid.pth and RefocusNet.pth) to the directory './PreTraining/'

- Copy the example data to the directory './Example_data/Raw/'
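The setup steps above can be checked with a short script run from './codes/'. This is a sketch and not part of the repository. It creates the same folders as `mkdir -p`, reports which of the two checkpoints named in the commands below are still missing, and counts the example files. Counting `*.npy` files assumes the example data use the dataset's .npy format. The function name is hypothetical.

```python
from pathlib import Path

# Checkpoint paths used by the test commands in this README.
REQUIRED_MODELS = ("PreTraining/Hybrid.pth", "PreTraining/RefocusNet.pth")

def prepare_quick_start(root="."):
    """Create the quick-start folders under `root` (the './codes/' directory)
    and report missing checkpoints plus the number of example .npy files."""
    root = Path(root)
    for sub in ("PreTraining", "Example_data/Raw"):
        (root / sub).mkdir(parents=True, exist_ok=True)  # same as `mkdir -p`
    missing = [m for m in REQUIRED_MODELS if not (root / m).is_file()]
    n_examples = len(list((root / "Example_data" / "Raw").glob("*.npy")))
    return missing, n_examples
```

An empty `missing` list together with a non-zero example count means the quick start is ready to run.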

Run E-SAI+Hybrid with the manual refocusing module.

- Preprocess event data with manual refocusing

```shell
python Preprocess.py --do_event_refocus=1 --input_path=./Example_data/Raw/ --save_path=./Example_data/Processed-M/
```

- Run reconstruction (using only HybridNet)

```shell
python Test_ManualRefocus.py --reconNet=./PreTraining/Hybrid.pth --input_path=./Example_data/Processed-M/ --save_path=./Results-M/
```

The reconstruction results will be saved to save_path (default: './Results-M/').
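The two manual-refocusing steps can also be chained from Python so that reconstruction only starts if preprocessing succeeded. The sketch below rebuilds exactly the flags shown above and runs them with `subprocess.run(..., check=True)`. The helper names are hypothetical, and it must be run from './codes/'.

```python
import subprocess
import sys

def manual_refocus_commands(data_dir="./Example_data", model_dir="./PreTraining",
                            out_dir="./Results-M"):
    """The two quick-start commands for E-SAI+Hybrid (M), with the flags shown above."""
    return [
        [sys.executable, "Preprocess.py", "--do_event_refocus=1",
         f"--input_path={data_dir}/Raw/", f"--save_path={data_dir}/Processed-M/"],
        [sys.executable, "Test_ManualRefocus.py", f"--reconNet={model_dir}/Hybrid.pth",
         f"--input_path={data_dir}/Processed-M/", f"--save_path={out_dir}/"],
    ]

def run_all(commands):
    """Run the commands in order from './codes/', stopping at the first failure."""
    for cmd in commands:
        print("Running:", " ".join(cmd))
        subprocess.run(cmd, check=True)  # raises CalledProcessError on a non-zero exit
```

Usage: `run_all(manual_refocus_commands())`. Using `sys.executable` runs the scripts with the interpreter of the active `esai` environment.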

Run E-SAI+Hybrid with the automatic refocusing module.

- Preprocess event data without refocusing

```shell
python Preprocess.py --do_event_refocus=0 --input_path=./Example_data/Raw/ --save_path=./Example_data/Processed-A/
```

- Run reconstruction (using HybridNet and RefocusNet)

```shell
python Test_AutoRefocus.py --reconNet=./PreTraining/Hybrid.pth --refocusNet=./PreTraining/RefocusNet.pth --input_path=./Example_data/Processed-A/ --save_path=./Results-A/
```

The reconstruction results will be saved to save_path (default: './Results-A/').

This script also computes the Average Pixel Shift Error (APSE) and saves it to './Results-A/APSE.txt'.
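This README does not define APSE. Judging only from its name, it measures how far the pixel shifts estimated for refocusing are from the true ones. The sketch below assumes the simplest such definition: the mean absolute difference, in pixels, between predicted and ground-truth shifts. It is a hypothetical illustration, and `Test_AutoRefocus.py` may compute the metric differently.

```python
import numpy as np

def apse(pred_shift, gt_shift):
    """Average absolute pixel-shift error (pixels). Hypothetical definition:
    the mean of |predicted shift - true shift| over all entries."""
    pred = np.asarray(pred_shift, dtype=np.float64)
    gt = np.asarray(gt_shift, dtype=np.float64)
    if pred.shape != gt.shape:
        raise ValueError(f"shape mismatch: {pred.shape} vs {gt.shape}")
    return float(np.mean(np.abs(pred - gt)))

# Example: per-time-step shifts (pixels) for one sample.
print(apse([0.0, 1.5, -2.0], [0.0, 1.0, -3.0]))  # 0.5
```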

Evaluate the reconstruction results with the PSNR, SSIM, and LPIPS metrics.

- To evaluate a particular set of results, such as the E-SAI+Hybrid (M) results on our example data, run:

```shell
python Evaluation.py --input_path=./Results-M/
```

This script writes the quantitative results to './Results-M/IQA.txt'.

- To evaluate on the whole SAI dataset, arrange the results as follows:

```
<project root>
|-- Results
| |-- Indoor
| | |-- Object
| | |-- Portrait
| | |-- Picture
| |-- Outdoor
| | |-- Scene
```

Under each category folder, put the reconstruction results and the corresponding ground-truth images in the 'Test' and 'True' directories, respectively. For example:

```
<project root>
|-- Results
| |-- Outdoor
| | |-- Scene
| | | |-- Test
| | | | |-- 000000.png
| | | | |-- 000001.png
| | | | |-- ...
| | | |-- True
| | | | |-- 000000.png
| | | | |-- 000001.png
| | | | |-- ...
```
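Before running the dataset-level evaluation, a quick consistency check can catch unpaired files. The sketch below walks `Results/<Indoor|Outdoor>/<category>` and matches `Test` and `True` images by file name, as in the layout above. It reports the number of pairs and any file without a counterpart. The function names are hypothetical, and the sketch is not part of the repository.

```python
from pathlib import Path

def _png_names(folder):
    """Names of the .png files in `folder`, or an empty set if it does not exist."""
    return {p.name for p in folder.glob("*.png")} if folder.is_dir() else set()

def check_results_layout(results_root="./Results"):
    """For each <Indoor|Outdoor>/<category> folder, count matched Test/True
    image pairs and list files that have no counterpart."""
    report = {}
    for split in ("Indoor", "Outdoor"):
        split_dir = Path(results_root) / split
        if not split_dir.is_dir():
            continue
        for category in sorted(p for p in split_dir.iterdir() if p.is_dir()):
            test = _png_names(category / "Test")
            true = _png_names(category / "True")
            report[f"{split}/{category.name}"] = {
                "pairs": len(test & true),
                "missing_gt": sorted(test - true),
                "missing_result": sorted(true - test),
            }
    return report
```

Any non-empty `missing_gt` or `missing_result` list points to a result or ground-truth image that would otherwise be skipped or mismatched.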

Then run:

```shell
python Evaluation_SAIDataset.py --indoor_path=./Results/Indoor/ --outdoor_path=./Results/Outdoor/
```

The quantitative results are printed to the terminal and recorded in IQA.txt files. Note that the evaluation in our paper uses center-cropped 256×256 images.
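The 256×256 center crop and PSNR can be sketched with NumPy alone. A DAVIS346 frame is 260×346 pixels, so the crop keeps rows 2 to 257 and columns 45 to 300. This is an illustrative re-implementation rather than the repository's evaluation code, which may rely on the optional `sewar` package. SSIM and LPIPS are left to `sewar` and `lpips`.

```python
import numpy as np

def center_crop(img, size=256):
    """Central size x size window of an H x W (x C) image."""
    h, w = img.shape[:2]
    if h < size or w < size:
        raise ValueError(f"image {h}x{w} is smaller than {size}x{size}")
    top, left = (h - size) // 2, (w - size) // 2
    return img[top:top + size, left:left + size]

def psnr(pred, gt, data_range=255.0):
    """Peak signal-to-noise ratio in dB for images with values in [0, data_range]."""
    mse = np.mean((np.asarray(pred, np.float64) - np.asarray(gt, np.float64)) ** 2)
    if mse == 0:
        return float("inf")
    return float(10.0 * np.log10(data_range ** 2 / mse))

# A DAVIS346 frame is 260 x 346 pixels, so the crop starts at row 2, column 45.
frame = np.zeros((260, 346), dtype=np.uint8)
print(center_crop(frame).shape)  # (256, 256)
```

To compare a result with its ground truth, load both images (for example with `cv2.imread(path, cv2.IMREAD_GRAYSCALE)`; grayscale is an assumption here). Crop both with `center_crop`, then pass the crops to `psnr`.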

If you find our work useful in your research, please cite:

```bibtex
@inproceedings{zhang2021event,
  title={Event-based Synthetic Aperture Imaging with a Hybrid Network},
  author={Zhang, Xiang and Liao, Wei and Yu, Lei and Yang, Wen and Xia, Gui-Song},
  year={2021},
  booktitle={CVPR},
}

@inproceedings{yu2022learning,
  title={Learning to See Through with Events},
  author={Yu, Lei and Zhang, Xiang and Liao, Wei and Yang, Wen and Xia, Gui-Song},
  year={2022},
  booktitle={IEEE TPAMI},
}
```