This repository collects my GAN models for generating anime images.

- Python 3.6+

- PyTorch 0.4.0

- numpy 1.14.1, matplotlib 2.2.2, scipy 1.1.0

- imageio 2.3.0

- tqdm 4.24.0

- keras 2.1.2 (for WGAN_keras)

- tensorflow 1.2.1 (for WGAN_keras)

- cv2 (for ConditionalGAN), installed with: pip install opencv-python
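To check an environment against the versions above, something like the following could help. It is a sketch, not part of the repository. The pip distribution names ("torch" for PyTorch, "opencv-python" for cv2) are assumptions, and the default lookup uses importlib.metadata, which needs Python 3.8+ even though the models themselves target Python 3.6+.

```python
"""Compare installed package versions with the pinned versions (sketch)."""
from importlib.metadata import PackageNotFoundError, version  # Python 3.8+
from typing import Callable, Dict, List, Optional

# Pins copied from the dependency list. Keys are pip distribution names,
# which is an assumption ("torch" for PyTorch, "opencv-python" for cv2).
# None means any version is accepted (cv2 has no pinned version).
PINS: Dict[str, Optional[str]] = {
    "torch": "0.4.0",
    "numpy": "1.14.1",
    "matplotlib": "2.2.2",
    "scipy": "1.1.0",
    "imageio": "2.3.0",
    "tqdm": "4.24.0",
    "keras": "2.1.2",
    "tensorflow": "1.2.1",
    "opencv-python": None,
}


def installed_version(dist: str) -> Optional[str]:
    """Return the installed version of a distribution, or None if it is missing."""
    try:
        return version(dist)
    except PackageNotFoundError:
        return None


def check_pins(
    pins: Dict[str, Optional[str]],
    lookup: Callable[[str], Optional[str]] = installed_version,
) -> List[str]:
    """Return one message per missing or mismatched package; empty means all good."""
    problems = []
    for dist, pinned in pins.items():
        found = lookup(dist)
        if found is None:
            problems.append(f"{dist}: not installed")
        elif pinned is not None and found != pinned:
            problems.append(f"{dist}: found {found}, expected {pinned}")
    return problems


if __name__ == "__main__":
    for message in check_pins(PINS):
        print(message)
```

The lookup function is a parameter so the comparison logic can be exercised without touching the real environment.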

For DCGAN, you need to download the dataset faces.zip (from OneDrive or Baiduyun). Then clone the repository (the first command below), extract faces.zip, and move the extracted files into Anime_GAN/DCGAN/.

$ git clone https://github.com/FangYang970206/Anime_GAN.git
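The extract-and-move step can also be done from Python. This is a minimal sketch that assumes the downloaded archive sits in the directory containing the cloned Anime_GAN/ folder; the helper name extract_dataset is hypothetical, and it does not check the archive's internal folder layout.

```python
"""Extract a downloaded dataset archive into a model folder (sketch)."""
import zipfile
from pathlib import Path
from typing import List, Union

PathLike = Union[str, Path]


def extract_dataset(archive: PathLike, dest: PathLike) -> List[str]:
    """Unzip `archive` into `dest`, creating `dest` if needed; return member names."""
    dest = Path(dest)
    dest.mkdir(parents=True, exist_ok=True)
    with zipfile.ZipFile(archive) as zf:
        # extractall strips absolute paths and ".." components from member names.
        zf.extractall(dest)
        return zf.namelist()


# Example (hypothetical paths, relative to the folder that holds Anime_GAN/):
# extract_dataset("faces.zip", "Anime_GAN/DCGAN/")
```

The same helper can target the other model folders by changing dest.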

$ cd Anime_GAN/DCGAN/

$ python main.py

The WGAN implemented in PyTorch has bugs. If anyone can find them, I would greatly appreciate it!
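For anyone hunting the WGAN bug, a reference for the standard weight-clipping WGAN objectives may be a useful cross-check. This is a NumPy sketch of the textbook formulation, not this repository's code; the clip value 0.01 is the usual default from the WGAN paper and is assumed here.

```python
"""Reference WGAN (weight-clipping) objectives for cross-checking (sketch)."""
from typing import List

import numpy as np


def critic_loss(real_scores: np.ndarray, fake_scores: np.ndarray) -> float:
    """Critic minimizes E[D(G(z))] - E[D(x)], i.e. maximizes E[D(x)] - E[D(G(z))]."""
    return float(np.mean(fake_scores) - np.mean(real_scores))


def generator_loss(fake_scores: np.ndarray) -> float:
    """Generator minimizes -E[D(G(z))]."""
    return float(-np.mean(fake_scores))


def clip_weights(weights: List[np.ndarray], clip_value: float = 0.01) -> List[np.ndarray]:
    """Clamp every critic weight array into [-clip_value, clip_value].

    Applied to the critic only, after each critic update.
    """
    return [np.clip(w, -clip_value, clip_value) for w in weights]
```

Mistakes that commonly break WGAN training include a sigmoid on the critic output, swapped signs in the two losses, and clipping the generator's weights instead of the critic's; these are general suspects, not confirmed bugs in this code.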

I implemented a Keras version, Anime_WGAN. As with DCGAN, download the dataset faces.zip (the same one used for DCGAN, from OneDrive or Baiduyun). Then clone the repository (the first command below), extract faces.zip, and move the extracted files into Anime_GAN/WGAN_keras/faces/.

$ git clone https://github.com/FangYang970206/Anime_GAN.git

$ cd Anime_GAN/WGAN_keras/

$ python wgan_keras.py

For ConditionalGAN, you need to download the dataset images.zip (from OneDrive or Baiduyun). Then clone the repository (the first command below), extract images.zip, and move the extracted files into Anime_GAN/ConditionalGAN/. The tag.csv file holds the dataset labels, which include hair color and eye color. The test.txt file contains the test labels used to generate test images.
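As an illustration of how hair and eye labels can become a conditioning input, here is a hypothetical sketch. It assumes each tag.csv row looks like "0,aqua hair blue eyes" (an image id, then single-word "<color> hair <color> eyes"); the real file may differ, and the color lists below are placeholders rather than the dataset's actual vocabulary.

```python
"""Turn tag.csv-style rows into one-hot condition vectors (hypothetical sketch)."""
import csv
from typing import Dict, Iterable, List, Tuple

# Placeholder vocabularies; replace with the colors that actually occur in tag.csv.
HAIR_COLORS = ["aqua", "black", "blonde", "blue", "brown"]
EYE_COLORS = ["aqua", "black", "blue", "brown", "green"]


def parse_tag(text: str) -> Tuple[str, str]:
    """Split "aqua hair blue eyes" into ("aqua", "blue"); assumes one-word colors."""
    words = text.split()
    hair = words[words.index("hair") - 1]
    eyes = words[words.index("eyes") - 1]
    return hair, eyes


def one_hot(hair: str, eyes: str) -> List[int]:
    """Concatenate a hair one-hot and an eye one-hot into a single vector."""
    vec = [0] * (len(HAIR_COLORS) + len(EYE_COLORS))
    vec[HAIR_COLORS.index(hair)] = 1
    vec[len(HAIR_COLORS) + EYE_COLORS.index(eyes)] = 1
    return vec


def load_tags(lines: Iterable[str]) -> Dict[str, List[int]]:
    """Map each image id to its condition vector."""
    labels = {}
    for row in csv.reader(lines):
        if len(row) < 2:
            continue  # skip blank or malformed lines
        image_id, tag = row[0], row[1]
        labels[image_id] = one_hot(*parse_tag(tag))
    return labels
```

Keeping hair and eye colors in separate one-hot segments lets each attribute be set independently when generating test images.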

$ git clone https://github.com/FangYang970206/Anime_GAN.git

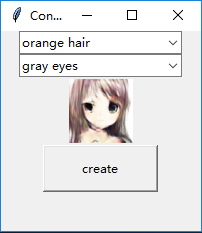

$ cd Anime_GAN/ConditionalGAN/

There are two modes: train and infer. Before using infer mode, make sure you have trained the ConditionalGAN.
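A --mode switch like this is commonly handled with argparse. In this sketch, only the --mode flag and its "train"/"infer" values come from this README; the --checkpoint flag, its default generate.t7, the default mode, and the run() stub are hypothetical placeholders, not the repository's actual main.py.

```python
"""Sketch of a train/infer mode switch with an early checkpoint check."""
import argparse
import os


def build_parser() -> argparse.ArgumentParser:
    parser = argparse.ArgumentParser(description="ConditionalGAN entry point (sketch)")
    # choices= makes argparse reject anything other than the two documented modes.
    parser.add_argument("--mode", choices=["train", "infer"], default="train")
    parser.add_argument("--checkpoint", default="generate.t7")  # hypothetical flag
    return parser


def run(args: argparse.Namespace) -> str:
    if args.mode == "infer" and not os.path.isfile(args.checkpoint):
        # Infer mode needs a trained generator, so fail early with a clear message.
        raise FileNotFoundError(f"{args.checkpoint} not found; run with --mode train first")
    # Stand-ins for the real training / inference routines.
    return "training" if args.mode == "train" else "inferring"


if __name__ == "__main__":
    print(run(build_parser().parse_args()))
```

Failing before any model is built makes a missing checkpoint obvious instead of surfacing later as a loading error.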

$ python main.py --mode "train"

$ python main.py --mode "infer"

Before running GUI.py, train the ConditionalGAN to obtain generate.t7.

$ python GUI.py