`com.unity.perception` is in active development. Its features and API are subject to significant change as development progresses.

Perception Package (Unity Computer Vision)

The Perception package provides a toolkit for generating large-scale datasets for computer vision training and validation. It currently focuses on a handful of camera-based use cases and will eventually expand to other sensor types and machine learning tasks.

Visit the Unity Computer Vision page for more information on our tools and offerings!

Quick Installation Instructions

Get your local Perception workspace up and running quickly. Recommended for users with prior Unity experience.

Perception Tutorial

Detailed instructions covering all the important steps: installing the Unity Editor, creating your first computer vision data generation project, building a randomized Scene, and generating large-scale synthetic datasets with Unity Simulation. No prior Unity experience required.

Human Pose Labeling and Randomization Tutorial

Step-by-step instructions for using the keypoint, pose, and animation randomization tools included in the Perception package. We recommend finishing Phase 1 of the Perception Tutorial above before starting this tutorial.

FAQ

Check out our FAQ for a list of common questions, tips, tricks, and some sample code.

In-depth documentation on individual components of the package.

| Feature | Description |
|---|---|
| Labeling | A component that marks a GameObject and its descendants with a set of labels. |
| Label Config | An asset that defines a taxonomy of labels for ground truth generation. |
| Perception Camera | Captures RGB images and ground truth from a Camera. |
| Dataset Capture | Ensures sensors are triggered at proper rates and accepts data for the JSON dataset. |
| Randomization | The Randomization tool set lets you integrate domain randomization principles into your simulation. |
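The Randomization tool set is written in C# and runs inside Unity. To illustrate the domain randomization loop it supports in a language-agnostic way, the Python sketch below assumes a scenario that, at the start of each iteration, lets every randomizer draw fresh parameter values from a sampler before a frame is captured. `UniformSampler`, `RotationRandomizer`, `on_iteration_start`, and `run_scenario` are hypothetical names for illustration only, not the package's API.

```python
import random
from dataclasses import dataclass


@dataclass
class UniformSampler:
    """Hypothetical sampler (not the Perception API): draws floats uniformly from [low, high]."""
    low: float
    high: float

    def sample(self, rng: random.Random) -> float:
        return rng.uniform(self.low, self.high)


@dataclass
class RotationRandomizer:
    """Hypothetical randomizer: gives every object a new Y rotation each iteration."""
    sampler: UniformSampler

    def on_iteration_start(self, objects: list, rng: random.Random) -> None:
        for obj in objects:
            obj["rotation_y"] = self.sampler.sample(rng)


def run_scenario(randomizers: list, objects: list, iterations: int, seed: int = 0) -> list:
    """Runs a fixed number of iterations and records the randomized scene state."""
    rng = random.Random(seed)  # a fixed seed makes the whole run reproducible
    frames = []
    for _ in range(iterations):
        # Every randomizer mutates the scene before the frame is "captured".
        for randomizer in randomizers:
            randomizer.on_iteration_start(objects, rng)
        # In Unity, a Perception Camera would capture an RGB image and ground truth here;
        # this sketch only snapshots the object parameters.
        frames.append([dict(obj) for obj in objects])
    return frames


if __name__ == "__main__":
    scene = [{"name": "cube"}, {"name": "sphere"}]
    rotate = RotationRandomizer(UniformSampler(0.0, 360.0))
    for frame in run_scenario([rotate], scene, iterations=3, seed=42):
        print(frame)
```

Seeding the random generator makes each run reproducible, which helps when a dataset has to be regenerated; changing the seed produces a new set of variations.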

For setup problems or discussions about leveraging the Perception package in your project, please create a new thread on the Unity Computer Vision forum and make sure to include as much detail as possible. If you run into any other problems with the Perception package or have a specific feature request, please submit a GitHub issue.

For any other questions or feedback, connect directly with the Computer Vision team at computer-vision@unity3d.com.

SynthDet is an end-to-end solution for training a 2D object detection model using synthetic data.

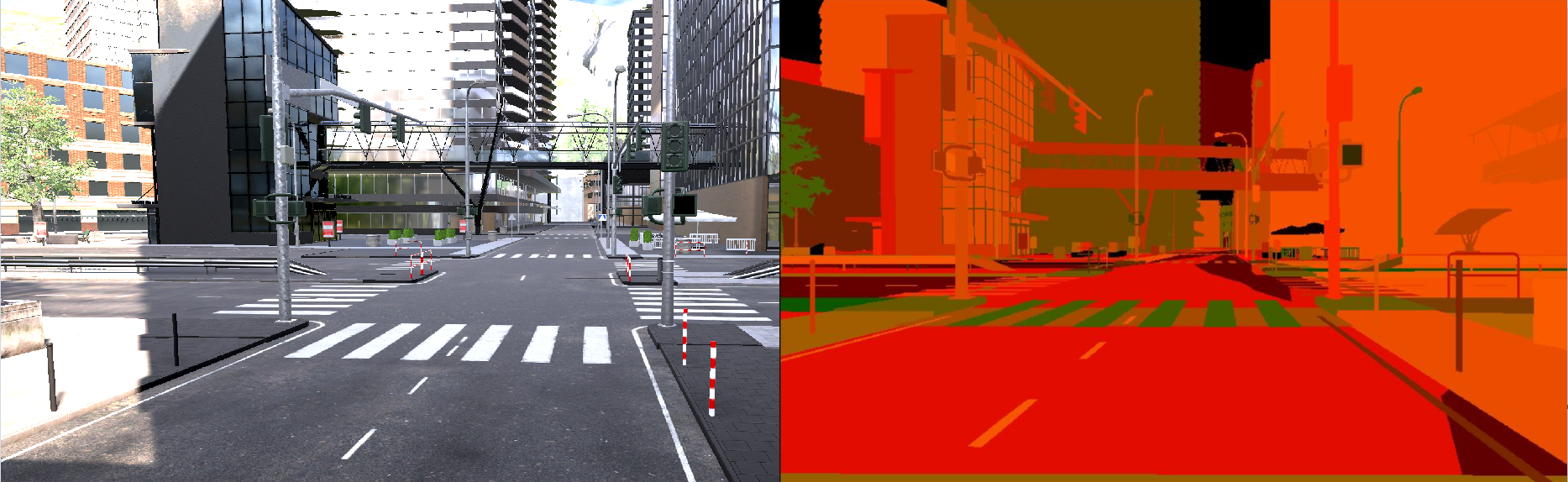

The Unity Simulation Smart Camera Example illustrates how the Perception package could be used in a smart city or autonomous vehicle simulation. You can generate datasets locally or at scale in Unity Simulation.

The Robotics Object Pose Estimation Demo & Tutorial demonstrates pick-and-place with a robot arm in Unity. It includes using ROS with Unity, importing URDF models, collecting labeled training data using the Perception package, and training and deploying a deep learning model.

The repository includes two projects for local development in the `TestProjects` folder: one set up for HDRP and the other for URP.

For the closest conformity to coding standards and the best overall experience, we suggest JetBrains Rider or Visual Studio with JetBrains ReSharper. For an optimal experience, also perform the following steps:

- To allow navigating to code in all packages included in your project, go to Edit -> Preferences... -> External Tools in your Unity Editor and check Generate all .csproj files.

- Linux Editor versions 2019.4.7f1 and 2019.4.8f1 might hang when importing HDRP-based Perception projects. On Linux, use 2019.4.6f1 or 2020.1 instead.

If you find this package useful, consider citing it using:

    @misc{com.unity.perception2021,
        title={Unity {P}erception Package},
        author={{Unity Technologies}},
        howpublished={\url{https://github.com/Unity-Technologies/com.unity.perception}},
        year={2020}
    }