This repo contains the code for the DynCL algorithm presented in "Self-supervised contrastive learning performs non-linear system identification".

We will open-source the code upon publication of our pre-print. Stay tuned!

To be notified about the code release, watch 🕶️ the repo!

In case you need early access to the codebase (for benchmarking/comparisons, application of DynCL to a dataset, etc.), please send an email to Steffen Schneider.

Self-supervised learning (SSL) approaches have brought tremendous success across many tasks and domains. It has been argued that these successes can be attributed to a link between SSL and identifiable representation learning: Temporal structure and auxiliary variables ensure that latent representations are related to the true underlying generative factors of the data. Here, we deepen this connection and show that SSL can perform system identification in latent space. We propose a new model to uncover linear, switching linear and non-linear dynamics under a non-linear observation model, give theoretical guarantees and validate them empirically.

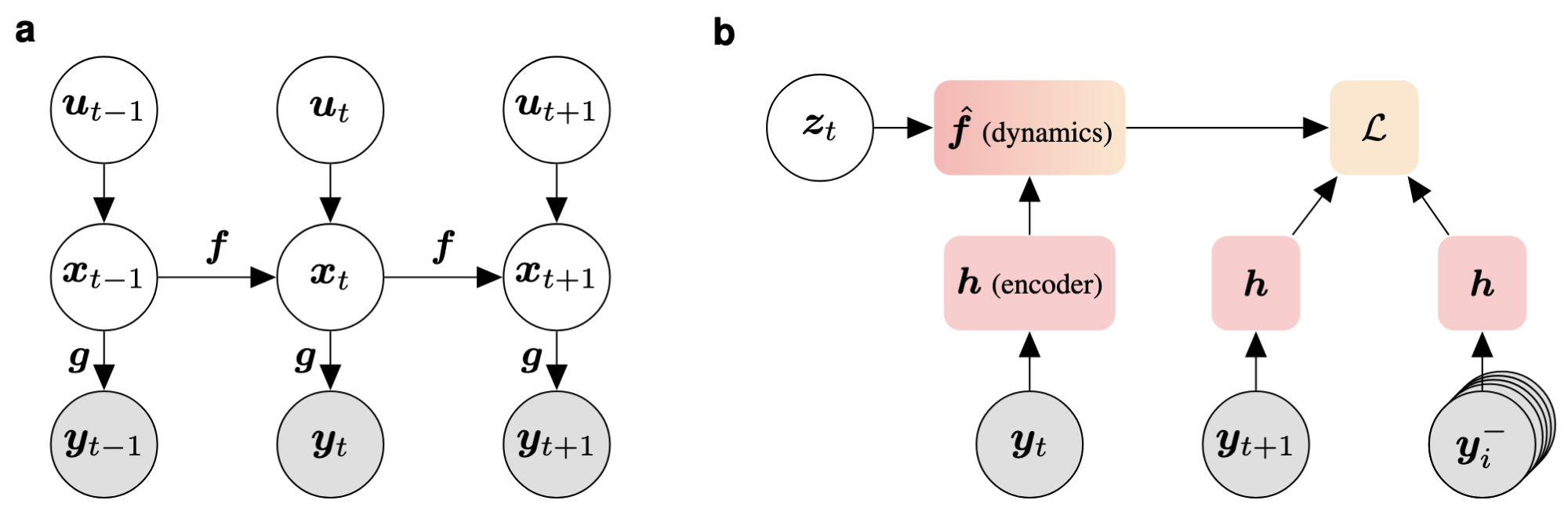

(a) The assumed data-generating process: y represents the observable input variables, x denotes the latent variables, and u is the control input. (b) General formulation of our method: it consists of an encoder h that is shared across the reference, positive, and negative samples, and a dynamics model f̂. Additionally, a (possibly latent) variable z can be used to parameterize the dynamics model (see Section 4 of the paper).
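Until the code is released, the structure in panel (b) can be illustrated with a minimal NumPy sketch. It is not the DynCL implementation. The function name `info_nce_with_dynamics`, the InfoNCE-style objective, the negative squared Euclidean similarity, and the toy linear mixing (the paper assumes a non-linear g) are all assumptions made for illustration. The sketch only shows how a shared encoder h and a dynamics model f̂ could enter a contrastive loss.

```python
import numpy as np

def info_nce_with_dynamics(h, f_hat, y_ref, y_pos, y_neg):
    """InfoNCE-style loss with a dynamics model on the reference embedding.

    h     : encoder shared by reference, positive and negative samples
    f_hat : dynamics model acting on latent embeddings
    y_ref : (B, d_obs) observations at time t
    y_pos : (B, d_obs) observations at time t + 1
    y_neg : (M, d_obs) observations drawn at other times
    """
    pred = f_hat(h(y_ref))  # predicted next latent, shape (B, d)
    pos = h(y_pos)          # embedded positives, shape (B, d)
    neg = h(y_neg)          # embedded negatives, shape (M, d)
    # Assumed similarity: negative squared Euclidean distance.
    pos_sim = -np.sum((pred - pos) ** 2, axis=1)                          # (B,)
    neg_sim = -np.sum((pred[:, None, :] - neg[None, :, :]) ** 2, axis=2)  # (B, M)
    logits = np.concatenate([pos_sim[:, None], neg_sim], axis=1)          # (B, 1 + M)
    # Numerically stable log-sum-exp over the positive and all negatives.
    m = logits.max(axis=1, keepdims=True)
    log_norm = m[:, 0] + np.log(np.exp(logits - m).sum(axis=1))
    return float(np.mean(log_norm - pos_sim))

# Toy data (hypothetical): 2D latents rotating by 90 degrees per step,
# observed through a linear mixing G that stands in for the non-linear g.
rng = np.random.default_rng(0)
theta = np.pi / 2
A = np.array([[np.cos(theta), -np.sin(theta)],
              [np.sin(theta), np.cos(theta)]])
G = rng.normal(size=(2, 5))
x_t = rng.normal(size=(256, 2))
x_next = x_t @ A.T
x_neg = rng.normal(size=(256, 2))

h = lambda y: y @ np.linalg.pinv(G)  # toy encoder that inverts the linear mixing
loss_true = info_nce_with_dynamics(h, lambda x: x @ A.T, x_t @ G, x_next @ G, x_neg @ G)
loss_identity = info_nce_with_dynamics(h, lambda x: x, x_t @ G, x_next @ G, x_neg @ G)
print(loss_true, loss_identity)
```

In this toy setting, the second call replaces f̂ with the identity, which loosely mirrors the identity-dynamics baseline mentioned in the Lorenz figure caption. In practice h would be a neural network trained by gradient descent on such a loss.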

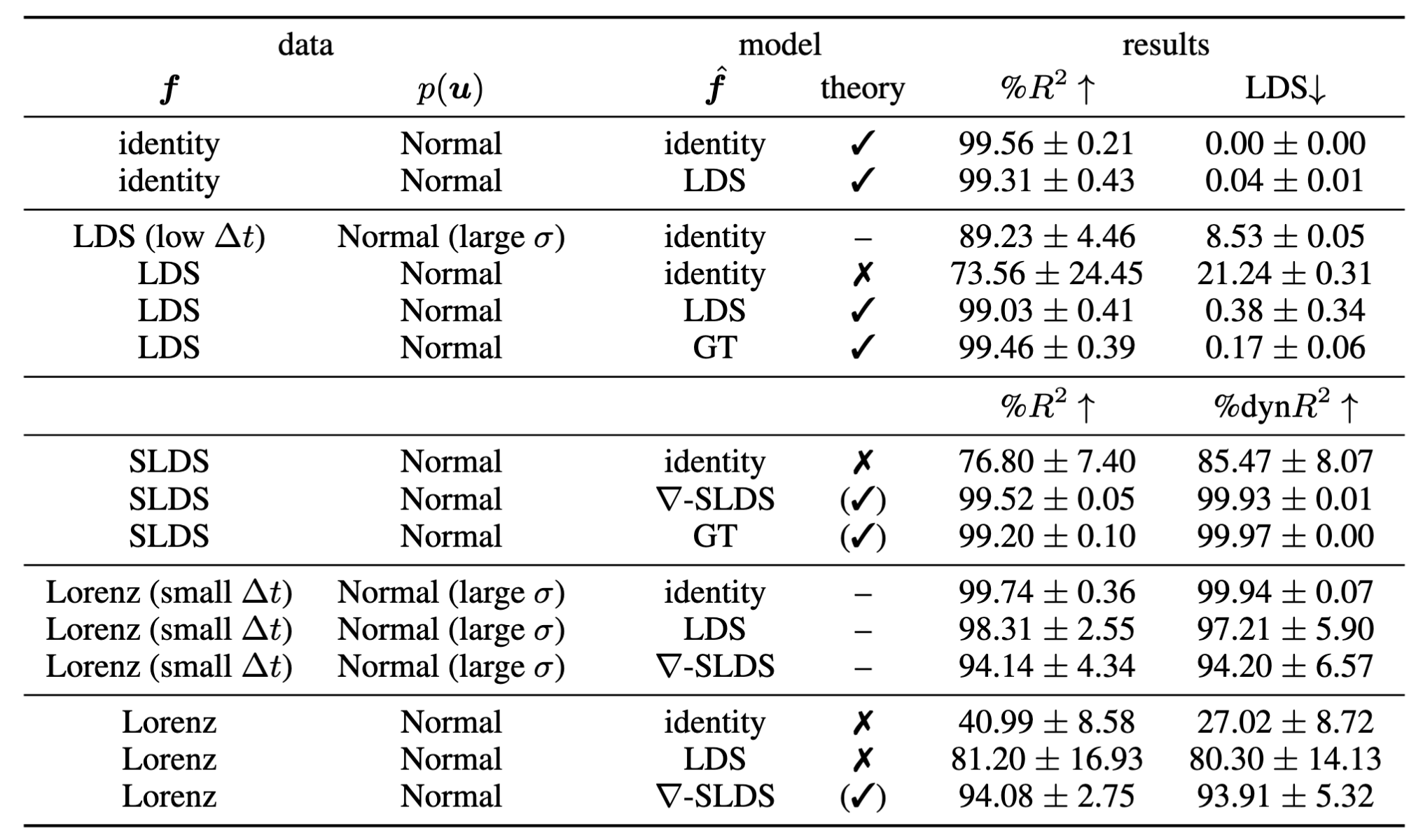

Comprehensive categorization of ground-truth dynamical processes f and model configurations f̂. Note that the mixing function g is always assumed non-linear, and h is always parameterized as a neural network. For all metrics, we report mean and standard deviation across 3 datasets (5 for Lorenz) and 3 experiment repeats.

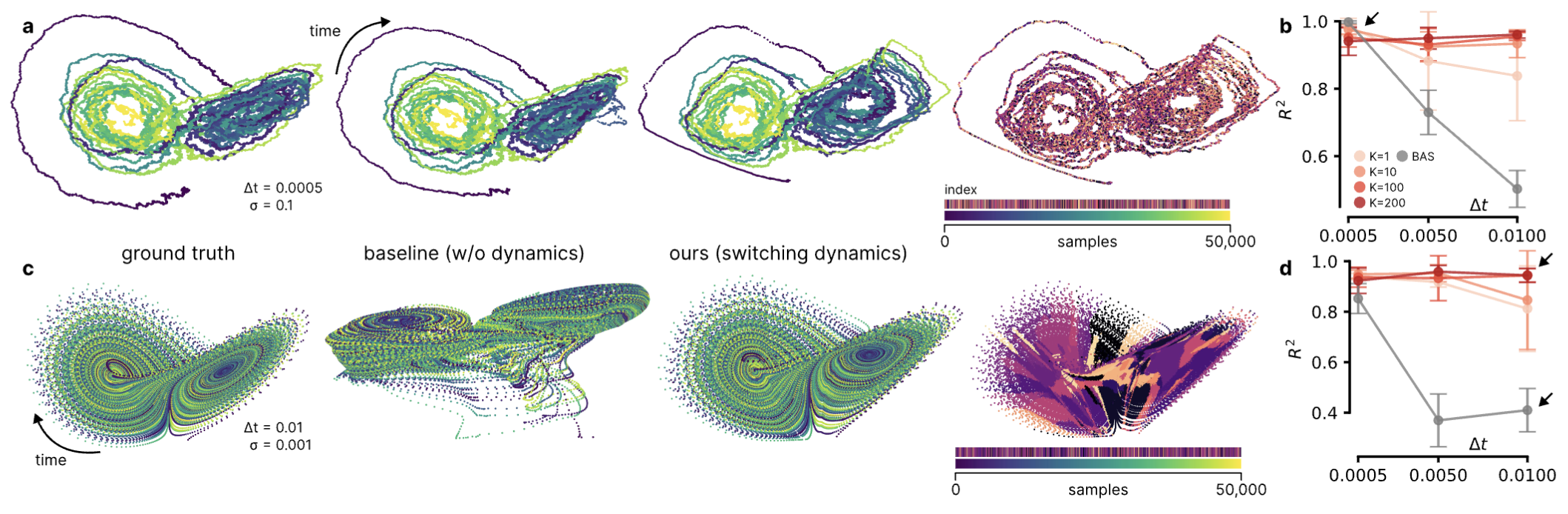

Contrastive learning of 3D non-linear dynamics following a Lorenz attractor model. (a) Left to right: ground truth dynamics for 10k samples with dt = 0.0005 and σ = 0.1, estimation results for baseline (identity dynamics), DynCL with ∇-SLDS, estimated mode sequence. (b) Empirical identifiability (R²) between baseline (BAS) and ∇-SLDS for varying numbers of discrete states K. (c, d) Same layout but for dt = 0.01 and σ = 0.001.
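For readers who want to generate comparable ground-truth trajectories, the following is a minimal sketch of a noisy Lorenz simulation. The caption only specifies the number of samples, dt, and σ. The classic Lorenz parameters (10, 28, 8/3), the Euler-Maruyama integration scheme, the σ√dt noise scaling, the initial state, and the function name `simulate_lorenz` are assumptions and may differ from the setup used in the paper.

```python
import numpy as np

def simulate_lorenz(n_steps=10_000, dt=0.0005, sigma=0.1,
                    x0=(1.0, 1.0, 1.0), s=10.0, rho=28.0, beta=8.0 / 3.0,
                    seed=0):
    """Simulate a noisy Lorenz system with Euler-Maruyama steps.

    Returns an array of shape (n_steps, 3) holding the latent trajectory.
    """
    rng = np.random.default_rng(seed)
    x = np.empty((n_steps, 3))
    x[0] = x0
    for t in range(n_steps - 1):
        a, b, c = x[t]
        # Deterministic Lorenz drift.
        drift = np.array([s * (b - a), a * (rho - c) - b, a * b - beta * c])
        # Assumed noise model: isotropic Gaussian scaled by sigma * sqrt(dt).
        noise = sigma * np.sqrt(dt) * rng.standard_normal(3)
        x[t + 1] = x[t] + dt * drift + noise
    return x

# Settings from panel (a): 10k samples, dt = 0.0005, sigma = 0.1.
traj = simulate_lorenz()
print(traj.shape)
```

Calling `simulate_lorenz(dt=0.01, sigma=0.001)` would correspond to the settings listed for panels (c, d). A non-linear mixing function would still need to be applied to obtain observations y.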

@article{gozalezlaizschmidt2024dyncl,
  author = {González Laiz, Rodrigo and Schmidt, Tobias and Schneider, Steffen},
  title = {Self-supervised contrastive learning performs non-linear system identification},
  journal = {arxiv},
  year = {2024},
  month = {October},
  url = {https://arxiv.org/abs/2410.14673}
}