A smarter web fuzzing tool that combines local LLM models (via Ollama) and ffuf to optimize directory and file discovery.

I wrote a blog post about the ideas behind this tool: Brainstorm tool release: Optimizing web fuzzing with local LLMs

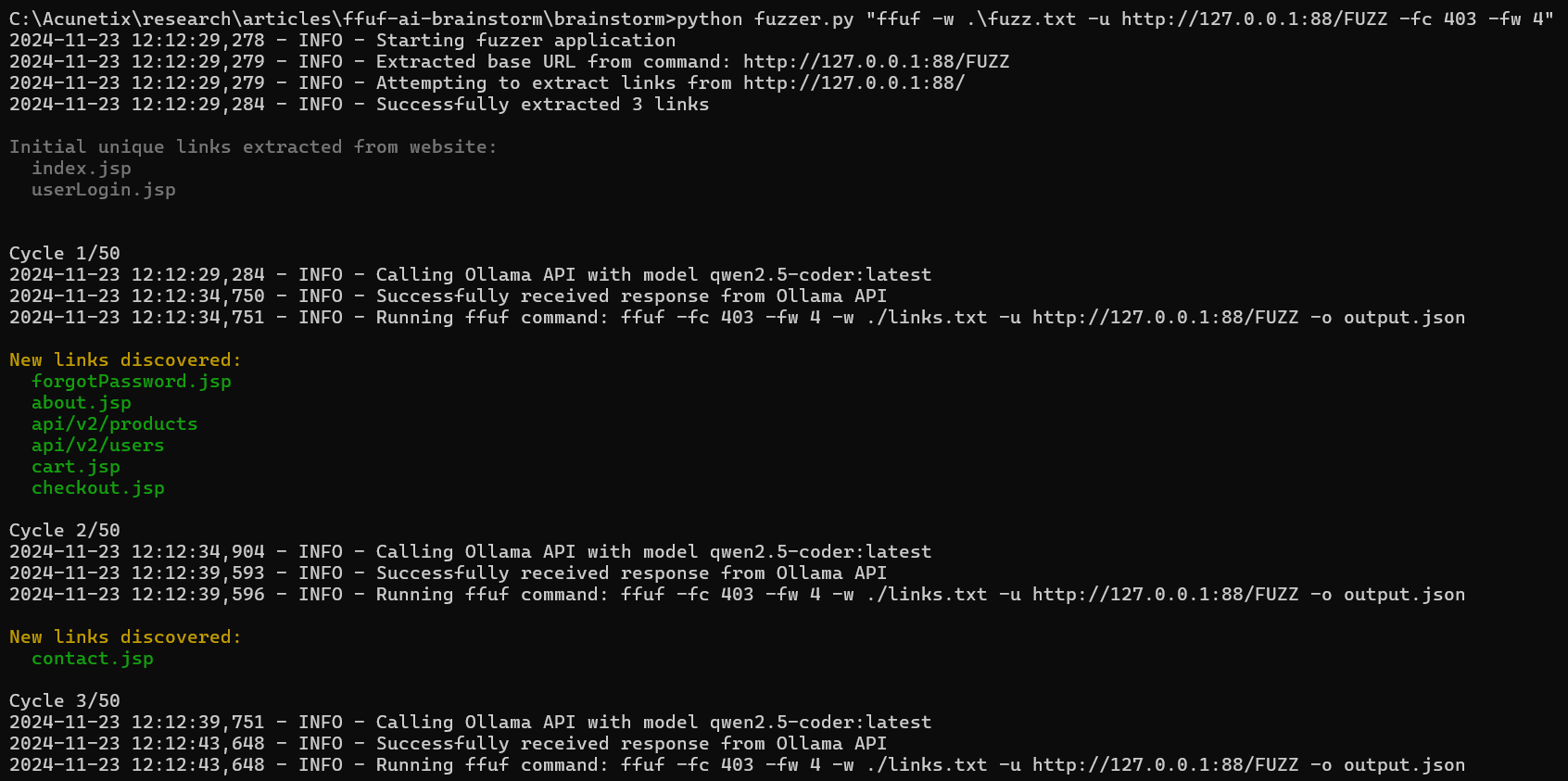

Combines traditional web fuzzing techniques with AI-powered path generation to discover hidden endpoints, files, and directories in web applications.

This tool enhances traditional web fuzzing by using local AI language models (via Ollama) to generate intelligent guesses for potential paths and filenames. It works by:

- Extracting initial links from the target website

- Using AI to analyze the structure and suggest new potential paths

- Fuzzing these paths using ffuf

- Learning from discoveries to generate more targeted suggestions

- Repeat

There are 2 tools:

fuzzer.py: Main fuzzer focusing on general path discoveryfuzzer_shortname.py: Specialized variant for short filename discovery (e.g., legacy 8.3 format)

- Python 3.6+

- ffuf (https://github.com/ffuf/ffuf)

- Ollama (https://ollama.ai)

- Required Python packages (see requirements.txt)

By default, the tool is using the model qwen2.5-coder:latest.

This model (or other models you want to use) needs to be downloaded first.

ollama pull qwen2.5-coder:latest# Clone the repository

git clone https://github.com/Invicti-Security/brainstorm.git

cd brainstorm

# Install Python dependencies

pip install -r requirements.txt

# Ensure ffuf is installed and in your PATH

# Ensure Ollama is running locally on port 11434# Basic fuzzing with default settings

python fuzzer.py "ffuf -w ./fuzz.txt -u http://example.com/FUZZ"

# Short filename fuzzing (specify the 8.3 filename as the last parameter)

python fuzzer_shortname.py "ffuf -w ./fuzz.txt -u http://example.com/FUZZ" "BENCHM~1.PY"--debug Enable debug mode

--cycles N Number of fuzzing cycles to run (default: 50)

--model NAME Ollama model to use (default: qwen2.5-coder:latest)

--prompt-file PATH Path to prompt file (default: prompts/files.txt)

--status-codes LIST Comma-separated list of status codes to consider successful

(default: 200,301,302,303,307,308,403,401,500)

--debug Enable debug mode

--cycles N Number of fuzzing cycles to run (default: 50)

--model NAME Ollama model to use (default: qwen2.5-coder:latest)

--status-codes LIST Comma-separated list of status codes to consider successful

# Run fuzzing with custom cycles and model

python fuzzer.py "ffuf -w ./fuzz.txt -u http://target.com/FUZZ" --cycles 100 --model llama2:latest

# Run short filename fuzzing targeting a specific file

python fuzzer_shortname.py "ffuf -w ./fuzz.txt -u http://target.com/FUZZ" "document.pdf" --cycles 25

# Benchmark different models and generate HTML report

python benchmark.py- Discovered paths are saved to

all_links.txt - Short filenames are saved to

all_filenames.txt - Real-time console output shows progress and discoveries

I've compared the most popular local LLM models, you can find the results here.