This repository contains the code for our paper on the 7th AI City Challenge Track 2, Tracked-Vehicle Retrieval by Natural Language Descriptions; our method ranked 2nd on the public leaderboard.

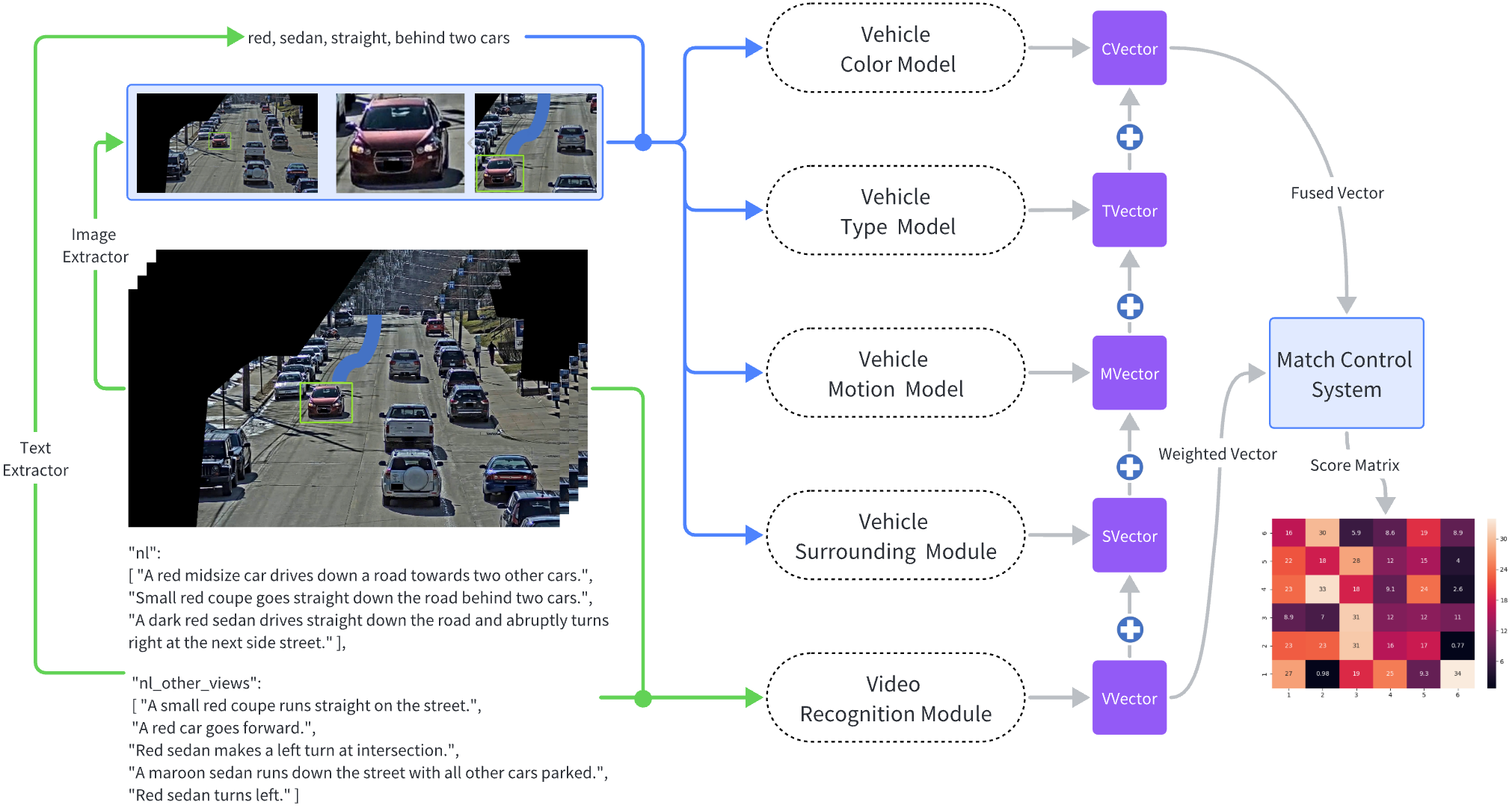

With the development of multi-modal and contrastive learning, image and video retrieval have made immense progress in recent years. Organically fusing text, image, and video knowledge opens up opportunities for multi-dimensional and multi-view retrieval, especially in traffic scenes. This paper proposes a novel Multi-modal Language Vehicle Retrieval (MLVR) system for retrieving the trajectories of tracked vehicles based on natural language descriptions. The MLVR system mainly combines an end-to-end text-video contrastive learning model, a CLIP few-shot domain adaptation method, and a semi-centralized control optimization system. By comprehensively understanding the vehicle type, color, maneuver, and surrounding environment, MLVR forms a robust method for identifying the matching trajectory from the provided natural language descriptions. With this structure, our approach achieved a Mean Reciprocal Rank (MRR) of 81.79% on the test dataset of the 7th AI City Challenge Track 2, Tracked-Vehicle Retrieval by Natural Language Descriptions, ranking 2nd on the public leaderboard.
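The MRR figure quoted above is the mean, over all text queries, of 1/rank of the correct track in the retrieved list. A minimal NumPy sketch of that metric follows; it assumes a square query-by-track score matrix whose ground-truth track for query i sits in column i, which is a hypothetical layout for illustration rather than the format used by this repository or the challenge evaluator.

```python
import numpy as np


def mean_reciprocal_rank(scores: np.ndarray) -> float:
    """Compute MRR from a square (num_queries, num_tracks) score matrix.

    Assumption for this sketch: the ground-truth track of query i is column i.
    Ties are counted in favour of the ground-truth track.
    """
    n = scores.shape[0]
    gt_scores = scores[np.arange(n), np.arange(n)]  # score of the correct track per query
    # Rank of the correct track = 1 + number of tracks scored strictly higher.
    ranks = 1 + (scores > gt_scores[:, None]).sum(axis=1)
    return float(np.mean(1.0 / ranks))


if __name__ == "__main__":
    toy_scores = np.array([
        [0.9, 0.1, 0.3],  # correct track ranked 1st
        [0.8, 0.5, 0.2],  # correct track ranked 2nd
        [0.1, 0.7, 0.4],  # correct track ranked 2nd
    ])
    print(mean_reciprocal_rank(toy_scores))  # (1 + 1/2 + 1/2) / 3
```

Because the metric only looks at the position of the single correct track, improving a query from rank 2 to rank 1 raises its contribution from 0.5 to 1.0, which is why re-ranking stages matter so much for MRR.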

pip install -r requirements.txt

MLVR

├── data # put the AI City Challenge 2023 Track 2 data here

├── docs # pictures and paper

├── preprocessing # process the data for model

├── model # modules for MLVR

│ ├── vrm # Video Recognition Module

│ ├── vct # Vehicle Color and Type Modules

│ ├── vmm # Vehicle Motion Module

│ └── vsm # Vehicle Surrounding Module

├── postprocessing # Model Postprocessing

│ ├── matrix # vrm, vct, vmm, vsm score matrices

│ └── final_results.json # submitted result (81.79% MRR)

├── requirements.txt

└── README.md

- Get images from the video

cd ./preprocessing

python extract_vdo_frms.py

- Get the background of the images

python generate_median.py

- Generate the video clips for the video recognition module

python create_video_clip.py

- Format the text input for the video recognition module

python create_vrm_data.py

- Crop the vehicle images for the vehicle color and type modules

python crop_vehicle_bbox.py

- Format the text input for the vehicle color and type modules

python create_vct_data.py
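The background step is run by `generate_median.py`. A common way to obtain a static background, and presumably what the script name refers to, is a per-pixel temporal median over a video's frames, which removes vehicles that occupy a pixel in fewer than half of the frames. The sketch below is a hypothetical NumPy illustration of that technique, not the repository's script; the function name and the `(T, H, W, C)` frame layout are assumptions.

```python
import numpy as np


def median_background(frames: np.ndarray) -> np.ndarray:
    """Estimate a static background from a stack of frames.

    frames: uint8 array of shape (T, H, W, C) (assumed layout).
    Returns an (H, W, C) uint8 image where each pixel is the median over time,
    so objects covering a pixel in fewer than half the frames disappear.
    """
    if frames.ndim != 4:
        raise ValueError("expected frames with shape (T, H, W, C)")
    return np.median(frames, axis=0).astype(np.uint8)


if __name__ == "__main__":
    # Hypothetical toy video: grey road, a white "car" moving one column per frame.
    T, H, W = 5, 4, 6
    video = np.full((T, H, W, 3), 100, dtype=np.uint8)
    for t in range(T):
        video[t, 1:3, t] = 255  # the car covers column t only in frame t
    bg = median_background(video)
    print(np.unique(bg))  # only the road value remains
```

For full-resolution videos, stacking every frame is memory-heavy; sampling a subset of frames (for example every n-th frame) before taking the median is a typical compromise.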

Video Recognition Module (baseline)

This part is modified from X-CLIP.

Please download the pretrained model here for testing and put it in `./model/vrm/ckpts/`.

cd ./model/vrm

sh ./scripts/train.sh # train

sh ./scripts/test.sh # test
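The video recognition module is an end-to-end text-video contrastive model adapted from X-CLIP. As a simplified, hypothetical illustration of the contrastive objective only (X-CLIP itself adds cross-frame communication and video-specific prompting, which are omitted here), the sketch below mean-pools per-frame embeddings into one video embedding and computes the symmetric InfoNCE loss over a batch of matched text-video pairs. The function names, embedding sizes, and the 0.07 temperature are assumptions.

```python
import numpy as np


def l2_normalize(x: np.ndarray) -> np.ndarray:
    return x / np.linalg.norm(x, axis=-1, keepdims=True)


def log_softmax(logits: np.ndarray, axis: int) -> np.ndarray:
    shifted = logits - logits.max(axis=axis, keepdims=True)  # numerical stability
    return shifted - np.log(np.exp(shifted).sum(axis=axis, keepdims=True))


def text_video_contrastive_loss(text_emb: np.ndarray, frame_emb: np.ndarray,
                                temperature: float = 0.07) -> float:
    """Symmetric InfoNCE loss over B matched (text, video) pairs.

    text_emb:  (B, D) text embeddings; pair i is (text i, video i)
    frame_emb: (B, T, D) per-frame embeddings of each video clip
    """
    video_emb = l2_normalize(frame_emb.mean(axis=1))  # mean-pool frames -> (B, D)
    text_emb = l2_normalize(text_emb)
    logits = text_emb @ video_emb.T / temperature      # (B, B) scaled cosine similarities
    idx = np.arange(logits.shape[0])
    loss_t2v = -log_softmax(logits, axis=1)[idx, idx].mean()  # each text vs. all videos
    loss_v2t = -log_softmax(logits, axis=0)[idx, idx].mean()  # each video vs. all texts
    return float((loss_t2v + loss_v2t) / 2)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    frames = rng.normal(size=(4, 8, 16))      # 4 clips, 8 frames, 16-dim features
    aligned_text = frames.mean(axis=1)         # texts that match their own clips
    shuffled_text = aligned_text[[1, 2, 3, 0]] # texts paired with the wrong clips
    print(text_video_contrastive_loss(aligned_text, frames))
    print(text_video_contrastive_loss(shuffled_text, frames))  # noticeably larger
```

At retrieval time the same cosine-similarity matrix (text queries against candidate tracks) is what gets ranked, so training with this loss directly shapes the ranking.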

Vehicle Color and Type Modules

This part is modified from Tip-Adapter.
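Tip-Adapter augments CLIP's zero-shot classifier with a training-free key-value cache built from few-shot image features: each test feature is compared with the cached keys, the affinities are sharpened with `exp(-beta * (1 - affinity))`, and the resulting label votes are added to CLIP's logits with weight `alpha`. The NumPy sketch below shows that computation on stand-in features; the class count, feature size, `alpha`, `beta`, and the logit scale of 100 (CLIP's usual value) are placeholders, not the settings in `vehicle_color_*.yaml` or `vehicle_type_*.yaml`.

```python
import numpy as np


def tip_adapter_logits(test_feat, cache_keys, cache_labels, text_weights,
                       alpha=1.0, beta=5.0, logit_scale=100.0):
    """Training-free Tip-Adapter logits.

    test_feat:    (M, C) L2-normalized CLIP image features of the test crops
    cache_keys:   (NK, C) L2-normalized CLIP features of the few-shot crops
    cache_labels: (NK,) integer class index of each few-shot crop
    text_weights: (N, C) L2-normalized CLIP text embeddings, one row per class
    """
    num_classes = text_weights.shape[0]
    cache_values = np.eye(num_classes)[cache_labels]                 # (NK, N) one-hot labels
    affinity = test_feat @ cache_keys.T                              # (M, NK) cosine similarity
    cache_logits = np.exp(-beta * (1.0 - affinity)) @ cache_values   # (M, N) cache votes
    clip_logits = logit_scale * test_feat @ text_weights.T           # (M, N) zero-shot CLIP
    return clip_logits + alpha * cache_logits


if __name__ == "__main__":
    # Stand-in features: 3 hypothetical classes with orthogonal 8-dim embeddings, 2 shots each.
    text_weights = np.eye(3, 8)
    cache_keys = np.repeat(text_weights, 2, axis=0)
    cache_labels = np.array([0, 0, 1, 1, 2, 2])
    logits = tip_adapter_logits(text_weights, cache_keys, cache_labels, text_weights)
    print(logits.argmax(axis=1))
```

Setting `alpha=0` recovers plain zero-shot CLIP, which makes it easy to measure how much the few-shot cache helps for vehicle color and type.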

cd ./model/vct

python train.py --config vehicle_color_train.yaml # vehicle color module train

python test.py --config vehicle_color_test.yaml # vehicle color module test

python train.py --config vehicle_type_train.yaml # vehicle type module train

python test.py --config vehicle_type_test.yaml # vehicle type module test

Vehicle Motion Module

cd ./model/vmm

python main.py # vehicle motion module
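How `main.py` derives maneuvers is not described here. One simple heuristic, shown only to illustrate the idea and not as the repository's method, compares the heading of the first and last few bounding-box centers of a track: a large clockwise change on screen suggests a right turn, a counter-clockwise one a left turn. The `(x, y, w, h)` box format, the thresholds, the labels, and all function names are hypothetical, and the sign convention assumes image coordinates with the y axis pointing down and a roughly overhead view.

```python
import math
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x, y, w, h) in pixels, hypothetical format


def box_centers(boxes: List[Box]) -> List[Tuple[float, float]]:
    return [(x + w / 2.0, y + h / 2.0) for x, y, w, h in boxes]


def heading_deg(p: Tuple[float, float], q: Tuple[float, float]) -> float:
    # Direction of travel from p to q in degrees; image y axis points down.
    return math.degrees(math.atan2(q[1] - p[1], q[0] - p[0]))


def classify_maneuver(boxes: List[Box], k: int = 3, turn_deg: float = 30.0,
                      min_move: float = 5.0) -> str:
    """Label a track as straight / left / right / stop from its box centers (heuristic)."""
    pts = box_centers(boxes)
    if len(pts) < 2 * k:
        return "unknown"                              # too short to judge
    if math.dist(pts[0], pts[-1]) < min_move:
        return "stop"                                 # barely moved overall
    start = heading_deg(pts[0], pts[k - 1])           # heading over the first k centers
    end = heading_deg(pts[-k], pts[-1])               # heading over the last k centers
    delta = (end - start + 180.0) % 360.0 - 180.0     # wrap to [-180, 180)
    if delta > turn_deg:
        return "right"   # positive change = clockwise on screen (y down)
    if delta < -turn_deg:
        return "left"
    return "straight"


if __name__ == "__main__":
    east = [(10.0 * i, 0.0, 0.0, 0.0) for i in range(5)]       # moving right on screen
    south = [(40.0, 10.0 * i, 0.0, 0.0) for i in range(1, 6)]  # then moving down
    print(classify_maneuver(east + south))
```

Such a heuristic is sensitive to camera perspective and tracking jitter, so a real module would likely smooth the trajectory or learn the decision from data.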

Vehicle Surrounding Module

This part is modified from GLIP.

cd ./model/vsm/branch1

python vsm1.py # vehicle surrounding module branch 1

cd ../branch2

python get_candidates.py # vehicle surrounding module branch 2

Run the following command to generate the final submitted result (81.79% MRR).

cd ./postprocessing

python mcs.py # match control system
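`postprocessing/matrix` holds the vrm, vct, vmm, and vsm score matrices that `mcs.py` combines into `final_results.json`; the actual matching and control logic lives in `mcs.py`. As a hypothetical illustration only, the sketch below fuses per-module query-by-track score matrices with a weighted sum and returns, for each query, the track IDs sorted by fused score. The weights, IDs, and in-memory matrix format are assumptions, and the output layout (a JSON object mapping each query ID to a ranked list of track IDs) is assumed to match the challenge's submission format.

```python
import json
from typing import Dict, List

import numpy as np


def fuse_and_rank(matrices: Dict[str, np.ndarray], weights: Dict[str, float],
                  query_ids: List[str], track_ids: List[str]) -> Dict[str, List[str]]:
    """Weighted-sum fusion of (num_queries, num_tracks) score matrices, then ranking."""
    fused = np.zeros((len(query_ids), len(track_ids)))
    for name, mat in matrices.items():
        if mat.shape != fused.shape:
            raise ValueError(f"{name} matrix has shape {mat.shape}, expected {fused.shape}")
        fused += weights.get(name, 0.0) * mat  # modules without a weight contribute nothing
    order = np.argsort(-fused, axis=1)         # highest fused score first
    return {q: [track_ids[j] for j in order[i]] for i, q in enumerate(query_ids)}


if __name__ == "__main__":
    # Hypothetical scores for 2 queries over 3 tracks from two modules.
    matrices = {
        "vrm": np.array([[0.9, 0.1, 0.5], [0.2, 0.8, 0.1]]),
        "vct": np.array([[0.0, 1.0, 0.0], [1.0, 0.0, 0.0]]),
    }
    weights = {"vrm": 1.0, "vct": 0.5}
    result = fuse_and_rank(matrices, weights, ["q1", "q2"], ["t1", "t2", "t3"])
    print(json.dumps(result, indent=2))
```

A weighted sum keeps each module's contribution interpretable and lets the weights be tuned on a validation split; a more centralized controller could instead use one module (for example vrm) as the primary ranking and let the others only re-rank or filter.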