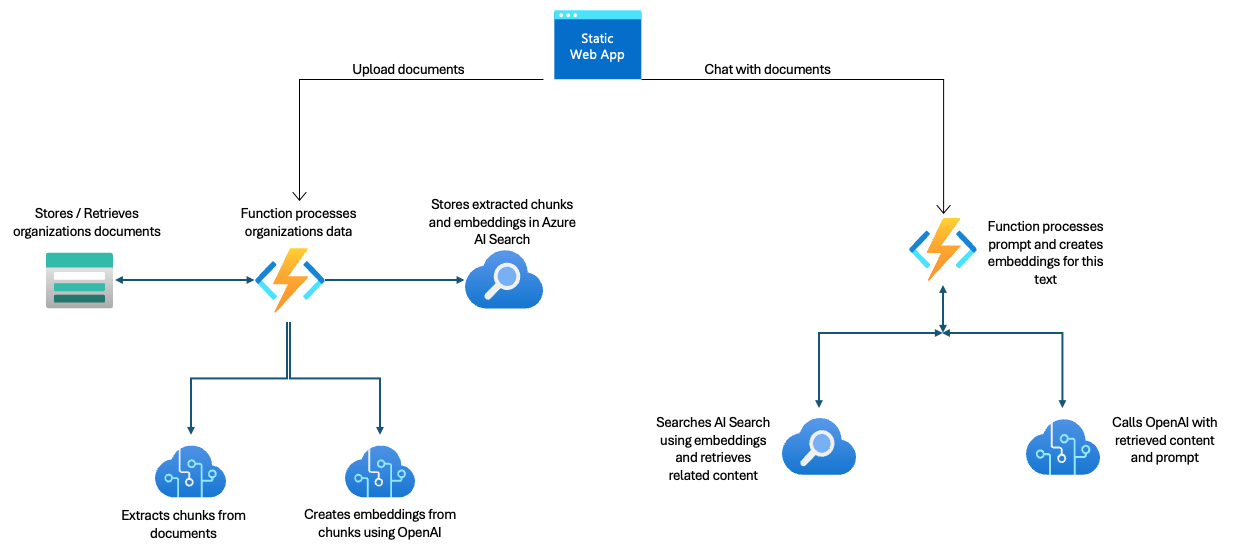

This demo is based on azure-search-openai-demo and uses a static web app for the frontend and Azure Functions for the backend APIs.

This solution uses the Azure Functions OpenAI triggers and bindings extension for the backend capabilities. It includes:

- Ability to upload text files from the UI - Delivered by the embeddings and semantic search output bindings

- Ask questions about the uploaded files - Enabled by the semantic search input binding

- Create a chat session and interact with the OpenAI deployed model - Uses the Assistant bindings to interact with the OpenAI model and stores chat history in Azure storage tables automatically

- In the chat session, ask the LLM to store reminders and then later retrieve them. This capability is delivered by the AssistantSkills trigger in the OpenAI extension for Azure Functions

- Create Azure Functions in different programming languages (e.g. C#, Node, Python, Java, PowerShell) and easily swap them using a config file

- Static web page is configured with Entra ID auth by default

IMPORTANT: In order to deploy and run this example, you'll need an Azure subscription with access enabled for the Azure OpenAI service. You can request access here. You can also visit here to get some free Azure credits to get you started.

AZURE RESOURCE COSTS: By default, this sample creates Azure App Service and Azure AI Search resources that have a monthly cost. To avoid this cost, you can switch each of them to its free version by changing the parameters file under the infra folder (though there are some limits to consider; for example, you can have at most 1 free AI Search resource per subscription).

- .NET 8 - `backend` Functions app is built using .NET 8

- Node.js - `frontend` is built in TypeScript

- Azure Functions Core Tools - Run and debug `backend` Functions locally

- Static Web Apps CLI - Run and debug `frontend` SWA locally

- Git

- Azure Developer CLI - Provision and deploy Azure resources

- PowerShell 7+ (pwsh) - For Windows users only.

  - Important: Ensure you can run `pwsh.exe` from a PowerShell command. If this fails, you likely need to upgrade PowerShell.

NOTE: Your Azure account must have `Microsoft.Authorization/roleAssignments/write` permissions, such as User Access Administrator or Owner.
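Before provisioning, it can help to confirm that the prerequisite tools listed above are on your `PATH`. The following is a minimal sketch, not part of the sample itself. The executable names (`dotnet`, `node`, `func`, `swa`, `git`, `azd`) are assumptions about how each tool is typically installed, and the function names are hypothetical.

```shell
#!/bin/sh
# Print the name of every given tool that is NOT found on PATH,
# one per line. Prints nothing when all tools are present.
missing_tools() {
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || printf '%s\n' "$tool"
  done
}

# Check the tools this sample lists as prerequisites.
# The executable names are assumptions: dotnet (.NET 8), node (Node.js),
# func (Azure Functions Core Tools), swa (Static Web Apps CLI),
# git, azd (Azure Developer CLI).
check_prerequisites() {
  missing=$(missing_tools dotnet node func swa git azd)
  if [ -n "$missing" ]; then
    echo "Missing tools:"
    echo "$missing"
    return 1
  fi
  echo "All prerequisite tools found."
}
```

After sourcing the file, run `check_prerequisites`. A non-zero exit status means at least one tool was not found. The check only covers presence, not versions, so it will not tell you whether your installed .NET is version 8 or whether PowerShell is 7+.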

This application requires resources like Azure OpenAI and Azure AI Search which must be provisioned in Azure even if the app is run locally. The following steps make it easy to provision, deploy and configure all resources.

If you don't have any pre-existing Azure services and want to start from a fresh deployment, execute the following commands in a new terminal.

- Run the following command to download the project code:

  `azd init -t https://github.com/Azure-Samples/Azure-Functions-OpenAI-Demo`

- Ensure your deployment scripts are executable (scripts are currently needed to help AZD deploy your app):

  Mac/Linux: `chmod +x ./scripts/deploy.sh`

  Windows: `set-executionpolicy remotesigned`

- Provision required Azure resources (e.g. Azure OpenAI and Azure Search) into a new environment:

  `azd up`

  NOTE: For the target location, the regions that currently support the models used in this sample are East US or South Central US. For an up-to-date list of regions and models, check here. Make sure that all the intended services for this deployment have availability in your targeted regions. Note also, it may take a minute for the application to be fully deployed.

- Navigate to the Azure Static Web App deployed in the previous step. The URL is printed out when azd completes (as "Endpoint"), or you can find it in the Azure portal.

The following steps let you override resource names and other values so you can leverage existing resources (e.g. provided by an admin or in a sandbox environment).

- Map configuration using Azure resources provided to you:

      azd env set AZURE_OPENAI_SERVICE <Name of existing OpenAI service>
      azd env set AZURE_OPENAI_RESOURCE_GROUP <Name of existing resource group with OpenAI resource>
      azd env set AZURE_OPENAI_CHATGPT_DEPLOYMENT <Name of existing ChatGPT deployment if not the default `chat`>
      azd env set AZURE_OPENAI_EMB_DEPLOYMENT <Name of existing Embedding deployment if not `embedding`>
      azd env set AZURE_SEARCH_ENDPOINT <Endpoint of existing Azure AI Search service, e.g. https://xx.search.windows.net>
      azd env set AZURE_SEARCH_INDEX <Name of Azure AI Search index if not `openai-index`>
      azd env set fileShare <name of storage file share if not `/mounts/openaifiles`>
      azd env set ServiceBusConnection__fullyQualifiedNamespace <Namespace of existing service bus namespace>
      azd env set ServiceBusQueueName <Name of service bus Queue>
      azd env set OpenAiStorageConnection <Connection string of storage account used by OpenAI extension (managed identity coming soon!)>
      azd env set AzureWebJobsStorage__accountName <Account name of storage account used by Function runtime>
      azd env set DEPLOYMENT_STORAGE_CONNECTION_STRING <Account name of storage account used by Function deployment>
      azd env set APPLICATIONINSIGHTS_CONNECTION_STRING <Connection for App Insights resource>

- Deploy all resources (provision any not specified):

  `azd up`

NOTE: You can also use existing Search and Storage Accounts. See `./infra/main.parameters.json` for the list of environment variables to pass to `azd env set` to configure those existing resources.
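If you have many values to map, typing each `azd env set` command by hand gets tedious. The sketch below reads `KEY=VALUE` lines from standard input and calls `azd env set` once per line. The function name `apply_env_file`, the input file format, and the `AZD_BIN` override (used only to dry-run the loop) are all hypothetical and not part of azd or this sample.

```shell
#!/bin/sh
# Read KEY=VALUE lines from stdin and run `azd env set KEY VALUE` for each.
# Blank lines, lines starting with '#', and lines without '=' are skipped.
# AZD_BIN is a hypothetical override (not an azd feature): set it to `echo`
# to print the arguments instead of calling azd.
apply_env_file() {
  while IFS= read -r line || [ -n "$line" ]; do
    case "$line" in
      ''|'#'*) continue ;;   # blank line or comment
      *=*) ;;                # looks like KEY=VALUE, handle below
      *) continue ;;         # no '=' present, ignore
    esac
    key=${line%%=*}          # text before the first '='
    value=${line#*=}         # text after the first '=' (may contain '=')
    # </dev/null keeps the command from consuming the loop's input.
    "${AZD_BIN:-azd}" env set "$key" "$value" </dev/null
  done
}
```

For example, with a file `existing-resources.env` (a hypothetical name) containing lines such as `AZURE_SEARCH_INDEX=openai-index`, run `AZD_BIN=echo apply_env_file < existing-resources.env` to preview the calls, then drop `AZD_BIN=echo` to apply them. Splitting on the first `=` only means connection strings that contain `=` are passed through intact.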

- To deploy again, simply run `azd up`.

Your frontend and backend apps can run on the local machine using storage emulators + remote AI resources.

- Initialize the Azure resources using one of the approaches above.

- Create a new `app/backend/local.settings.json` file to store Azure resource configuration, using the values in `.azure/[environment name]`:

      {
        "IsEncrypted": false,
        "Values": {
          "FUNCTIONS_WORKER_RUNTIME": "dotnet-isolated",
          "AZURE_OPENAI_ENDPOINT": "<Endpoint of existing OpenAI service, e.g. https://xx.openai.azure.com/>",
          "AZURE_OPENAI_CHATGPT_DEPLOYMENT": "chat",
          "AZURE_OPENAI_EMB_DEPLOYMENT": "embedding",
          "AZURE_SEARCH_ENDPOINT": "<Endpoint of existing Azure AI Search service, e.g. https://xx.search.windows.net>",
          "AZURE_SEARCH_INDEX": "openai-index",
          "fileShare": "<Local directory on file system, e.g c:\\temp or /tmp>",
          "ServiceBusConnection": "<Namespace of existing service bus namespace>",
          "ServiceBusQueueName": "<Name of service bus Queue>",
          "OpenAiStorageConnection": "UseDevelopmentStorage=true",
          "AzureWebJobsStorage": "UseDevelopmentStorage=true",
          "SYSTEM_PROMPT": "You are a helpful assistant. You are responding to requests from a user about internal emails and documents. You can and should refer to the internal documents to help respond to requests. If a user makes a request thats not covered by the documents provided in the query, you must say that you do not have access to the information and not try and get information from other places besides the documents provided. The following is a list of documents that you can refer to when answering questions. The documents are in the format [filename]: [text] and are separated by newlines. If you answer a question by referencing any of the documents, please cite the document in your answer. For example, if you answer a question by referencing info.txt, you should add \"Reference: info.txt\" to the end of your answer on a separate line."
        }
      }

- The OpenAI resource is set up for private endpoints only, so give it public access in order to reach it from your machine. Go to the networking tab on the OpenAI resource and change access to public.

- Add your account (e.g. contoso.microsoft.com) to the OpenAI resource with the following role:

  - Cognitive Services OpenAI User

- Add your account (e.g. contoso.microsoft.com) to the AI Search resource with the following roles:

  - Search Service Contributor

  - Search Index Data Contributor

- Start Azurite using the VS Code extension, or run this command in a new terminal window using Docker (optional):

      docker run -p 10000:10000 -p 10001:10001 -p 10002:10002 \
          mcr.microsoft.com/azure-storage/azurite

- Start the static web app and function app by running `swa start`

- Navigate to http://localhost:4280/
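A common cause of local startup problems is a `local.settings.json` that still contains template placeholders such as `<Name of service bus Queue>`. As a rough sketch, the helper below lists the settings whose value still starts with `<`. The function name `unfilled_settings` is hypothetical, and the pattern assumes one `"key": "value"` pair per line, as in the template from the steps above.

```shell
#!/bin/sh
# Print the name of each setting whose value still begins with "<",
# i.e. a placeholder that was never replaced with a real value.
# $1: path to local.settings.json
# Assumes one "key": "value" pair per line.
unfilled_settings() {
  sed -n 's/^[[:space:]]*"\([^"]*\)"[[:space:]]*:[[:space:]]*"<.*$/\1/p' "$1"
}
```

Running `unfilled_settings app/backend/local.settings.json` before `swa start` prints nothing when every placeholder has been replaced. This is a line-based text match rather than a real JSON parse, so it would miss placeholders in a file that has been reformatted onto a single line.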

- Upload .txt files on the Upload screen. Content is provided in the `./sample_content` folder.

- In the Ask screen, ask questions about uploaded data, e.g. `are eye exams covered?`

- Explore the search indexes (e.g. `openai-index`) in the Azure AI Search resource to inspect vector embeddings created by the Upload step

- In the Chat screen, try follow-up questions, clarifications, ask to simplify or elaborate on an answer, etc.

- In the Chat screen, try skilling assistants by saying `create a todo to get a haircut` and `fetch me list of todos`.

- Explore citations and sources

- Click on "settings" to try different options, tweak prompts, etc.

- Primary Repo - azure-search-openai-demo

- Revolutionize your Enterprise Data with ChatGPT: Next-gen Apps w/ Azure OpenAI and AI Search

- Azure AI Search

- Azure OpenAI Service

- Azure Role-based-access-control

To remove your data from Azure Static Web Apps, go to https://identity.azurestaticapps.net/.auth/purge/aad

The following command deletes and purges all resources (this cannot be undone!):

`azd down --purge`

Currently only text files are supported.

Go to Application Insights and open the Live metrics view to see real-time telemetry information. Optionally, go to Application Insights, select Logs, and view the traces table, or view Transaction Search.

If no functions load, double-check that you get no errors on `azd up` (e.g. a script error with no permission to execute). Also, if `azd package` or `azd up` fails with the error `Can't determine Project to build. Expected 1 .csproj or .fsproj but found 2`, delete the `/app/backend/bin` and `/app/backend/obj` folders and try to deploy again with `azd package` or `azd up`.
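The folder cleanup described above can be wrapped in a small helper. This is only a sketch: the function name `clean_build_output` is hypothetical, and the guard is an added safety measure so that an empty argument can never expand to `/bin` or `/obj`.

```shell
#!/bin/sh
# Remove stale build output (bin and obj) from a project folder.
# $1: path to the backend project folder (app/backend in this sample).
clean_build_output() {
  # Refuse to run without an existing folder, so an empty argument
  # can never turn into "rm -rf /bin /obj".
  if [ -z "$1" ] || [ ! -d "$1" ]; then
    echo "usage: clean_build_output <backend-folder>" >&2
    return 1
  fi
  rm -rf "$1/bin" "$1/obj"
}
```

From the repository root, `clean_build_output app/backend` removes both folders and leaves the rest of the project untouched. Afterwards, retry with `azd package` or `azd up`.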