By Tao Hu, Lichao Huang, Han Shen.

This is the official implementation of the paper SATA (Real Time Visual Tracking using Spatial-Aware Temporal Aggregation Network).

The code will be made publicly available shortly.

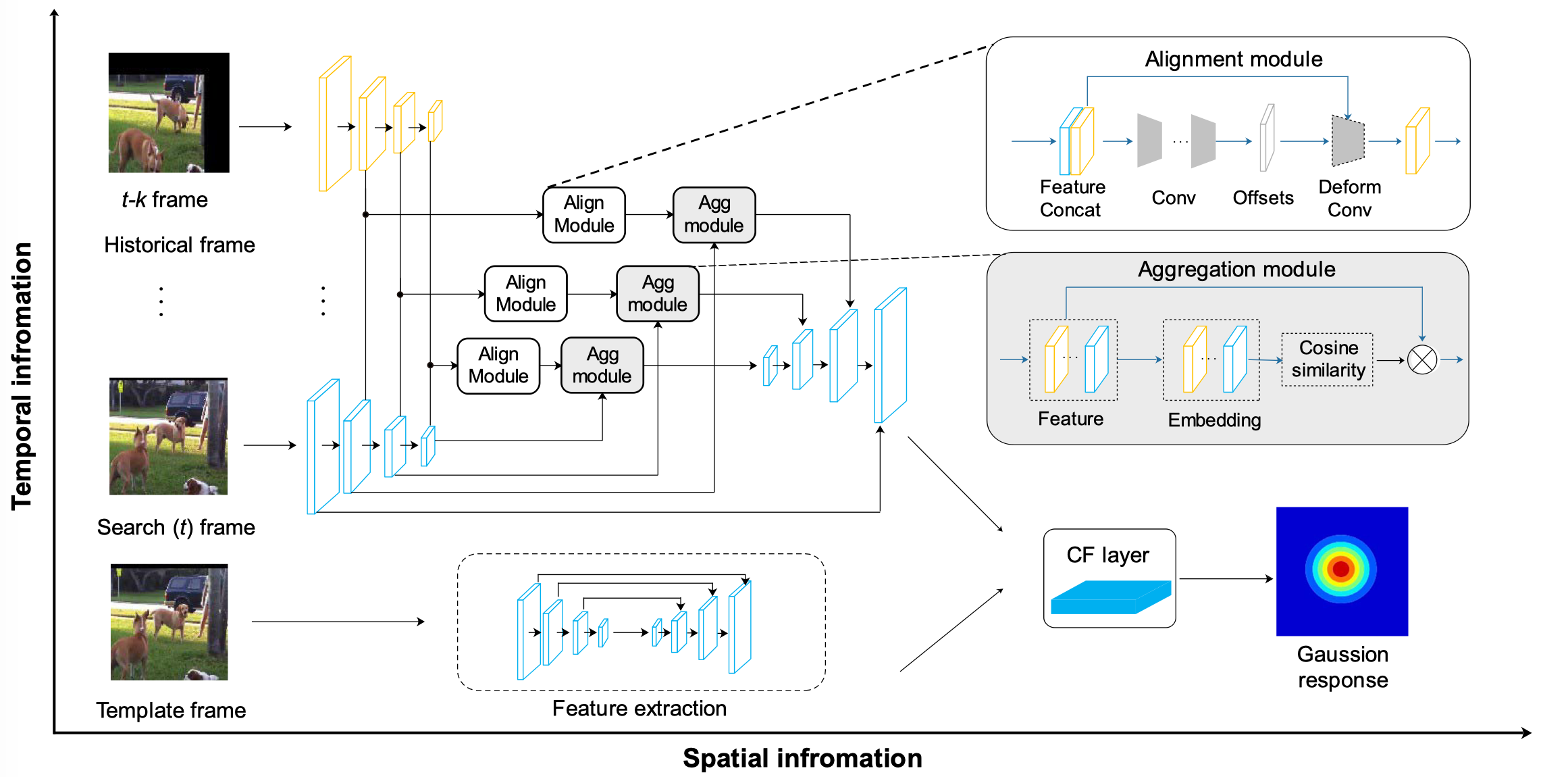

SATA obtains significant improvements through feature aggregation.

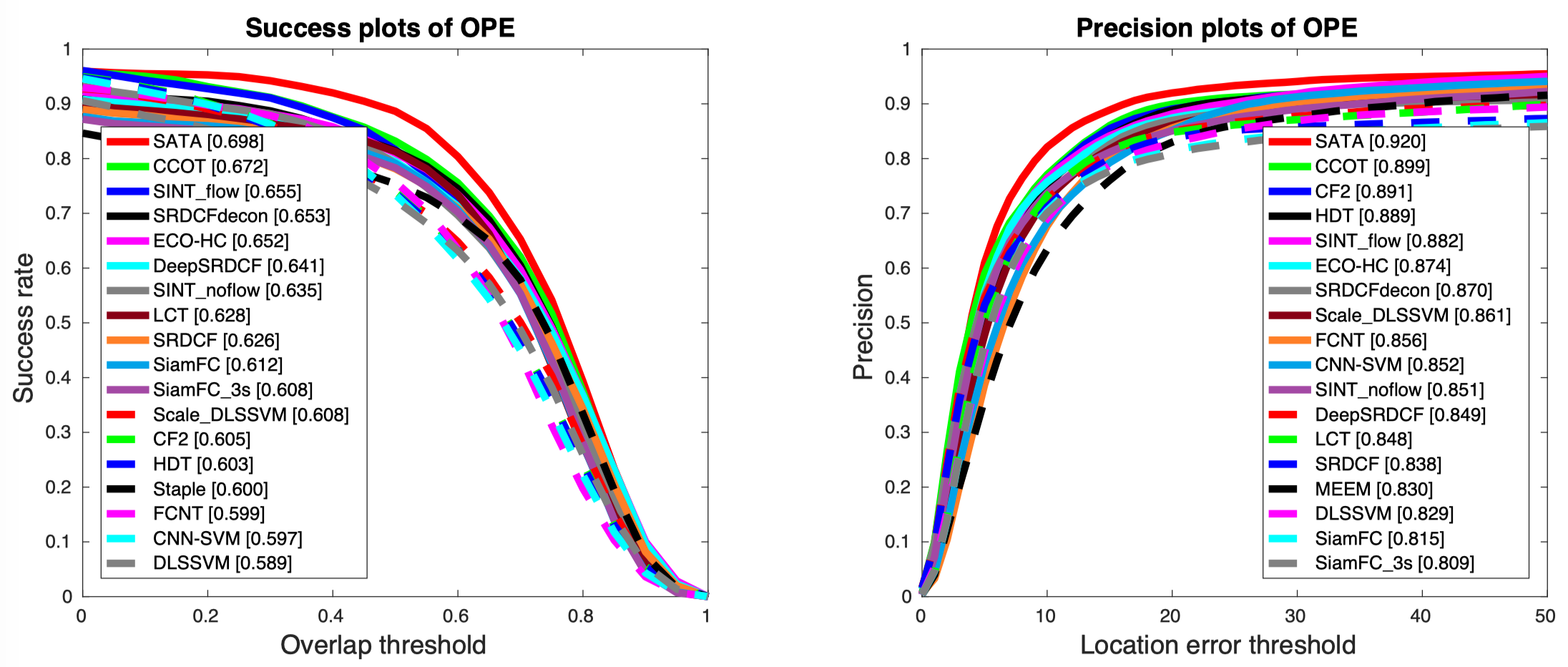

The OPE/TRE/SRE results on OTB are available on Google Drive.

OTB2013:

OTB2015:

Note:

- The results are better than those reported in the paper because we modified some hyper-parameters.

- The results may differ slightly depending on the running environment.
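For readers new to the OTB protocol: under OPE (one-pass evaluation) the tracker is initialized once with the ground-truth box of the first frame and run to the end of the sequence, and results are summarized by the area under the success plot. The sketch below computes that number for a single sequence. It is a simplified illustration, not the evaluation code used for the results above: it assumes boxes in (x, y, w, h) format, uses continuous-coordinate IoU and 21 evenly spaced thresholds, and may differ in small details (such as pixel conventions) from the official OTB toolkit.

```python
from typing import List, Sequence

Box = Sequence[float]  # (x, y, w, h): top-left corner plus width and height


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes in (x, y, w, h) format."""
    ix = max(0.0, min(a[0] + a[2], b[0] + b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[1] + a[3], b[1] + b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0


def success_auc(pred: List[Box], gt: List[Box], steps: int = 21) -> float:
    """Area under the OPE success plot for one sequence.

    The success rate at threshold t is the fraction of frames whose IoU is
    strictly greater than t. Thresholds are evenly spaced on [0, 1]
    (0, 0.05, ..., 1 for steps=21) and the AUC is their mean success rate.
    """
    if len(pred) != len(gt) or not gt:
        raise ValueError("pred and gt must be non-empty and the same length")
    overlaps = [iou(p, g) for p, g in zip(pred, gt)]
    thresholds = [i / (steps - 1) for i in range(steps)]
    rates = [sum(o > t for o in overlaps) / len(overlaps) for t in thresholds]
    return sum(rates) / len(rates)
```

Because the comparison is strict, even a perfect tracker scores 0 at the threshold 1.0, so its AUC with 21 thresholds is 20/21 rather than 1.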

Environment requirements:

- Linux.

- Python 3.5+.

- PyTorch 1.3 or higher.

- CUDA 9.0 or higher.

- NCCL 2 or higher if you want to use distributed training.
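A minimal sketch for checking an environment against the minimum versions listed above. The helpers parse_version and meets_minimum are hypothetical and not part of this repository; the script only compares version strings and does not check NCCL.

```python
import re
import sys
from typing import Tuple


def parse_version(version: str) -> Tuple[int, ...]:
    """Turn a version string such as '1.3.0+cu92' into (1, 3, 0).

    The local suffix after '+' (here the CUDA tag) is dropped. Each dotted
    component contributes its leading digits, and parsing stops at the first
    component that has none.
    """
    public = version.split("+", 1)[0]
    parts = []
    for piece in public.split("."):
        match = re.match(r"\d+", piece)
        if match is None:
            break
        parts.append(int(match.group()))
    return tuple(parts)


def meets_minimum(version: str, minimum: Tuple[int, ...]) -> bool:
    """True if `version` is at least `minimum`, e.g. (1, 3) for PyTorch 1.3."""
    return parse_version(version) >= minimum


if __name__ == "__main__":
    print("Python >= 3.5:", sys.version_info >= (3, 5))
    try:
        import torch  # only needed for the live check below
    except ImportError:
        print("PyTorch is not installed")
    else:
        print("PyTorch", torch.__version__, ">= 1.3:",
              meets_minimum(str(torch.__version__), (1, 3)))
        print("CUDA version PyTorch was built with:", torch.version.cuda)
```

Comparing integer tuples rather than raw strings matters here: as strings, "1.10" sorts before "1.3", while (1, 10) correctly compares greater than (1, 3).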

Data preparation:

Download the ILSVRC2015 VID dataset from ImageNet, extract the files, and put them in a directory of your choice.

After the download, link the dataset into the data directory of this repository:

    cd data
    ln -s your/path/to/data/ILSVRC2015 ./ILSVRC2015

Split the training and validation sets. For example, I use MOT17-09 as the validation set and the other videos as the training set. The resulting directory layout looks like this:

    ./ILSVRC2015
    ├── Annotations
    │   └── VID
    │       ├── a -> ./ILSVRC2015_VID_train_0000
    │       ├── b -> ./ILSVRC2015_VID_train_0001
    │       ├── c -> ./ILSVRC2015_VID_train_0002
    │       ├── d -> ./ILSVRC2015_VID_train_0003
    │       ├── e -> ./val
    │       ├── ILSVRC2015_VID_train_0000
    │       ├── ILSVRC2015_VID_train_0001
    │       ├── ILSVRC2015_VID_train_0002
    │       ├── ILSVRC2015_VID_train_0003
    │       └── val
    ├── Data
    │   └── VID    (same layout as Annotations/VID)
    └── ImageSets
        └── VID
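The a to e aliases in the tree above are plain symlinks, so they can be created with a short script. This is a sketch that assumes the four training folders and val already sit inside both Annotations/VID and Data/VID; the function make_split_links and the SPLIT_LINKS table are hypothetical, and the training code may expect details this sketch does not cover.

```python
import os
from pathlib import Path
from typing import List

# Hypothetical alias table copied from the directory tree: alias -> real folder.
SPLIT_LINKS = {
    "a": "ILSVRC2015_VID_train_0000",
    "b": "ILSVRC2015_VID_train_0001",
    "c": "ILSVRC2015_VID_train_0002",
    "d": "ILSVRC2015_VID_train_0003",
    "e": "val",
}


def make_split_links(root: str) -> List[str]:
    """Create the a-e aliases in Annotations/VID and Data/VID under `root`.

    Each alias is a relative symlink (e.g. a -> ILSVRC2015_VID_train_0000), so it
    keeps working if the whole ILSVRC2015 folder is moved. Aliases that already
    exist are skipped. Returns the paths of the links created on this call.
    """
    created = []
    for sub in ("Annotations", "Data"):
        base = Path(root) / sub / "VID"
        for alias, target in sorted(SPLIT_LINKS.items()):
            if not (base / target).is_dir():
                raise FileNotFoundError("missing folder: {}".format(base / target))
            link = base / alias
            if link.is_symlink() or link.exists():
                continue  # leave existing aliases alone
            os.symlink(target, str(link), target_is_directory=True)
            created.append(str(link))
    return created
```

For example, make_split_links("./data/ILSVRC2015") run from the repository root would create ten links (five per subfolder) and return an empty list on any later call.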

Download the pretrained ResNet-50 backbone weights and put them in ./pretrainmodel:

    mkdir -p ./pretrainmodel
    cd ./pretrainmodel
    wget https://download.pytorch.org/models/resnet50-19c8e357.pth

Install the other dependencies. Our environment uses PyTorch 1.3.0+cu92 and torchvision 0.4.1+cu92.

    pip install -r requirements.txt

Training with multiple GPUs (e.g. 4 GPUs), run from the repository root:

    cd ./experiments/train_tsr
    CUDA_VISIBLE_DEVICES=0,1,2,3 python ../../scripts/train.py --cfg config.yaml
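CUDA_VISIBLE_DEVICES in the training command controls which GPUs the process can see, and CUDA renumbers the visible devices from 0 in the listed order. The hypothetical helper below (not part of this repository) only illustrates that mapping. It assumes a comma-separated list of integer ids; note that these ids follow CUDA's enumeration, which can differ from the nvidia-smi order unless CUDA_DEVICE_ORDER=PCI_BUS_ID is set.

```python
import os
from typing import Dict, Optional


def logical_to_physical(value: Optional[str]) -> Optional[Dict[int, int]]:
    """Map the index PyTorch uses (cuda:i) to the GPU id listed in CUDA_VISIBLE_DEVICES.

    `value` is the CUDA_VISIBLE_DEVICES string. None means the variable is unset,
    in which case every GPU is visible under its own id and no remapping happens.
    This sketch only handles integer ids, not GPU UUIDs.
    """
    if value is None:
        return None
    ids = [int(tok) for tok in value.split(",") if tok.strip()]
    return dict(enumerate(ids))


if __name__ == "__main__":
    print(logical_to_physical(os.environ.get("CUDA_VISIBLE_DEVICES")))
```

With CUDA_VISIBLE_DEVICES=0,1,2,3 the mapping is the identity over four devices; launching with CUDA_VISIBLE_DEVICES=2,3 instead would expose two GPUs that the process sees as cuda:0 and cuda:1.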

Testing, run from the repository root:

    cd ./experiments/test_tsr
    python ../../scripts/test.py --cfg config.yaml

The work was mainly done during an internship at Horizon Robotics.

Please consider citing our paper in your publications if this project helps your research. The BibTeX reference is as follows:

    @article{hu2019real,
      title={Real Time Visual Tracking using Spatial-Aware Temporal Aggregation Network},
      author={Hu, Tao and Huang, Lichao and Liu, Xianming and Shen, Han},
      journal={arXiv preprint arXiv:1908.00692},
      year={2019}
    }

For academic use, this project is licensed under the 2-clause BSD License - see the LICENSE file for details. For commercial use, please contact the authors.