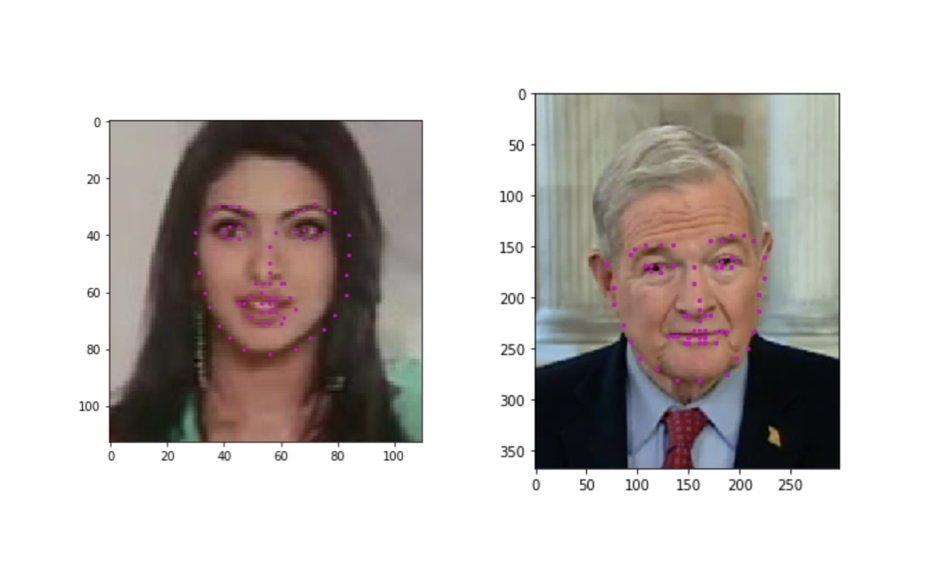

Notebook 1 : Loading and Visualizing the Facial Keypoint Data

Notebook 2 : Defining and Training a Convolutional Neural Network (CNN) to Predict Facial Keypoints

Notebook 3 : Facial Keypoint Detection Using Haar Cascades and your Trained CNN

Notebook 4 : Fun Filters and Keypoint Uses

- Clone the repository, and navigate to the downloaded folder. This may take a minute or two to clone due to the included image data.

git clone https://github.com/udacity/P1_Facial_Keypoints.git

cd P1_Facial_Keypoints

- Create (and activate) a new environment named `cv-nd` with Python 3.6. If prompted to proceed with the install (`Proceed [y]/n`), type y.

- Linux or Mac:

conda create -n cv-nd python=3.6

source activate cv-nd

- Windows:

conda create --name cv-nd python=3.6

activate cv-nd

At this point your command line should look something like `(cv-nd) <User>:P1_Facial_Keypoints <user>$`. The `(cv-nd)` prefix indicates that your environment has been activated, and you can proceed with further package installations.
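To double-check the activation from inside Python, you could run a small sketch like the one below with the environment's interpreter. It assumes that conda exports the `CONDA_DEFAULT_ENV` variable on activation (holding the environment's name, or in some setups its full path) and uses the `cv-nd`/Python 3.6 targets from the step above. The helper name `check_environment` is hypothetical and not part of the project.

```python
import os
import sys


def check_environment(expected_env="cv-nd", expected_python=(3, 6), environ=None):
    """Return a list of problems with the active environment (empty if none).

    Assumes conda sets CONDA_DEFAULT_ENV on activation. The value may be
    either the environment name or its full path, so only the last path
    component is compared.
    """
    environ = os.environ if environ is None else environ
    problems = []

    active = environ.get("CONDA_DEFAULT_ENV", "")
    # Normalize a possible full path such as /opt/conda/envs/cv-nd to "cv-nd".
    active_name = os.path.basename(active.rstrip("/\\"))
    if active_name != expected_env:
        problems.append(
            "active conda env is {!r}, expected {!r}".format(active_name, expected_env)
        )

    # Compare only the major.minor version, e.g. (3, 6).
    if tuple(sys.version_info[:2]) != tuple(expected_python):
        problems.append(
            "Python is {}.{}, expected {}.{}".format(
                sys.version_info[0], sys.version_info[1], *expected_python
            )
        )
    return problems


if __name__ == "__main__":
    issues = check_environment()
    print("Environment looks OK" if not issues else "\n".join(issues))
```

An empty list means both the environment name and the Python version match; otherwise each entry describes one mismatch.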

- Install PyTorch and torchvision; this should install the latest version of PyTorch.

- Linux or Mac:

conda install pytorch torchvision -c pytorch

- Windows:

conda install pytorch-cpu -c pytorch

pip install torchvision

- Install a few required pip packages, which are specified in the requirements text file (including OpenCV).

pip install -r requirements.txt
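Once the installs finish, a quick way to confirm that the key packages are visible to the active interpreter is a check like the sketch below. It uses `importlib.util.find_spec` from the standard library, which locates a top-level module without importing it. The list of import names is an assumption: `torch` and `torchvision` come from the PyTorch step, and `cv2` is OpenCV's Python import name; other entries in `requirements.txt` are not checked. The helper name `missing_packages` is hypothetical.

```python
import importlib.util


def missing_packages(import_names=("torch", "torchvision", "cv2")):
    """Return the import names that cannot be found in the active environment.

    find_spec only locates each top-level module; it does not import it, so a
    package that is found could still fail at import time (e.g. a broken
    binary), but a missing one is reported reliably.
    """
    return [name for name in import_names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_packages()
    if missing:
        print("Missing packages:", ", ".join(missing))
    else:
        print("torch, torchvision and cv2 were all found")
```

If anything is reported as missing, re-run the corresponding install command with the `cv-nd` environment activated.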

All of the data you'll need to train a neural network is in the P1_Facial_Keypoints repo, in the subdirectory `data`. This folder contains training and test sets of image/keypoint data, along with their respective CSV files. These will be explored further in Notebook 1 (Loading and Visualizing the Facial Keypoint Data), and you're encouraged to look through these folders on your own, too.
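For a quick look before opening Notebook 1, a standard-library sketch like the following lists the CSV files at the top level of `data` and counts the image files in each subfolder. The image extensions it counts and the helper name `summarize_data_dir` are assumptions, not part of the project; run it from the repository root.

```python
import os

# Assumed image extensions; adjust if the dataset uses other formats.
IMAGE_EXTS = (".jpg", ".jpeg", ".png")


def summarize_data_dir(data_dir="data"):
    """List CSV files directly under data_dir and count images per subfolder.

    Returns {"csv_files": [...], "image_counts": {subfolder: count}}.
    """
    entries = sorted(os.listdir(data_dir))
    csv_files = [
        e for e in entries
        if e.lower().endswith(".csv") and os.path.isfile(os.path.join(data_dir, e))
    ]
    image_counts = {}
    for entry in entries:
        path = os.path.join(data_dir, entry)
        if os.path.isdir(path):
            # Count only files whose extension looks like an image.
            image_counts[entry] = sum(
                1 for f in os.listdir(path) if f.lower().endswith(IMAGE_EXTS)
            )
    return {"csv_files": csv_files, "image_counts": image_counts}


if __name__ == "__main__":
    if os.path.isdir("data"):
        print(summarize_data_dir("data"))
    else:
        print("No 'data' folder here; run this from the repository root.")
```

The CSV names it prints are the files that pair each image with its keypoints; Notebook 1 shows how to load them properly.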

- Navigate back to the repo. (Your `cv-nd` environment should still be activated at this point.) The two commands below assume you cloned the repository into your home directory; adjust the path if you cloned it elsewhere.

cd

cd P1_Facial_Keypoints

- Open the directory of notebooks using the command below. All of the project files will appear in your local Jupyter session; open the first notebook and follow the instructions.

jupyter notebook

LICENSE: This project is licensed under the terms of the MIT license.