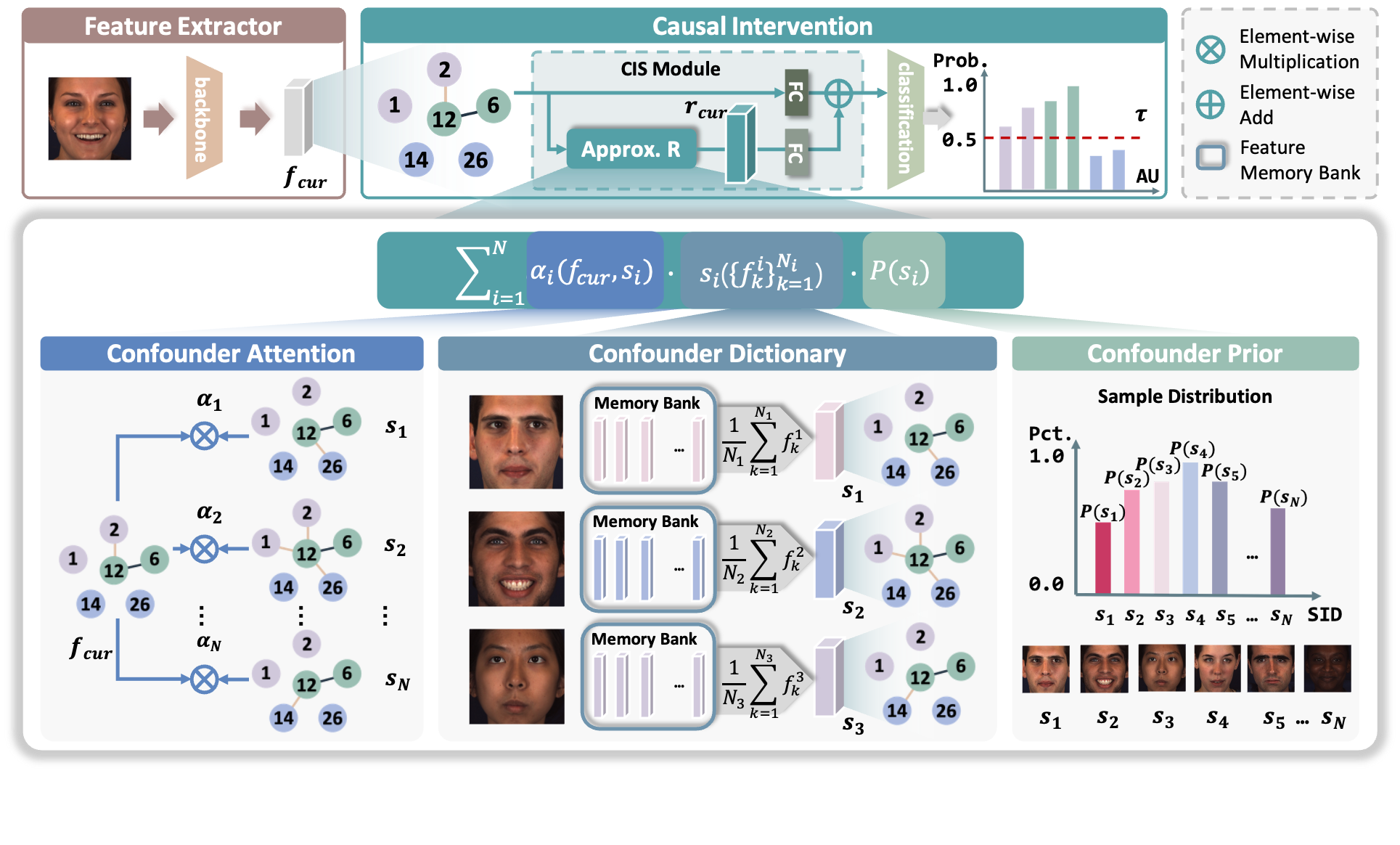

Implementation of the paper "Causal Intervention for Subject-Deconfounded Facial Action Unit Recognition" (AAAI 2022).

$ git clone https://github.com/echoanran/CIS.git $INSTALL_DIR

Requirements:

- python >= 3.6

- torch >= 1.1.0

- the packages listed in requirements.txt
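To confirm that an environment meets the minimum versions above, a small check like the following may help. It is a hypothetical helper script (version_tuple, meets_minimum and check_environment are not part of the repository), and it compares only the leading numeric part of a version string, so local build tags such as "+cu113" are ignored.

```python
import re
import sys

MIN_PYTHON = (3, 6)
MIN_TORCH = (1, 1, 0)


def version_tuple(version):
    """Parse the leading numeric part of a version string, e.g. '1.10.2+cu113' -> (1, 10, 2)."""
    match = re.match(r"\d+(?:\.\d+)*", version)
    if match is None:
        raise ValueError("unparseable version: {!r}".format(version))
    return tuple(int(part) for part in match.group(0).split("."))


def meets_minimum(version, minimum):
    """True if version >= minimum, treating missing trailing parts as 0."""
    current = version_tuple(version)
    width = max(len(current), len(minimum))

    def pad(t):
        return tuple(t) + (0,) * (width - len(t))

    return pad(current) >= pad(minimum)


def check_environment():
    """Return a list of problems; an empty list means the minimums are met."""
    problems = []
    if sys.version_info[:2] < MIN_PYTHON:
        problems.append("python {} < 3.6".format(sys.version.split()[0]))
    try:
        import torch
    except ImportError:
        problems.append("torch is not installed")
    else:
        if not meets_minimum(str(torch.__version__), MIN_TORCH):
            problems.append("torch {} < 1.1.0".format(torch.__version__))
    return problems


if __name__ == "__main__":
    issues = check_environment()
    print("OK" if not issues else "\n".join(issues))
```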

$ pip install -r requirements.txt

- torchlight (included in this repository; install it from source):

$ cd $INSTALL_DIR/torchlight

$ python setup.py install

First, request access to the two AU benchmark datasets:

Preprocess the downloaded datasets using Dlib:

- Detect face and facial landmarks

- Align the cropped faces according to the computed coordinates of eye centers

- Resize faces to (256, 256)
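The alignment step can be sketched as follows. This is a minimal sketch, not the repository's preprocessing code: it assumes Dlib's 68-point landmark model (points 36-41 and 42-47 outline the two eyes), and the output eye spacing and eye height (eye_dist_ratio, eye_y_ratio) are hypothetical choices. It only builds the 2x3 affine matrix that rotates the eye line to horizontal, scales the face, and places the eyes inside a 256 x 256 crop; the matrix can then be handed to an image-warping routine such as OpenCV's cv2.warpAffine(image, matrix, (256, 256)), which performs rotation, scaling and resizing in one step.

```python
import numpy as np

# Assumed Dlib 68-point convention: indices 36-41 and 42-47 outline the two eyes.
EYE_A = slice(36, 42)  # eye on the image-left side
EYE_B = slice(42, 48)  # eye on the image-right side


def eye_centers(landmarks):
    """Mean position of each eye's six landmarks; landmarks has shape (68, 2) as (x, y)."""
    pts = np.asarray(landmarks, dtype=np.float64)
    return pts[EYE_A].mean(axis=0), pts[EYE_B].mean(axis=0)


def alignment_matrix(landmarks, out_size=256, eye_dist_ratio=0.35, eye_y_ratio=0.4):
    """2x3 affine matrix that levels the eye line and maps the eyes into an out_size crop.

    eye_dist_ratio and eye_y_ratio are hypothetical layout parameters.
    """
    left, right = eye_centers(landmarks)
    dx, dy = right - left
    angle = np.arctan2(dy, dx)                          # tilt of the eye line
    scale = eye_dist_ratio * out_size / np.hypot(dx, dy)
    c, s = np.cos(angle) * scale, np.sin(angle) * scale
    rot_scale = np.array([[c, s], [-s, c]])             # rotate by -angle, then scale
    eye_mid = (left + right) / 2.0
    target = np.array([out_size / 2.0, eye_y_ratio * out_size])
    shift = target - rot_scale @ eye_mid                 # send the eye midpoint to target
    return np.hstack([rot_scale, shift[:, None]])


def apply_affine(matrix, points):
    """Apply a 2x3 affine matrix to an (N, 2) array of points."""
    points = np.asarray(points, dtype=np.float64)
    return points @ matrix[:, :2].T + matrix[:, 2]
```

After this transform both eye centers share the same y coordinate, so every face in the dataset ends up with the same eye position and spacing.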

Randomly split the subject IDs into 3 folds.
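One way to produce the three folds is sketched below, assuming the subject IDs are available as a list of strings; split_subjects and train_test_subjects are hypothetical helpers, and the seed only makes the split reproducible. Because the split is made over subjects rather than frames, no subject appears in more than one fold, so training and test subjects stay disjoint.

```python
import random


def split_subjects(subject_ids, num_folds=3, seed=0):
    """Randomly partition subject IDs into num_folds disjoint folds."""
    ids = sorted(set(subject_ids))      # dedupe and fix the order so only the seed matters
    random.Random(seed).shuffle(ids)
    # Round-robin assignment: fold sizes differ by at most one
    return [ids[i::num_folds] for i in range(num_folds)]


def train_test_subjects(folds, test_fold):
    """Use one fold for testing and the remaining folds for training."""
    test = list(folds[test_fold])
    train = [sid for i, fold in enumerate(folds) if i != test_fold for sid in fold]
    return train, test


if __name__ == "__main__":
    subjects = ["S{:02d}".format(i) for i in range(1, 42)]  # hypothetical IDs
    folds = split_subjects(subjects)
    for k in range(3):
        train, test = train_test_subjects(folds, k)
        print(k, len(train), len(test))
```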

Our dataloader $INSTALL_DIR/feeder/feeder_image_causal.py requires two data files (an example is given in $INSTALL_DIR/data/bp4d_example):

- label_path: path to the file containing the labels (a '.pkl' file), with shape [N, 1, num_class]
- image_path: path to the file containing the image paths (a '.pkl' file), with shape [N, 1]
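The following sketch writes and reads back the two files in the shapes listed above. It assumes each '.pkl' file holds a single NumPy array, with labels given as per-frame binary AU occurrence vectors; the exact object type that feeder_image_causal.py expects should be checked against $INSTALL_DIR/data/bp4d_example. write_feeder_files and load_feeder_files are hypothetical helper names.

```python
import os
import pickle
import tempfile

import numpy as np


def write_feeder_files(labels, image_paths, label_path, image_path):
    """Pickle labels as [N, 1, num_class] and image paths as [N, 1] (assumed format)."""
    labels = np.asarray(labels)
    if labels.ndim != 2:
        raise ValueError("labels must have shape [N, num_class]")
    n, num_class = labels.shape
    if len(image_paths) != n:
        raise ValueError("need one image path per label row")
    with open(label_path, "wb") as f:
        pickle.dump(labels.reshape(n, 1, num_class), f)
    with open(image_path, "wb") as f:
        pickle.dump(np.asarray(image_paths, dtype=object).reshape(n, 1), f)


def load_feeder_files(label_path, image_path):
    """Load both files and check that their shapes agree."""
    with open(label_path, "rb") as f:
        labels = np.asarray(pickle.load(f))
    with open(image_path, "rb") as f:
        paths = np.asarray(pickle.load(f), dtype=object)
    if labels.ndim != 3 or labels.shape[1] != 1:
        raise ValueError("expected labels of shape [N, 1, num_class]")
    if paths.shape != (labels.shape[0], 1):
        raise ValueError("expected image paths of shape [N, 1]")
    return labels, paths


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        lp, ip = os.path.join(tmp, "label.pkl"), os.path.join(tmp, "image.pkl")
        # Two hypothetical frames with three AUs each (1 = AU present)
        write_feeder_files([[1, 0, 1], [0, 0, 1]], ["a.jpg", "b.jpg"], lp, ip)
        labels, paths = load_feeder_files(lp, ip)
        print(labels.shape, paths.shape)
```

Row i of the label file and row i of the image-path file describe the same frame, which is why the loader rejects files whose N dimensions disagree.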

$ cd $INSTALL_DIR

$ python run-cisnet.py

Please cite our paper if you use this code:

    @inproceedings{yingjie2022,
      title={Causal Intervention for Subject-Deconfounded Facial Action Unit Recognition},
      author={Chen, Yingjie and Chen, Diqi and Wang, Tao and Wang, Yizhou and Liang, Yun},
      booktitle={AAAI},
      year={2022}
    }