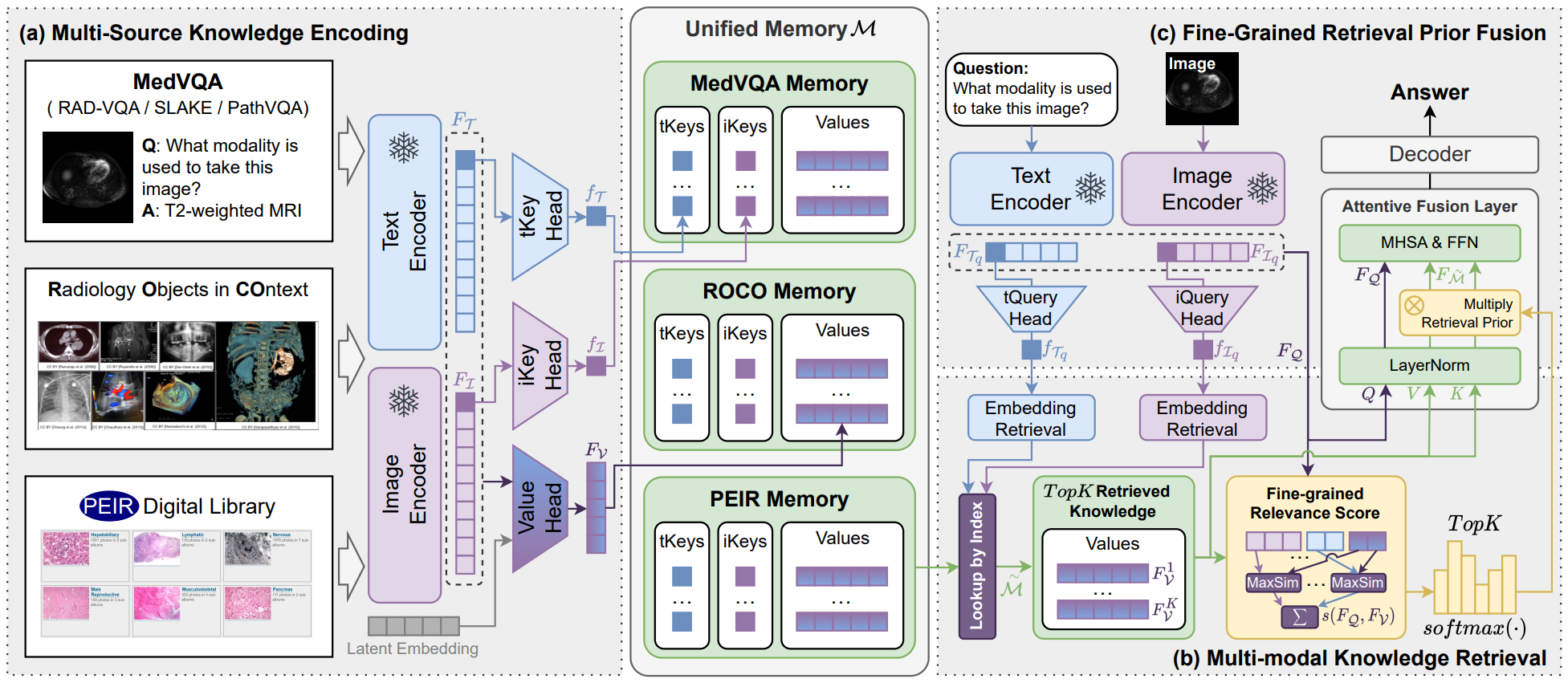

Fine-Grained Re-Weighting (FGRW) is an online retrieval-augmented framework for Medical Visual Question Answering. It encodes multi-source knowledge at a fine-grained level and re-calculates relevance scores between queries and knowledge. These scores serve as supervised priors that guide the fusion of queries and knowledge and reduce interference from redundant information when answering questions.
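To make the re-weighting idea concrete, here is a minimal sketch, not the FGRW implementation. It assumes the query and each retrieved knowledge item are already encoded as plain float vectors, uses cosine similarity as the relevance score, and fuses by adding a softmax-weighted knowledge vector to the query. The function names, the `temperature` parameter, and the additive fusion are all illustrative assumptions; see the paper for the actual scoring and fusion.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def reweight_and_fuse(query, knowledge, temperature=0.1):
    """Hypothetical helper: score each knowledge vector against the query,
    turn the scores into softmax weights, and fuse the weighted knowledge
    with the query by simple addition."""
    if not knowledge:
        return [], list(query)

    # Relevance score of each knowledge item with respect to the query.
    scores = [cosine(query, k) for k in knowledge]

    # Numerically stable softmax; a low temperature suppresses weakly related items.
    top = max(scores)
    exps = [math.exp((s - top) / temperature) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]

    # Weighted sum of knowledge vectors, then additive fusion with the query.
    pooled = [sum(w * k[i] for w, k in zip(weights, knowledge)) for i in range(len(query))]
    fused = [q + p for q, p in zip(query, pooled)]
    return weights, fused
```

With a query of `[1.0, 0.0]` and two knowledge vectors `[1.0, 0.0]` and `[0.0, 1.0]`, almost all of the weight goes to the first item, so the unrelated second item contributes very little to the fused vector.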

The Pathology Education Informational Resource (PEIR) Digital Library, a public multidisciplinary image database for medical education, provided us with 30k image-text pairs, serving as an additional multi-modal knowledge base.

conda create -n fgrr python=3.8

conda activate fgrr

pip install -r requirements.txt

We test our model on:

External knowledge bases beyond PEIR.

Coming Soon.

| Dataset | Source | Feature | Annotation |
|---|---|---|---|
| VQA-RAD | . | . | . |
| SLAKE | . | . | . |
| PathVQA | . | . | . |
| PEIR | . | . | . |
| ROCO | . | . | . |

Coming Soon.

Note: The first time you run this, it will take time to load knowledge features from ./Annotations/memory/memo_list.json into the temporary folder ./temp/. Please be patient.

bash trainval_pathvqa.sh
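The slow first run noted above is a build-once, reuse-later cache. The sketch below shows that general pattern only and is not the repo's loader. It assumes the source file is a JSON list of entries; the cache file name `knowledge_features.pkl`, the function `load_knowledge_features`, and the `encode` callback are hypothetical.

```python
import json
import os
import pickle


def load_knowledge_features(source_json, cache_dir, encode):
    """Hypothetical helper: return cached features if present,
    otherwise build them from the JSON file and cache the result."""
    os.makedirs(cache_dir, exist_ok=True)
    cache_path = os.path.join(cache_dir, "knowledge_features.pkl")

    # Fast path: a previous run already wrote the features to disk.
    if os.path.exists(cache_path):
        with open(cache_path, "rb") as f:
            return pickle.load(f)

    # Slow path (first run): read every entry and encode it.
    with open(source_json, "r", encoding="utf-8") as f:
        entries = json.load(f)  # assumed to be a JSON list
    features = [encode(entry) for entry in entries]

    # Persist the result so later runs skip the encoding step.
    with open(cache_path, "wb") as f:
        pickle.dump(features, f)
    return features
```

Under this pattern, deleting the cache folder forces the features to be rebuilt on the next run, which is useful after the source annotations change.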

We also build on the excellent BioMedCLIP repo, as well as the repos of the baselines we examined (see paper).

If you find this paper useful, please consider starring 🌟 this repo and citing 📑 our paper: