Azure Batch is a service that enables you to run batch processes on high-performance computing (HPC) clusters composed of Azure virtual machines (VMs). Batch processes are ideal for handling computationally intensive tasks that can run unattended, such as photorealistic rendering and computational fluid dynamics. Azure Batch uses VM scale sets to scale up and down and to prevent you from paying for VMs that aren't being used. It also supports autoscaling, which, if enabled, allows Batch to scale up as needed to handle massively complex workloads.

Azure Batch involves three important concepts: storage, pools, and jobs. Storage is implemented through Azure Storage, and is where data input and output are stored. Pools are composed of compute nodes. Each pool has one or more VMs, and each VM has one or more CPUs. Jobs contain the scripts that process the information in storage and write the results back out to storage. Jobs themselves are composed of one or more tasks. Tasks can be run one at a time or in parallel.

Batch Shipyard is an open-source toolkit that allows Dockerized workloads to be deployed to Azure Batch compute pools. Dockerized workloads use Docker containers rather than VMs. (Containers are hosted in VMs but typically require fewer VMs because one VM can host multiple container instances.) Containers start faster and use fewer resources than VMs and are generally more cost-efficient. For more information, see https://docs.microsoft.com/en-us/azure/virtual-machines/windows/containers.

The workflow for using Batch Shipyard with Azure Batch is pictured below. After creating a Batch account and configuring Batch Shipyard to use it, you upload input files to storage and use Batch Shipyard to create Batch pools. Then you use Batch Shipyard to create and run jobs against those pools. The jobs themselves use tasks to read data from storage, process it, and write the results back to storage.

Azure Batch Shipyard workflow

In this hands-on lab, you will learn how to:

- Create an Azure Batch account

- Configure Batch Shipyard to use the Batch account

- Create a pool and run a job on that pool

- View the results of the job

- Use the Azure Portal to remove the Batch account

The following is required to complete this hands-on lab:

- An active Microsoft Azure subscription. If you don't have one, sign up for a free trial.

Click here to download a zip file containing the resources used in this lab. Copy the contents of the zip file into a folder on your hard disk.

This hands-on lab includes the following exercises:

- Azure Batch Service with Batch Shipyard

Estimated time to complete this lab: 60 minutes.

Azure Batch accounts can be created through the Azure Portal. In this exercise, you will create a Batch account and a storage account to go with it. This is the storage account that will be used for job input and output.

-

Open the Azure Portal in your browser. If you are asked to log in, do so using your Microsoft account.

-

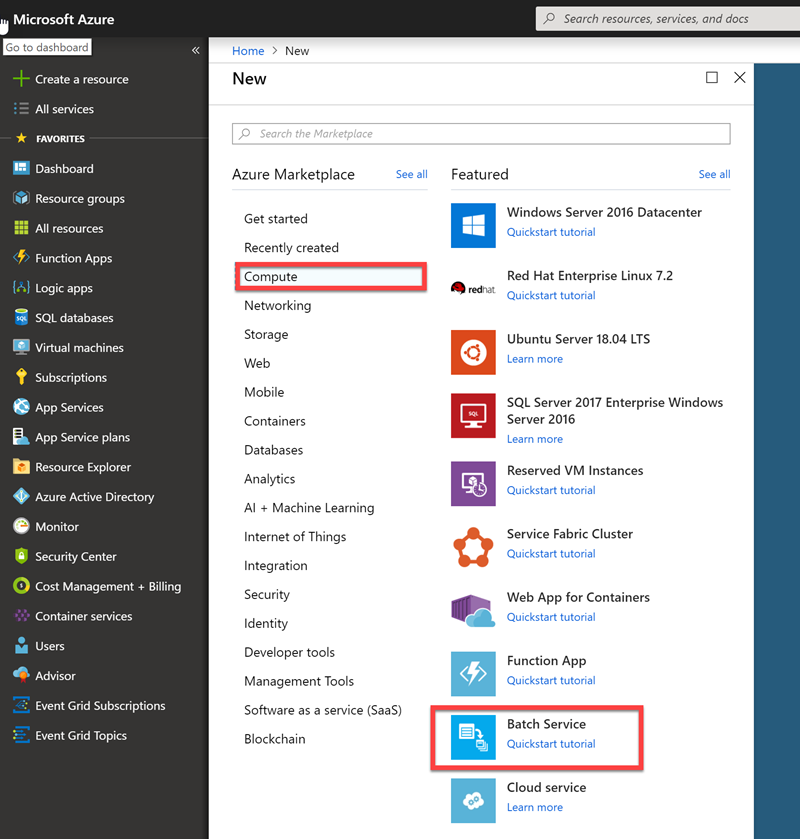

Click + Create a resource, followed by Compute and Batch Service.

Creating a Batch service

-

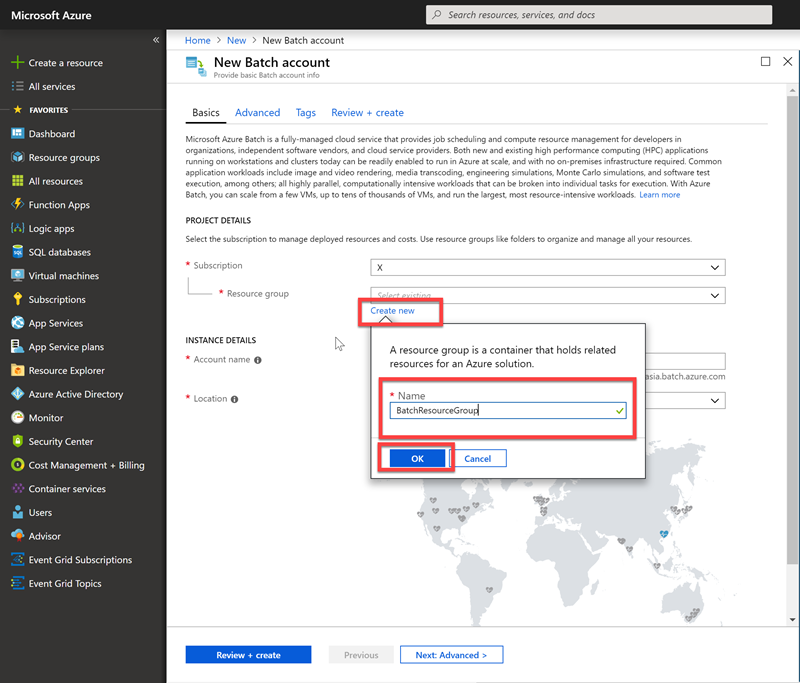

In the "New Batch account" blade, select Create new under Resource group and name the resource group "BatchResourceGroup."

-

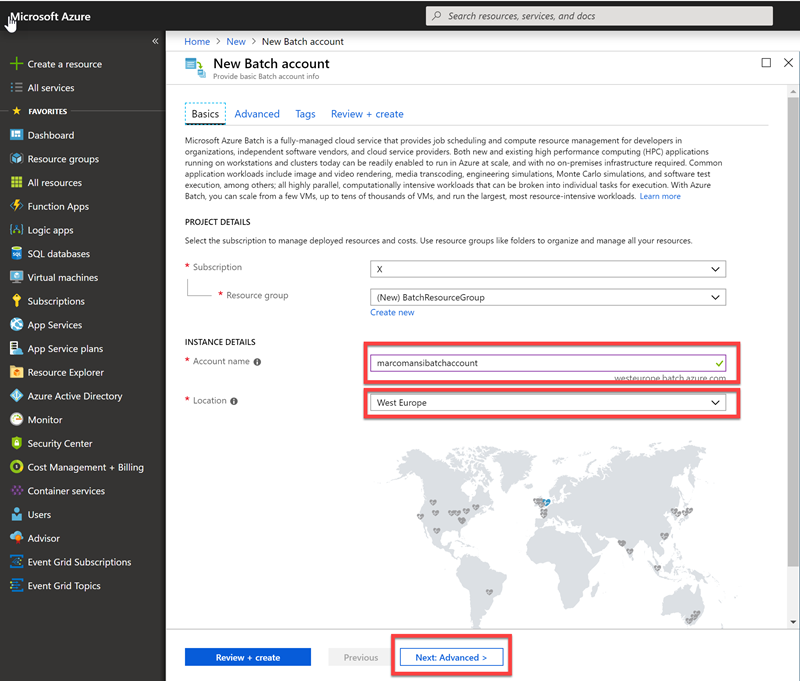

Give the account a unique name such as "batchservicelab" and make sure a green check mark appears next to it. (You can only use numbers and lowercase letters since the name becomes part of a DNS name.) Select the Location nearest you, and then click Next: Advanced.

Entering Batch account parameters

-

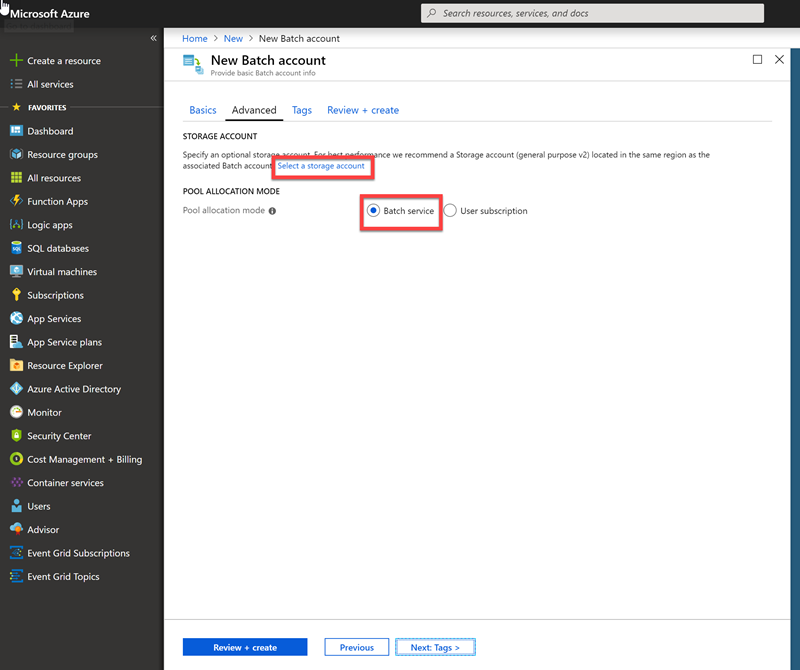

Click Select a Storage Account. Leave Pool Allocation Mode set to Batch Service.

Entering Batch account parameters

-

Click Create new to create a new storage account for the Batch service. Enter a unique name for the storage account and make sure a green check mark appears next to it. Then set Account Kind to StorageV2 (general purpose v2), Replication to Locally-redundant Storage (LRS) and click OK at the bottom of the blade.

Storage account names can be 3 to 24 characters in length and can only contain numbers and lowercase letters. In addition, the name you enter must be unique within Azure; if someone else has chosen the same name, you'll be notified that the name isn't available with a red exclamation mark in the Name field.

Creating a new storage account

-

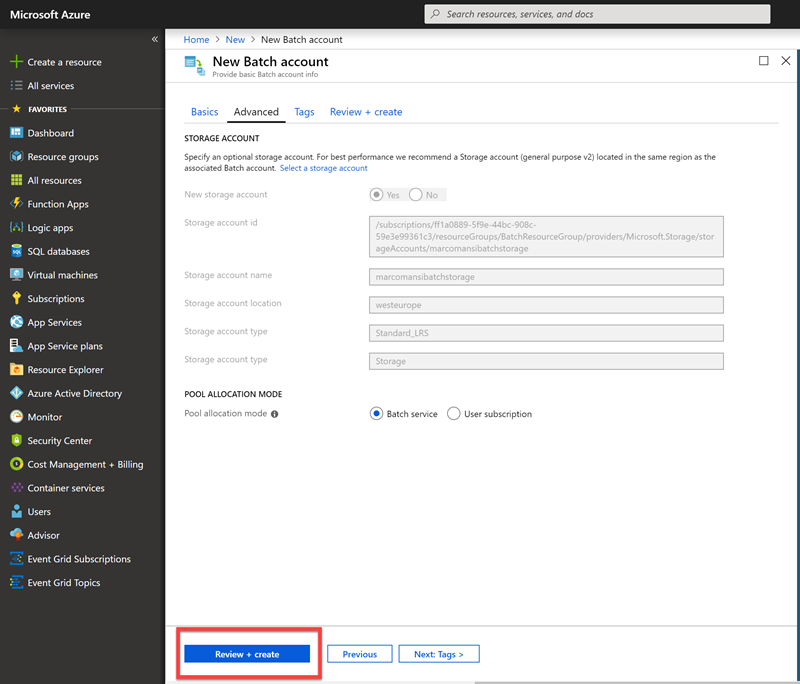

Click the Review + create button at the bottom of the "New Batch account" blade.

Creating a new storage account

-

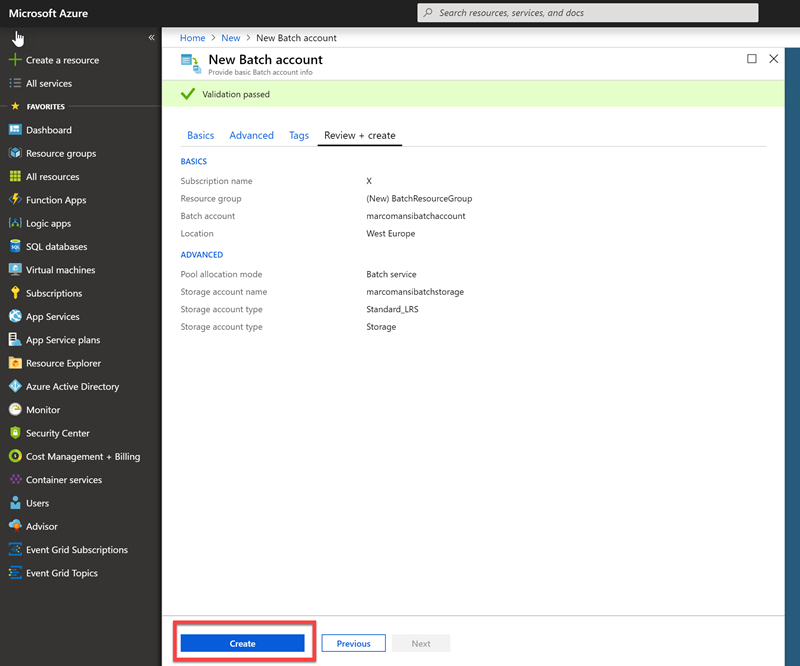

Click the Create button at the bottom of the "New Batch account" blade to start the deployment.

Creating a Batch account

-

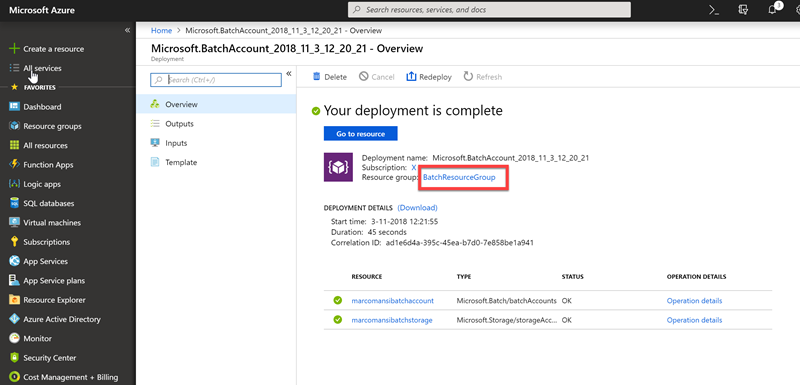

Wait until the "Deploying" status changes to "OK", indicating that the Batch account and the storage account have been deployed. You can click the Refresh button at the top of the blade to refresh the deployment status.

Viewing the deployment status

-

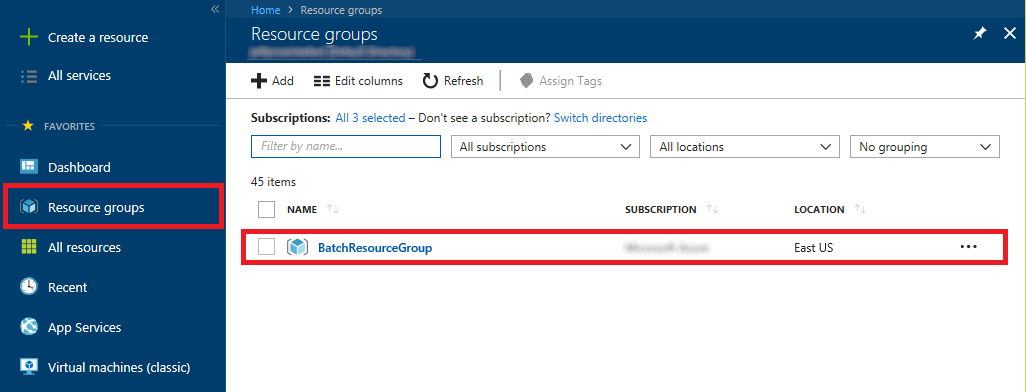

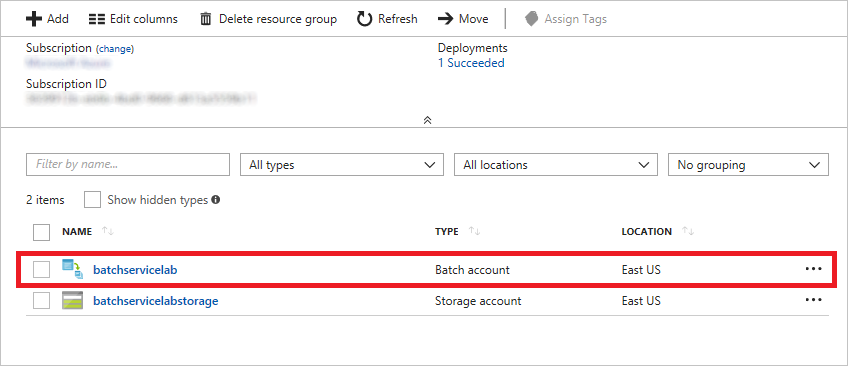

Click Resource groups in the ribbon on the left side of the portal, and then click the resource group created for the Batch account.

Opening the resource group
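The portal steps in this exercise can also be scripted. The following is a minimal sketch using the Azure CLI, which is available in Cloud Shell. The helper names `is_valid_account_name` and `create_batch_resources` are hypothetical, and applying the storage-account naming rule (3 to 24 lowercase letters and digits) to the Batch account name as well is an assumption made for simplicity.

```shell
# Succeeds if the name has 3 to 24 characters, all lowercase letters or digits.
is_valid_account_name() {
    printf '%s\n' "$1" | grep -Eq '^[a-z0-9]{3,24}$'
}

# Hypothetical helper: creates the resource group, an LRS general purpose v2
# storage account, and a Batch account linked to that storage account.
# Usage: create_batch_resources <resource-group> <batch-account> <storage-account> <location>
create_batch_resources() {
    rg="$1"; batch="$2"; storage="$3"; location="$4"

    # Reject names that would fail the naming rule before calling Azure.
    for name in "$batch" "$storage"; do
        if ! is_valid_account_name "$name"; then
            echo "invalid account name: $name" >&2
            return 1
        fi
    done

    az group create --name "$rg" --location "$location" &&
    az storage account create --name "$storage" --resource-group "$rg" \
        --location "$location" --sku Standard_LRS --kind StorageV2 &&
    az batch account create --name "$batch" --resource-group "$rg" \
        --location "$location" --storage-account "$storage"
}
```

A call might look like `create_batch_resources BatchResourceGroup batchservicelab <your-storage-name> westeurope`, with the names and location replaced by your own values.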

Batch Shipyard uses YAML files named config.yaml, pool.yaml, jobs.yaml, and credentials.yaml to configure the environment. These four files, the Dockerfiles used to build Docker images, the files referenced in the Dockerfiles, and a readme.md file define a Batch Shipyard "recipe."

Each of the configuration files in a recipe configures one element of Batch Shipyard. config.yaml contains configuration settings for the Batch Shipyard environment. pool.yaml contains definitions for the compute pools, including the VM size and the number of VMs per pool. jobs.yaml contains the job definition and the tasks that are part of the job. credentials.yaml holds information regarding the Batch account and the storage account, including the keys used to access them.
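Before editing a recipe, it can help to confirm that all four configuration files are present. The sketch below assumes they live together in one directory (the commands in this lab pass `--configdir config`); the function name `check_recipe_config` is hypothetical.

```shell
# Prints every expected configuration file that is missing from the directory.
# Returns non-zero if at least one file is missing.
# Usage: check_recipe_config <config-directory>
check_recipe_config() {
    dir="$1"
    missing=0
    for f in config.yaml pool.yaml jobs.yaml credentials.yaml; do
        if [ ! -f "$dir/$f" ]; then
            echo "missing: $dir/$f"
            missing=1
        fi
    done
    return "$missing"
}
```

For example, `check_recipe_config config` run from the recipe folder prints nothing when the recipe is complete.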

In this exercise, you will modify credentials.yaml and jobs.yaml so they can be used in a Batch job. Rather than create a Dockerfile, you will use one that has been created for you. To learn more about Dockerfiles, refer to https://docs.docker.com/engine/getstarted/step_four/.

-

In the Cloud Shell (using Bash), create a directory called "dutchazuremeetup" and change to it:

mkdir dutchazuremeetup && cd dutchazuremeetup

-

Now clone this repository into the directory:

git clone https://github.com/DutchAzureMeetup/SmackYourBatchUp.git

-

Go to the "SmackYourBatchUp" folder, then to the "resources" folder inside it, and then to the "recipe" folder.

-

Open the Cloud Shell Editor (don't forget the dot at the end):

code .

-

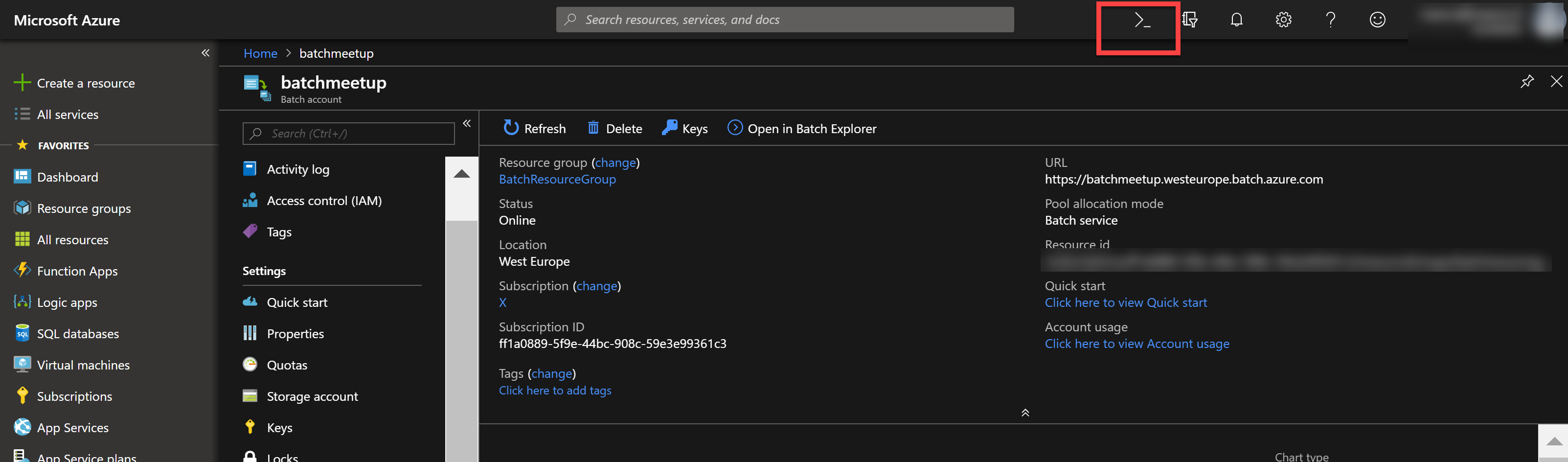

In the Azure Portal, go to the "BatchResourceGroup" resource group created for the Batch account in Exercise 1. In the resource group, click the Batch account.

Opening the batch account

-

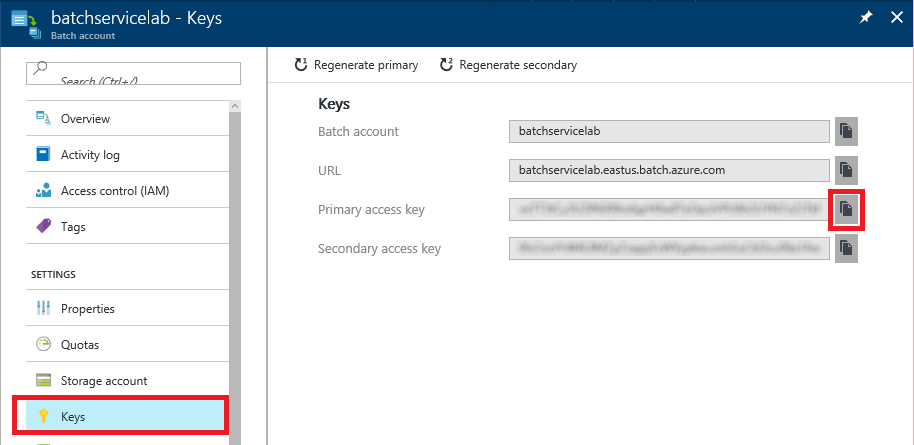

Click Keys, and then click the Copy button next to the Primary access key field.

Copying the Batch account key

-

Return to credentials.yaml and replace BATCH_ACCOUNT_KEY with the key that is on the clipboard.

-

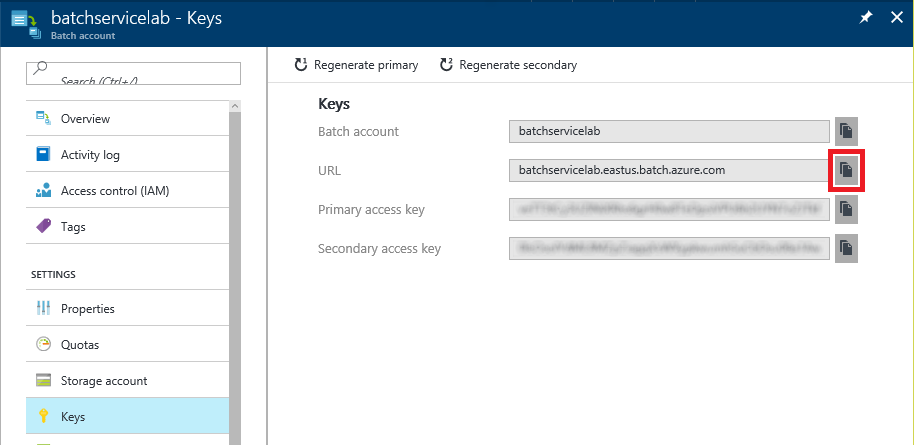

In the blade for the Batch account, click the Copy button next to the URL field.

Copying the Batch account URL

-

In credentials.yaml, replace BATCH_ACCOUNT_URL with the URL that is on the clipboard, leaving "https://" in place at the beginning of the URL. The "batch" section of credentials.yaml should now look something like this:

    batch:
      account_key: ghS8vZrI+5TvmcdRoILz...7XBuvRIA6HFzCaMsPTsXToKdQtWeg==
      account_service_url: "https://batchservicelab.westeurope.batch.azure.com"

-

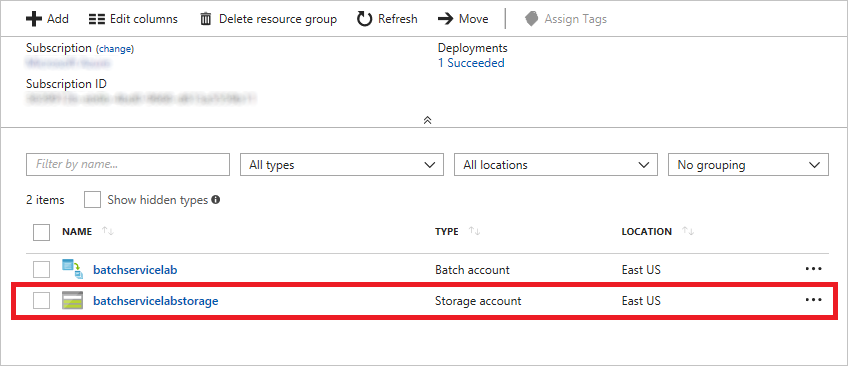

In the Azure Portal, return to the "BatchResourceGroup" resource group and click the storage account in that resource group.

Opening the storage account

-

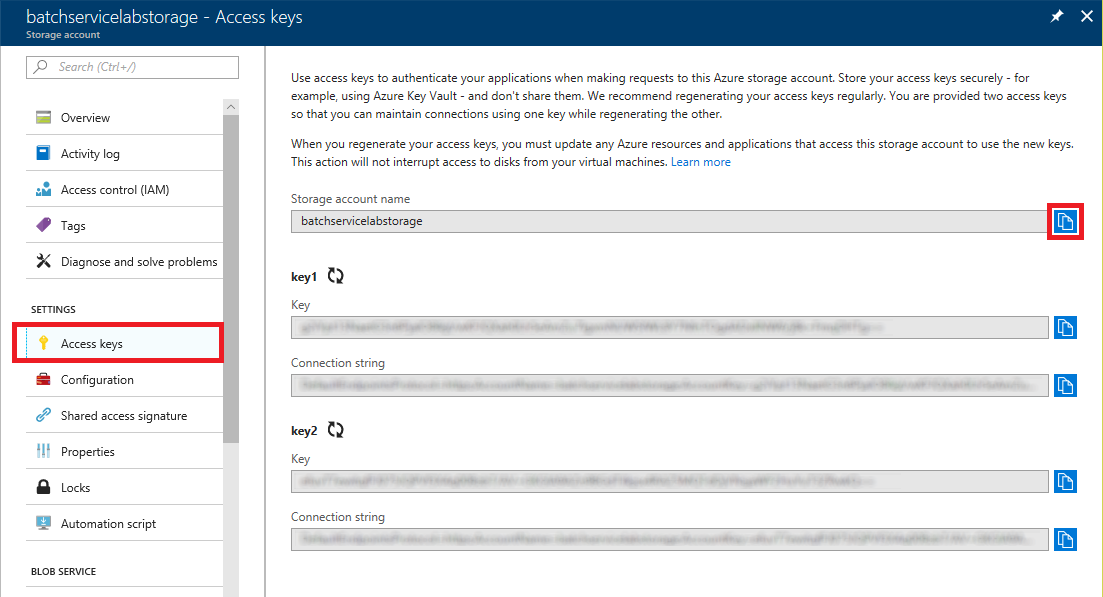

Click Access keys, and then click the Copy button next to the Storage account name field.

Copying the storage account name

-

In credentials.yaml, replace STORAGE_ACCOUNT_NAME with the storage-account name that is on the clipboard.

-

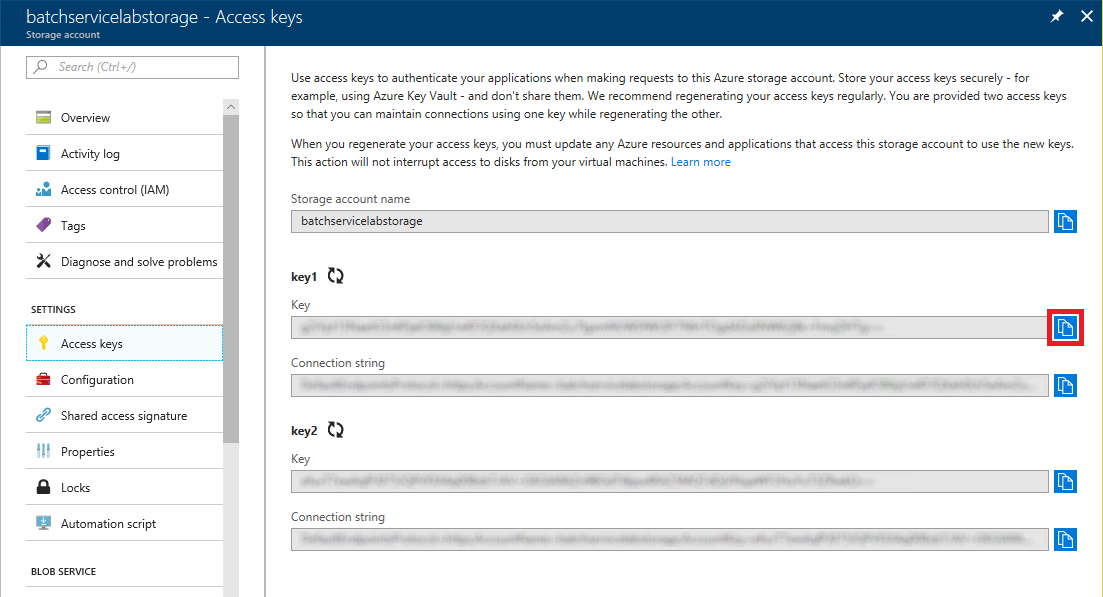

Return to the portal and copy the storage account's access key to the clipboard.

Copying the storage account key

-

In credentials.yaml, replace STORAGE_ACCOUNT_KEY with the key that is on the clipboard, and then save (CTRL + S) the file. The "storage" section of credentials.yaml should now look something like this:

    storage:
      mystorageaccount:
        account: batchservicelabstorage
        account_key: YuTLwG3nuaQqezl/rhEkT...Xrs8+UZrxr+TFdzA==
        endpoint: core.windows.net

-

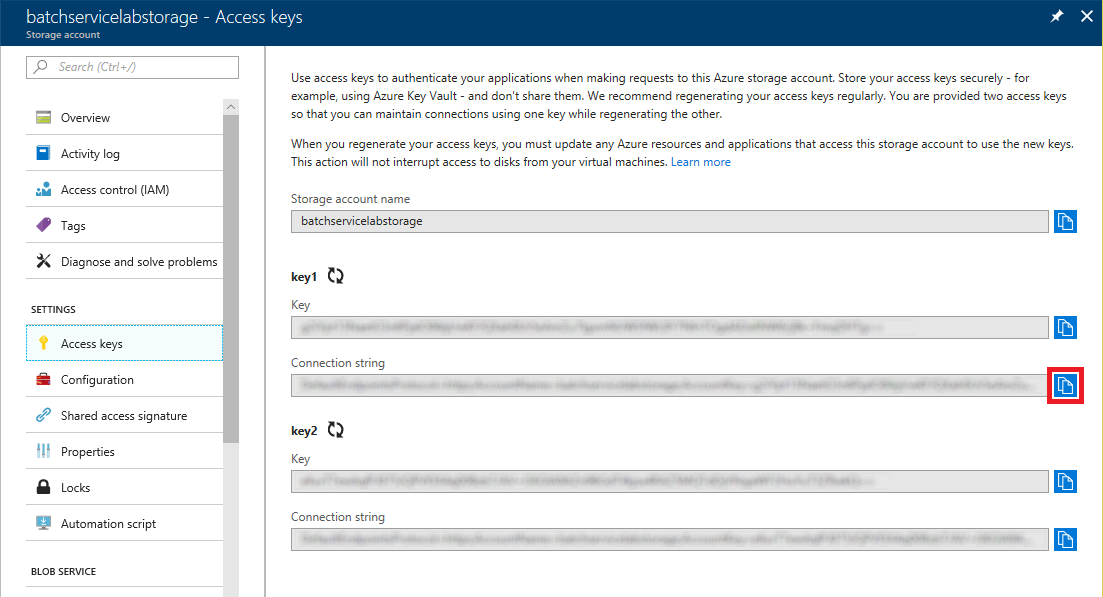

Return to the portal and copy the storage account's connection string to the clipboard.

Copying the storage account connection string

-

Open jobs.yaml and replace STORAGE_ACCOUNT_CONNECTION_STRING with the connection string that is on the clipboard. Then save (CTRL + S) the file.

credentials.yaml and jobs.yaml now contain connection information for the Batch account and the storage account. The next step is to use another of the YAML files to create a compute pool.
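A quick way to confirm that every placeholder was replaced is to search the edited files for the placeholder names used in this exercise. This is a minimal sketch; the function name `find_unreplaced_placeholders` is hypothetical, and the file paths are whatever location your recipe uses.

```shell
# Prints every line that still contains one of the lab's placeholders.
# Returns 0 when none are left, 1 when placeholders remain,
# and 2 when grep itself fails (for example, a file is missing).
# Usage: find_unreplaced_placeholders <file>...
find_unreplaced_placeholders() {
    pattern='BATCH_ACCOUNT_KEY|BATCH_ACCOUNT_URL|STORAGE_ACCOUNT_NAME|STORAGE_ACCOUNT_KEY|STORAGE_ACCOUNT_CONNECTION_STRING'
    status=0
    grep -En "$pattern" "$@" || status=$?
    case "$status" in
        0) return 1 ;;  # grep matched: placeholders remain
        1) return 0 ;;  # no match: everything was replaced
        *) return 2 ;;  # grep reported an error
    esac
}
```

For example, `find_unreplaced_placeholders config/credentials.yaml config/jobs.yaml` prints nothing and succeeds once both files are fully edited, assuming the files are in a `config` folder.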

Before you run the job, you must create a compute pool using the configuration settings in pool.yaml. Batch Shipyard provides several commands for controlling Batch pools. In this exercise, you will use one of those commands to create a pool.

The pool.yaml file provided for you configures each pool to have two VMs and specifies a VM size of STANDARD_A1, which contains a single core and 1.75 GB of RAM. In real life, you might find it advantageous to use larger VMs with more cores and more RAM, or to increase the number of VMs by increasing the vm_count property in pool.yaml.
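To get a feel for how the VM count and VM size translate into total capacity, the arithmetic can be sketched as follows. The function name `pool_capacity` is hypothetical; the figures in the example call are the ones stated above for STANDARD_A1.

```shell
# Prints the total cores and RAM of a pool.
# Usage: pool_capacity <vm-count> <cores-per-vm> <ram-gb-per-vm>
pool_capacity() {
    awk -v n="$1" -v c="$2" -v r="$3" \
        'BEGIN { printf "%d cores, %g GB RAM\n", n * c, n * r }'
}

# The lab's pool: two STANDARD_A1 VMs with 1 core and 1.75 GB of RAM each.
pool_capacity 2 1 1.75   # prints "2 cores, 3.5 GB RAM"
```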

-

In the Cloud Shell window that you left open, run the following command:

shipyard pool add --configdir config

This command will take a few minutes to complete. Batch Shipyard is creating virtual machines using Azure Batch, and then provisioning those virtual machines with Docker. You don't have to wait for the provisioning to complete, however, before proceeding to the next exercise.

While the pool is being created, now is a good time to upload the input files that the job will process. The job uses Azure File Storage for data input and output. The configuration files tell Azure Batch to mount an Azure file share inside a Docker container, enabling code running in the container to read data from the file share as input, and then write data back out as output.

-

In the Azure Portal, return to the "BatchResourceGroup" resource group and click the storage account in that resource group.

Opening the storage account

-

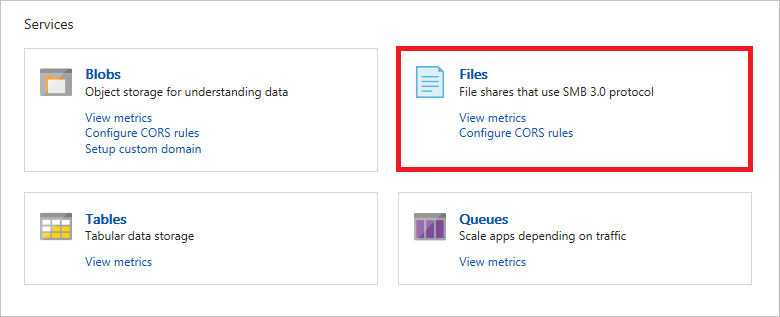

Click Files.

Opening file storage

-

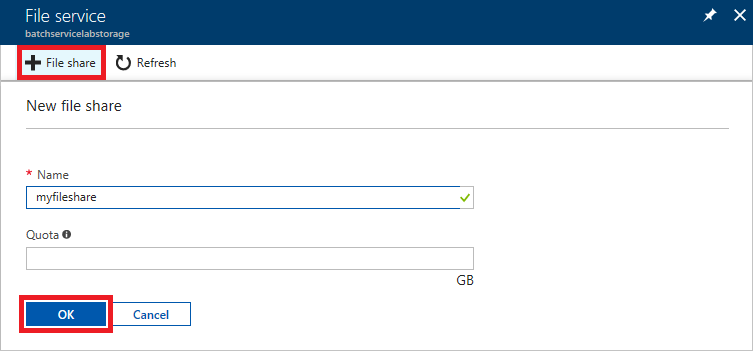

Click + File share. Enter "myfileshare" for the file-share name. Leave Quota blank, and then click OK.

Creating a file share

-

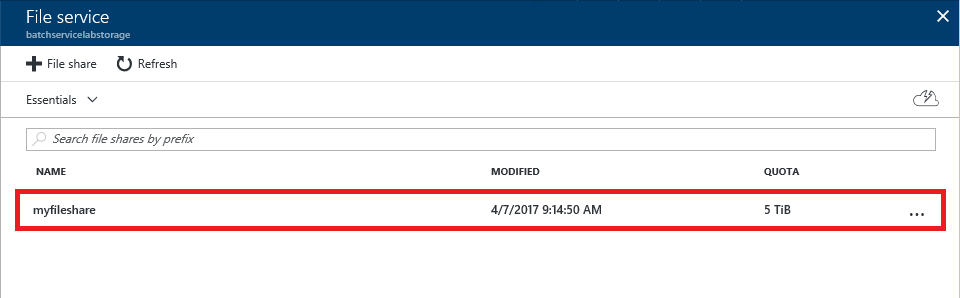

Click the new file share to open it.

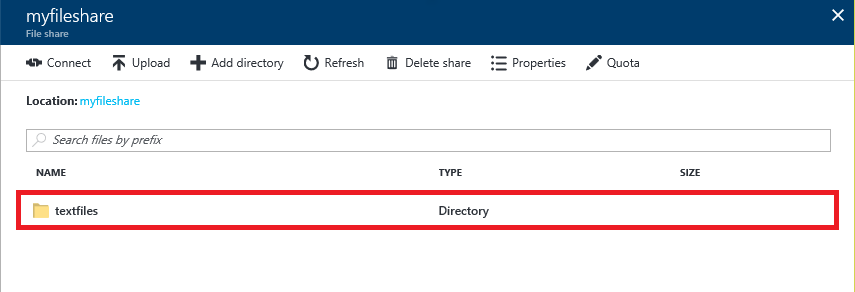

Opening the file share

-

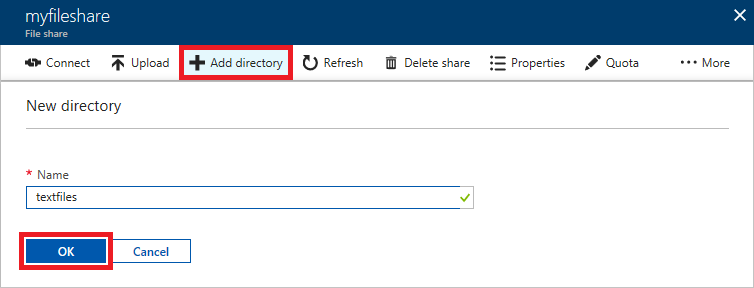

In the blade for the file share, click + Add directory. Enter "textfiles" for the directory name, and then click OK.

Creating a directory

-

Back on the "myfileshare" blade, click the directory that you just created.

Opening the directory

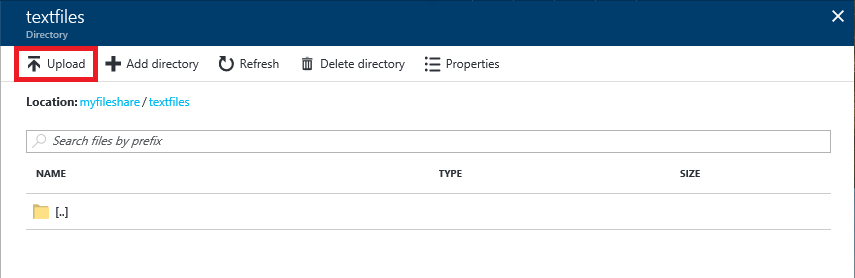

-

Click Upload.

Uploading to the directory

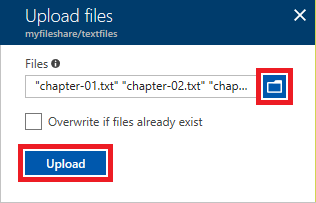

-

In the "Upload files" blade, click the folder icon. Select all of the .txt files in the resources that accompany this lab, and then click the Upload button.

Uploading text files

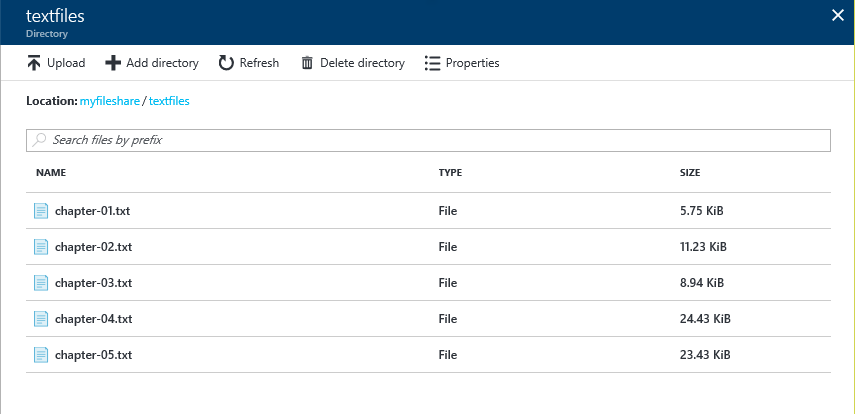

-

Wait for the uploads to complete. Then confirm that five files were uploaded to the "textfiles" directory.

The uploaded text files

The container is configured to handle multiple text files with a .txt extension. For each text file, the container will generate a corresponding .ogg file in the root folder.
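The file share, directory, and upload can also be handled from Cloud Shell. The following is a minimal sketch using the Azure CLI's storage commands; the function name `upload_text_files` is hypothetical, and it assumes you pass the storage account name and key copied in the earlier exercise along with the local folder that holds the .txt files.

```shell
# Hypothetical helper: creates the "myfileshare" share and its "textfiles"
# directory, then uploads every .txt file found in the local folder.
# Usage: upload_text_files <storage-account> <storage-key> <local-folder>
upload_text_files() {
    account="$1"; key="$2"; source_dir="$3"

    az storage share create --name myfileshare \
        --account-name "$account" --account-key "$key" &&
    az storage directory create --share-name myfileshare --name textfiles \
        --account-name "$account" --account-key "$key" &&
    az storage file upload-batch --destination myfileshare \
        --destination-path textfiles --source "$source_dir" --pattern "*.txt" \
        --account-name "$account" --account-key "$key"
}
```

Passing the key as an argument keeps the sketch short; it also leaves the key in your shell history, so treat it accordingly.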

Now that Batch Shipyard is configured, the pool is created, and the input data is uploaded, it's time to run the job.

-

Return to the Cloud Shell window and execute the following command. This command creates a job if it doesn't already exist in the Batch account, and then creates a new task for that job. Jobs can be run multiple times without creating new jobs; Batch Shipyard simply creates a new task each time the jobs add command is called.

shipyard jobs add --configdir config

-

Return to the Azure Portal and open the Batch account.

Opening the batch account

-

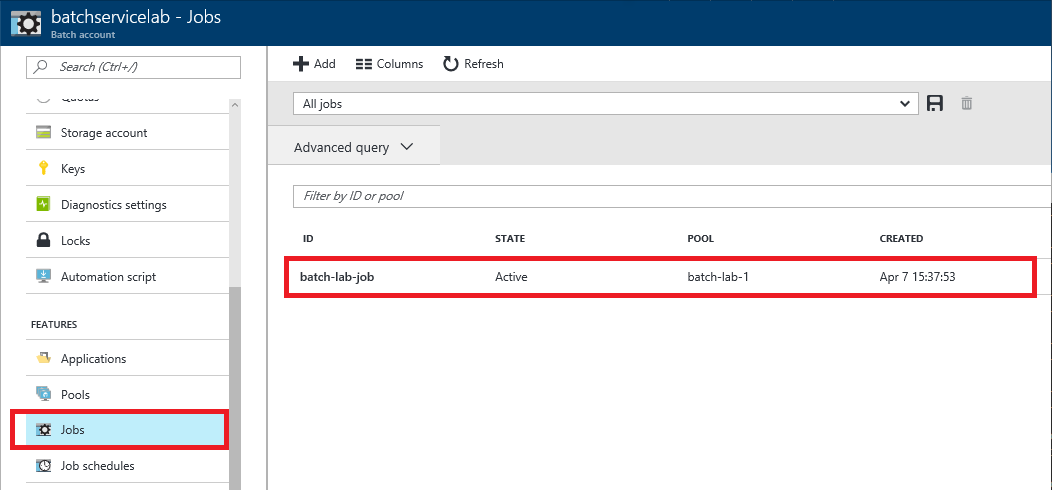

Click Jobs in the menu on the left side of the blade, and then click batch-lab-job.

Selecting the job

-

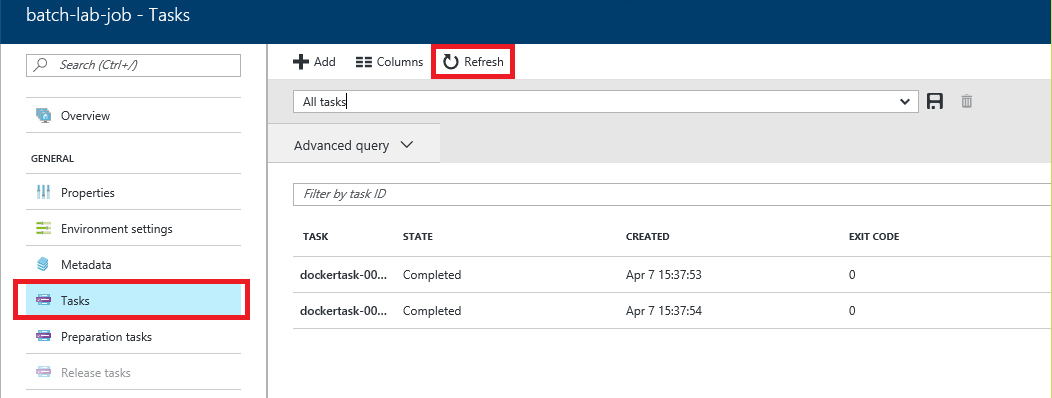

Click Tasks. Then click Refresh periodically until the task completes with an exit code of 0.

Waiting for the job to complete

Once the job has finished running, the next step is to examine the output that it produced.
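Task status can also be checked from Cloud Shell instead of refreshing the portal. This is a sketch using `az batch task list`; the function name `show_task_status` is hypothetical, and the JMESPath field names in `--query` assume that the CLI's JSON output mirrors the Batch REST API (`state`, `executionInfo.exitCode`).

```shell
# Hypothetical helper: lists each task of a job with its state and exit code.
# The job ID defaults to the one used in this lab.
# Usage: show_task_status <batch-account> <batch-key> <batch-url> [job-id]
show_task_status() {
    job_id="${4:-batch-lab-job}"
    az batch task list --job-id "$job_id" \
        --account-name "$1" --account-key "$2" --account-endpoint "$3" \
        --query "[].{id:id, state:state, exitCode:executionInfo.exitCode}" \
        --output table
}
```

The account name, key, and URL are the same values you copied into credentials.yaml.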

The results are now available in the storage account. The output files can be downloaded and played back locally in any media player that supports the .ogg file type.

-

In the Azure Portal, return to the "BatchResourceGroup" resource group and click the storage account in that resource group.

Opening the storage account

-

Click Files.

Opening file storage

-

Click myfileshare.

Opening the file share

-

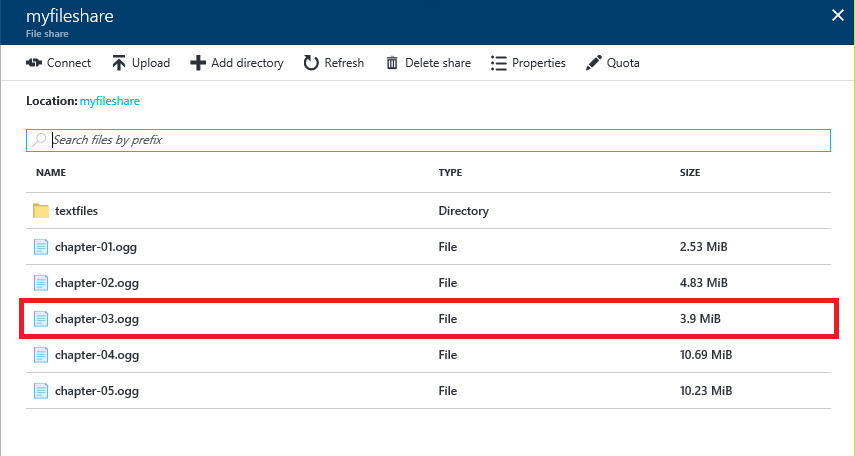

Confirm that the file share contains five files named chapter-01.ogg, chapter-02.ogg, and so on. Click one of them to open a blade for it.

Opening the output file

-

Click Download to download the file. This will download the file to the local machine where it can be played back in a media player.

Downloading the results

Each .ogg file contains spoken content generated from the text in the input files. Play the downloaded file in a media player. Can you guess what famous novel the content came from?
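If you prefer to fetch all of the output files at once, the download can be sketched with the Azure CLI as well. The function name `download_results` and the default `./output` folder are hypothetical; the share name is the one created earlier in this lab.

```shell
# Hypothetical helper: downloads every .ogg file from "myfileshare"
# into a local folder, creating the folder first if needed.
# Usage: download_results <storage-account> <storage-key> [local-folder]
download_results() {
    account="$1"; key="$2"; dest="${3:-./output}"

    mkdir -p "$dest" &&
    az storage file download-batch --source myfileshare --destination "$dest" \
        --pattern "*.ogg" --account-name "$account" --account-key "$key"
}
```

In Cloud Shell the files land in the Cloud Shell file system, so you would still need to download them to your own machine to play them.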

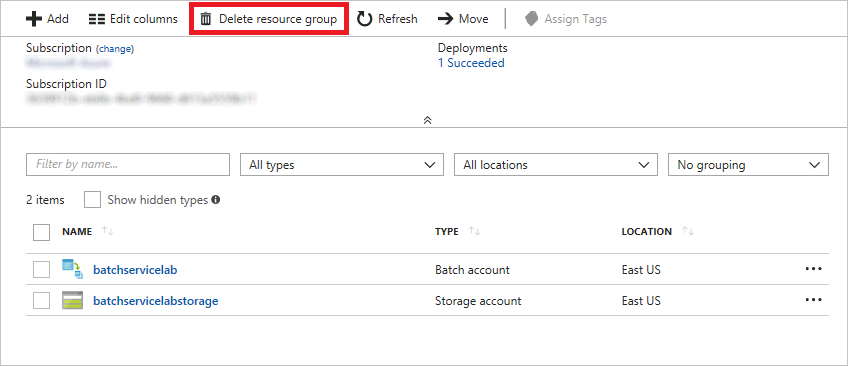

In this exercise, you will delete the resource group created in Exercise 1 when you created the Batch account. Deleting the resource group deletes everything in it and prevents any further charges from being incurred for it.

-

In the Azure Portal, open the blade for the resource group created for the Batch account. Then click the Delete button at the top of the blade.

Deleting the resource group

-

For safety, you are required to type in the resource group's name. (Once deleted, a resource group cannot be recovered.) Type the name of the resource group. Then click the Delete button to remove all traces of this lab from your account.

After a few minutes, the resource group and all of its resources will be deleted.
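The same cleanup can be done from Cloud Shell. The sketch below mirrors the portal's safety check by requiring the resource group name to be given twice; the function name `delete_resource_group` is hypothetical. The `--yes` flag skips the CLI's own confirmation prompt, and `--no-wait` returns without waiting for the deletion to finish.

```shell
# Hypothetical helper: deletes a resource group only when the name is
# repeated as a confirmation, similar to typing it in the portal.
# Usage: delete_resource_group <resource-group> <typed-confirmation>
delete_resource_group() {
    if [ "$1" != "$2" ]; then
        echo "confirmation does not match; nothing deleted" >&2
        return 1
    fi
    az group delete --name "$1" --yes --no-wait
}
```

For this lab the call would be `delete_resource_group BatchResourceGroup BatchResourceGroup`.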

Azure Batch is ideal for running large, compute-intensive jobs as batch jobs on clusters of virtual machines. Batch Shipyard improves on Azure Batch by running those same jobs in Docker containers. The exercises you performed here demonstrate the basic steps required to create a Batch service, configure Batch Shipyard using a custom recipe, and run a job. The Batch Shipyard team has prepared other recipes involving scenarios such as deep learning, computational fluid dynamics, molecular dynamics, and video processing. For more information, and to view the recipes themselves, see https://github.com/Azure/batch-shipyard/tree/master/recipes.