- Community Contributed Repository

- [Project Page] | [Paper] | [Official Dataset Page] | [Sibling Project (OVEN)]

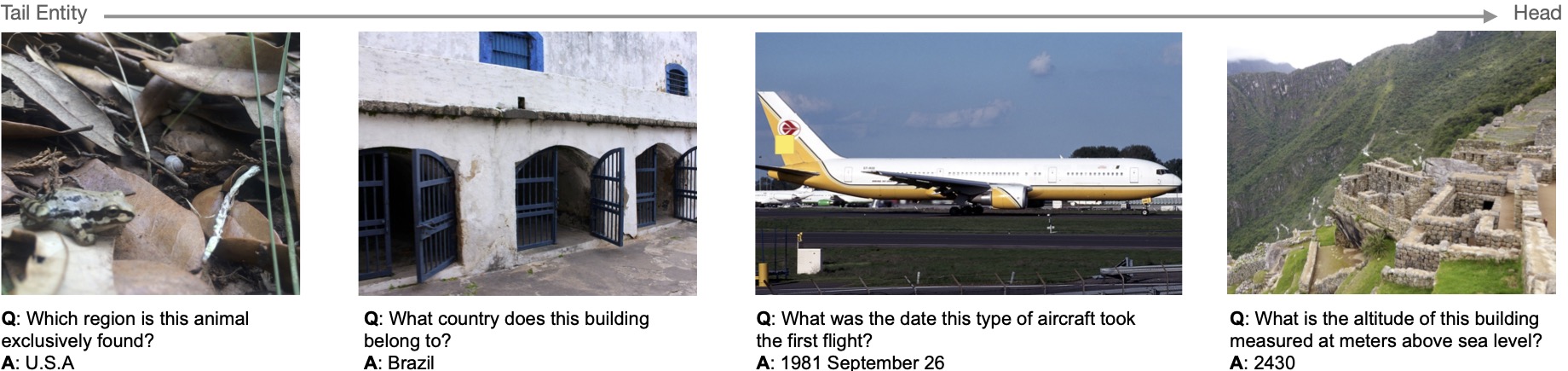

InfoSeek: A New VQA Benchmark Focused on Visual Info-Seeking Questions

Can Pre-trained Vision and Language Models Answer Visual Information-Seeking Questions?

Yang Chen, Hexiang Hu, Yi Luan, Haitian Sun, Soravit Changpinyo, Alan Ritter and Ming-Wei Chang.

- [24/5/30] We have released the dataset and image snapshot on Hugging Face: ychenNLP/oven.

- [23/6/7] We have released the InfoSeek dataset and evaluation script.

To download the image snapshot, please refer to OVEN.

To download the annotations (hosted on Google Drive), please run the bash script `download_infoseek_jsonl.sh` inside the `infoseek_data` folder.
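The downloaded annotations are JSON Lines files (one JSON object per line), as the script name suggests. The sketch below loads such a file with only the standard library. The file name `infoseek_data/infoseek_val.jsonl` is a hypothetical placeholder, and no particular field names are assumed; substitute whichever file the download script actually produces.

```python
import json
from pathlib import Path
from typing import Iterator, Union


def read_jsonl(path: Union[str, Path]) -> Iterator[dict]:
    """Yield one parsed JSON object per non-empty line of a JSON Lines file."""
    with open(path, encoding="utf-8") as f:
        for line in f:
            line = line.strip()
            if line:  # skip blank lines
                yield json.loads(line)


if __name__ == "__main__":
    # Hypothetical file name: replace with a file fetched by download_infoseek_jsonl.sh.
    path = Path("infoseek_data/infoseek_val.jsonl")
    if path.exists():
        records = list(read_jsonl(path))
        print(len(records), "records")
        if records:
            # Inspect the schema instead of assuming field names.
            print("fields:", sorted(records[0].keys()))
```

Reading lazily with a generator keeps memory flat if only a few records are needed; wrap it in `list(...)` when the whole split is required.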

Run evaluation on the InfoSeek validation set:

```bash
python run_evaluation.py

# ====Example====
# ===BLIP2 instruct Flan T5 XXL===
# val final score: 8.06
# val unseen question score: 8.89
# val unseen entity score: 7.38
# ===BLIP2 pretrain Flan T5 XXL===
# val final score: 12.51
# val unseen question score: 12.74
# val unseen entity score: 12.28
```

Run BLIP2 zero-shot inference:

```bash
python run_blip2_infoseek.py --split val
```

Run BLIP2 fine-tuning:

```bash
python run_training_lavis.py
```

If you find InfoSeek useful for your research and applications, please cite it using this BibTeX:

```bibtex
@article{chen2023infoseek,
  title={Can Pre-trained Vision and Language Models Answer Visual Information-Seeking Questions?},
  author={Chen, Yang and Hu, Hexiang and Luan, Yi and Sun, Haitian and Changpinyo, Soravit and Ritter, Alan and Chang, Ming-Wei},
  journal={arXiv preprint arXiv:2302.11713},
  year={2023}
}
```
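A note on the evaluation example: the reported "val final score" values are consistent with the final score being the harmonic mean of the unseen-question and unseen-entity scores. This is inferred from the example numbers, so treat `run_evaluation.py` as the authoritative definition. The sketch below recomputes the final scores from the two split scores shown in the example output.

```python
def harmonic_mean(a: float, b: float) -> float:
    """Harmonic mean of two non-negative scores; returns 0.0 if both are 0."""
    if a + b == 0:
        return 0.0
    return 2 * a * b / (a + b)


# (unseen question score, unseen entity score) copied from the evaluation example.
examples = {
    "BLIP2 instruct Flan T5 XXL": (8.89, 7.38),
    "BLIP2 pretrain Flan T5 XXL": (12.74, 12.28),
}

for model, (unseen_question, unseen_entity) in examples.items():
    final = harmonic_mean(unseen_question, unseen_entity)
    print(f"{model}: {final:.2f}")
```

Running it prints 8.06 and 12.51, matching the reported final scores. A harmonic mean rewards balanced performance: a model that does well on only one of the two unseen splits is pulled toward its weaker score.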