- Use SignalR IAsyncEnumerable streaming for chat messages.

- Tested capybarahermes-2.5-mistral-7b.Q4_K_M.gguf on an NVIDIA GeForce RTX 4060 Laptop GPU with 8 GB of VRAM.

- Support for Llama3 models.

- Reduce memory consumption by using a single model for both chat and embedding.

- Use Microsoft's `semantic-kernel` for chat, embedding, and document retrieval.
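SignalR hub methods can stream results to the client by returning `IAsyncEnumerable<T>`, so the server sends each item as soon as it is produced instead of waiting for the full reply. The sketch below shows only that async-iterator pattern in plain C#, without SignalR. `StreamReplyAsync` is a hypothetical stand-in for the model's token generator, not the project's actual hub method.

```csharp
using System;
using System.Collections.Generic;
using System.Runtime.CompilerServices;
using System.Text;
using System.Threading;
using System.Threading.Tasks;

// Hypothetical stand-in for the model: yields a reply one token at a time.
// In a SignalR hub, a method with this return type is streamed to the caller.
static async IAsyncEnumerable<string> StreamReplyAsync(
    string prompt,
    [EnumeratorCancellation] CancellationToken cancellationToken = default)
{
    string[] tokens = { "Hello", ", ", "you ", "said: ", prompt };
    foreach (var token in tokens)
    {
        cancellationToken.ThrowIfCancellationRequested();
        await Task.Delay(10, cancellationToken); // simulate inference latency
        yield return token;
    }
}

// Consumer side: each token is handled as soon as it arrives.
static async Task<string> CollectAsync(IAsyncEnumerable<string> stream)
{
    var sb = new StringBuilder();
    await foreach (var token in stream)
    {
        Console.Write(token); // e.g. append to the chat bubble in the UI
        sb.Append(token);
    }
    Console.WriteLine();
    return sb.ToString();
}

var reply = await CollectAsync(StreamReplyAsync("hi"));
```

In an ASP.NET Core SignalR hub, a method with the same `IAsyncEnumerable<string>` return type is streamed to the caller, and a .NET client can consume it with `await foreach` over `HubConnection.StreamAsync<string>(...)`. Passing the `CancellationToken` through lets generation stop when the client cancels the stream.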

🚀 Alpha Release: Expanding Horizons

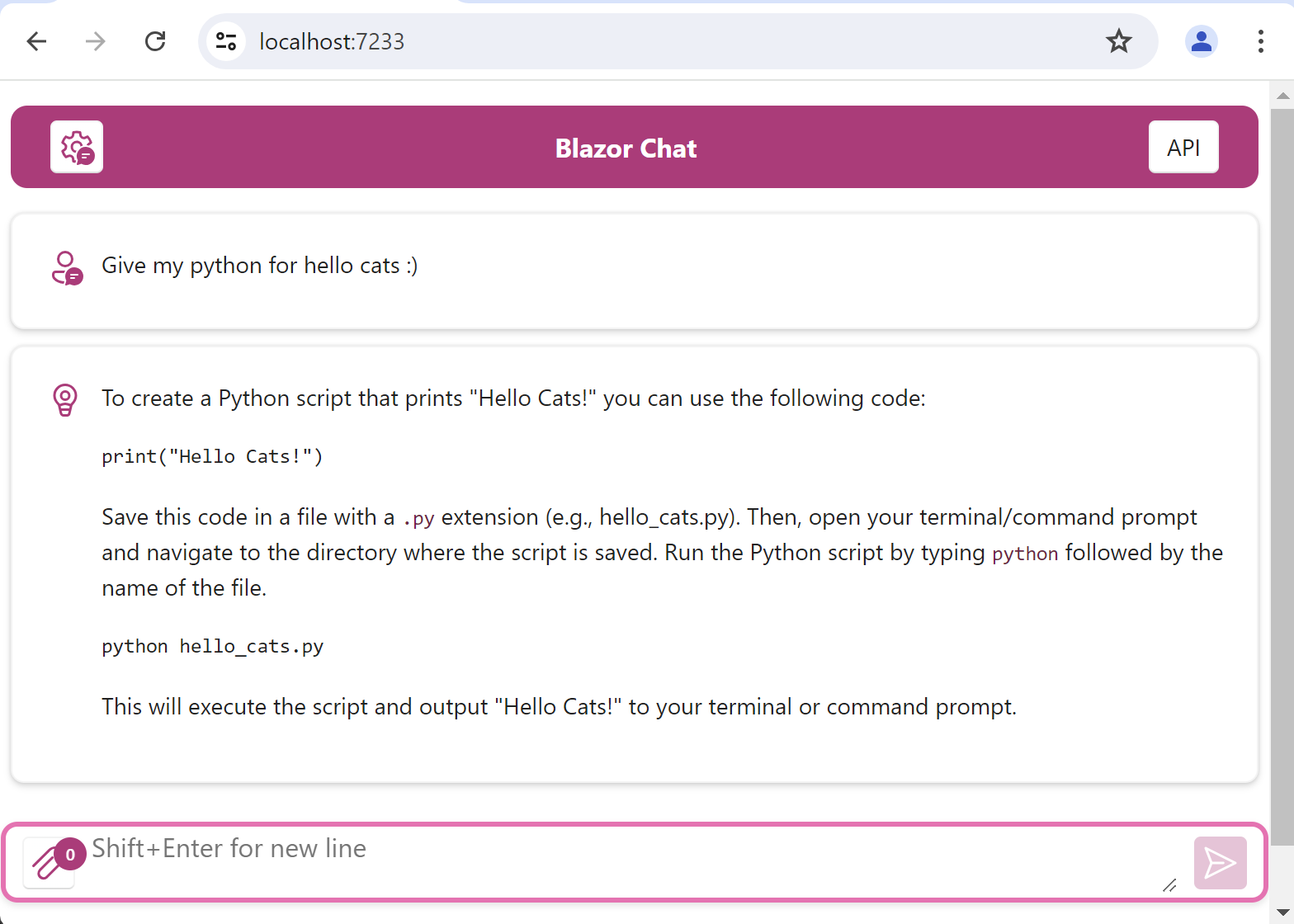

We're excited to announce the alpha release of our chat application built entirely in C#! This software showcases the foundational features and sets the stage for more exciting updates to come. Your feedback and contributions during this phase are invaluable as we strive to enhance and refine the application.

🌐 All C# Magic

This application is entirely written in C#. This choice reflects our commitment to robust, efficient, and scalable solutions. We've harnessed the power of C# to bring you an application that's not just functional but also a joy to interact with.

🤖 ChatGPT's Legacy, Reimagined

Inspired by the original capabilities of ChatGPT at its launch, our application integrates all those pioneering features. We've built upon this foundation to offer a seamless and engaging chat experience that mirrors the sophistication and versatility of ChatGPT.

📱 Mobile-Ready and Responsive

This application has a mobile-ready design that adapts to different devices and screen sizes.

🧠 Choose Your Language Model - Powered by Llama 2

Select your preferred Llama 2-based large language model. Explore different models and discover the unique strengths of each one.

🔜 Stay Tuned: More to Come!

The journey doesn't end here! We're constantly working to bring new features and improvements. Keep an eye out for updates as we evolve and grow. Your suggestions and feedback will shape the future of this application.

💡 Your Input Matters

Join us in refining and enhancing this application. Try it out, push its limits, and let us know what you think. Your insights are crucial in this alpha phase and will guide us in creating an application that truly resonates with its users.

🌟 Get Involved

Excited about what you see? Star us on GitHub and share with your network. Every star, fork, and pull request brings us closer to our goal of creating an outstanding chat application.

Thank you for being a part of our journey. Let's make chatting smarter and more fun together!

PalmHill.BlazorChat offers a range of features to provide a seamless and interactive chat experience:

- Real-Time Chat: Engage in real-time conversations powered by SignalR, which delivers messages instantly.

- Retrieval Augmented Generation for Uploaded Docs: Chat about the content of uploaded documents. Using retrieval augmented generation, the chatbot can reference specific information from these documents in its responses, giving more personalized, context-aware answers. (Early version.)

- Markdown Support: The `ModelMarkdown.razor` component renders markdown formatting in chat messages, improving readability and user experience.

- Chat Settings: Customize your chat experience with adjustable settings such as temperature, max length, top P, frequency penalty, and presence penalty, all managed by the `ChatSettings.razor` component.

- Error Handling: The application handles errors gracefully and displays a user-friendly error page, `Error.razor`, when an unhandled error occurs.

- Responsive Layout: The layout is responsive and provides a consistent look and feel across screen sizes, thanks to the `FluentContainer` and `FluentRow` components from the Fluent UI library.
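The core retrieval step behind the document-chat feature can be illustrated with cosine similarity: embed the document chunks and the question, rank the chunks by similarity, and put the closest ones into the prompt. This is a minimal sketch under stated assumptions, not the project's code (retrieval in the project goes through Microsoft's `semantic-kernel`). The three-dimensional vectors are made up by hand, whereas real embeddings come from the embedding model and have far more dimensions, and the prompt format is hypothetical.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Cosine similarity between two equal-length embedding vectors.
static double Cosine(float[] a, float[] b)
{
    double dot = 0, na = 0, nb = 0;
    for (int i = 0; i < a.Length; i++)
    {
        dot += a[i] * b[i];
        na += a[i] * a[i];
        nb += b[i] * b[i];
    }
    return dot / (Math.Sqrt(na) * Math.Sqrt(nb));
}

// Return the k chunks whose embeddings are most similar to the query embedding.
static List<string> TopK(float[] query, IEnumerable<(string Text, float[] Vector)> candidates, int k) =>
    candidates.OrderByDescending(c => Cosine(query, c.Vector))
              .Take(k)
              .Select(c => c.Text)
              .ToList();

// Toy "embeddings" (hypothetical); a real system gets these from the embedding model.
var chunks = new List<(string Text, float[] Vector)>
{
    ("Invoices are due in 30 days.", new[] { 0.9f, 0.1f, 0.0f }),
    ("The office closes at 5 pm.",   new[] { 0.0f, 0.2f, 0.9f }),
    ("Late invoices incur a fee.",   new[] { 0.8f, 0.3f, 0.1f }),
};
float[] queryVector = { 1.0f, 0.2f, 0.0f }; // stands in for "When do I have to pay?"

var context = TopK(queryVector, chunks, k: 2);

// Retrieved chunks go into the prompt ahead of the user's question.
var prompt = "Use this context:\n" + string.Join("\n", context) + "\n\nUser: When do I have to pay?";
Console.WriteLine(prompt);
```

Here the two invoice chunks score highest and are passed to the model, while the unrelated office-hours chunk is left out of the prompt.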

The project is structured into three main directories:

- Client: Contains the Blazor WebAssembly user interface.

- Server: Contains the server-side WebSocket/SignalR and REST/Web API logic. The model file location is set in this project's `appsettings.json`.

- Llama: A wrapper around LLamaSharp that contains the code related to the Llama models.

To get started with PalmHill.BlazorChat, follow these simple steps to set up the project on your local machine for development and testing:

Before you begin, ensure you have the following installed on your system:

- .NET 8: This application requires the .NET 8 framework. You can download it from the official .NET website.

- CUDA 12: CUDA 12 is currently required. Download and install it from NVIDIA's CUDA Toolkit page.

- Download the Language Model: First, download a Llama 2 language model for the application. Any GGUF/Llama 2 model should work and can be downloaded from Hugging Face. We recommend selecting a model that fits your VRAM and RAM from this list.

  - For testing, TheBloke/CapybaraHermes-2.5-Mistral-7B-GGUF was used; it requires at least 10 GB of VRAM.

- Place the Model: Once downloaded, place the model file in a designated directory on your system. Note the path of this directory; you will need it to configure the application.

- Clone the Repository: Use Git to clone the PalmHill.BlazorChat repository to your local machine. Alternatively, you can open the project directly from GitHub using Visual Studio.

  - To clone, use the command: `git clone https://github.com/edgett/PalmHill.BlazorChat.git`
  - If using Visual Studio, select 'Clone a repository' and enter the repository URL.

- Open the Solution in Visual Studio: Once the repository is cloned, open the solution file in Visual Studio.

- Set the Startup Project: In Solution Explorer, right-click the `Server` project and select 'Set as StartUp Project'. This makes the server-side project the entry point of the application.

- Locate `appsettings.json`: It is in the root of the `Server` project.

- Set the Model Path: Edit `appsettings.json` to include the path to the language model file you placed earlier. Use double backslashes (`\\`) in the file path, because a backslash must be escaped in JSON strings. Example:

      "ModelPath": "C:\\path\\to\\your\\model\\model-file-name"

  Full example:

      {
        "Logging": {
          "LogLevel": {
            "Default": "Information",
            "Microsoft.AspNetCore": "Warning"
          }
        },
        "AllowedHosts": "*",
        "InferenceModelConfig": {
          "ModelPath": "C:\\models\\capybarahermes-2.5-mistral-7b.Q8_0.gguf",
          "GpuLayerCount": 40,
          "ContextSize": 4096,
          "Gpu": 0,
          "AntiPrompts": [ "User:" ]
        }
      }

- Build the Solution: Build the solution in Visual Studio to ensure all dependencies are correctly resolved.

- Run the Application: Click 'Play' in Visual Studio to start the application. This will launch the application in your default web browser.

- Application URLs: The application opens at the URLs specified in BlazorChat/Server/launchSettings.json, typically `https://localhost:7233` and `http://localhost:5222`. You can access the application via either URL in your browser.
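To see what the escaped model path looks like once it is read back, here is a minimal sketch that parses the `InferenceModelConfig` section of the full `appsettings.json` example with `System.Text.Json`. It is an illustration only: the Server project presumably binds this section through ASP.NET Core configuration instead, and the missing-file check at the end is a hypothetical addition, not something the project is known to do.

```csharp
using System;
using System.IO;
using System.Text.Json;

// The InferenceModelConfig section from the full appsettings.json example.
// Inside this raw string literal, "\\" is two characters: JSON's escape for one backslash.
const string appSettings = """
{
  "InferenceModelConfig": {
    "ModelPath": "C:\\models\\capybarahermes-2.5-mistral-7b.Q8_0.gguf",
    "GpuLayerCount": 40,
    "ContextSize": 4096,
    "Gpu": 0,
    "AntiPrompts": [ "User:" ]
  }
}
""";

using var doc = JsonDocument.Parse(appSettings);
var section = doc.RootElement.GetProperty("InferenceModelConfig");

// After parsing, each escaped "\\" has become a single backslash.
string modelPath = section.GetProperty("ModelPath").GetString()!;
int gpuLayers = section.GetProperty("GpuLayerCount").GetInt32();
int contextSize = section.GetProperty("ContextSize").GetInt32();

Console.WriteLine($"Model: {modelPath}");
Console.WriteLine($"GPU layers: {gpuLayers}, context size: {contextSize}");

// Hypothetical early check: report a wrong ModelPath before trying to load the model.
if (!File.Exists(modelPath))
    Console.WriteLine($"Model file not found at '{modelPath}'. Check ModelPath in appsettings.json.");
```

Writing a single backslash instead breaks the file in one of two ways: `C:\models` is invalid JSON because `\m` is not a valid escape, while a path such as `C:\new` parses but silently turns `\n` into a newline.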

- Ensure that all necessary NuGet packages are installed and up to date.

- Check the .NET and Blazor WebAssembly versions required for the project.

- If you encounter issues, refer to the 'FAQs' section or reach out to the community for support.
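If the model fails to load, check whether it actually fits on your GPU, as the model-download step recommends. The sketch below is a back-of-the-envelope estimate, not something the application computes. It assumes a Mistral-7B-shaped model (about 7.24 billion parameters, 32 layers, 8 key/value heads, head dimension 128), all layers offloaded to the GPU, and an fp16 KV cache, and it ignores CUDA and compute-buffer overhead, so real usage is higher.

```csharp
using System;

// Weights: parameters × bits-per-weight / 8 bytes.
static double WeightBytes(double parameters, double bitsPerWeight) =>
    parameters * bitsPerWeight / 8.0;

// KV cache: 2 (keys and values) × layers × KV heads × head dim × context tokens × bytes per element.
static double KvCacheBytes(int layers, int kvHeads, int headDim, int contextSize, int bytesPerElement = 2) =>
    2.0 * layers * kvHeads * headDim * contextSize * bytesPerElement;

const double GiB = 1024.0 * 1024 * 1024;

// Assumed Mistral-7B shape. Q8_0 stores about 8.5 bits per weight
// (32 int8 values plus one fp16 scale per block).
double weights = WeightBytes(7.24e9, 8.5);
double kv = KvCacheBytes(layers: 32, kvHeads: 8, headDim: 128, contextSize: 4096);

Console.WriteLine($"Weights: {weights / GiB:F1} GiB, KV cache: {kv / GiB:F2} GiB, total: {(weights + kv) / GiB:F1} GiB");
```

Under these assumptions the Q8_0 file from the configuration example needs roughly 7.2 GiB for weights plus 0.5 GiB of KV cache at a 4096-token context, before overhead. Substituting a lower bits-per-weight value (Q4_K_M is a little under 5) shows how much smaller quantizations reduce the footprint.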

By following these steps, you should be able to set up and run PalmHill.BlazorChat on your local machine for development and testing. Happy coding!

Open a pull request and go nuts!