This repository contains the code for the demo presented by Roche at KubeCon 2024.

The purpose of this demo is to show how to use some advanced networking features of Cilium Service Mesh.

We will focus on the following scenario:

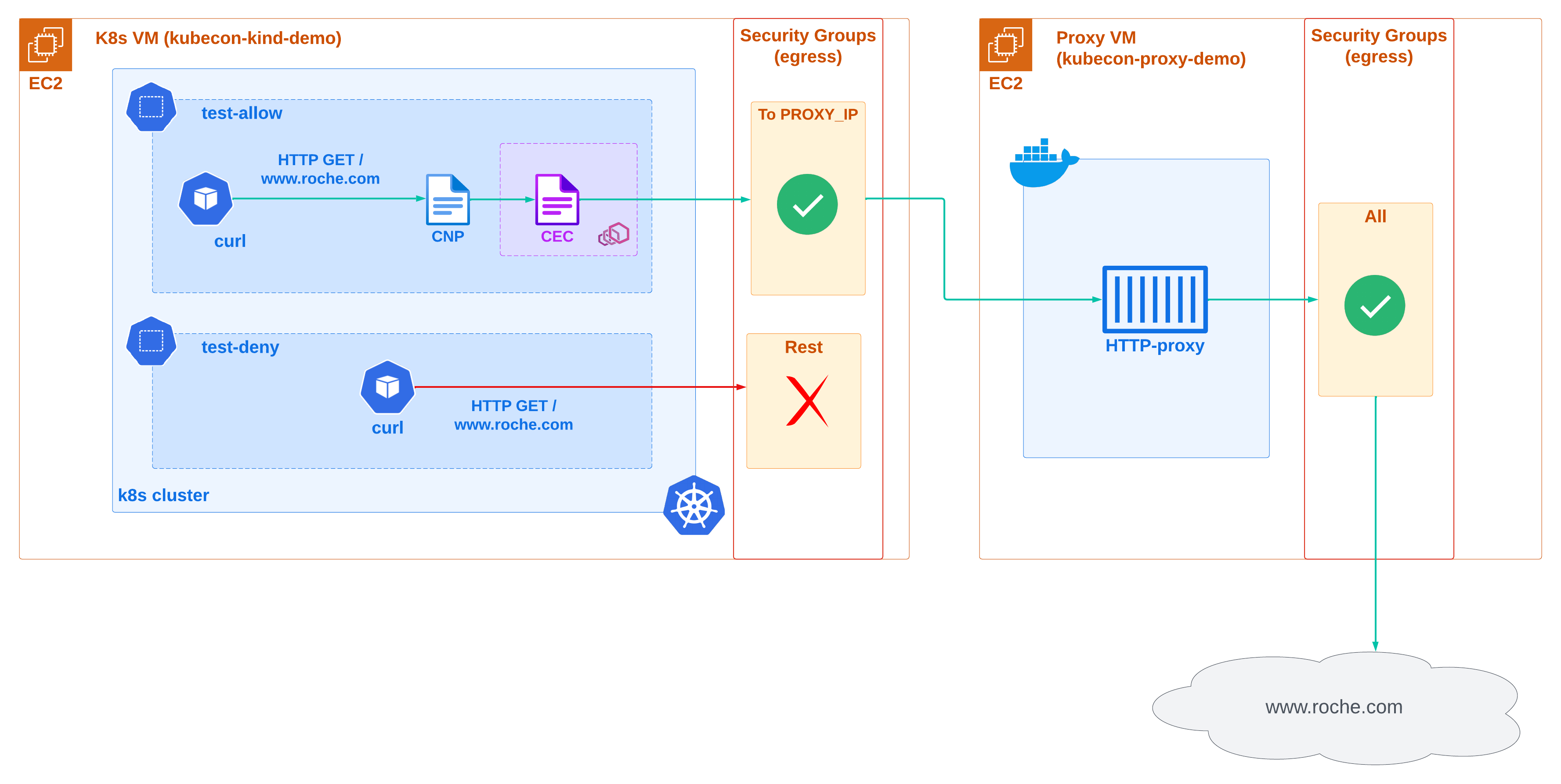

We have a Kubernetes cluster running on a server whose egress traffic is restricted: the only allowed destination is the IP of another server running an HTTP proxy. The proxy server itself can send egress traffic to any destination. We want egress traffic from the Kubernetes cluster to a specific destination to be routed through the proxy, so that it can reach that destination.

In order to follow along with this demo, you will need the following:

- An AWS account where you can create resources

- terraform installed

- j2cli installed

- kubectl installed

- python 3.11 installed

First, go to the terraform directory and create the resources:

cd terraform

terraform init

terraform apply

This will create the following resources:

- An EC2 instance named kubecon-kind-demo running a kind Kubernetes cluster where Cilium is configured as the CNI with the CiliumEnvoyConfig CRD enabled. Two namespaces named test-allow and test-deny are also created, each with a curl pod running for testing later on.

- An EC2 instance named kubecon-proxy-demo running an HTTP proxy server as a Docker container on port 3128.

By default, both EC2 instances are configured with a security group that allows all egress traffic, but only allows incoming ssh traffic (port 22) from the IP of the machine that created the resources. Additionally, the kubecon-proxy-demo EC2 instance allows incoming traffic on port 3128 from the public IP of the kubecon-kind-demo EC2 instance.

INFO: if your laptop's IP changes, just run terraform apply again so that the security group rules of the EC2 instances are updated to allow incoming traffic from your new IP.

WARN: wait for the kubecon-kind-demo server to complete its initial cloud-init deployment before continuing. You can check whether the server is ready by running this Makefile target from the root folder of this repository: make check_ec2_kind_ready.
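If you prefer to script the wait instead of re-running the readiness check by hand, a polling loop is enough. The following is a minimal sketch, not what make check_ec2_kind_ready actually does: cloud_init_done is a hypothetical helper that assumes the check can be done by running cloud-init status over ssh (with the terraform/id_rsa key and ubuntu user seen later in this guide) and looking for "status: done".

```python
import subprocess
import time
from typing import Callable


def cloud_init_done(ssh_target: str, key_path: str = "terraform/id_rsa") -> bool:
    """Hypothetical readiness check: ask cloud-init on the remote host for its status."""
    result = subprocess.run(
        ["ssh", "-i", key_path, "-o", "BatchMode=yes", ssh_target, "cloud-init", "status"],
        capture_output=True,
        text=True,
    )
    # cloud-init prints "status: done" once all of its stages have finished.
    return "status: done" in result.stdout


def wait_until_ready(
    is_ready: Callable[[], bool],
    timeout_s: float = 600.0,
    interval_s: float = 10.0,
    sleep: Callable[[float], None] = time.sleep,
    clock: Callable[[], float] = time.monotonic,
) -> bool:
    """Poll is_ready() until it returns True or timeout_s has elapsed.

    sleep and clock are injectable so the loop can be tested without waiting.
    """
    deadline = clock() + timeout_s
    while True:
        if is_ready():
            return True
        if clock() >= deadline:
            return False
        sleep(interval_s)
```

Usage would look like wait_until_ready(lambda: cloud_init_done("ubuntu@x.x.x.x")), where x.x.x.x is the public IP of the kubecon-kind-demo instance.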

Now we need to restrict all egress traffic from the kubecon-kind-demo EC2 instance.

We will do this by updating the security group of the kubecon-kind-demo EC2 instance to only allow egress traffic to the public IP of the kubecon-proxy-demo EC2 instance on port 3128 where the HTTP proxy is exposed.

Edit the terraform/ec2_kind.tf file and comment out the egress_with_cidr_blocks under ######### Allow ALL egress traffic, and uncomment the egress_with_cidr_blocks under ####### Allow ONLY egress traffic to the EC2 Proxy (+ DNS and NTP).

Then, apply the changes:

terraform apply

At this point, the Kubernetes cluster cannot connect to any external destination other than the proxy (plus DNS and NTP).
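To make the effect of the new egress rules concrete, the sketch below models them as data and checks whether a given destination would be allowed. It is an illustration, not the Terraform code: 203.0.113.10 is a placeholder for the proxy's public IP, and DNS and NTP are assumed to be allowed over UDP to any destination, since the actual CIDRs and protocols in terraform/ec2_kind.tf are not shown here.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class EgressRule:
    cidr: str      # destination CIDR block
    protocol: str  # "tcp" or "udp"
    port: int      # destination port


# Hypothetical values: 203.0.113.10 stands in for the proxy's public IP,
# and DNS/NTP are assumed to be allowed to any destination over UDP.
PROXY_IP = "203.0.113.10"
RULES = [
    EgressRule(f"{PROXY_IP}/32", "tcp", 3128),  # HTTP proxy
    EgressRule("0.0.0.0/0", "udp", 53),         # DNS
    EgressRule("0.0.0.0/0", "udp", 123),        # NTP
]


def egress_allowed(dst_ip: str, protocol: str, port: int, rules=RULES) -> bool:
    """Return True if some rule matches; otherwise the security group's
    implicit deny applies and the packet is dropped."""
    addr = ipaddress.ip_address(dst_ip)
    return any(
        addr in ipaddress.ip_network(rule.cidr)
        and rule.protocol == protocol
        and rule.port == port
        for rule in rules
    )
```

Because AWS security groups are stateful, only the outbound rules matter here: replies to allowed connections are let back in automatically.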

Let's enable egress traffic to *.roche.com, but only from the test-allow namespace.

For this purpose, execute the following command from the root folder of this repository:

cd ..

make apply_manifests

This Makefile target will deploy the following custom resources:

- cec_proxy.yaml: a CiliumEnvoyConfig (cec) in the test-allow namespace that routes all traffic arriving at it through the public_ip:port of the HTTP proxy server running on the kubecon-proxy-demo EC2 instance.

- cnp_proxy.yaml: a CiliumNetworkPolicy (cnp) that allows all traffic from any pod in the test-allow namespace to *.roche.com and redirects it to the Envoy listener defined in that cec.
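The combined effect of the two resources can be summarised as a small decision function. This is a simplified model for intuition, not how Cilium evaluates policy: the wildcard translation below treats * as matching within a single DNS label (so a.b.roche.com and roche.com do not match), which may not be exactly Cilium's matchPattern semantics, and it assumes the policy selects every pod in test-allow so that Cilium's default deny applies to any other egress from that namespace.

```python
import re

VIA_PROXY = "redirected to the Envoy listener, then to the HTTP proxy via CONNECT"
POLICY_DENIED = "dropped by Cilium (not allowed by the CiliumNetworkPolicy)"
DIRECT = "sent directly to the node's network stack, then dropped by the security group"


def fqdn_pattern_to_regex(pattern: str) -> "re.Pattern":
    """Translate a wildcard such as '*.roche.com' into an anchored regex.

    Simplification: '*' matches characters within a single DNS label (no dots).
    """
    parts = [re.escape(p) for p in pattern.lower().rstrip(".").split("*")]
    return re.compile("^" + "[-a-z0-9_]*".join(parts) + "$")


ALLOW_NAMESPACE = "test-allow"
ALLOWED_HOSTS = fqdn_pattern_to_regex("*.roche.com")


def egress_path(namespace: str, hostname: str) -> str:
    """Classify where a pod's egress request ends up in this demo (simplified)."""
    host = hostname.lower().rstrip(".")
    if namespace == ALLOW_NAMESPACE:
        # Pods in test-allow are selected by the policy, so only matching
        # destinations are allowed, and those are redirected to Envoy.
        return VIA_PROXY if ALLOWED_HOSTS.match(host) else POLICY_DENIED
    # In this model no policy selects pods elsewhere (e.g. test-deny).
    return DIRECT
```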

Before deploying them, it performs the following actions:

- Get the public IP of the kubecon-proxy-demo EC2 instance from the terraform state.

- Generate the file cec_proxy.yaml (which defines the CiliumEnvoyConfig custom resource) from the cec_proxy.j2 template using the j2cli Jinja CLI, setting the public IP of the kubecon-proxy-demo EC2 instance as the backend of the Envoy cluster.

- Download the kubeconfig file kubecon-kind-demo.config via ssh from the kubecon-kind-demo EC2 instance and save it to the terraform folder.

- Expose kube-apiserver port 6443 of the kubecon-kind-demo EC2 instance on port 6443 of your localhost via an ssh tunnel, so that you can use kubectl from your laptop (see Connect to kube-apiserver).
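The first two steps can be sketched in a few lines. This is an illustration under assumptions, not the Makefile's implementation: it parses the JSON printed by terraform output -json (each output is an object with a "value" key), the output name proxy_public_ip is hypothetical, and string.Template stands in for j2cli. The Envoy cluster fragment is likewise hypothetical and may differ from the repository's cec_proxy.j2.

```python
import ipaddress
import json
from string import Template

# Hypothetical Envoy cluster fragment; the repository's cec_proxy.j2 may differ.
CLUSTER_TEMPLATE = Template("""\
name: http-proxy
type: STATIC
connect_timeout: 5s
load_assignment:
  cluster_name: http-proxy
  endpoints:
  - lb_endpoints:
    - endpoint:
        address:
          socket_address:
            address: $proxy_ip
            port_value: $proxy_port
""")


def proxy_ip_from_outputs(outputs_json: str, name: str = "proxy_public_ip") -> str:
    """Extract one value from `terraform output -json` (output name is hypothetical)."""
    outputs = json.loads(outputs_json)
    ip = outputs[name]["value"]
    ipaddress.ip_address(ip)  # raises ValueError if the value is not an IP address
    return ip


def render_cluster(proxy_ip: str, proxy_port: int = 3128) -> str:
    """Fill the proxy address into the cluster template (stand-in for j2cli)."""
    return CLUSTER_TEMPLATE.substitute(proxy_ip=proxy_ip, proxy_port=proxy_port)
```

Validating the IP before rendering avoids silently producing a broken manifest if the terraform output is empty or has an unexpected shape.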

In a separate terminal, let's monitor the logs of the HTTP proxy running on the kubecon-proxy-demo EC2 instance.

The ssh command to connect to the kubecon-proxy-demo EC2 instance is included in the terraform outputs, but you can also get it by executing:

make show_ec2_proxy_ssh

Once you have it, run it to connect to the server. It will look similar to:

ssh -i terraform/id_rsa ubuntu@x.x.x.x

Now that you are connected to the server, run the following command to follow the logs of the HTTP proxy:

docker logs $(docker ps --format '{{.ID}}') -f

This assumes the proxy is the only container running on the server. Keep this terminal open to monitor the logs of the HTTP proxy, and switch back to the other terminal.

Let's send an HTTP request to www.roche.com from the curl pod running in the test-deny namespace:

make test_deny

You should see that the curl request times out:

000command terminated with exit code 28

INFO: in this case, the network traffic of the curl request is sent directly to the Linux networking stack. Since the AWS security group only allows egress traffic to the public IP of the kubecon-proxy-demo EC2 instance and denies everything else, the request cannot reach Roche's IP, and curl gives up with exit code 28 (operation timed out).
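The output above is easier to read once you know it is two messages glued together: the HTTP status code printed by curl ("000" means no response was received) followed by kubectl's report of curl's exit code. The sketch below splits and interprets it. It assumes, without having seen the Makefile, that the test targets run curl with -w '%{http_code}' and no trailing newline; the exit codes listed are standard curl ones.

```python
import re

# A few curl exit codes relevant to this demo (see the curl man page for the full list).
CURL_EXIT_CODES = {
    6: "could not resolve host",
    7: "failed to connect to host",
    28: "operation timed out",
}

# Assumption: curl prints the status code with no trailing newline, so kubectl's
# error message lands on the same line, e.g. "000command terminated with exit code 28".
_OUTPUT_RE = re.compile(r"^(\d{3})(?:command terminated with exit code (\d+))?$")


def parse_test_output(text: str) -> tuple[str, int]:
    """Split the combined output into (http_code, exit_code)."""
    match = _OUTPUT_RE.match(text.strip())
    if match is None:
        raise ValueError(f"unexpected output: {text!r}")
    exit_code = int(match.group(2)) if match.group(2) else 0
    return match.group(1), exit_code


def describe_curl_result(http_code: str, exit_code: int) -> str:
    """Turn curl's status code and exit code into a short human-readable verdict."""
    if exit_code == 0 and http_code != "000":
        return f"HTTP {http_code}"
    reason = CURL_EXIT_CODES.get(exit_code, f"curl exit code {exit_code}")
    return f"no HTTP response ({reason})"
```

For example, describe_curl_result(*parse_test_output("000command terminated with exit code 28")) yields "no HTTP response (operation timed out)".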

Now let's send an HTTP request to www.roche.com from the curl pod running in the test-allow namespace, where the CiliumEnvoyConfig and CiliumNetworkPolicy are deployed:

make test_allow

This time you should get an HTTP 200 response, meaning that the request was successful.

INFO: in this case, the CiliumNetworkPolicy not only allows the traffic to www.roche.com but, instead of sending it to the Linux networking stack, redirects it to the Envoy listener defined in the CiliumEnvoyConfig. Envoy then routes the traffic to the proxy running on the kubecon-proxy-demo EC2 instance using the HTTP CONNECT method.
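The HTTP CONNECT exchange itself is short: the client asks the proxy to open a TCP connection to host:port, and any 2xx reply means the tunnel is open and subsequent bytes (here, the TLS handshake with the destination) are relayed untouched. The sketch below shows that exchange from the client side with plain sockets. It illustrates the protocol, not Envoy's implementation; the function names are hypothetical.

```python
import socket


def build_connect_request(host: str, port: int) -> bytes:
    """Build the request line and headers a client sends to open a CONNECT tunnel."""
    authority = f"{host}:{port}"
    return f"CONNECT {authority} HTTP/1.1\r\nHost: {authority}\r\n\r\n".encode("ascii")


def tunnel_established(response_head: bytes) -> bool:
    """Any 2xx status in reply to CONNECT means the tunnel is open."""
    status_line = response_head.split(b"\r\n", 1)[0]
    parts = status_line.split(b" ", 2)
    return len(parts) >= 2 and parts[0].startswith(b"HTTP/1.") and parts[1][:1] == b"2"


def open_tunnel(proxy_host: str, proxy_port: int, host: str, port: int,
                timeout: float = 10.0) -> socket.socket:
    """Connect to the proxy, ask it to CONNECT to host:port, and return the socket."""
    sock = socket.create_connection((proxy_host, proxy_port), timeout=timeout)
    sock.sendall(build_connect_request(host, port))
    head = b""
    # Read until the blank line that ends the proxy's response headers.
    while b"\r\n\r\n" not in head:
        chunk = sock.recv(4096)
        if not chunk:
            break
        head += chunk
    if not tunnel_established(head):
        sock.close()
        raise ConnectionError(f"proxy refused CONNECT: {head[:80]!r}")
    # From here on, bytes written to sock reach host:port (e.g. a TLS ClientHello).
    return sock
```

Reading only up to the end of the headers is safe here because, after a successful CONNECT, the client speaks first (TLS), so the proxy does not send extra bytes before the handshake starts.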

Move back to the terminal where you are monitoring the logs of the HTTP proxy.

You should see a log message for the request that the proxy received and routed to the CDN IP behind www.roche.com, similar to this:

{"time_unix":1707585823, "proxy":{"type:":"PROXY", "port":3128}, "error":{"code":"00000"}, "auth":{"user":"-"}, "client":{"ip":"3.123.33.107", "port":38522}, "server":{"ip":"xxx.xxx.xxx.xxx", "port":443}, "bytes":{"sent":844, "received":5871}, "request":{"hostname":"xxx.xxx.xxx.xxx"}, "message":"CONNECT xxx.xxx.xxx.xxx:443 HTTP/1.1"}
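The proxy writes one JSON object per request, so its logs are easy to filter or summarise programmatically. The sketch below reduces a line to the fields most relevant to this demo; the field names are taken from the sample line above and may not cover every message the proxy emits. Given the security group rules, client.ip should be the public IP of the kubecon-kind-demo instance, and the CONNECT target in the sample is the destination IP rather than the hostname.

```python
import json


def summarize_proxy_log(line: str) -> str:
    """Condense one JSON log line from the proxy into a single readable sentence."""
    entry = json.loads(line)
    # "message" holds the request line, e.g. "CONNECT 1.2.3.4:443 HTTP/1.1".
    method, target, _version = entry["message"].split(" ", 2)
    client = entry["client"]
    sent, received = entry["bytes"]["sent"], entry["bytes"]["received"]
    return (f"{method} {target} from {client['ip']}:{client['port']} "
            f"(sent {sent} B, received {received} B)")
```

Applied to the sample line above, it returns "CONNECT xxx.xxx.xxx.xxx:443 from 3.123.33.107:38522 (sent 844 B, received 5871 B)".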

Once you are done with the demo, you can clean up all the resources by executing the following:

cd terraform

terraform destroy

Connect to kube-apiserver

Incoming traffic to all the EC2 instances is restricted to ssh (port 22) from the IP of the machine that created the resources. If you want to use kubectl to connect to the kube-apiserver, you need to create an ssh tunnel to the kubecon-kind-demo EC2 instance.

For this purpose, execute the following command:

make connect_apiserver

INFO: if it fails, you have probably already run it and the tunnel is already established. Be aware, for example, that the make apply_manifests Makefile target runs make connect_apiserver as a dependency.
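A quick way to tell whether the tunnel is already up is to check whether something is accepting connections on local port 6443. The helper below is a hypothetical sketch of that check, not part of the Makefile; a positive result only tells you the port is taken, not that the listener is the ssh tunnel.

```python
import socket


def port_in_use(port: int, host: str = "127.0.0.1", timeout: float = 1.0) -> bool:
    """Return True if something accepts TCP connections on host:port."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:
        s.settimeout(timeout)
        # connect_ex returns 0 on success instead of raising an exception.
        return s.connect_ex((host, port)) == 0
```

For example, port_in_use(6443) returning True before make connect_apiserver would explain why the target fails.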

Once you have the tunnel established, you can use kubectl normally from your laptop to interact with the kubernetes cluster.

You might need to export the KUBECONFIG environment variable to point to the kubeconfig file of the kind cluster.

export KUBECONFIG=terraform/kubecon-kind-demo.config

The tunnel is established by an ssh process running in the background that exposes port 6443 of the kubecon-kind-demo EC2 instance on port 6443 of your localhost.
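A background port-forwarding ssh process of this kind is typically started with the standard -f (go to background), -N (run no remote command) and -L (local forward) options. The sketch below builds such a command; it is an assumption about what make connect_apiserver does, reusing the key path and ubuntu user shown earlier, and assumes the kube-apiserver listens on port 6443 of the EC2 host itself.

```python
def tunnel_command(host_ip: str, key_path: str = "terraform/id_rsa",
                   user: str = "ubuntu", local_port: int = 6443,
                   remote_port: int = 6443) -> list[str]:
    """Build an ssh command that forwards localhost:local_port to
    localhost:remote_port on the remote host, running in the background."""
    return [
        "ssh", "-i", key_path,
        "-f",  # go to background after authentication
        "-N",  # do not run a remote command, only forward ports
        "-L", f"{local_port}:localhost:{remote_port}",
        f"{user}@{host_ip}",
    ]
```

You could start it with subprocess.run(tunnel_command("x.x.x.x"), check=True), where x.x.x.x is the public IP of the kubecon-kind-demo instance.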

Once you are done with your testing, you can kill the process to free up port 6443 by executing the following command:

make disconnect_apiserver
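Freeing the port amounts to finding the ssh process that holds the -L 6443 forward and terminating it. The sketch below shows one way to do that from the output of ps -eo pid,args; it is a hypothetical illustration and the real make disconnect_apiserver target may work differently.

```python
import os
import signal
import subprocess


def tunnel_pids(ps_output: str, local_port: int = 6443) -> list[int]:
    """Return PIDs of ssh processes forwarding local_port, given the text
    printed by `ps -eo pid,args`."""
    needle = f"-L {local_port}:"
    pids = []
    for line in ps_output.splitlines()[1:]:  # skip the header row
        pid_str, _, args = line.strip().partition(" ")
        tokens = args.split()
        # Match "ssh" or a full path such as /usr/bin/ssh, but not sshd.
        if tokens and os.path.basename(tokens[0]) == "ssh" and needle in args:
            pids.append(int(pid_str))
    return pids


def kill_tunnels(local_port: int = 6443) -> None:
    """Send SIGTERM to every ssh tunnel found for local_port."""
    ps = subprocess.run(["ps", "-eo", "pid,args"], capture_output=True, text=True).stdout
    for pid in tunnel_pids(ps, local_port):
        os.kill(pid, signal.SIGTERM)
```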