This API was designed to let users easily search and extract information from the Fake Insurance company dataset, which was taken from Kaggle. The API is implemented in Python 3 and uses the following frameworks/libraries:

- Pandas

- Django-Rest

- Django-Rest-Pandas

To install all dependencies, one should download this repository and run:

pip install -r requirements.txt

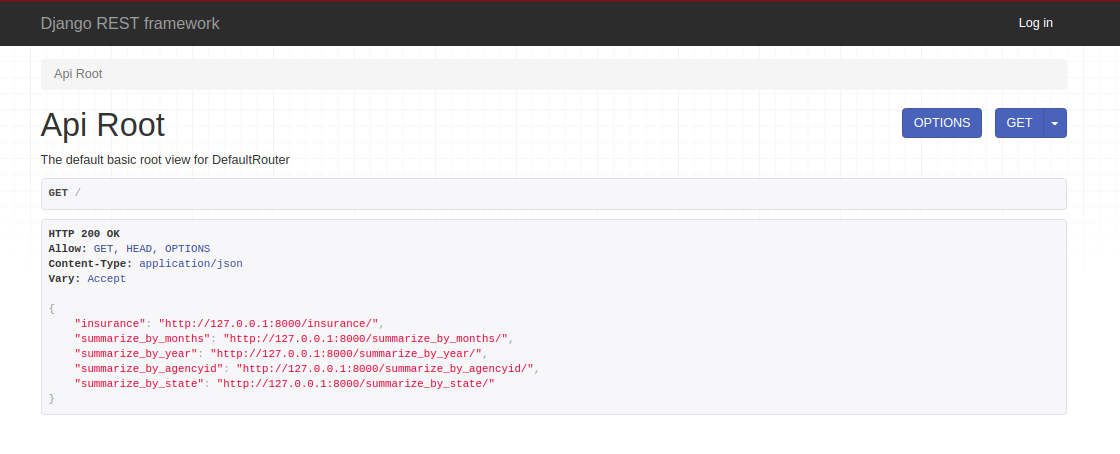

The initial page lists some basic URLs that give the user information about the insurance data. It is possible to obtain different summarized views that group all insurance information by month, year, agency id, or state.

Implementation: This part of the API performs a simple query over the entire database.

As a result, four tables are generated. These tables are populated by running python3 csv_to_dabase.py.

The first table contains information about the Company.

The other tables group information by month, year, agency id, or state.
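The grouping idea can be illustrated with a minimal, framework-free sketch in plain Python. It is not the project's actual code: the sample rows, the `summarize` helper, and the choice of summing the `earn` column are assumptions made only for illustration.

```python
from collections import defaultdict

# Hypothetical sample rows; the real dataset has more columns.
SAMPLE_ROWS = [
    {"agency_id": 3, "state_abbr": "IN", "months": 4, "year": 2009, "earn": 10.0},
    {"agency_id": 3, "state_abbr": "IN", "months": 5, "year": 2009, "earn": 20.0},
    {"agency_id": 7, "state_abbr": "OH", "months": 4, "year": 2010, "earn": 5.0},
]


def summarize(rows, key, value="earn"):
    """Sum the `value` column for each distinct value of `key`."""
    totals = defaultdict(float)
    for row in rows:
        totals[row[key]] += row[value]
    return dict(totals)


by_state = summarize(SAMPLE_ROWS, "state_abbr")
by_year = summarize(SAMPLE_ROWS, "year")
```

The same helper can be called with `months` or `agency_id` as the key to produce the other summarized views.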

For instance, retrieving insurance information for month 4 entails requesting 'search/?months=4'. In the same way, it is also possible to run queries on the fields below:

- months

- state_abbr

- agency_id

- year

Implementation: To implement this method, I take the request string (in the URL) and filter the database so that the query returns every record that matches the request.
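The filtering step described above can be sketched without Django, using only the standard library. This is an illustrative stand-in for the queryset filtering, not the project's implementation: the sample rows and the `search` helper are hypothetical, and values are compared as strings for simplicity.

```python
from urllib.parse import urlparse, parse_qs

# Hypothetical sample rows; the real dataset has more columns.
ROWS = [
    {"agency_id": 3, "state_abbr": "IN", "months": 4, "year": 2009},
    {"agency_id": 3, "state_abbr": "IN", "months": 5, "year": 2009},
    {"agency_id": 7, "state_abbr": "OH", "months": 4, "year": 2010},
]

# The fields listed above as searchable.
ALLOWED_FIELDS = ("months", "state_abbr", "agency_id", "year")


def search(url, rows=ROWS):
    """Return the rows that match every allowed field in the URL query."""
    params = parse_qs(urlparse(url).query)
    matches = rows
    for field in ALLOWED_FIELDS:
        if field in params:
            wanted = params[field][0]  # use the first value if repeated
            matches = [r for r in matches if str(r[field]) == wanted]
    return matches


april_rows = search("search/?months=4")
```

Several fields can be combined in one request, for example `search("search/?months=4&year=2010")`; parameters outside the allowed list are ignored in this sketch.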

Often, a Company needs a report on its situation within a given year. So, for every (valid) year, it is possible to obtain a report containing agency id, loss, earn, and the prod line.

To generate this report, simply use /report/?data=2009: in this case, for instance, a report containing everything that took place in 2009 will be downloaded as a CSV file. (Naturally, it is possible to obtain a report for any valid year. If there is no data for the requested year, an empty CSV will be generated.) The general form of the URL is http://127.0.0.1:8000/report/?data=year

Implementation: This part was implemented using Django Rest and the Pandas framework. I filter a queryset using the value specified in the URL. After this, all unused fields are dropped from the resulting dataframe. Lastly, the resulting dataframe is exported as a CSV file.
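The three steps above (filter by year, drop unused fields, export as CSV) can be sketched with the standard `csv` module instead of Django Rest and Pandas. This is only an approximation of the real code: the column names `loss`, `earn`, and `prod_line`, the sample rows, and the `build_report` helper are assumptions.

```python
import csv
import io

# Hypothetical sample rows; column names are assumed for illustration.
ROWS = [
    {"agency_id": 3, "year": 2009, "state_abbr": "IN",
     "loss": 10.5, "earn": 20.0, "prod_line": "CL"},
    {"agency_id": 7, "year": 2010, "state_abbr": "OH",
     "loss": 1.0, "earn": 2.0, "prod_line": "PL"},
]

# Only these columns are kept in the report.
REPORT_FIELDS = ("agency_id", "loss", "earn", "prod_line")


def build_report(rows, year):
    """Return CSV text with the report columns for one year."""
    selected = [r for r in rows if r["year"] == year]
    buffer = io.StringIO()
    # extrasaction="ignore" drops every column not in REPORT_FIELDS.
    writer = csv.DictWriter(buffer, fieldnames=REPORT_FIELDS,
                            extrasaction="ignore", lineterminator="\n")
    writer.writeheader()
    writer.writerows(selected)
    return buffer.getvalue()


report_2009 = build_report(ROWS, 2009)
```

In this sketch, a year with no data yields a CSV that contains only the header row; the real endpoint may produce a completely empty file instead.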

It is worth mentioning that, for some reason, this part of the API currently only runs properly on localhost.

The application is available at: http://britecoreproject.xnm6eb7mad.us-west-2.elasticbeanstalk.com/