This project evaluates cooperative approaches to object classification on monocular images. It renders 3D meshes into images from three different viewpoints and evaluates the impact of occlusion and sensor noise on the classification result. Ten object classes of interest for driving applications are considered: animal, bike, trash can (bin), bus, car, mailbox, motorbike, person and truck.

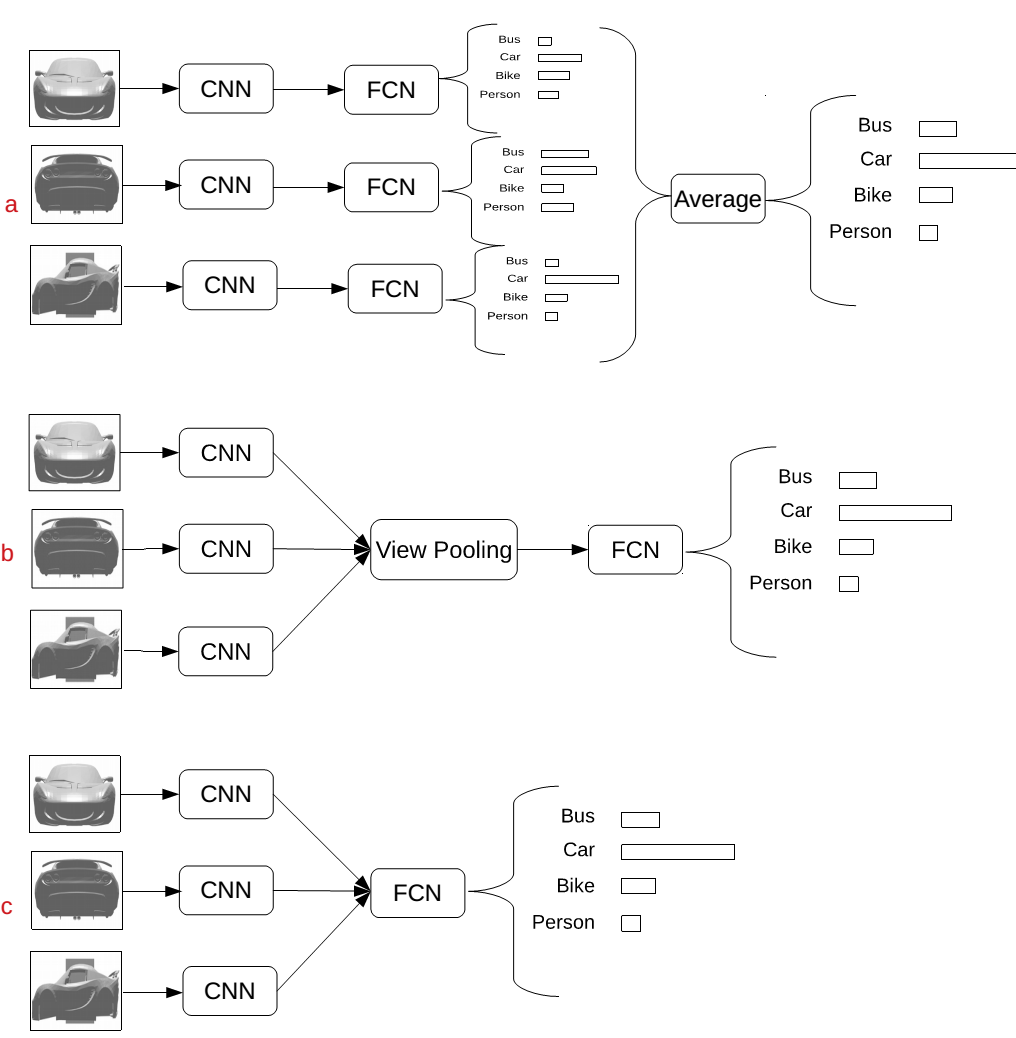

We use a pre-trained VGG-19 as the CNN backbone shared across views and devise different strategies to fuse the features from each view.

We evaluate different fusion architectures: (a) voting, (b) view-pooling, (c) concatenation (proposed). These architectures are illustrated below:
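The three fusion schemes can be sketched on per-view feature vectors as follows. This is a minimal NumPy illustration, not the repository's PyTorch implementation. The function names, the `(num_views, feature_dim)` array layout, the hypothetical `extract` callable standing in for the shared VGG-19 backbone, majority voting for (a) and element-wise max for (b) are all assumptions.

```python
import numpy as np

def extract_all_views(views, extract):
    """Apply the SAME feature extractor to every view (shared backbone).

    `extract` is a hypothetical stand-in for the pre-trained VGG-19;
    it maps one image to a 1-D feature vector.
    Returns an array of shape (num_views, feature_dim).
    """
    return np.stack([extract(v) for v in views])

def fuse_voting(view_logits):
    """(a) Voting (assumed majority vote): each view predicts a class.

    view_logits has shape (num_views, num_classes). Ties resolve to the
    lowest class index because np.argmax returns the first maximum.
    """
    votes = np.argmax(view_logits, axis=1)
    counts = np.bincount(votes, minlength=view_logits.shape[1])
    return int(np.argmax(counts))

def fuse_view_pooling(view_features):
    """(b) View-pooling (assumed max): element-wise max over views -> (feature_dim,)."""
    return view_features.max(axis=0)

def fuse_concatenation(view_features):
    """(c) Concatenation: view features end to end -> (num_views * feature_dim,)."""
    return view_features.reshape(-1)

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    images = [rng.random((8, 8)) for _ in range(3)]  # three toy "views"
    feats = extract_all_views(images, lambda img: img.mean(axis=0))  # toy extractor
    print(feats.shape)                      # (3, 8)
    print(fuse_view_pooling(feats).shape)   # (8,)
    print(fuse_concatenation(feats).shape)  # (24,)
    logits = np.array([[0.1, 0.9, 0.0], [0.2, 0.7, 0.1], [0.8, 0.1, 0.1]])
    print(fuse_voting(logits))              # 1
```

In this sketch, view-pooling yields a fused vector whose size does not depend on the number of views, while concatenation keeps track of which view each feature came from but fixes the number and order of views the classifier expects.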

The paper containing the results of this research was published at the 2019 IEEE Intelligent Vehicles Symposium (IV). Please cite it using:

```bibtex
@INPROCEEDINGS{8813811,
  author={E. {Arnold} and O. Y. {Al-Jarrah} and M. {Dianati} and S. {Fallah} and D. {Oxtoby} and A. {Mouzakitis}},
  booktitle={2019 IEEE Intelligent Vehicles Symposium (IV)},
  title={Cooperative Object Classification for Driving Applications},
  year={2019},
  volume={},
  number={},
  pages={2484-2489}
}
```

We used PyTorch 1.0.1 with CUDA toolkit 8.0. You can create and activate the exact conda environment using:

```bash
conda env create -f environment.yml -n coopclassification
conda activate coopclassification
```

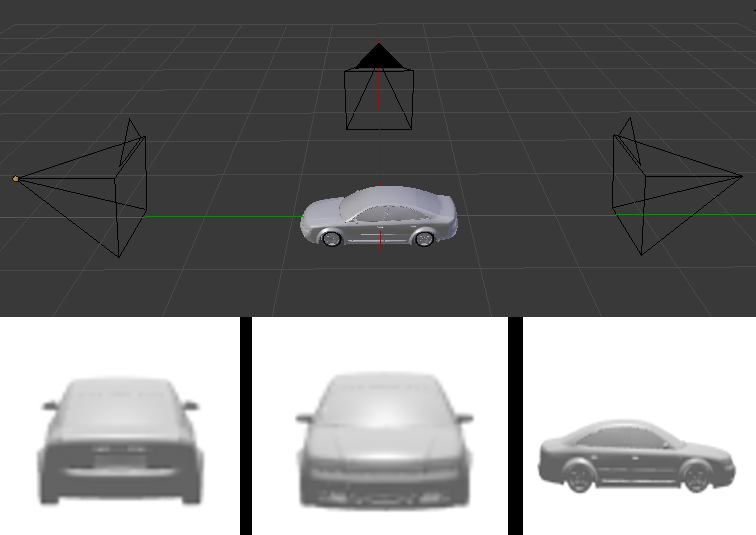

The dataset is generated from a subsample of the textureless 3D meshes provided in the ShapeNet dataset for the classes of interest. The images are rendered with the Blender render engine.

The dataset is split into training and testing folders. For testing, we render occlusion boxes around the objects to investigate the impact of occlusion.

We vary the size of the occluding box as a percentage of the object's size, resulting in multiple test folders, each named `test_OCC`, where OCC is the size of the occluding box as a percentage of the object's size.
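A small helper for iterating over the `test_OCC` folders in order of occlusion size could look like the sketch below. It is hypothetical and not part of the repository: it assumes the folders sit directly under one data directory, and it parses the OCC suffix as a plain number, so it works whether the level is written as `30` or `0.3`.

```python
from pathlib import Path

def occlusion_test_folders(data_dir):
    """Return (occlusion_level, folder) pairs for every test_OCC folder, sorted by level.

    Hypothetical helper: assumes folders named 'test_<number>' directly under
    data_dir. Folders whose suffix is not numeric are skipped.
    """
    pairs = []
    for folder in Path(data_dir).glob("test_*"):
        if not folder.is_dir():
            continue
        suffix = folder.name[len("test_"):]
        try:
            level = float(suffix)
        except ValueError:
            continue  # e.g. 'test_backup' is not an occlusion folder
        pairs.append((level, folder))
    return sorted(pairs)

if __name__ == "__main__":
    # 'data' is the assumed extraction folder for the dataset.
    for level, folder in occlusion_test_folders("data"):
        print(level, folder)
```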

For convenience, we provide the rendered dataset as a compressed file here.

Download it and extract it into the `data` folder.

The class distribution of the dataset can be inspected by running `python dataset_histogram.py`.
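The per-class counts behind such a histogram can be sketched with `collections.Counter`. This is not `dataset_histogram.py` itself: it assumes a hypothetical layout with one sub-folder per class inside a split folder and counts image files by extension, so if each sample is stored as several view images the totals count images rather than samples.

```python
from collections import Counter
from pathlib import Path

IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg"}  # assumed image formats

def class_histogram(split_dir):
    """Count image files per class, assuming one sub-folder per class (hypothetical layout)."""
    counts = Counter()
    for class_dir in sorted(Path(split_dir).iterdir()):
        if class_dir.is_dir():
            counts[class_dir.name] = sum(
                1
                for f in class_dir.rglob("*")
                if f.is_file() and f.suffix.lower() in IMAGE_SUFFIXES
            )
    return counts

if __name__ == "__main__":
    split = Path("data") / "train"  # assumed path to the training split
    if split.is_dir():
        for name, n in sorted(class_histogram(split).items()):
            print(f"{name:>10}: {n}")
```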

To train the models for each experiment, run:

```bash
python train.py
```

The trained models are stored in the `models` folder: one for each fusion scheme (voting, view-pooling and concatenation) as well as one for each individual view (single-view 0, single-view 1 and single-view 2).

To evaluate the models for experiment number X, run:

```bash
python test.py expX
```

This creates the evaluation files for the experiment in the `results/expX` folder. Each experiment is described in the next section.

After the evaluation script has run, the results can be visualised using:

```bash
python plot_results.py expX
```

To evaluate the execution time of our models, run:

```bash
python test_time.py
```
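A generic way to time a model's forward pass is sketched below. It is an illustration only and not necessarily how `test_time.py` measures time: the function name and the warm-up and repeat counts are assumptions. Warm-up calls absorb one-off costs such as memory allocation, and the median is less sensitive to outliers than the mean.

```python
import statistics
import time

def time_inference(fn, *args, warmup=5, repeats=50):
    """Median wall-clock time in seconds of fn(*args), after some warm-up calls.

    For GPU models, fn should call torch.cuda.synchronize() before returning;
    otherwise asynchronous CUDA kernels make the measured time too small.
    """
    for _ in range(warmup):
        fn(*args)
    samples = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn(*args)
        samples.append(time.perf_counter() - start)
    return statistics.median(samples)

if __name__ == "__main__":
    # Toy workload standing in for a model forward pass.
    print(f"{time_inference(sum, range(100_000)) * 1e3:.3f} ms")
```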

The training and testing functions are labeled according to the experiment conducted. A description of each experiment is listed below:

- Train and test on impairment-free data
- Train on impairment-free data, evaluate with a fixed occlusion size of 0.3
- Train on impairment-free data, evaluate with a fixed occlusion size of 0.3 and additive Gaussian noise (σ = 0.05)
- Train on impaired data, evaluate on different occlusion sizes
- Train on impaired data, evaluate on different Gaussian noise powers
- Train on impaired data, evaluate with the same occlusion level (0.3) but with random object orientations
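The additive Gaussian noise impairment used in several of these experiments can be sketched as follows. This is a minimal illustration, not the repository's code: it assumes pixel values scaled to [0, 1] and clips the noisy image back to that range, and the function name is hypothetical. The noise power corresponds to the variance σ².

```python
import numpy as np

def add_gaussian_noise(image, sigma, rng=None):
    """Add zero-mean Gaussian noise with standard deviation sigma.

    Assumes pixel values in [0, 1]; the result is clipped back to that range.
    """
    rng = np.random.default_rng() if rng is None else rng
    noisy = image + rng.normal(0.0, sigma, size=image.shape)
    return np.clip(noisy, 0.0, 1.0)

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    img = rng.random((224, 224, 3))  # toy RGB image in [0, 1]
    noisy = add_gaussian_noise(img, 0.05, rng)  # sigma = 0.05 as in the noise experiment
    print(float(np.abs(noisy - img).mean()))
```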