This repository is the official implementation of Analogist.

Analogist: Out-of-the-box Visual In-Context Learning with Image Diffusion Model.

Zheng Gu, Shiyuan Yang, Jing Liao, Jing Huo, Yang Gao.

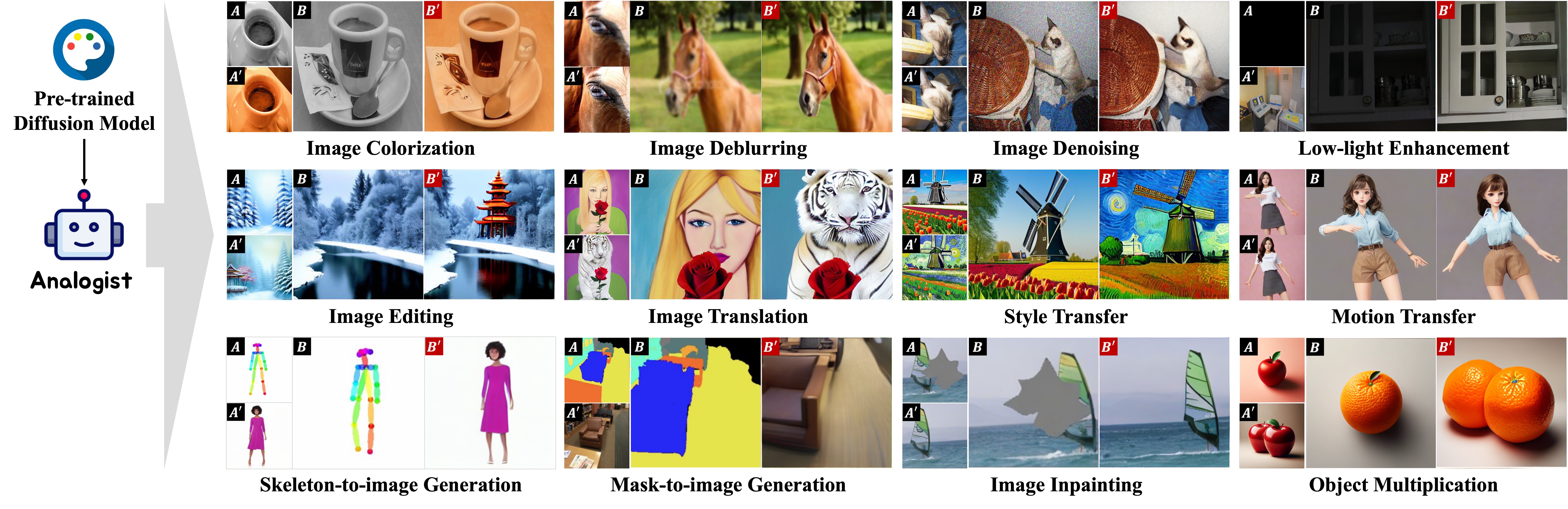

TL;DR: Analogist is a visual In-Context Learning approach that leverages pre-trained Diffusion Models for various tasks with few example pairs, without requiring fine-tuning or optimization.

First, create the conda environment:

conda create --name analogist python==3.10.0

conda activate analogist

Then install the additional requirements:

pip install -r requirements.txt

First, prepare the input grid image for visual prompting:

python visual_prompting.py \
--img_A example/colorization_raw/001.png \
--img_Ap example/colorization_raw/002.png \
--img_B example/colorization_raw/003.png \
--output_dir example/colorization_processed

This will generate two images under the output_dir folder:

- input.png: $2\times2$ grid image consisting of $A$, $A'$, and $B$.

- input_marked.png: edited grid image with marks and arrows added.
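The grid layout can be sketched as follows. This is a minimal illustration, not the code in visual_prompting.py: the helper name `grid_boxes`, the square cell size, and the placement of $A$, $A'$, $B$ in the top-left, top-right, and bottom-left cells (leaving the bottom-right cell for $B'$) are assumptions.

```python
from typing import Dict, Tuple

Box = Tuple[int, int, int, int]  # (left, top, right, bottom) in pixels


def grid_boxes(cell: int) -> Dict[str, Box]:
    """Return the pixel box of each cell in a 2x2 grid of square cells.

    Assumed layout (hypothetical, for illustration only):
        A  | A'
        ---+---
        B  | B'   <- B' is the empty cell left for the model to fill
    """
    return {
        "A": (0, 0, cell, cell),
        "A'": (cell, 0, 2 * cell, cell),
        "B": (0, cell, cell, 2 * cell),
        "B'": (cell, cell, 2 * cell, 2 * cell),
    }


# Example: 512-pixel cells give a 1024x1024 grid.
boxes = grid_boxes(512)
print(boxes["B'"])  # (512, 512, 1024, 1024)
```

Under this assumed layout, the box for $B'$ is the region an inpainting model would be asked to complete.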

It is recommended to obtain an OpenAI API key and add it to your environment:

export OPENAI_API_KEY="your-api-key-here"

Or you can add it directly in textual_prompting.py:

api_key = os.environ.get('OPENAI_API_KEY', "your-api-key-here")

Then ask GPT-4V to get the text prompts for $B'$:

python textual_prompting.py \
--image_path example/colorization_processed/input_marked.png \
--output_prompt_file example/colorization_processed/prompts.txt

You can ask GPT-4V to generate several prompts in one request. The text prompts will be saved in prompts.txt, one prompt per line.
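If you want to inspect or reuse the saved prompts, a file with one prompt per line can be read as sketched below. The helper name `load_prompts` and the demo prompts are hypothetical; skipping blank lines is an assumption, and this is not necessarily how analogist.py parses the file.

```python
import tempfile
from pathlib import Path
from typing import List


def load_prompts(path: str) -> List[str]:
    """Read a prompt file and return its non-empty lines, stripped of whitespace."""
    lines = Path(path).read_text(encoding="utf-8").splitlines()
    return [line.strip() for line in lines if line.strip()]


# Demo on a temporary file with made-up prompts (not taken from the repository).
with tempfile.TemporaryDirectory() as tmp:
    demo = Path(tmp) / "prompts.txt"
    demo.write_text("a colorized photo\n\ncolorful vivid image\n", encoding="utf-8")
    print(load_prompts(str(demo)))  # ['a colorized photo', 'colorful vivid image']
```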

If you do not have an OpenAI API key, you can try publicly available vision-language models (MiniGPT, LLaVA, etc.) with input_marked.png as the visual input and the following prompt as the textual input:

Please help me with the image analogy task: take an image A and its transformation A’, and provide any image B to produce an output B’ that is analogous to A’. Or, more succinctly: A : A’ :: B : B’. You should give me the text prompt of image B’ with no more than 5 words.
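Replies from other vision-language models may come back wrapped in quotes or run longer than requested. The sketch below shows one way to tidy such a reply before saving it to prompts.txt. The helper `clean_prompt` is hypothetical and not part of this repository; truncating to five words simply mirrors the limit stated in the prompt above.

```python
def clean_prompt(reply: str, max_words: int = 5) -> str:
    """Keep the first line of a model reply, drop surrounding quotes and a
    trailing period, and truncate to at most `max_words` words."""
    stripped = reply.strip()
    if not stripped:
        return ""
    first_line = stripped.splitlines()[0]
    text = first_line.strip().strip("\"'“”‘’").rstrip(".")
    return " ".join(text.split()[:max_words])


# Made-up reply, for illustration only.
print(clean_prompt('"Colorized photo of a mountain lake."'))
# Colorized photo of a mountain
```

Truncation can cut a phrase short, so it is worth reading the cleaned prompts before running the model.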

After getting the visual prompt and textual prompt, we can take them together to run the diffusion inpainting model.

python analogist.py \

--input_grid example/colorization_processed/input.png \

--prompt_file example/colorization_processed/prompts.txt \

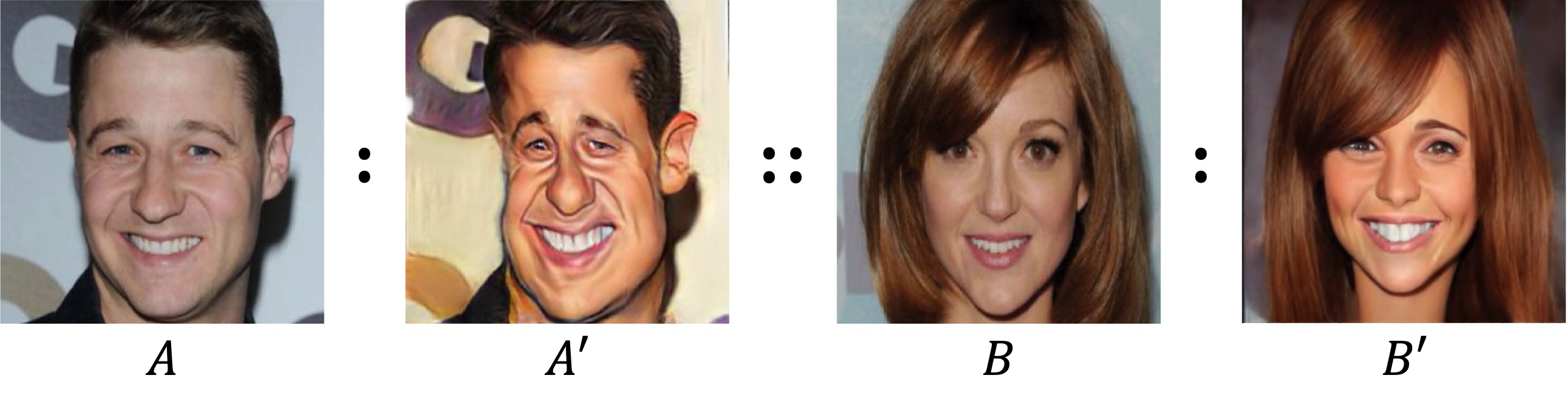

--output_dir results/example/colorizationHere is another example of translating a photo into a caricature. Note that we use the same value of hyper-parameters in the quantitative evaluation. However, it is recommended to try different combinations in specific cases for better results.

python analogist.py \
--input_grid example/caricature_processed/input.png \
--prompt_file example/caricature_processed/prompts.txt \
--output_dir results/example/caricature \
--num_images_per_prompt 1 --res 1024 \
--sac_start_layer 4 --sac_end_layer 9 \
--cam_start_layer 4 --cam_end_layer 9 \
--strength 0.96 --scale_sac 1.3 --guidance_scale 15

You can obtain the datasets from Hugging Face or OneDrive.

Please put the datasets in a datasets folder. We also provide the GPT-4V prompts that we used in our experiments. Please see the *_gpt4_out.txt files.

Analogist
├── datasets
│   ├── low_level_tasks_processed
│   │   ├── ...
│   │   ├── *_gpt4_out.txt
│   ├── manipulation_tasks_processed
│   │   ├── ...
│   │   ├── *_gpt4_out.txt
│   ├── vision_tasks_processed
│   │   ├── ...
│   │   ├── *_gpt4_out.txt
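Before running the evaluation, it can help to confirm that the datasets are where the layout above expects them. The sketch below is a hypothetical helper, not part of the repository: it looks for the three task folders under `datasets` and lists any `*_gpt4_out.txt` files inside them, searching recursively because the exact nesting under each folder is an assumption.

```python
from pathlib import Path
from typing import Dict, List

# Folder names taken from the layout above.
TASK_DIRS = [
    "low_level_tasks_processed",
    "manipulation_tasks_processed",
    "vision_tasks_processed",
]


def find_prompt_files(root: str = "datasets") -> Dict[str, List[str]]:
    """Map each task folder to the sorted *_gpt4_out.txt files found under it.

    A missing folder maps to an empty list rather than raising an error.
    """
    found: Dict[str, List[str]] = {}
    for name in TASK_DIRS:
        task_dir = Path(root) / name
        if task_dir.is_dir():
            found[name] = sorted(str(p) for p in task_dir.rglob("*_gpt4_out.txt"))
        else:
            found[name] = []
    return found


for name, files in find_prompt_files().items():
    print(f"{name}: {len(files)} prompt file(s)")
```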

Run the following script to evaluate Analogist on these tasks:

bash evaluation.sh

We calculate the CLIP direction similarity to evaluate how faithfully the transformations produced by the model adhere to the transformations contained in the given examples.

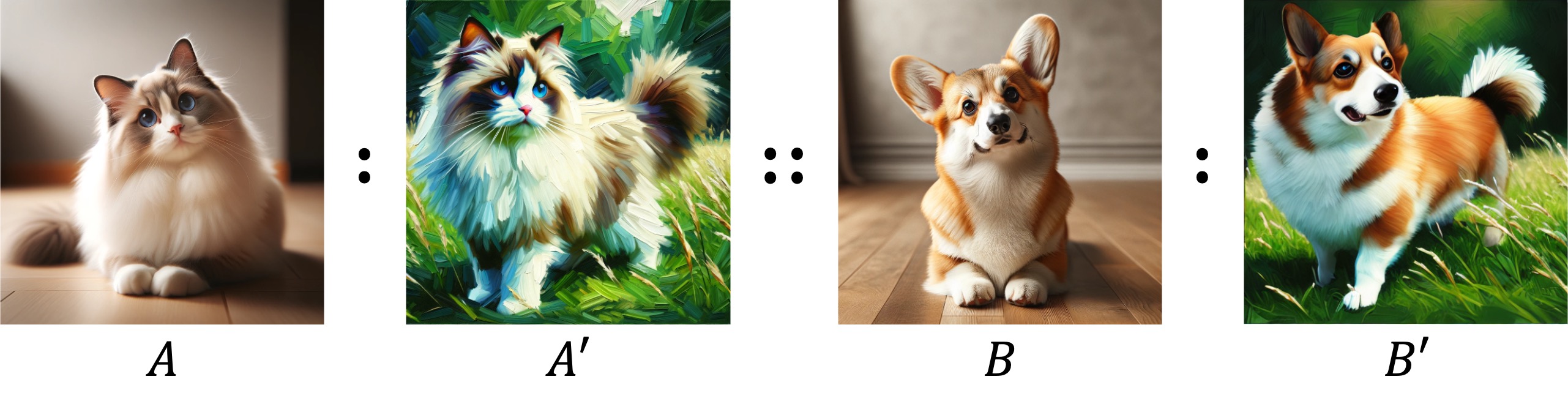
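As a rough illustration of the metric, CLIP direction similarity is commonly defined as the cosine similarity between the embedding change from $A$ to $A'$ and the embedding change from $B$ to $B'$. The sketch below assumes that definition and takes precomputed embeddings as plain lists; the function names are hypothetical, the toy vectors are not real CLIP embeddings, and calc_clip_sim.py may differ in its details.

```python
import math
from typing import List, Sequence


def _difference(u: Sequence[float], v: Sequence[float]) -> List[float]:
    """Element-wise u - v."""
    return [a - b for a, b in zip(u, v)]


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_u * norm_v)


def clip_direction_similarity(
    e_a: Sequence[float],
    e_ap: Sequence[float],
    e_b: Sequence[float],
    e_bp: Sequence[float],
) -> float:
    """cos(E(A') - E(A), E(B') - E(B)) for precomputed image embeddings."""
    return cosine(_difference(e_ap, e_a), _difference(e_bp, e_b))


# Toy 2-D vectors standing in for embeddings: both edits move in the same direction.
print(clip_direction_similarity([1.0, 0.0], [1.0, 1.0], [0.0, 0.0], [0.0, 2.0]))  # 1.0
```

A value near 1 means the edit applied to $B$ points in the same embedding direction as the example edit; values near 0 or below indicate an unrelated or opposite change.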

python calc_clip_sim.py

In this case, the only modification is to swap the positions of $A'$ and $B$:

python visual_prompting.py \
--img_A example/corgi_raw/001.png \
--img_Ap example/corgi_raw/003.png \
--img_B example/corgi_raw/002.png \
--output_dir example/corgi_processed

Everything else stays the same.

python analogist.py \
--input_grid example/corgi_processed/input.png \
--prompt_file example/corgi_processed/prompts.txt \
--output_dir results/example/corgi \
--num_images_per_prompt 1 --res 1024 \
--sac_start_layer 4 --sac_end_layer 8 \
--cam_start_layer 4 --cam_end_layer 8 \
--strength 0.8 --scale_sac 1.3 --guidance_scale 15

You may be interested in the following related papers:

- Diffusion Image Analogies (code)

- Visual Prompting via Image Inpainting (project page)

- Images Speak in Images: A Generalist Painter for In-Context Visual Learning (project page)

- In-Context Learning Unlocked for Diffusion Models (project page)

- Visual Instruction Inversion: Image Editing via Visual Prompting (project page)

- Context Diffusion: In-Context Aware Image Generation (project page)

We borrow the code of attention control from Prompt-to-Prompt and MasaCtrl.

If you find our code or paper helpful, please consider citing:

@article{gu2024analogist,
  title={Analogist: Out-of-the-box Visual In-Context Learning with Image Diffusion Model},
  author={GU, Zheng and Yang, Shiyuan and Liao, Jing and Huo, Jing and Gao, Yang},
  journal={ACM Transactions on Graphics (TOG)},
  year={2024},
}