This is the official implementation of the paper "The Role of Masking for Efficient Supervised Knowledge Distillation of Vision Transformers" (ECCV 2024).

Seungwoo Son, Jegwang Ryu, Namhoon Lee, Jaeho Lee

Pohang University of Science and Technology (POSTECH)

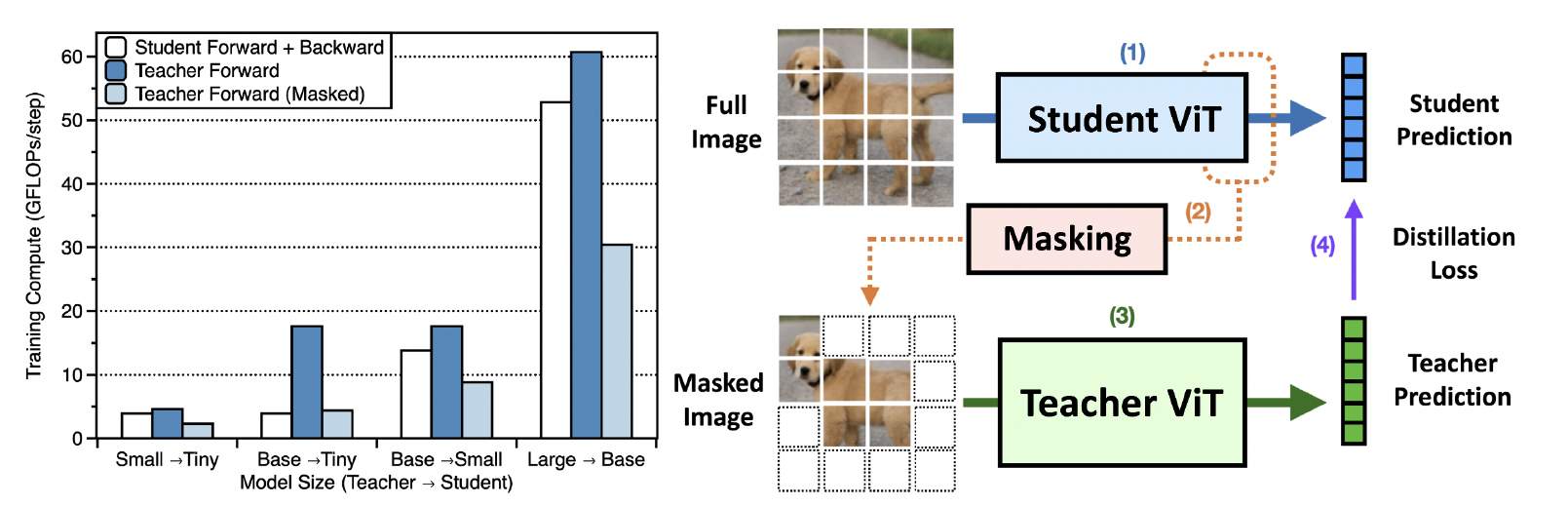

Our method, MaskedKD, reduces supervision cost by masking teacher ViT input based on student attention, maintaining student accuracy while saving computation.
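The selection step described above can be sketched roughly as follows. This is a minimal illustration and not the repository's code: it assumes each image patch has a scalar saliency score derived from the student's attention (how that score is computed is not specified here), and `select_patches` is a hypothetical helper name.

```python
def select_patches(scores, num_keep):
    """Return indices of the `num_keep` highest-scoring patches, in original order.

    `scores` is a hypothetical per-patch saliency list derived from the
    student's attention; only the selected patches would be fed to the teacher.
    """
    if not 0 < num_keep <= len(scores):
        raise ValueError("num_keep must be between 1 and len(scores)")
    # Rank patch indices from most to least attended.
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    # Keep the top ones and restore their spatial (index) order.
    return sorted(ranked[:num_keep])


toy_scores = [0.05, 0.30, 0.10, 0.40, 0.15]  # made-up values for illustration
kept = select_patches(toy_scores, 2)
print(kept)  # [1, 3]
```

Because the teacher only processes the kept patches, its forward cost shrinks with the number of patches dropped, while the student still sees the full image.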

Our implementation builds on the official DeiT and MAE code, so setup follows MAE's guidelines.

Download and extract ImageNet train and val images from http://image-net.org/. The directory structure is:

    │path/to/imagenet/
    ├──train/
    │  ├── n01440764
    │  │   ├── n01440764_10026.JPEG
    │  │   ├── n01440764_10027.JPEG
    │  │   ├── ......
    │  ├── ......
    ├──val/
    │  ├── n01440764
    │  │   ├── ILSVRC2012_val_00000293.JPEG
    │  │   ├── ILSVRC2012_val_00002138.JPEG
    │  │   ├── ......
    │  ├── ......
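Before launching training, it can help to confirm that the extracted dataset matches the layout above. The following is a small sketch using only the standard library; `count_classes` and `check_layout` are hypothetical helpers and are not part of this repository.

```python
from pathlib import Path


def count_classes(root):
    """Map each split ('train', 'val') to its number of class sub-directories."""
    root = Path(root)
    counts = {}
    for split in ("train", "val"):
        split_dir = root / split
        if not split_dir.is_dir():
            raise FileNotFoundError(f"missing split directory: {split_dir}")
        # Each class (e.g. n01440764) is expected to be its own folder.
        counts[split] = sum(1 for p in split_dir.iterdir() if p.is_dir())
    return counts


def check_layout(root):
    """Return True if train/ and val/ are non-empty and have the same number of classes."""
    counts = count_classes(root)
    return counts["train"] > 0 and counts["train"] == counts["val"]
```

For example, `check_layout("/path/to/imagenet/")` should return `True` once both splits are extracted into per-class folders.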

To train a DeiT-Small student with a DeiT-Base teacher, run:

    python -m torch.distributed.launch --nproc_per_node=8 --use_env main.py \
        --model deit_small_patch16_224 --teacher_model deit_base --epochs 300 \
        --batch-size 128 --data-path /path/to/ILSVRC2012/ --distillation-type soft \
        --distillation-alpha 0.5 --distillation-tau 1 --input-size 224 --maskedkd --len_num_keep 98 \
        --output_dir /path/to/output_dir/

This repo is based on DeiT, MAE and pytorch-image-models.
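As a quick sanity check on the training command above, the arithmetic behind its flags can be worked out as below. This sketch makes two assumptions that are not confirmed here: that `--batch-size` is the per-process batch size (as in DeiT's training script), and that `--len_num_keep` is the number of patches the teacher keeps. Both function names are hypothetical.

```python
def effective_batch_size(per_process_batch, num_processes):
    """Total images per optimizer step across all processes."""
    return per_process_batch * num_processes


def keep_ratio(num_keep, input_size, patch_size):
    """Fraction of patches kept, for a square input split into square patches."""
    side = input_size // patch_size
    return num_keep / (side * side)


# --batch-size 128 with --nproc_per_node=8
print(effective_batch_size(128, 8))  # 1024
# --len_num_keep 98 with --input-size 224 and 16x16 patches (14 * 14 = 196 patches)
print(keep_ratio(98, 224, 16))       # 0.5
```

Under these assumptions, the example configuration feeds the teacher half of each image's patches.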