Aligning our GitOps tooling with our team structure is a tricky job, especially when it comes to RBAC, permissions and some of the defaults that the RedHat gitops-operator sets for us. This README discusses how we can use the redhat-cop/gitops-operator chart to better align our deployments with our teams.

- ArgoCD and Team Topologies

- Table of Contents

- Introduction

- Background on OpenShift GitOps Operator and RBAC

- redhat-cop/gitops-operator Helm Chart

- Common Patterns of Deployment

- Helm Setup & Bootstrap Projects

- Cluster ArgoCD for Everyone

- Don't deploy Platform ArgoCD, Cluster ArgoCD per Team

- Platform ArgoCD, Namespaced ArgoCD per Team

- Don't deploy Platform ArgoCD, Namespaced ArgoCD per Team

- One ArgoCD To Rule Them All

- Using Custom RBAC for Team ArgoCD

- Namespaced ArgoCD and Creating projects/namespaces

- Cleanup

In Team Topologies the Product Team is a stream-aligned team that focuses on the application(s) that make up a business service.

The Platform Team is usually composed of Infra-Ops-SRE-Data folks that help provision infrastructure in a self-service fashion for the varying product teams.

The interaction between these two teams is commonly an API; that is, the platform team provides services that the product teams can consume in a self-service manner.

ArgoCD is a commonly used GitOps tool that allows teams to declaratively manage the deployments of their applications, middleware and tooling from source code.

There are two service accounts that matter with ArgoCD (there are others for dex and redis, which we don't need to focus on for this discussion):

- the application-controller service account - this is the backend controller service account

- the argocd-server service account - this is the UI service account

The cluster connection settings in ArgoCD are controlled by the RedHat gitops-operator. By default the operator will provision a single cluster scoped ArgoCD instance in the openshift-gitops namespace.

The RBAC associated with the default instance does not give you cluster-admin, although the privileges are pretty wide.

Cluster Scoped ArgoCD

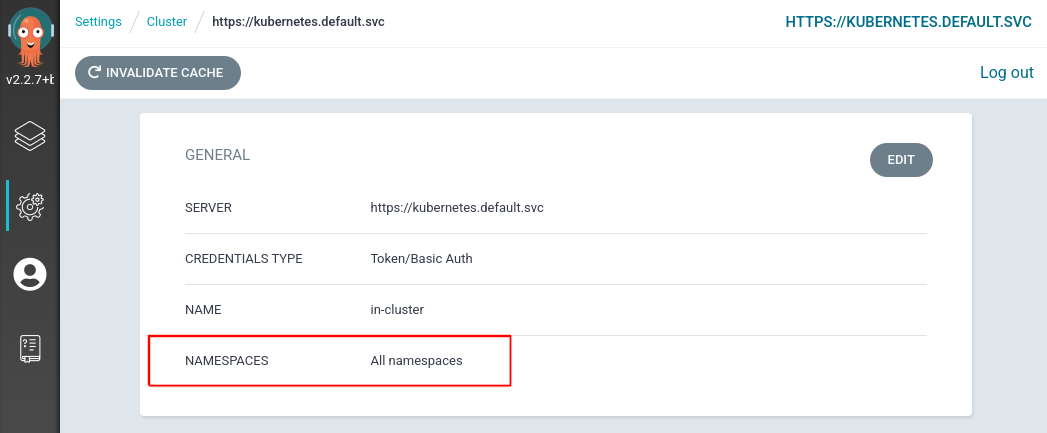

You can tell if your ArgoCD instance is able to see all namespaces in the cluster by examining the default connection under Settings > Cluster.

Namespaced Scoped ArgoCD

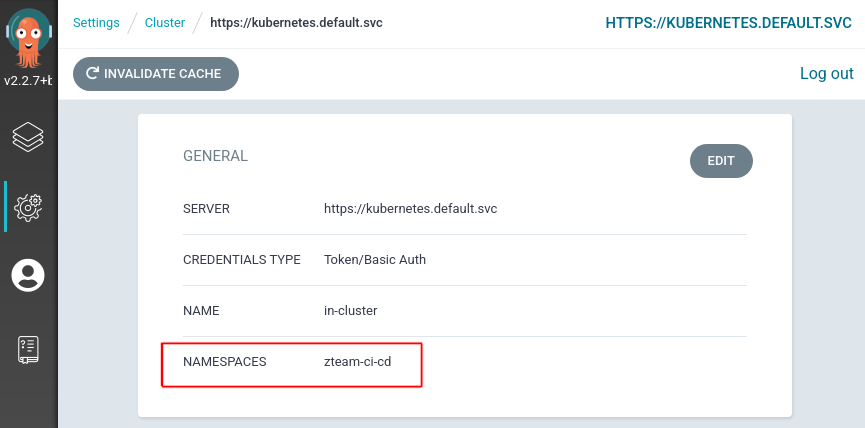

As a normal team user (a project admin), when you deploy ArgoCD using the operator, your cluster connection is namespaced. This means that your ArgoCD can only control objects in the namespaces named in the comma-separated NAMESPACES list on the cluster connection. It cannot access cluster-scoped resources; for example, it cannot create projects or namespaces. The zteam-ci-cd ArgoCD instance pictured here is in namespaced mode.
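The scoping rule can be sketched as a small shell function. This is only an illustration of the idea, not how the operator evaluates it: it assumes that an empty NAMESPACES value means "all namespaces" (cluster scoped), and `zteam-dev` is a hypothetical extra namespace used for the example.

```shell
#!/bin/sh
# Sketch: decide whether an ArgoCD cluster connection covers a namespace.
# Assumption: an empty NAMESPACES value means "all namespaces" (cluster scoped);
# otherwise only the comma separated entries are managed.

# can_manage NAMESPACES TARGET -> exit status 0 if TARGET is covered
can_manage() {
  namespaces="$1"
  target="$2"
  # Empty list: treat the connection as cluster scoped.
  if [ -z "$namespaces" ]; then
    return 0
  fi
  # Wrap both sides in commas so that "team-ci-cd" does not match "xteam-ci-cd".
  case ",$namespaces," in
    *",$target,"*) return 0 ;;
    *) return 1 ;;
  esac
}

# Example: a namespaced connection (zteam-dev is a hypothetical namespace).
if can_manage "zteam-ci-cd,zteam-dev" "zteam-dev"; then
  echo "zteam-dev: managed"
fi
if ! can_manage "zteam-ci-cd,zteam-dev" "yteam-ci-cd"; then
  echo "yteam-ci-cd: not managed"
fi
```

Wrapping the list in commas keeps the match exact, so a namespace whose name is a substring of another is not treated as covered.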

We have created a helm chart that allows finer grained control of our team based ArgoCD instances. In particular, it allows us to configure the gitops-operator Subscription so that we may deploy cluster scoped team instances of ArgoCD as well as provide finer grained RBAC control e.g. full cluster-admin, or just a subset of Cluster Role rules. We can control the cluster connection mode and whether to deploy a default ArgoCD instance by manipulating the environment variables set on the gitops-operator Subscription:

    config:
      env:
        # don't deploy the default ArgoCD instance in the openshift-gitops project
        - name: DISABLE_DEFAULT_ARGOCD_INSTANCE
          value: 'true'
        # ArgoCD in these namespaces will have cluster scoped connections
        - name: ARGOCD_CLUSTER_CONFIG_NAMESPACES
          value: 'xteam-ci-cd,yteam-ci-cd,zteam-ci-cd'

Let's examine some common patterns of deployment with different trade-offs in terms of cluster permissions.

In the examples that follow we use the following helm chart repository. Add it to your local config by running:

    # add the redhat-cop repository
    helm repo add redhat-cop https://redhat-cop.github.io/helm-charts

There are four generic teams used in the examples:

- ops-sre - this is the platform team. They operate at cluster scope and use the ArgoCD in the openshift-gitops namespace.

- xteam, yteam, zteam - these are our stream aligned product teams. They operate at cluster or namespaced scope and use ArgoCD in their <team>-ci-cd namespace.

There are many ways to create the team projects. oc new-project works well! Here we make use of the bootstrap-project chart in the redhat-cop repository, which manages the role bindings and namespaces for our teams. In a normal setup the Groups would come from LDAP/OAuth providers and be provisioned on OpenShift already.

Let's create the project configurations:

    # create namespaces using bootstrap-project chart
    cat <<EOF > /tmp/bootstrap-values.yaml
    namespaces:
    - name: xteam-ci-cd
      operatorgroup: true
      bindings:
      - name: labs-devs
        kind: Group
        role: edit
      - name: labs-admins
        kind: Group
        role: admin
      namespace: xteam-ci-cd
    - name: yteam-ci-cd
      operatorgroup: true
      bindings:
      - name: labs-devs
        kind: Group
        role: edit
      - name: labs-admins
        kind: Group
        role: admin
      namespace: yteam-ci-cd
    - name: zteam-ci-cd
      operatorgroup: true
      bindings:
      - name: labs-devs
        kind: Group
        role: edit
      - name: labs-admins
        kind: Group
        role: admin
      namespace: zteam-ci-cd
    serviceaccounts:
    EOF

There are multiple ways to deploy this helm chart. We can easily install it in our cluster (just do this for now):

    # bootstrap our projects
    helm upgrade --install bootstrap \
      redhat-cop/bootstrap-project \
      -f /tmp/bootstrap-values.yaml \
      --namespace argocd --create-namespace

Alternatively, we could use a cluster-scoped ArgoCD instance and an Application, if ArgoCD already existed (a chicken and egg situation here):

    # bootstrap namespaces using ops-sre argo instance
    cat <<EOF | oc apply -n openshift-gitops -f-
    apiVersion: argoproj.io/v1alpha1
    kind: Application
    metadata:
      finalizers:
      - resources-finalizer.argocd.argoproj.io
      name: bootstrap
    spec:
      destination:
        namespace: gitops-operator
        server: https://kubernetes.default.svc
      project: default
      source:
        repoURL: https://redhat-cop.github.io/helm-charts
        targetRevision: 1.0.1
        chart: bootstrap-project
        helm:
          # the sed indents the values so they nest under this block scalar
          values: |-
    $(sed 's/^/        /' /tmp/bootstrap-values.yaml)
      syncPolicy:
        automated:
          prune: true
          selfHeal: true
        syncOptions:
        - Validate=true
    EOF

NOTE: You must deploy these namespaces first for each of the examples below that deploy team ArgoCD instances.

It may also be desirable for the teams to own the creation of namespaces. That is not covered here, but it is easily done by adapting the methods above.
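The three namespace entries in the bootstrap values file differ only by team name, so they can also be generated. A minimal sketch, assuming every team uses the same `labs-devs` and `labs-admins` groups as above; the function name `bootstrap_values` is made up for this example.

```shell
#!/bin/sh
# Sketch: generate the bootstrap-project values for any list of teams.
# The group names and roles mirror the values file shown earlier.

# bootstrap_values TEAM... -> prints values YAML on stdout
bootstrap_values() {
  echo "namespaces:"
  for team in "$@"; do
    cat <<EOF
- name: ${team}-ci-cd
  operatorgroup: true
  bindings:
  - name: labs-devs
    kind: Group
    role: edit
  - name: labs-admins
    kind: Group
    role: admin
  namespace: ${team}-ci-cd
EOF
  done
  echo "serviceaccounts:"
}

# Write the same three team entries used throughout this README.
bootstrap_values xteam yteam zteam > "${TMPDIR:-/tmp}/bootstrap-values.yaml"
```

Adding a team then becomes a one-word change to the last line rather than a copy of a twelve-line block.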

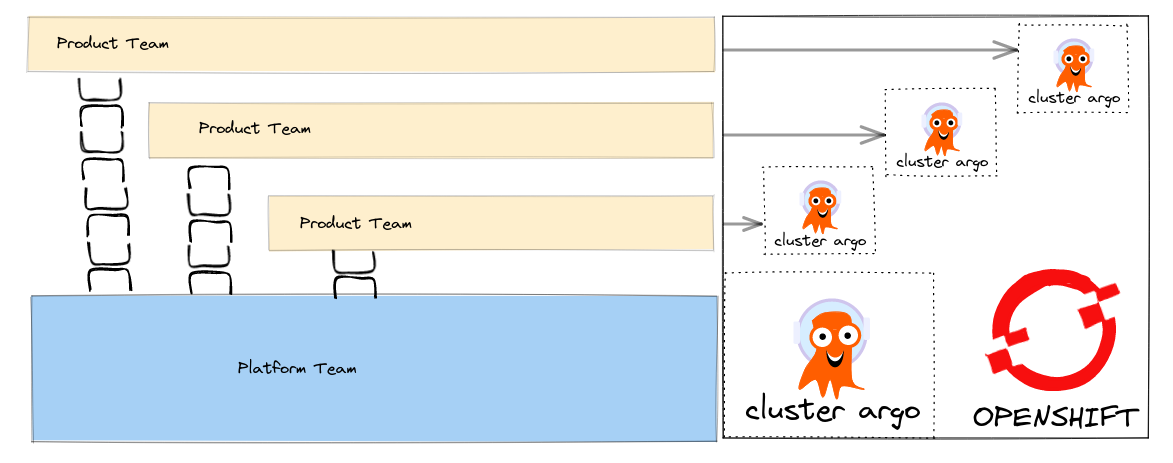

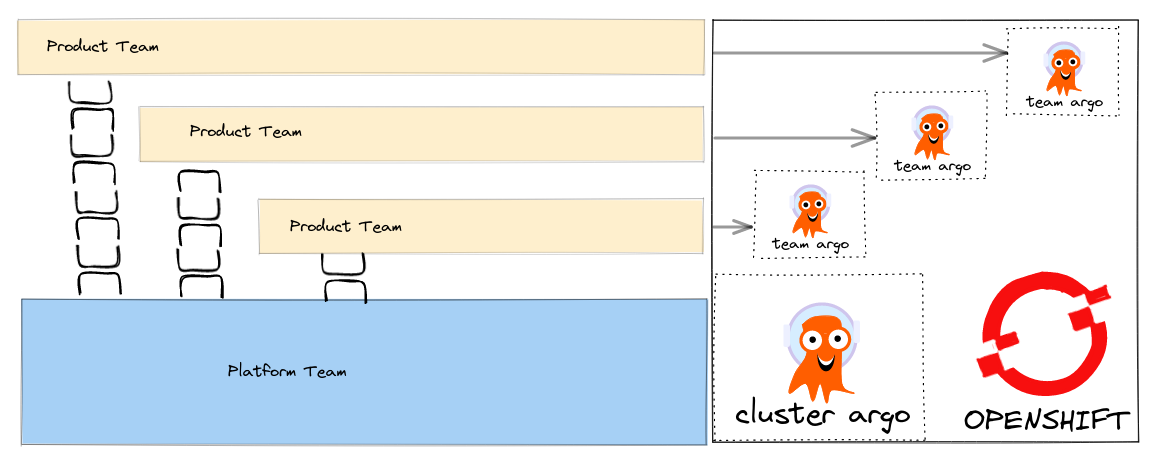

In the "Cluster ArgoCD for Everyone" pattern there is a cluster scoped ArgoCD instance that the Platform Team controls. The helm chart is used to deploy:

- The RedHat GitOps Operator (cluster scoped)

- An Ops-SRE (cluster scoped) ArgoCD instance

- Team (cluster scoped) ArgoCD instances

Individual teams can then use their cluster scoped ArgoCD to deploy their applications, middleware and tooling including namespaces and operators.

This pattern is useful when:

- High Trust - Teams are trusted at the cluster scope

- A level of isolation is required, hence each team gets its own ArgoCD instance

Deploy the team bootstrap namespaces as above.

Using a cluster-admin user, use helm and the redhat-cop/gitops-operator chart to deploy an ops-sre (cluster scoped) ArgoCD, and then deploy three team (cluster scoped) ArgoCD instances.

    # deploy the argocd instances
    helm upgrade --install argocd \
      redhat-cop/gitops-operator \
      --set operator.disableDefaultArgoCD=false \
      --set namespaces={'xteam-ci-cd,yteam-ci-cd,zteam-ci-cd'} \
      --namespace argocd --create-namespace

Once deployed we should see four argocd instances:

    oc get argocd --all-namespaces
    NAMESPACE          NAME               AGE
    openshift-gitops   openshift-gitops   3m
    xteam-ci-cd        argocd             3m
    yteam-ci-cd        argocd             3m
    zteam-ci-cd        argocd             3m

All the ArgoCD cluster connections are cluster scoped.
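Checking the instance list by eye gets tedious when switching between patterns. The sketch below compares the `oc get argocd --all-namespaces` output against the namespaces we expect. It assumes the three-column layout shown above, and `missing_instances` is a hypothetical helper name.

```shell
#!/bin/sh
# Sketch: compare the namespaces listed by `oc get argocd --all-namespaces`
# against the namespaces we expect to hold an ArgoCD instance.

# missing_instances "EXPECTED_NS ..." < oc-output -> prints missing namespaces
missing_instances() {
  expected="$1"
  # Column 1 is NAMESPACE; skip the header row.
  found="$(awk 'NR > 1 { print $1 }')"
  for ns in $expected; do
    # -x matches the whole line, -F treats the name as a fixed string.
    echo "$found" | grep -qxF "$ns" || echo "$ns"
  done
}

# Example with the output shown above; on a live cluster, pipe the real
# command instead: oc get argocd --all-namespaces | missing_instances "..."
missing_instances "openshift-gitops xteam-ci-cd yteam-ci-cd zteam-ci-cd" <<EOF
NAMESPACE          NAME               AGE
openshift-gitops   openshift-gitops   3m
xteam-ci-cd        argocd             3m
yteam-ci-cd        argocd             3m
zteam-ci-cd        argocd             3m
EOF
```

The function prints nothing when every expected namespace has an instance, so empty output means the deployment matches the pattern.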

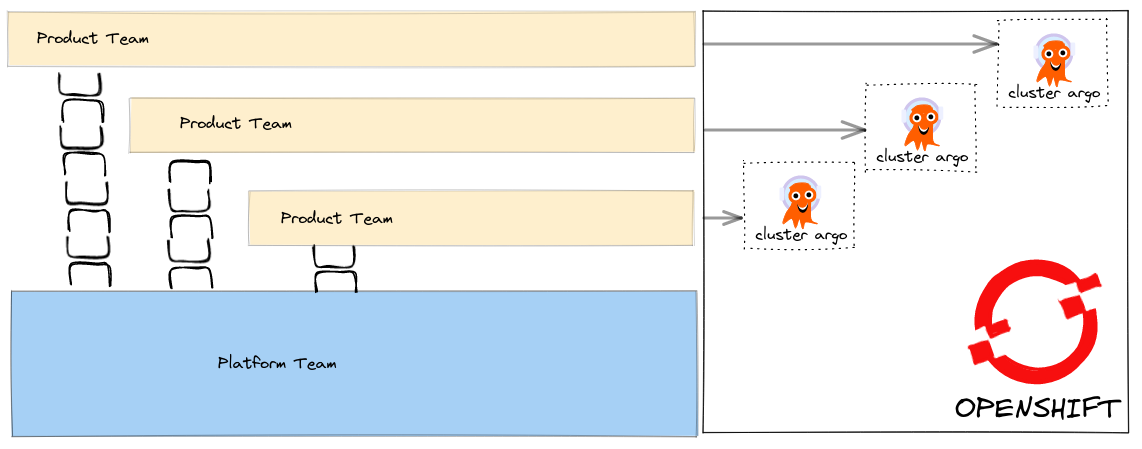

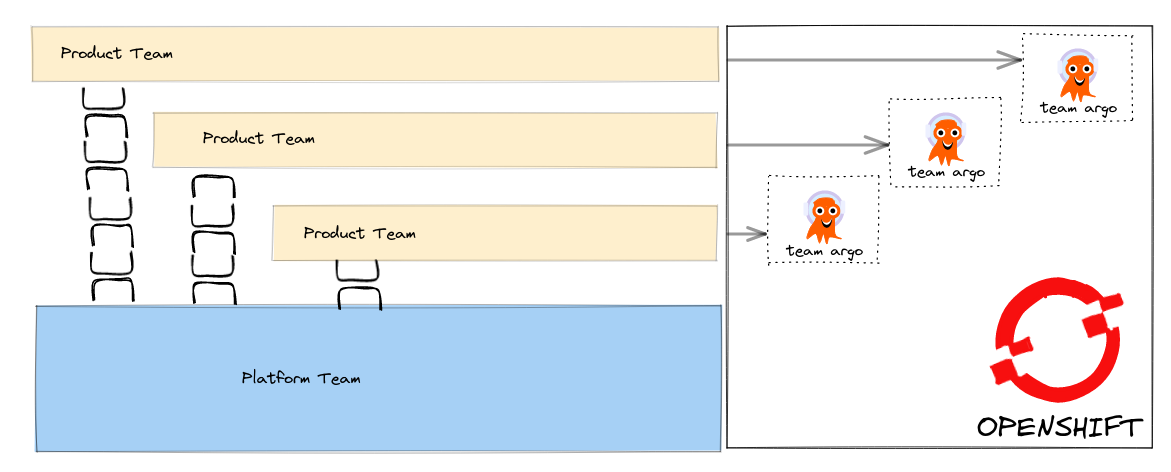

In the "Don't deploy Platform ArgoCD, Cluster ArgoCD per Team" pattern the ops-sre instance is not deployed.

We use a helm chart that deploys:

- The RedHat GitOps Operator (cluster scoped)

- Team (cluster scoped) ArgoCD instances

Individual teams can use their cluster scoped ArgoCD to deploy their applications, middleware and tooling including namespaces and operators.

This pattern is useful when:

- High Trust - Teams are trusted at the cluster scope

- A level of isolation is required, hence each team gets its own ArgoCD instance

- Teams can self-administer their ArgoCD with little assistance from Ops-SRE

Deploy the team bootstrap namespaces as above.

Using a cluster-admin user, use helm and the redhat-cop/gitops-operator chart to deploy three team (cluster scoped) ArgoCD instances.

    # deploy the operator, and team instances
    helm upgrade --install teams-argocd \
      redhat-cop/gitops-operator \
      --set namespaces={"xteam-ci-cd,yteam-ci-cd,zteam-ci-cd"} \
      --namespace argocd

Once deployed we should see three argocd instances:

    oc get argocd --all-namespaces
    NAMESPACE     NAME     AGE
    xteam-ci-cd   argocd   3m
    yteam-ci-cd   argocd   3m
    zteam-ci-cd   argocd   3m

The team ArgoCD cluster connections are cluster scoped.

The "Platform ArgoCD, Namespaced ArgoCD per Team" pattern is the default you get out of the box with OpenShift.

In this pattern there is a cluster scoped ArgoCD instance that the Platform Team controls. The helm chart is used to deploy:

- The RedHat GitOps Operator (cluster scoped)

- An Ops-SRE (cluster scoped) ArgoCD instance

- Team (namespace scoped) ArgoCD instances

Individual teams can then use their namespace scoped ArgoCD to deploy their applications, middleware and tooling, but cannot deploy cluster-scoped resources e.g. teams cannot deploy namespaces or projects or operators as they require cluster scoped privilege.

This pattern is useful when:

- Medium Trust - Teams are trusted to admin their own namespaces which are provisioned for them by the platform team.

- A level of isolation is required, hence each team gets its own ArgoCD instance

Deploy the team bootstrap namespaces as above.

Using a cluster-admin user, use helm and the redhat-cop/gitops-operator chart to deploy an ops-sre (cluster scoped) ArgoCD, and then deploy three team (namespace scoped) ArgoCD instances.

    # deploy the operator, ops-sre instance, namespaced scoped team instances
    helm upgrade --install argocd \
      redhat-cop/gitops-operator \
      --set operator.disableDefaultArgoCD=false \
      --set namespaces={"xteam-ci-cd,yteam-ci-cd,zteam-ci-cd"} \
      --set teamInstancesAreClusterScoped=false \
      --namespace argocd --create-namespace

Once deployed we should see four argocd instances:

    oc get argocd --all-namespaces
    NAMESPACE          NAME               AGE
    openshift-gitops   openshift-gitops   2m35s
    xteam-ci-cd        argocd             2m35s
    yteam-ci-cd        argocd             2m35s
    zteam-ci-cd        argocd             2m35s

The team ArgoCD cluster connections are namespace scoped.
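The same settings can be kept in a values file, which is easier to track in Git than a string of `--set` flags. A minimal sketch: the keys are the ones used on the command line in this README, while the file name is hypothetical. The helm command is printed rather than executed, so the snippet is safe to run without a cluster.

```shell
#!/bin/sh
# Sketch: the --set flags above expressed as a values file.
# Key names are the ones used on the command line in this README.
values_file="${TMPDIR:-/tmp}/namespaced-teams-values.yaml"   # hypothetical file name

cat <<EOF > "$values_file"
operator:
  disableDefaultArgoCD: false     # keep the ops-sre instance
namespaces:
  - xteam-ci-cd
  - yteam-ci-cd
  - zteam-ci-cd
teamInstancesAreClusterScoped: false   # team instances are namespace scoped
EOF

# Print the equivalent helm command rather than running it.
echo "helm upgrade --install argocd redhat-cop/gitops-operator -f $values_file --namespace argocd --create-namespace"
```

Helm reads `--set namespaces={a,b,c}` as a list, which is why the values file uses a YAML sequence for the same key.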

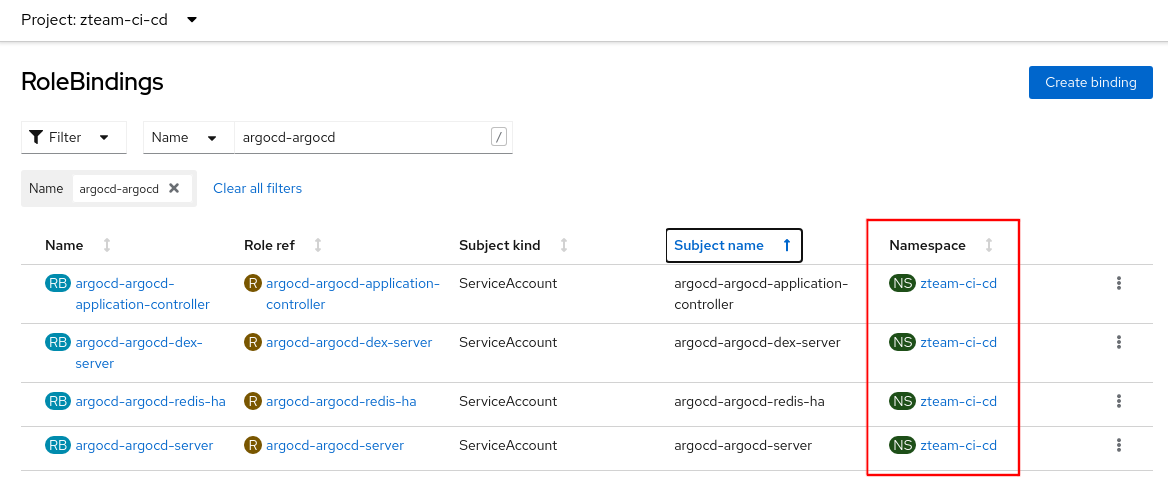

For a given team ArgoCD, what we see are all of the namespace Role Bindings applied by the gitops-operator (see the accompanying image).

In the "Don't deploy Platform ArgoCD, Namespaced ArgoCD per Team" pattern the ops-sre instance is not deployed.

We use a helm chart that deploys:

- The RedHat GitOps Operator (cluster scoped)

- Team (namespace scoped) ArgoCD instances

Individual teams can use their namespace scoped ArgoCD to deploy their applications, middleware and tooling but cannot deploy cluster-scoped resources e.g. teams cannot deploy namespaces or projects or operators as they require cluster scoped privilege.

This pattern is useful when:

- Medium Trust - Teams are trusted to admin their own namespaces which are provisioned for them by the platform team.

- A level of isolation is required, hence each team gets its own ArgoCD instance

Deploy the team bootstrap namespaces as above.

Using a cluster-admin user, use helm and the redhat-cop/gitops-operator chart to deploy three team (namespace scoped) ArgoCD instances.

    # deploy the operator, namespaced scoped team instances
    helm upgrade --install argocd \
      redhat-cop/gitops-operator \
      --set namespaces={"xteam-ci-cd,yteam-ci-cd,zteam-ci-cd"} \
      --set teamInstancesAreClusterScoped=false \
      --namespace argocd --create-namespace

Once deployed we should see three argocd instances:

    oc get argocd --all-namespaces
    NAMESPACE     NAME     AGE
    xteam-ci-cd   argocd   17s
    yteam-ci-cd   argocd   17s
    zteam-ci-cd   argocd   17s

The team ArgoCD cluster connections are namespace scoped.

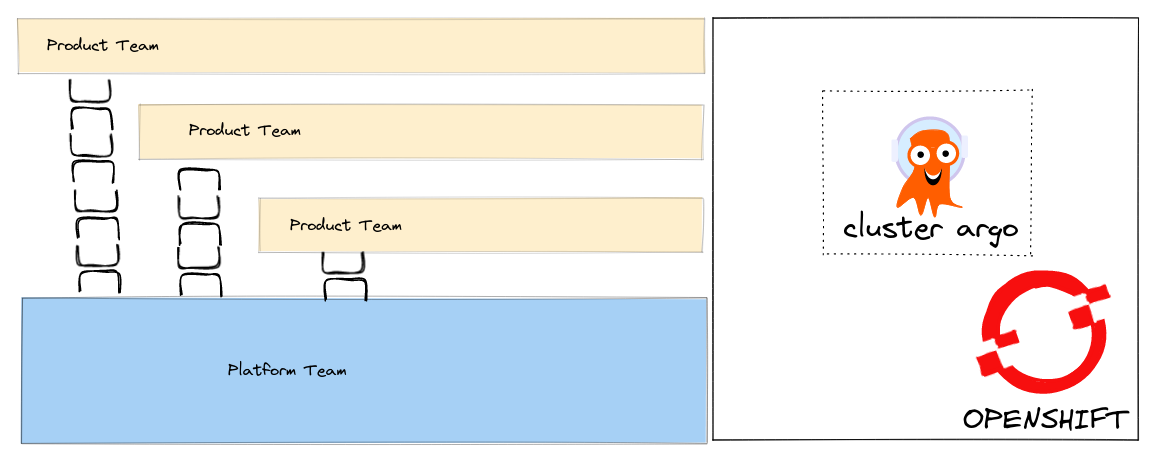

In the "One ArgoCD To Rule Them All" pattern the ops-sre instance is the only one deployed.

We use a helm chart that deploys:

- The RedHat GitOps Operator (cluster scoped)

- An Ops-SRE (cluster scoped) ArgoCD instance

Everyone will need to be given edit access to the openshift-gitops namespace, e.g. as a cluster-admin user:

    # allow the labs-admins group edit access to the openshift-gitops namespace
    oc adm policy add-role-to-group edit labs-admins -n openshift-gitops

This pattern is useful when:

- High Trust - everyone shares one privileged ArgoCD instance.

- No isolation required.

Using a cluster-admin user, use helm and the redhat-cop/gitops-operator chart to deploy one (cluster scoped) ArgoCD instance.

    # deploy the operator, cluster scoped ops-sre instance
    helm upgrade --install argocd \
      redhat-cop/gitops-operator \
      --set namespaces= \
      --set operator.disableDefaultArgoCD=false \
      --namespace argocd --create-namespace

Once deployed we should see one argocd instance:

    oc get argocd --all-namespaces
    NAMESPACE          NAME               AGE
    openshift-gitops   openshift-gitops   20s

The ArgoCD cluster connection is cluster scoped.

We can also customize the RBAC for our ArgoCD team instances. As a default, the helm chart currently sets up a cluster-admin role for the controller:

    rules:
    - apiGroups:
      - '*'
      resources:
      - '*'
      verbs:
      - '*'
    - nonResourceURLs:
      - '*'
      verbs:
      - '*'

Let's imagine we want to apply a slightly more restricted RBAC for the team ArgoCD instances, e.g. the same RBAC as the current default ClusterRole rules that get applied for the ops-sre ArgoCD instance.

We have placed these Role rules in two files in this repo:

- controller-cluster-rbac-rules.yaml - role rules for the backend controller service account

- server-cluster-rbac-rules.yaml - role rules for the UI service account

Let's create a custom values file for our helm chart:

    cat <<EOF > /tmp/custom-values.yaml
    namespaces:
      - xteam-ci-cd
      - yteam-ci-cd
      - zteam-ci-cd
    clusterRoleRulesServer:
    $(sed 's/^/  /' server-cluster-rbac-rules.yaml)
    clusterRoleRulesController:
    $(sed 's/^/  /' controller-cluster-rbac-rules.yaml)
    EOF

Deploy the team bootstrap namespaces as above.
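The `sed` substitutions in the custom values file indent each rules file so that it nests under its YAML key. A minimal sketch of the mechanics follows; the rules shown are hypothetical and are not the contents of the two files in this repo.

```shell
#!/bin/sh
# Sketch: how the sed substitution nests a rules file under a YAML key.
# The rules below are hypothetical and only illustrate the mechanics.
workdir="$(mktemp -d)"

cat <<'EOF' > "$workdir/example-rules.yaml"
- apiGroups:
  - ''
  resources:
  - namespaces
  verbs:
  - get
  - list
  - watch
EOF

# Prefix every line with two spaces so the list sits under the key.
{
  echo "clusterRoleRulesController:"
  sed 's/^/  /' "$workdir/example-rules.yaml"
} > "$workdir/custom-values.yaml"

cat "$workdir/custom-values.yaml"
```

Because every line receives the same prefix, the relative indentation inside the rules file is preserved and the result remains valid YAML.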

Using a cluster-admin user, use helm and the redhat-cop/gitops-operator chart to deploy three team (cluster scoped) ArgoCD instances.

# deploy the operator, namespaced scoped team instances

helm upgrade --install argocd \

redhat-cop/gitops-operator \

-f /tmp/custom-values.yaml \

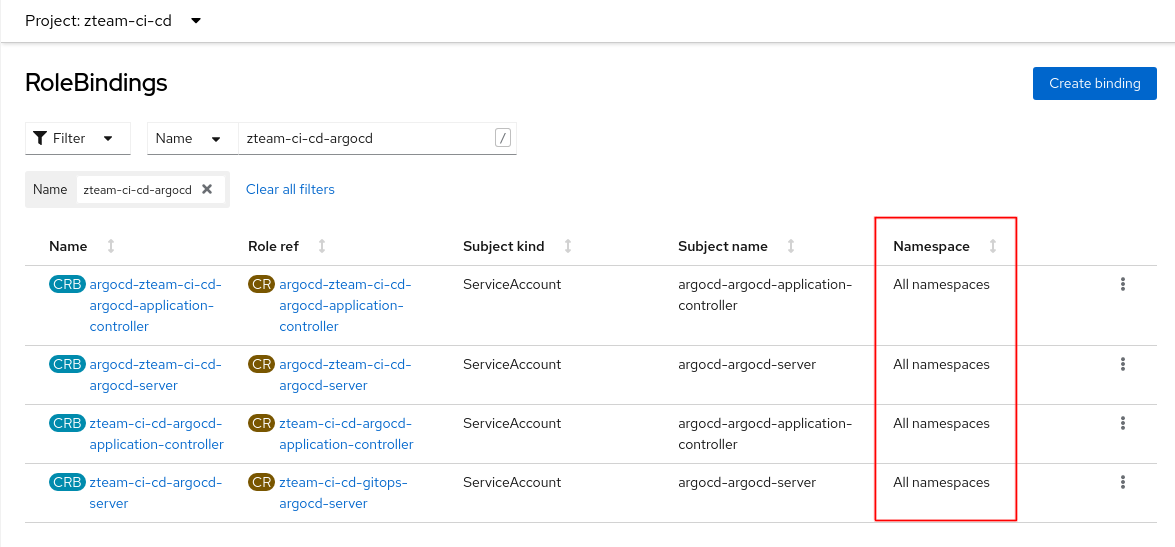

--namespace argocd --create-namespaceFor a given team ArgoCD (cluster scoped), what we are the Cluster Role Binding's applied - two from the gitops-operator starting with the name argocd-* and two from the helm chart starting with the name zteam-ci-cd-* (i have not show the redis and dex namespaces Role Bindings from the gitops-operator):

OpenShift by default allows normal authenticated users to create projects in a self-service manner. This is advantageous for all sorts of reasons related to Continuous Delivery. It is not possible to allow namespaced ArgoCD these same permissions today using the gitops-operator, although there is an upstream enhancement request for it.

To quickly remove everything between exercise deployments, I use this to nuke it from orbit:

    helm delete argocd -n argocd
    helm delete bootstrap -n argocd
    oc delete csv openshift-gitops-operator.v1.4.3 -n openshift-operators
    oc patch argocds.argoproj.io argocd -n xteam-ci-cd --type='json' -p='[{"op": "remove" , "path": "/metadata/finalizers" }]'
    oc patch argocds.argoproj.io argocd -n yteam-ci-cd --type='json' -p='[{"op": "remove" , "path": "/metadata/finalizers" }]'
    oc patch argocds.argoproj.io argocd -n zteam-ci-cd --type='json' -p='[{"op": "remove" , "path": "/metadata/finalizers" }]'
    oc patch argocds.argoproj.io openshift-gitops -n openshift-gitops --type='json' -p='[{"op": "remove" , "path": "/metadata/finalizers" }]'
    oc delete project argocd
    oc delete project openshift-gitops
    project-finalize.sh argocd
    project-finalize.sh openshift-gitops
    project-finalize.sh xteam-ci-cd
    project-finalize.sh yteam-ci-cd
    project-finalize.sh zteam-ci-cd

In the ideal world, finalizers would work as expected.
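The four finalizer patches above differ only in namespace and instance name, so they can be generated with a loop. A minimal sketch: `patch_cmds` is a hypothetical helper, and it prints the commands instead of running them so they can be reviewed first.

```shell
#!/bin/sh
# Sketch: generate the finalizer patch commands above with a loop.
# Commands are printed, not executed; pipe the output to `sh` to run them.

# patch_cmds "NAMESPACE/NAME ..." -> prints one oc patch command per instance
patch_cmds() {
  for item in $1; do
    ns="${item%%/*}"      # text before the slash
    name="${item##*/}"    # text after the slash
    printf "oc patch argocds.argoproj.io %s -n %s --type=json -p='%s'\n" \
      "$name" "$ns" '[{"op": "remove", "path": "/metadata/finalizers"}]'
  done
}

patch_cmds "xteam-ci-cd/argocd yteam-ci-cd/argocd zteam-ci-cd/argocd openshift-gitops/openshift-gitops"
```

Printing first is a deliberate choice here: removing finalizers bypasses the operator's own cleanup, so it is worth reading the commands before piping them to a shell.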