If I could predict the stock market to within even 30% error, I'd be rich enough to buy the entire nation of Wakanda, vibranium mountain and all.

There's a reason why an entire district of New York City is dedicated to the art of taming the stock market. Whoever cracks the code even slightly has the potential to get absurd levels of returns, and with those returns, lots of bills.

I'll admit the title of this article is a bit misleading. There is currently no method that predicts the stock market with anything close to accuracy, but that's kind of the point.

- Predicting the market with Stocker

- Predicting the market with linear regression

- Predicting the market with neural networks

- Conclusion -> it's safer to invest in ETFs

!git clone https://github.com/WillKoehrsen/Data-Analysis.git

import os

!ls

Data-Analysis gdrive sample_data

os.chdir('Data-Analysis')

!ls

additive_models learning_skills sentdex_data_analysis

'bayesian drake equation' LICENSE setup

bayesian_log_reg logistic_regression slack_interaction

bayesian_lr medium sp500tickers.pickle

copernican nyc_traffic_data statistical_significance

cyclical-features over_vs_under statistics

data-science-tips pairplots stocker

datashader-work plotly think_complexity

distributions poisson time_features

ecdf prediction-intervals univariate_dist

economics random_forest_explained web_automation

example_notebook README.md weighter

Facts recall_precision weight_loss_challenge

geo requirements.txt widgets

os.chdir('stocker')

!ls

data __pycache__ 'Stocker Prediction Usage.ipynb'

dev readme.md stocker.py

images 'Stocker Analysis Usage.ipynb'

!pip install quandl

!pip install pytrends

import stocker

from stocker import Stocker

goog = Stocker('GOOGL')

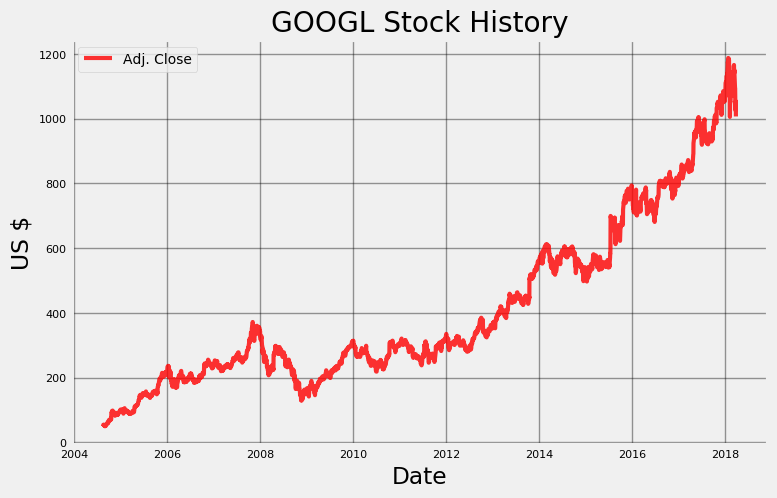

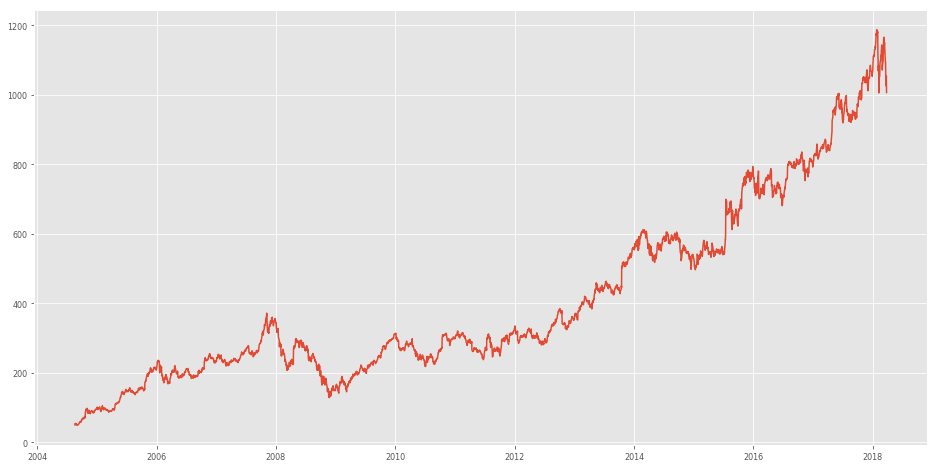

goog.plot_stock()

Maximum Adj. Close = 1187.56 on 2018-01-26 00:00:00.

Minimum Adj. Close = 50.16 on 2004-09-03 00:00:00.

Current Adj. Close = 1006.94 on 2018-03-27 00:00:00.

If you pay attention, you'll notice that the data in the Stocker object is not up to date: it stops at 2018-03-27. Taking a close look at the module code, we see that the data comes from Quandl's WIKI database. Perhaps that dataset is no longer kept up to date?

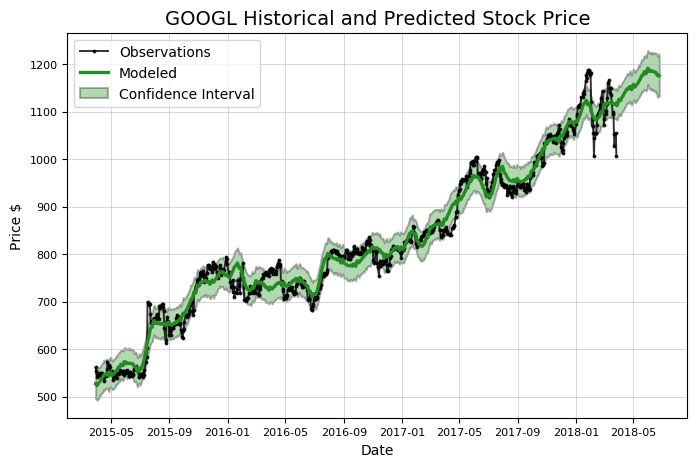

We can use Stocker to conduct technical stock analysis, but for now we will focus on playing medium and predicting the future. Stocker uses Prophet, a forecasting package created by Facebook that is built around additive models.
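To make "additive modeling" concrete: an additive model treats the series as a sum of separate components, typically a trend, one or more seasonal terms, and noise. The sketch below is a toy illustration in plain Python, not Prophet's actual fitting procedure. The function names, slope, amplitude, and period are all assumptions made up for this example.

```python
import math

def trend(t, slope=0.5, intercept=100.0):
    """Linear growth component g(t)."""
    return intercept + slope * t

def weekly_seasonality(t, amplitude=3.0, period=7):
    """Periodic component s(t) that repeats every `period` days."""
    return amplitude * math.sin(2 * math.pi * t / period)

def additive_forecast(t):
    """Additive model: the prediction is simply the sum of the components."""
    return trend(t) + weekly_seasonality(t)

# Each component can be inspected on its own, which is the appeal of additive models.
for day in (0, 7, 14):
    print(day, round(trend(day), 2), round(additive_forecast(day), 2))
```

Because the components are simply added, you can plot or reason about the trend and the seasonality independently before looking at the combined forecast.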

model, model_data = goog.create_prophet_model(days=90)

# Let's make some predictions

Predicted Price on 2018-06-25 00:00:00 = $1175.64

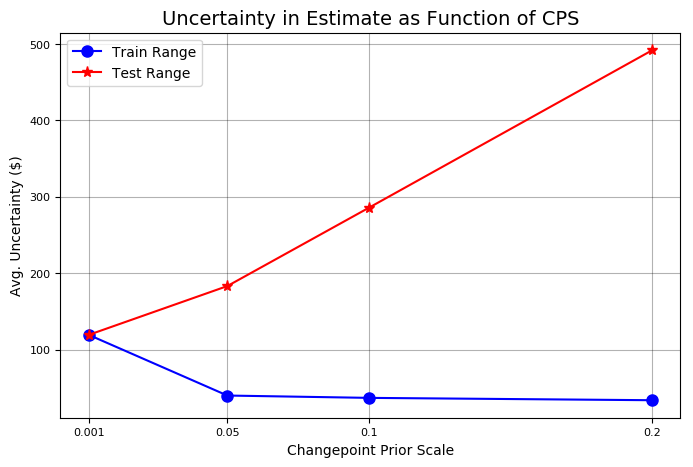

Now we will test the Stocker predictions. We need a training set and a test set: we'll use 2014-2016 for training and 2017 for testing. Let's see how accurate this model is.

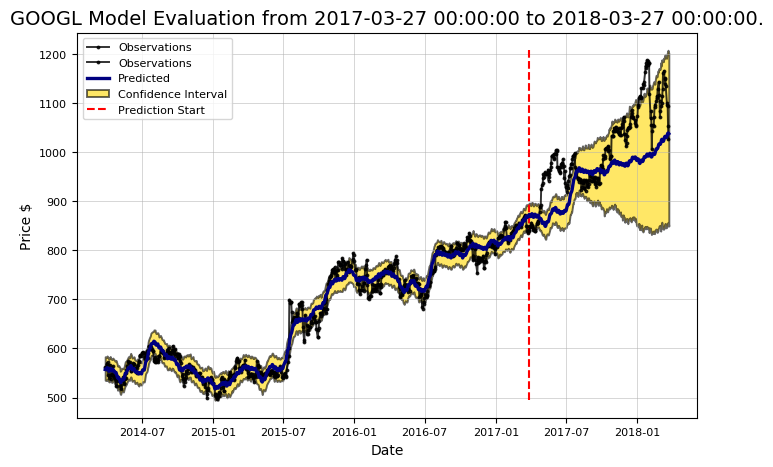

goog.evaluate_prediction()

Prediction Range: 2017-03-27 00:00:00 to 2018-03-27 00:00:00.

Predicted price on 2018-03-24 00:00:00 = $1037.84.

Actual price on 2018-03-23 00:00:00 = $1026.55.

Average Absolute Error on Training Data = $14.31.

Average Absolute Error on Testing Data = $65.52.

When the model predicted an increase, the price increased 58.27% of the time.

When the model predicted a decrease, the price decreased 48.18% of the time.

The actual value was within the 80% confidence interval 63.60% of the time.

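This is absolutely horrible! The average error on the testing data ($65.52) is more than four times the error on the training data ($14.31), and the model's calls on direction are barely better than a coin flip.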
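The numbers reported by `evaluate_prediction()` boil down to two ideas: how far off the predicted prices are on average, and how often the predicted direction matches the actual direction. Here is a rough sketch of both in plain Python. It is not Stocker's code; the helper names and the toy price lists are hypothetical.

```python
def mean_absolute_error(actual, predicted):
    """Average of |actual - predicted| over all days."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)

def direction_hit_rates(actual, predicted):
    """Share of predicted up-days and down-days that moved as predicted.

    Direction is measured as the day-over-day change within each series.
    """
    up_hits = up_total = down_hits = down_total = 0
    for i in range(1, len(actual)):
        actual_diff = actual[i] - actual[i - 1]
        predicted_diff = predicted[i] - predicted[i - 1]
        if predicted_diff > 0:
            up_total += 1
            up_hits += actual_diff > 0
        elif predicted_diff < 0:
            down_total += 1
            down_hits += actual_diff < 0
    up_rate = up_hits / up_total if up_total else float("nan")
    down_rate = down_hits / down_total if down_total else float("nan")
    return up_rate, down_rate

# Toy data, made up for illustration only.
actual = [100.0, 102.0, 101.0, 105.0, 104.0]
predicted = [100.0, 101.0, 103.0, 104.0, 102.0]

print(mean_absolute_error(actual, predicted))
print(direction_hit_rates(actual, predicted))
```

A hit rate near 0.5 means the direction calls carry about as much information as flipping a coin.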

We'll try again, and this time we'll adjust some hyperparameters.

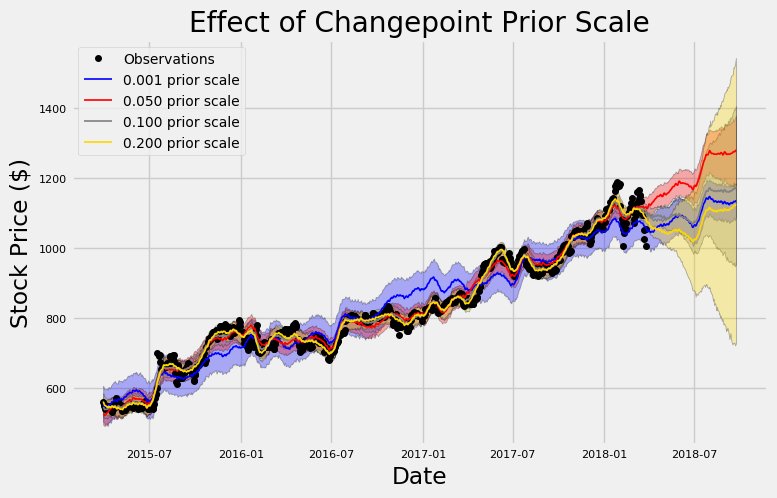

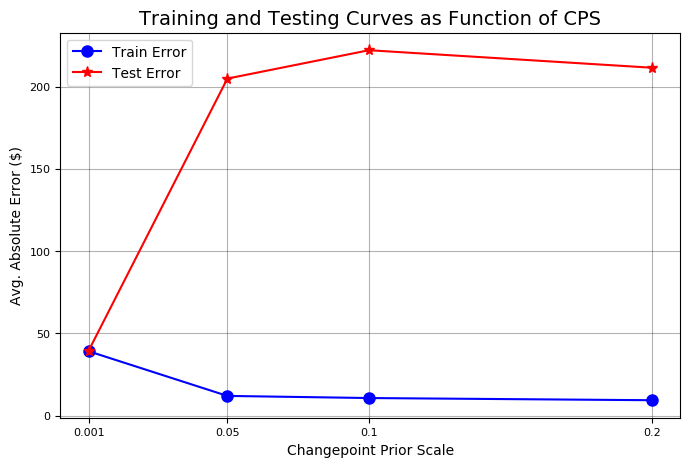

# changepoint_priors is the list of changepoint prior scales to evaluate

goog.changepoint_prior_analysis(changepoint_priors=[0.001, 0.05, 0.1, 0.2])

goog.changepoint_prior_validation(start_date='2016-01-04', end_date='2017-01-03', changepoint_priors=[0.001, 0.05, 0.1, 0.2])

Validation Range 2016-01-04 00:00:00 to 2017-01-03 00:00:00.

| | cps | train_err | train_range | test_err | test_range |
|---|---|---|---|---|---|
| 0 | 0.001 | 39.201088 | 119.332565 | 39.458360 | 119.175959 |
| 1 | 0.050 | 12.057805 | 39.832086 | 204.848301 | 183.146491 |
| 2 | 0.100 | 10.765865 | 36.778261 | 222.082001 | 285.733082 |
| 3 | 0.200 | 9.441183 | 33.703218 | 211.435183 | 491.831858 |
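One way to read the validation results is to compare each prior's error on the training data with its error on the held-out range. The snippet below copies the `cps`, `train_err`, and `test_err` values from the results above and ranks them. The variable names are just for illustration.

```python
# (changepoint prior, train_err, test_err), copied from the validation results.
results = [
    (0.001, 39.201088, 39.458360),
    (0.050, 12.057805, 204.848301),
    (0.100, 10.765865, 222.082001),
    (0.200, 9.441183, 211.435183),
]

best_on_train = min(results, key=lambda row: row[1])
best_on_test = min(results, key=lambda row: row[2])

for prior, train_err, test_err in results:
    # A large gap between the two errors is a sign of overfitting.
    print(f"prior={prior:<5} train={train_err:7.2f} test={test_err:7.2f} "
          f"gap={test_err - train_err:7.2f}")

print("lowest training error:", best_on_train[0])
print("lowest validation error:", best_on_test[0])
```

On this validation range the most flexible prior fits the training data best, while the stiffest one has the lowest validation error.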

goog.evaluate_prediction()

Prediction Range: 2017-03-27 00:00:00 to 2018-03-27 00:00:00.

Predicted price on 2018-03-24 00:00:00 = $1025.88.

Actual price on 2018-03-23 00:00:00 = $1026.55.

Average Absolute Error on Training Data = $10.92.

Average Absolute Error on Testing Data = $72.17.

When the model predicted an increase, the price increased 58.74% of the time.

When the model predicted a decrease, the price decreased 49.06% of the time.

The actual value was within the 80% confidence interval 80.00% of the time.

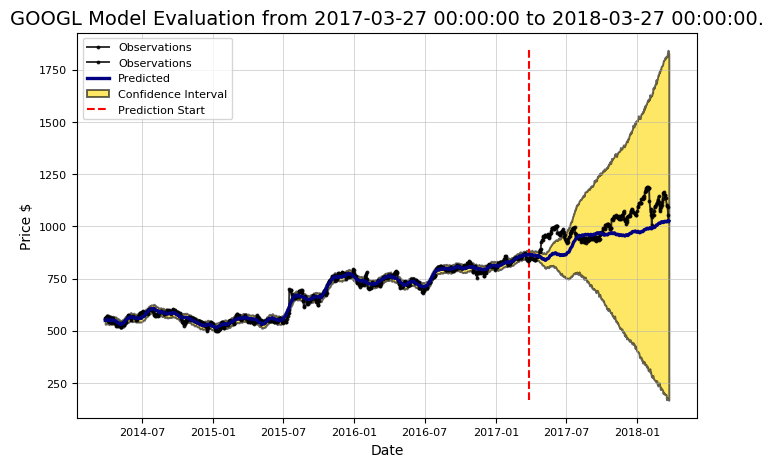

Let's see how well our forecasts would play out in the real stock market.

goog.evaluate_prediction(nshares=1000)

/content/Data-Analysis/stocker/stocker.py:613: SettingWithCopyWarning:

A value is trying to be set on a copy of a slice from a DataFrame.

Try using .loc[row_indexer,col_indexer] = value instead

See the caveats in the documentation: http://pandas.pydata.org/pandas-docs/stable/indexing.html#indexing-view-versus-copy

You played the stock market in GOOGL from 2017-03-27 00:00:00 to 2018-03-27 00:00:00 with 1000 shares.

When the model predicted an increase, the price increased 58.74% of the time.

When the model predicted a decrease, the price decreased 49.06% of the time.

The total profit using the Prophet model = $87380.00.

The Buy and Hold strategy profit = $188040.00.

Thanks for playing the stock market!

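Buy and hold earned $188,040 over the same period, more than double the $87,380 from trading on the Prophet forecasts. At least in this backtest, it's better to simply invest for the long term.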
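To see how such a comparison can be computed, here is a simplified sketch. It assumes the strategy holds shares only over the days for which the model predicted an increase, which is a simplification and not necessarily how Stocker does its accounting. The function names, prices, and model calls are hypothetical.

```python
def buy_and_hold_profit(prices, nshares):
    """Buy on the first day, sell on the last day."""
    return nshares * (prices[-1] - prices[0])

def signal_strategy_profit(prices, predicted_up, nshares):
    """Hold shares only over the days the model predicted an increase.

    predicted_up[i] is the model's call for the move from day i to day i + 1.
    """
    profit = 0.0
    for i, call in enumerate(predicted_up):
        if call:
            profit += nshares * (prices[i + 1] - prices[i])
    return profit

# Hypothetical prices and model calls, made up for illustration.
prices = [100.0, 103.0, 101.0, 106.0, 110.0]
predicted_up = [True, True, False, True]  # one call per day-to-day move

print(buy_and_hold_profit(prices, nshares=10))
print(signal_strategy_profit(prices, predicted_up, nshares=10))
```

In this toy run the strategy is hurt twice: it holds through a drop it predicted as a rise, and it sits out a rise it predicted as a drop. In a rising market, every day spent out of the stock is a chance to miss gains.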

# Getting the dataframe of the data

goog_data = goog.make_df('2004-08-19', '2018-03-27')

#2004-08-19 00:00:00 to 2018-03-27 00:00:00.

goog_data.head(50)


| | Date | Open | High | Low | Close | Volume | Ex-Dividend | Split Ratio | Adj. Open | Adj. High | Adj. Low | Adj. Close | Adj. Volume | ds | y | Daily Change |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 2004-08-19 | 100.010 | 104.06 | 95.96 | 100.335 | 44659000.0 | 0.0 | 1.0 | 50.159839 | 52.191109 | 48.128568 | 50.322842 | 44659000.0 | 2004-08-19 | 50.322842 | 0.163003 |

| 1 | 2004-08-20 | 101.010 | 109.08 | 100.50 | 108.310 | 22834300.0 | 0.0 | 1.0 | 50.661387 | 54.708881 | 50.405597 | 54.322689 | 22834300.0 | 2004-08-20 | 54.322689 | 3.661302 |

| 2 | 2004-08-23 | 110.760 | 113.48 | 109.05 | 109.400 | 18256100.0 | 0.0 | 1.0 | 55.551482 | 56.915693 | 54.693835 | 54.869377 | 18256100.0 | 2004-08-23 | 54.869377 | -0.682106 |

| 3 | 2004-08-24 | 111.240 | 111.60 | 103.57 | 104.870 | 15247300.0 | 0.0 | 1.0 | 55.792225 | 55.972783 | 51.945350 | 52.597363 | 15247300.0 | 2004-08-24 | 52.597363 | -3.194862 |

| 4 | 2004-08-25 | 104.760 | 108.00 | 103.88 | 106.000 | 9188600.0 | 0.0 | 1.0 | 52.542193 | 54.167209 | 52.100830 | 53.164113 | 9188600.0 | 2004-08-25 | 53.164113 | 0.621920 |

| 5 | 2004-08-26 | 104.950 | 107.95 | 104.66 | 107.910 | 7094800.0 | 0.0 | 1.0 | 52.637487 | 54.142132 | 52.492038 | 54.122070 | 7094800.0 | 2004-08-26 | 54.122070 | 1.484583 |

| 6 | 2004-08-27 | 108.100 | 108.62 | 105.69 | 106.150 | 6211700.0 | 0.0 | 1.0 | 54.217364 | 54.478169 | 53.008633 | 53.239345 | 6211700.0 | 2004-08-27 | 53.239345 | -0.978019 |

| 7 | 2004-08-30 | 105.280 | 105.49 | 102.01 | 102.010 | 5196700.0 | 0.0 | 1.0 | 52.802998 | 52.908323 | 51.162935 | 51.162935 | 5196700.0 | 2004-08-30 | 51.162935 | -1.640063 |

| 8 | 2004-08-31 | 102.320 | 103.71 | 102.16 | 102.370 | 4917800.0 | 0.0 | 1.0 | 51.318415 | 52.015567 | 51.238167 | 51.343492 | 4917800.0 | 2004-08-31 | 51.343492 | 0.025077 |

| 9 | 2004-09-01 | 102.700 | 102.97 | 99.67 | 100.250 | 9138200.0 | 0.0 | 1.0 | 51.509003 | 51.644421 | 49.989312 | 50.280210 | 9138200.0 | 2004-09-01 | 50.280210 | -1.228793 |

| 10 | 2004-09-02 | 99.090 | 102.37 | 98.94 | 101.510 | 15118600.0 | 0.0 | 1.0 | 49.698414 | 51.343492 | 49.623182 | 50.912161 | 15118600.0 | 2004-09-02 | 50.912161 | 1.213747 |

| 11 | 2004-09-03 | 100.950 | 101.74 | 99.32 | 100.010 | 5152400.0 | 0.0 | 1.0 | 50.631294 | 51.027517 | 49.813770 | 50.159839 | 5152400.0 | 2004-09-03 | 50.159839 | -0.471455 |

| 12 | 2004-09-07 | 101.010 | 102.00 | 99.61 | 101.580 | 5847500.0 | 0.0 | 1.0 | 50.661387 | 51.157920 | 49.959219 | 50.947269 | 5847500.0 | 2004-09-07 | 50.947269 | 0.285882 |

| 13 | 2004-09-08 | 100.740 | 103.03 | 100.50 | 102.300 | 4985600.0 | 0.0 | 1.0 | 50.525969 | 51.674514 | 50.405597 | 51.308384 | 4985600.0 | 2004-09-08 | 51.308384 | 0.782415 |

| 14 | 2004-09-09 | 102.500 | 102.71 | 101.00 | 102.310 | 4061700.0 | 0.0 | 1.0 | 51.408694 | 51.514019 | 50.656371 | 51.313400 | 4061700.0 | 2004-09-09 | 51.313400 | -0.095294 |

| 15 | 2004-09-10 | 101.470 | 106.56 | 101.30 | 105.330 | 8698800.0 | 0.0 | 1.0 | 50.892099 | 53.444980 | 50.806836 | 52.828075 | 8698800.0 | 2004-09-10 | 52.828075 | 1.935976 |

| 16 | 2004-09-13 | 106.630 | 108.41 | 106.46 | 107.500 | 7844100.0 | 0.0 | 1.0 | 53.480088 | 54.372844 | 53.394825 | 53.916435 | 7844100.0 | 2004-09-13 | 53.916435 | 0.436347 |

| 17 | 2004-09-14 | 107.440 | 112.00 | 106.79 | 111.490 | 10828900.0 | 0.0 | 1.0 | 53.886342 | 56.173402 | 53.560336 | 55.917612 | 10828900.0 | 2004-09-14 | 55.917612 | 2.031270 |

| 18 | 2004-09-15 | 110.560 | 114.23 | 110.20 | 112.000 | 10713000.0 | 0.0 | 1.0 | 55.451172 | 57.291854 | 55.270615 | 56.173402 | 10713000.0 | 2004-09-15 | 56.173402 | 0.722229 |

| 19 | 2004-09-16 | 112.340 | 115.80 | 111.65 | 113.970 | 9266300.0 | 0.0 | 1.0 | 56.343928 | 58.079285 | 55.997860 | 57.161452 | 9266300.0 | 2004-09-16 | 57.161452 | 0.817524 |

| 20 | 2004-09-17 | 114.420 | 117.49 | 113.55 | 117.490 | 9472500.0 | 0.0 | 1.0 | 57.387149 | 58.926902 | 56.950802 | 58.926902 | 9472500.0 | 2004-09-17 | 58.926902 | 1.539753 |

| 21 | 2004-09-20 | 116.950 | 121.60 | 116.77 | 119.360 | 10628700.0 | 0.0 | 1.0 | 58.656066 | 60.988265 | 58.565787 | 59.864797 | 10628700.0 | 2004-09-20 | 59.864797 | 1.208731 |

| 22 | 2004-09-21 | 120.200 | 120.42 | 117.51 | 117.840 | 7228700.0 | 0.0 | 1.0 | 60.286097 | 60.396438 | 58.936933 | 59.102444 | 7228700.0 | 2004-09-21 | 59.102444 | -1.183654 |

| 23 | 2004-09-22 | 117.450 | 119.67 | 116.81 | 118.380 | 7581200.0 | 0.0 | 1.0 | 58.906840 | 60.020277 | 58.585849 | 59.373280 | 7581200.0 | 2004-09-22 | 59.373280 | 0.466440 |

| 24 | 2004-09-23 | 118.840 | 122.63 | 117.02 | 120.820 | 8535600.0 | 0.0 | 1.0 | 59.603992 | 61.504860 | 58.691174 | 60.597057 | 8535600.0 | 2004-09-23 | 60.597057 | 0.993065 |

| 25 | 2004-09-24 | 120.970 | 124.10 | 119.76 | 119.830 | 9123400.0 | 0.0 | 1.0 | 60.672290 | 62.242136 | 60.065416 | 60.100525 | 9123400.0 | 2004-09-24 | 60.100525 | -0.571765 |

| 26 | 2004-09-27 | 119.560 | 120.88 | 117.80 | 118.260 | 7066100.0 | 0.0 | 1.0 | 59.965107 | 60.627150 | 59.082382 | 59.313094 | 7066100.0 | 2004-09-27 | 59.313094 | -0.652013 |

| 27 | 2004-09-28 | 121.150 | 127.40 | 120.21 | 126.860 | 16929000.0 | 0.0 | 1.0 | 60.762568 | 63.897245 | 60.291113 | 63.626409 | 16929000.0 | 2004-09-28 | 63.626409 | 2.863840 |

| 28 | 2004-09-29 | 126.530 | 135.02 | 126.23 | 131.080 | 30516400.0 | 0.0 | 1.0 | 63.460898 | 67.719042 | 63.310433 | 65.742942 | 30516400.0 | 2004-09-29 | 65.742942 | 2.282044 |

| 29 | 2004-09-30 | 129.899 | 132.30 | 129.00 | 129.600 | 13758000.0 | 0.0 | 1.0 | 65.150614 | 66.354831 | 64.699722 | 65.000651 | 13758000.0 | 2004-09-30 | 65.000651 | -0.149963 |

| 30 | 2004-10-01 | 130.800 | 134.24 | 128.90 | 132.580 | 15124800.0 | 0.0 | 1.0 | 65.602509 | 67.327835 | 64.649567 | 66.495265 | 15124800.0 | 2004-10-01 | 66.495265 | 0.892756 |

| 31 | 2004-10-04 | 135.275 | 136.87 | 134.03 | 135.060 | 13022700.0 | 0.0 | 1.0 | 67.846937 | 68.646906 | 67.222509 | 67.739104 | 13022700.0 | 2004-10-04 | 67.739104 | -0.107833 |

| 32 | 2004-10-05 | 134.660 | 138.53 | 132.24 | 138.370 | 14973200.0 | 0.0 | 1.0 | 67.538485 | 69.479476 | 66.324738 | 69.399229 | 14973200.0 | 2004-10-05 | 69.399229 | 1.860744 |

| 33 | 2004-10-06 | 137.670 | 138.45 | 136.00 | 137.080 | 13381400.0 | 0.0 | 1.0 | 69.048145 | 69.439353 | 68.210559 | 68.752232 | 13381400.0 | 2004-10-06 | 68.752232 | -0.295913 |

| 34 | 2004-10-07 | 136.560 | 139.88 | 136.55 | 138.850 | 14115000.0 | 0.0 | 1.0 | 68.491426 | 70.156567 | 68.486411 | 69.639972 | 14115000.0 | 2004-10-07 | 69.639972 | 1.148545 |

| 35 | 2004-10-08 | 138.730 | 139.68 | 137.02 | 137.730 | 11069500.0 | 0.0 | 1.0 | 69.579786 | 70.056257 | 68.722139 | 69.078238 | 11069500.0 | 2004-10-08 | 69.078238 | -0.501548 |

| 36 | 2004-10-11 | 137.010 | 138.86 | 133.85 | 135.260 | 10472100.0 | 0.0 | 1.0 | 68.717123 | 69.644987 | 67.132231 | 67.839414 | 10472100.0 | 2004-10-11 | 67.839414 | -0.877709 |

| 37 | 2004-10-12 | 134.490 | 137.61 | 133.40 | 137.400 | 11665500.0 | 0.0 | 1.0 | 67.453222 | 69.018052 | 66.906534 | 68.912727 | 11665500.0 | 2004-10-12 | 68.912727 | 1.459505 |

| 38 | 2004-10-13 | 143.240 | 143.55 | 140.08 | 140.900 | 19766200.0 | 0.0 | 1.0 | 71.841769 | 71.997249 | 70.256876 | 70.668146 | 19766200.0 | 2004-10-13 | 70.668146 | -1.173623 |

| 39 | 2004-10-14 | 141.020 | 142.38 | 138.56 | 142.000 | 10442100.0 | 0.0 | 1.0 | 70.728332 | 71.410437 | 69.494523 | 71.219849 | 10442100.0 | 2004-10-14 | 71.219849 | 0.491517 |

| 40 | 2004-10-15 | 144.950 | 145.50 | 141.95 | 144.110 | 13194700.0 | 0.0 | 1.0 | 72.699416 | 72.975268 | 71.194771 | 72.278116 | 13194700.0 | 2004-10-15 | 72.278116 | -0.421301 |

| 41 | 2004-10-18 | 143.120 | 149.20 | 141.21 | 149.160 | 14036300.0 | 0.0 | 1.0 | 71.781583 | 74.830996 | 70.823626 | 74.810934 | 14036300.0 | 2004-10-18 | 74.810934 | 3.029351 |

| 42 | 2004-10-19 | 150.500 | 152.40 | 147.35 | 147.940 | 18109800.0 | 0.0 | 1.0 | 75.483009 | 76.435950 | 73.903132 | 74.199045 | 18109800.0 | 2004-10-19 | 74.199045 | -1.283963 |

| 43 | 2004-10-20 | 147.940 | 148.99 | 139.60 | 140.490 | 22722600.0 | 0.0 | 1.0 | 74.199045 | 74.725671 | 70.016133 | 70.462511 | 22722600.0 | 2004-10-20 | 70.462511 | -3.736534 |

| 44 | 2004-10-21 | 144.130 | 150.13 | 141.62 | 149.380 | 29149800.0 | 0.0 | 1.0 | 72.288147 | 75.297436 | 71.029261 | 74.921275 | 29149800.0 | 2004-10-21 | 74.921275 | 2.633128 |

| 45 | 2004-10-22 | 170.435 | 180.17 | 164.08 | 172.430 | 73710000.0 | 0.0 | 1.0 | 85.481373 | 90.363945 | 82.294034 | 86.481962 | 73710000.0 | 2004-10-22 | 86.481962 | 1.000589 |

| 46 | 2004-10-25 | 176.280 | 194.43 | 172.55 | 187.400 | 65462800.0 | 0.0 | 1.0 | 88.412922 | 97.516023 | 86.542147 | 93.990139 | 65462800.0 | 2004-10-25 | 93.990139 | 5.577216 |

| 47 | 2004-10-26 | 186.449 | 192.64 | 180.00 | 181.800 | 44569500.0 | 0.0 | 1.0 | 93.513166 | 96.618251 | 90.278682 | 91.181468 | 44569500.0 | 2004-10-26 | 91.181468 | -2.331698 |

| 48 | 2004-10-27 | 182.509 | 189.52 | 181.77 | 185.970 | 26686200.0 | 0.0 | 1.0 | 91.537066 | 95.053421 | 91.166422 | 93.272925 | 26686200.0 | 2004-10-27 | 93.272925 | 1.735858 |

| 49 | 2004-10-28 | 186.630 | 194.39 | 185.60 | 193.300 | 29663900.0 | 0.0 | 1.0 | 93.603946 | 97.495961 | 93.087352 | 96.949273 | 29663900.0 | 2004-10-28 | 96.949273 | 3.345327 |

goog_data = goog_data[['Date', 'Open', 'High', 'Low', 'Close', 'Adj. Close', 'Volume']]

goog_data.head(50)


| | Date | Open | High | Low | Close | Adj. Close | Volume |

|---|---|---|---|---|---|---|---|

| 0 | 2004-08-19 | 100.010 | 104.06 | 95.96 | 100.335 | 50.322842 | 44659000.0 |

| 1 | 2004-08-20 | 101.010 | 109.08 | 100.50 | 108.310 | 54.322689 | 22834300.0 |

| 2 | 2004-08-23 | 110.760 | 113.48 | 109.05 | 109.400 | 54.869377 | 18256100.0 |

| 3 | 2004-08-24 | 111.240 | 111.60 | 103.57 | 104.870 | 52.597363 | 15247300.0 |

| 4 | 2004-08-25 | 104.760 | 108.00 | 103.88 | 106.000 | 53.164113 | 9188600.0 |

| 5 | 2004-08-26 | 104.950 | 107.95 | 104.66 | 107.910 | 54.122070 | 7094800.0 |

| 6 | 2004-08-27 | 108.100 | 108.62 | 105.69 | 106.150 | 53.239345 | 6211700.0 |

| 7 | 2004-08-30 | 105.280 | 105.49 | 102.01 | 102.010 | 51.162935 | 5196700.0 |

| 8 | 2004-08-31 | 102.320 | 103.71 | 102.16 | 102.370 | 51.343492 | 4917800.0 |

| 9 | 2004-09-01 | 102.700 | 102.97 | 99.67 | 100.250 | 50.280210 | 9138200.0 |

| 10 | 2004-09-02 | 99.090 | 102.37 | 98.94 | 101.510 | 50.912161 | 15118600.0 |

| 11 | 2004-09-03 | 100.950 | 101.74 | 99.32 | 100.010 | 50.159839 | 5152400.0 |

| 12 | 2004-09-07 | 101.010 | 102.00 | 99.61 | 101.580 | 50.947269 | 5847500.0 |

| 13 | 2004-09-08 | 100.740 | 103.03 | 100.50 | 102.300 | 51.308384 | 4985600.0 |

| 14 | 2004-09-09 | 102.500 | 102.71 | 101.00 | 102.310 | 51.313400 | 4061700.0 |

| 15 | 2004-09-10 | 101.470 | 106.56 | 101.30 | 105.330 | 52.828075 | 8698800.0 |

| 16 | 2004-09-13 | 106.630 | 108.41 | 106.46 | 107.500 | 53.916435 | 7844100.0 |

| 17 | 2004-09-14 | 107.440 | 112.00 | 106.79 | 111.490 | 55.917612 | 10828900.0 |

| 18 | 2004-09-15 | 110.560 | 114.23 | 110.20 | 112.000 | 56.173402 | 10713000.0 |

| 19 | 2004-09-16 | 112.340 | 115.80 | 111.65 | 113.970 | 57.161452 | 9266300.0 |

| 20 | 2004-09-17 | 114.420 | 117.49 | 113.55 | 117.490 | 58.926902 | 9472500.0 |

| 21 | 2004-09-20 | 116.950 | 121.60 | 116.77 | 119.360 | 59.864797 | 10628700.0 |

| 22 | 2004-09-21 | 120.200 | 120.42 | 117.51 | 117.840 | 59.102444 | 7228700.0 |

| 23 | 2004-09-22 | 117.450 | 119.67 | 116.81 | 118.380 | 59.373280 | 7581200.0 |

| 24 | 2004-09-23 | 118.840 | 122.63 | 117.02 | 120.820 | 60.597057 | 8535600.0 |

| 25 | 2004-09-24 | 120.970 | 124.10 | 119.76 | 119.830 | 60.100525 | 9123400.0 |

| 26 | 2004-09-27 | 119.560 | 120.88 | 117.80 | 118.260 | 59.313094 | 7066100.0 |

| 27 | 2004-09-28 | 121.150 | 127.40 | 120.21 | 126.860 | 63.626409 | 16929000.0 |

| 28 | 2004-09-29 | 126.530 | 135.02 | 126.23 | 131.080 | 65.742942 | 30516400.0 |

| 29 | 2004-09-30 | 129.899 | 132.30 | 129.00 | 129.600 | 65.000651 | 13758000.0 |

| 30 | 2004-10-01 | 130.800 | 134.24 | 128.90 | 132.580 | 66.495265 | 15124800.0 |

| 31 | 2004-10-04 | 135.275 | 136.87 | 134.03 | 135.060 | 67.739104 | 13022700.0 |

| 32 | 2004-10-05 | 134.660 | 138.53 | 132.24 | 138.370 | 69.399229 | 14973200.0 |

| 33 | 2004-10-06 | 137.670 | 138.45 | 136.00 | 137.080 | 68.752232 | 13381400.0 |

| 34 | 2004-10-07 | 136.560 | 139.88 | 136.55 | 138.850 | 69.639972 | 14115000.0 |

| 35 | 2004-10-08 | 138.730 | 139.68 | 137.02 | 137.730 | 69.078238 | 11069500.0 |

| 36 | 2004-10-11 | 137.010 | 138.86 | 133.85 | 135.260 | 67.839414 | 10472100.0 |

| 37 | 2004-10-12 | 134.490 | 137.61 | 133.40 | 137.400 | 68.912727 | 11665500.0 |

| 38 | 2004-10-13 | 143.240 | 143.55 | 140.08 | 140.900 | 70.668146 | 19766200.0 |

| 39 | 2004-10-14 | 141.020 | 142.38 | 138.56 | 142.000 | 71.219849 | 10442100.0 |

| 40 | 2004-10-15 | 144.950 | 145.50 | 141.95 | 144.110 | 72.278116 | 13194700.0 |

| 41 | 2004-10-18 | 143.120 | 149.20 | 141.21 | 149.160 | 74.810934 | 14036300.0 |

| 42 | 2004-10-19 | 150.500 | 152.40 | 147.35 | 147.940 | 74.199045 | 18109800.0 |

| 43 | 2004-10-20 | 147.940 | 148.99 | 139.60 | 140.490 | 70.462511 | 22722600.0 |

| 44 | 2004-10-21 | 144.130 | 150.13 | 141.62 | 149.380 | 74.921275 | 29149800.0 |

| 45 | 2004-10-22 | 170.435 | 180.17 | 164.08 | 172.430 | 86.481962 | 73710000.0 |

| 46 | 2004-10-25 | 176.280 | 194.43 | 172.55 | 187.400 | 93.990139 | 65462800.0 |

| 47 | 2004-10-26 | 186.449 | 192.64 | 180.00 | 181.800 | 91.181468 | 44569500.0 |

| 48 | 2004-10-27 | 182.509 | 189.52 | 181.77 | 185.970 | 93.272925 | 26686200.0 |

| 49 | 2004-10-28 | 186.630 | 194.39 | 185.60 | 193.300 | 96.949273 | 29663900.0 |

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

%matplotlib inline

import matplotlib.style

import matplotlib as mpl

mpl.style.use('ggplot')

from matplotlib.pylab import rcParams

rcParams['figure.figsize'] = 20, 10

from sklearn.preprocessing import MinMaxScaler

scaler = MinMaxScaler(feature_range=(0, 1))

# Alias goog_data as df for the moving-average section (plain assignment does not copy, so changes to df also change goog_data)

df = goog_data

df.head()


| | Date | Open | High | Low | Close | Adj. Close | Volume |

|---|---|---|---|---|---|---|---|

| 0 | 2004-08-19 | 100.01 | 104.06 | 95.96 | 100.335 | 50.322842 | 44659000.0 |

| 1 | 2004-08-20 | 101.01 | 109.08 | 100.50 | 108.310 | 54.322689 | 22834300.0 |

| 2 | 2004-08-23 | 110.76 | 113.48 | 109.05 | 109.400 | 54.869377 | 18256100.0 |

| 3 | 2004-08-24 | 111.24 | 111.60 | 103.57 | 104.870 | 52.597363 | 15247300.0 |

| 4 | 2004-08-25 | 104.76 | 108.00 | 103.88 | 106.000 | 53.164113 | 9188600.0 |

df['Date'] = pd.to_datetime(df.Date, format='%Y-%m-%d')

df.index = df['Date']

df.head(50)


| Date (index) | Date | Open | High | Low | Close | Adj. Close | Volume |

|---|---|---|---|---|---|---|---|

| 2004-08-19 | 2004-08-19 | 100.010 | 104.06 | 95.96 | 100.335 | 50.322842 | 44659000.0 |

| 2004-08-20 | 2004-08-20 | 101.010 | 109.08 | 100.50 | 108.310 | 54.322689 | 22834300.0 |

| 2004-08-23 | 2004-08-23 | 110.760 | 113.48 | 109.05 | 109.400 | 54.869377 | 18256100.0 |

| 2004-08-24 | 2004-08-24 | 111.240 | 111.60 | 103.57 | 104.870 | 52.597363 | 15247300.0 |

| 2004-08-25 | 2004-08-25 | 104.760 | 108.00 | 103.88 | 106.000 | 53.164113 | 9188600.0 |

| 2004-08-26 | 2004-08-26 | 104.950 | 107.95 | 104.66 | 107.910 | 54.122070 | 7094800.0 |

| 2004-08-27 | 2004-08-27 | 108.100 | 108.62 | 105.69 | 106.150 | 53.239345 | 6211700.0 |

| 2004-08-30 | 2004-08-30 | 105.280 | 105.49 | 102.01 | 102.010 | 51.162935 | 5196700.0 |

| 2004-08-31 | 2004-08-31 | 102.320 | 103.71 | 102.16 | 102.370 | 51.343492 | 4917800.0 |

| 2004-09-01 | 2004-09-01 | 102.700 | 102.97 | 99.67 | 100.250 | 50.280210 | 9138200.0 |

| 2004-09-02 | 2004-09-02 | 99.090 | 102.37 | 98.94 | 101.510 | 50.912161 | 15118600.0 |

| 2004-09-03 | 2004-09-03 | 100.950 | 101.74 | 99.32 | 100.010 | 50.159839 | 5152400.0 |

| 2004-09-07 | 2004-09-07 | 101.010 | 102.00 | 99.61 | 101.580 | 50.947269 | 5847500.0 |

| 2004-09-08 | 2004-09-08 | 100.740 | 103.03 | 100.50 | 102.300 | 51.308384 | 4985600.0 |

| 2004-09-09 | 2004-09-09 | 102.500 | 102.71 | 101.00 | 102.310 | 51.313400 | 4061700.0 |

| 2004-09-10 | 2004-09-10 | 101.470 | 106.56 | 101.30 | 105.330 | 52.828075 | 8698800.0 |

| 2004-09-13 | 2004-09-13 | 106.630 | 108.41 | 106.46 | 107.500 | 53.916435 | 7844100.0 |

| 2004-09-14 | 2004-09-14 | 107.440 | 112.00 | 106.79 | 111.490 | 55.917612 | 10828900.0 |

| 2004-09-15 | 2004-09-15 | 110.560 | 114.23 | 110.20 | 112.000 | 56.173402 | 10713000.0 |

| 2004-09-16 | 2004-09-16 | 112.340 | 115.80 | 111.65 | 113.970 | 57.161452 | 9266300.0 |

| 2004-09-17 | 2004-09-17 | 114.420 | 117.49 | 113.55 | 117.490 | 58.926902 | 9472500.0 |

| 2004-09-20 | 2004-09-20 | 116.950 | 121.60 | 116.77 | 119.360 | 59.864797 | 10628700.0 |

| 2004-09-21 | 2004-09-21 | 120.200 | 120.42 | 117.51 | 117.840 | 59.102444 | 7228700.0 |

| 2004-09-22 | 2004-09-22 | 117.450 | 119.67 | 116.81 | 118.380 | 59.373280 | 7581200.0 |

| 2004-09-23 | 2004-09-23 | 118.840 | 122.63 | 117.02 | 120.820 | 60.597057 | 8535600.0 |

| 2004-09-24 | 2004-09-24 | 120.970 | 124.10 | 119.76 | 119.830 | 60.100525 | 9123400.0 |

| 2004-09-27 | 2004-09-27 | 119.560 | 120.88 | 117.80 | 118.260 | 59.313094 | 7066100.0 |

| 2004-09-28 | 2004-09-28 | 121.150 | 127.40 | 120.21 | 126.860 | 63.626409 | 16929000.0 |

| 2004-09-29 | 2004-09-29 | 126.530 | 135.02 | 126.23 | 131.080 | 65.742942 | 30516400.0 |

| 2004-09-30 | 2004-09-30 | 129.899 | 132.30 | 129.00 | 129.600 | 65.000651 | 13758000.0 |

| 2004-10-01 | 2004-10-01 | 130.800 | 134.24 | 128.90 | 132.580 | 66.495265 | 15124800.0 |

| 2004-10-04 | 2004-10-04 | 135.275 | 136.87 | 134.03 | 135.060 | 67.739104 | 13022700.0 |

| 2004-10-05 | 2004-10-05 | 134.660 | 138.53 | 132.24 | 138.370 | 69.399229 | 14973200.0 |

| 2004-10-06 | 2004-10-06 | 137.670 | 138.45 | 136.00 | 137.080 | 68.752232 | 13381400.0 |

| 2004-10-07 | 2004-10-07 | 136.560 | 139.88 | 136.55 | 138.850 | 69.639972 | 14115000.0 |

| 2004-10-08 | 2004-10-08 | 138.730 | 139.68 | 137.02 | 137.730 | 69.078238 | 11069500.0 |

| 2004-10-11 | 2004-10-11 | 137.010 | 138.86 | 133.85 | 135.260 | 67.839414 | 10472100.0 |

| 2004-10-12 | 2004-10-12 | 134.490 | 137.61 | 133.40 | 137.400 | 68.912727 | 11665500.0 |

| 2004-10-13 | 2004-10-13 | 143.240 | 143.55 | 140.08 | 140.900 | 70.668146 | 19766200.0 |

| 2004-10-14 | 2004-10-14 | 141.020 | 142.38 | 138.56 | 142.000 | 71.219849 | 10442100.0 |

| 2004-10-15 | 2004-10-15 | 144.950 | 145.50 | 141.95 | 144.110 | 72.278116 | 13194700.0 |

| 2004-10-18 | 2004-10-18 | 143.120 | 149.20 | 141.21 | 149.160 | 74.810934 | 14036300.0 |

| 2004-10-19 | 2004-10-19 | 150.500 | 152.40 | 147.35 | 147.940 | 74.199045 | 18109800.0 |

| 2004-10-20 | 2004-10-20 | 147.940 | 148.99 | 139.60 | 140.490 | 70.462511 | 22722600.0 |

| 2004-10-21 | 2004-10-21 | 144.130 | 150.13 | 141.62 | 149.380 | 74.921275 | 29149800.0 |

| 2004-10-22 | 2004-10-22 | 170.435 | 180.17 | 164.08 | 172.430 | 86.481962 | 73710000.0 |

| 2004-10-25 | 2004-10-25 | 176.280 | 194.43 | 172.55 | 187.400 | 93.990139 | 65462800.0 |

| 2004-10-26 | 2004-10-26 | 186.449 | 192.64 | 180.00 | 181.800 | 91.181468 | 44569500.0 |

| 2004-10-27 | 2004-10-27 | 182.509 | 189.52 | 181.77 | 185.970 | 93.272925 | 26686200.0 |

| 2004-10-28 | 2004-10-28 | 186.630 | 194.39 | 185.60 | 193.300 | 96.949273 | 29663900.0 |

plt.figure(figsize=(16,8))

plt.plot(df['Date'], df['Adj. Close'], label='Close Price history')

[<matplotlib.lines.Line2D at 0x7f6e00e4acc0>]

# Creating dataframe with date and the target variable

data = df.sort_index(ascending=True, axis=0)

new_data = pd.DataFrame(index=range(0, len(df)), columns=['Date', 'Adj. Close'])

for i in range(0, len(data)):

    new_data['Date'][i] = data['Date'][i]

    new_data['Adj. Close'][i] = data['Adj. Close'][i]
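The row-by-row loop above does the job, but it relies on chained assignment (`new_data['Date'][i] = ...`), a pattern pandas discourages, and it leaves both columns with an `object` dtype. A vectorized alternative is sketched below on a tiny stand-in frame. The three rows use rounded values from the table shown earlier, and this version is a suggestion rather than what the notebook ran.

```python
import pandas as pd

# Small stand-in for the real price frame (values rounded from the table above).
data = pd.DataFrame(
    {
        "Date": pd.to_datetime(["2004-08-19", "2004-08-20", "2004-08-23"]),
        "Adj. Close": [50.32, 54.32, 54.87],
        "Volume": [44659000.0, 22834300.0, 18256100.0],
    }
)
data.index = data["Date"]

# Select the two columns and replace the date index with 0..n-1 in one step.
new_data = data[["Date", "Adj. Close"]].reset_index(drop=True)

print(new_data)
```

Unlike the loop, this keeps `Date` as a datetime column and `Adj. Close` as a float column.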

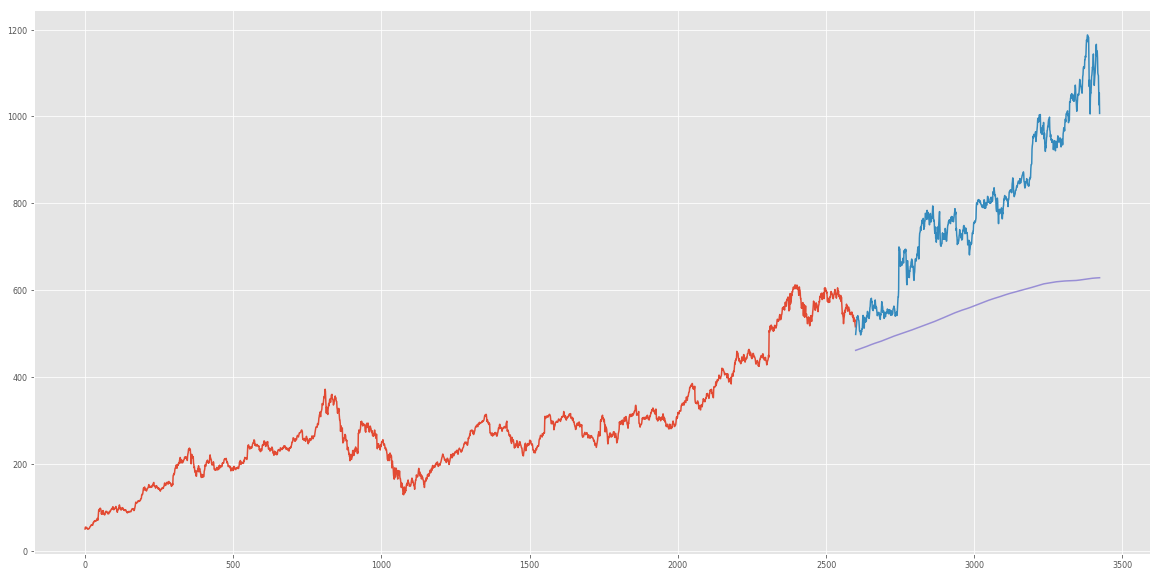

# Train-test split

train = new_data[:2600]

test = new_data[2600:]

new_data.shape, train.shape, test.shape

((3424, 2), (2600, 2), (824, 2))

num = test.shape[0]

train['Date'].min(), train['Date'].max(), test['Date'].min(), test['Date'].max()

(Timestamp('2004-08-19 00:00:00'),

Timestamp('2014-12-15 00:00:00'),

Timestamp('2014-12-16 00:00:00'),

Timestamp('2018-03-27 00:00:00'))
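The split above is chronological rather than random: everything before a cut-off index is training data and everything after it is test data, so the model never sees the future. A minimal sketch of the same idea on a plain list, using a hypothetical helper name:

```python
def chronological_split(rows, n_train):
    """Split time-ordered rows into the first n_train rows and the rest."""
    if not 0 < n_train < len(rows):
        raise ValueError("n_train must leave at least one row on each side")
    return rows[:n_train], rows[n_train:]

# Stand-in for 3424 trading days, already sorted by date.
days = list(range(3424))
train_days, test_days = chronological_split(days, n_train=2600)

print(len(train_days), len(test_days))
# Every training day precedes every test day, so nothing leaks backwards in time.
print(max(train_days) < min(test_days))
```

A random shuffle would mix future prices into the training set and make any forecast look better than it really is.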

# Making predictions

preds = []

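for i in range(0, num):

    # Note: the slice starts 924 rows from the end of train, but the sum is divided by num (824),

    # so b is not a true mean of the window. The outputs below reflect the code as written.

    a = train['Adj. Close'][len(train)-924+i:].sum() + sum(preds)

    b = a/num

    preds.append(b)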

len(preds)

824
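For reference, here is a small sketch of a recursive moving-average forecast in which each prediction is the mean of the most recent `window` values, with earlier predictions feeding into later ones once the real history runs out. The function name, the window size, and the toy prices are assumptions for illustration. This is a cleaned-up variant and not a reproduction of the loop above, so it would not yield the same numbers.

```python
def moving_average_forecast(history, horizon, window):
    """Forecast `horizon` steps; each step is the mean of the last `window` values.

    Forecasts are appended to the series, so later steps average over a mix of
    real observations and earlier forecasts.
    """
    if window > len(history):
        raise ValueError("window cannot be longer than the history")
    series = list(history)
    forecasts = []
    for _ in range(horizon):
        next_value = sum(series[-window:]) / window
        forecasts.append(next_value)
        series.append(next_value)
    return forecasts

# Hypothetical closing prices.
history = [10.0, 12.0, 14.0, 16.0]
print(moving_average_forecast(history, horizon=3, window=2))
```

Notice that the forecasts settle below the last observed price even though the toy series rises steadily: a moving average cannot extrapolate a trend, which hurts it on a stock that keeps climbing.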

# Measure accuracy with rmse (Root Mean Squared Error)

rms=np.sqrt(np.mean(np.power((np.array(test['Adj. Close'])-preds),2)))

print(rms)

264.46002931639754
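RMSE squares each error, averages the squares, and takes the square root, so it is expressed in the same units as the price and penalizes large misses more than small ones. A minimal plain-Python sketch with made-up numbers:

```python
import math

def rmse(actual, predicted):
    """Square the errors, average them, then take the square root."""
    squared_errors = [(a - p) ** 2 for a, p in zip(actual, predicted)]
    return math.sqrt(sum(squared_errors) / len(squared_errors))

# Made-up numbers: errors of 3 and 4 give sqrt((9 + 16) / 2).
actual = [100.0, 100.0]
predicted = [103.0, 96.0]
print(rmse(actual, predicted))
```

Read that way, an RMSE of about 264 means the predictions miss by a few hundred dollars on a stock whose adjusted close peaked at 1187.56.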

test['Predictions'] = 0

test['Predictions'] = preds

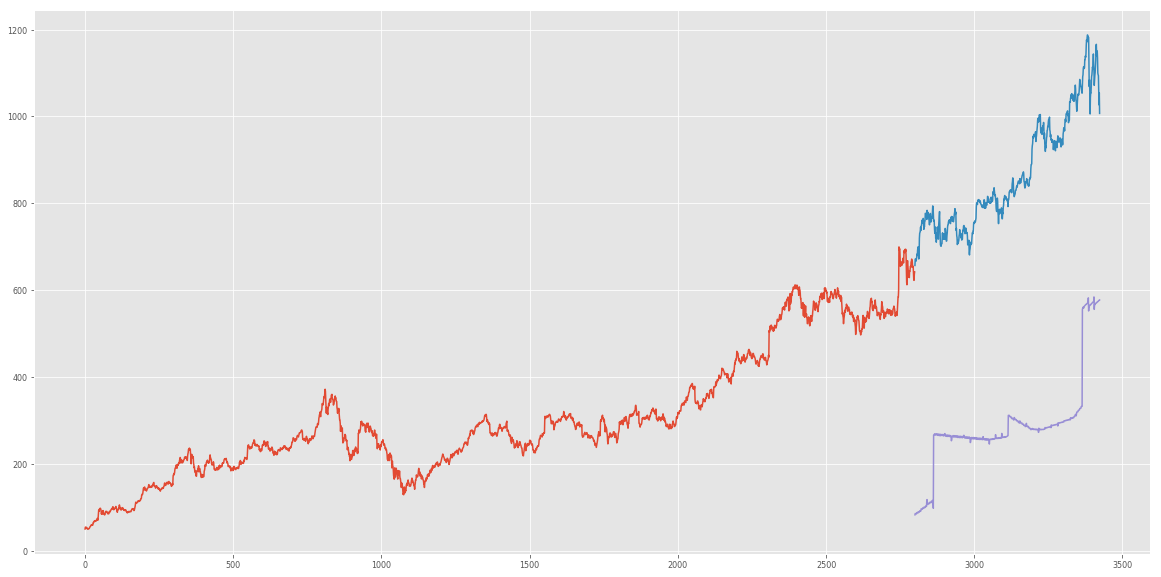

plt.plot(train['Adj. Close'])

plt.plot(test[['Adj. Close', 'Predictions']])

/usr/local/lib/python3.6/dist-packages/ipykernel_launcher.py:1: SettingWithCopyWarning:

A value is trying to be set on a copy of a slice from a DataFrame.

Try using .loc[row_indexer,col_indexer] = value instead

See the caveats in the documentation: http://pandas.pydata.org/pandas-docs/stable/indexing.html#indexing-view-versus-copy

/usr/local/lib/python3.6/dist-packages/ipykernel_launcher.py:2: SettingWithCopyWarning:

A value is trying to be set on a copy of a slice from a DataFrame.

Try using .loc[row_indexer,col_indexer] = value instead

See the caveats in the documentation: http://pandas.pydata.org/pandas-docs/stable/indexing.html#indexing-view-versus-copy

[<matplotlib.lines.Line2D at 0x7f6dee712940>,

<matplotlib.lines.Line2D at 0x7f6dee712b70>]

lr_data = goog_data

lr_data.head(50)


| Date (index) | Date | Open | High | Low | Close | Adj. Close | Volume |

|---|---|---|---|---|---|---|---|

| 2004-08-19 | 2004-08-19 | 100.010 | 104.06 | 95.96 | 100.335 | 50.322842 | 44659000.0 |

| 2004-08-20 | 2004-08-20 | 101.010 | 109.08 | 100.50 | 108.310 | 54.322689 | 22834300.0 |

| 2004-08-23 | 2004-08-23 | 110.760 | 113.48 | 109.05 | 109.400 | 54.869377 | 18256100.0 |

| 2004-08-24 | 2004-08-24 | 111.240 | 111.60 | 103.57 | 104.870 | 52.597363 | 15247300.0 |

| 2004-08-25 | 2004-08-25 | 104.760 | 108.00 | 103.88 | 106.000 | 53.164113 | 9188600.0 |

| 2004-08-26 | 2004-08-26 | 104.950 | 107.95 | 104.66 | 107.910 | 54.122070 | 7094800.0 |

| 2004-08-27 | 2004-08-27 | 108.100 | 108.62 | 105.69 | 106.150 | 53.239345 | 6211700.0 |

| 2004-08-30 | 2004-08-30 | 105.280 | 105.49 | 102.01 | 102.010 | 51.162935 | 5196700.0 |

| 2004-08-31 | 2004-08-31 | 102.320 | 103.71 | 102.16 | 102.370 | 51.343492 | 4917800.0 |

| 2004-09-01 | 2004-09-01 | 102.700 | 102.97 | 99.67 | 100.250 | 50.280210 | 9138200.0 |

| 2004-09-02 | 2004-09-02 | 99.090 | 102.37 | 98.94 | 101.510 | 50.912161 | 15118600.0 |

| 2004-09-03 | 2004-09-03 | 100.950 | 101.74 | 99.32 | 100.010 | 50.159839 | 5152400.0 |

| 2004-09-07 | 2004-09-07 | 101.010 | 102.00 | 99.61 | 101.580 | 50.947269 | 5847500.0 |

| 2004-09-08 | 2004-09-08 | 100.740 | 103.03 | 100.50 | 102.300 | 51.308384 | 4985600.0 |

| 2004-09-09 | 2004-09-09 | 102.500 | 102.71 | 101.00 | 102.310 | 51.313400 | 4061700.0 |

| 2004-09-10 | 2004-09-10 | 101.470 | 106.56 | 101.30 | 105.330 | 52.828075 | 8698800.0 |

| 2004-09-13 | 2004-09-13 | 106.630 | 108.41 | 106.46 | 107.500 | 53.916435 | 7844100.0 |

| 2004-09-14 | 2004-09-14 | 107.440 | 112.00 | 106.79 | 111.490 | 55.917612 | 10828900.0 |

| 2004-09-15 | 2004-09-15 | 110.560 | 114.23 | 110.20 | 112.000 | 56.173402 | 10713000.0 |

| 2004-09-16 | 2004-09-16 | 112.340 | 115.80 | 111.65 | 113.970 | 57.161452 | 9266300.0 |

| 2004-09-17 | 2004-09-17 | 114.420 | 117.49 | 113.55 | 117.490 | 58.926902 | 9472500.0 |

| 2004-09-20 | 2004-09-20 | 116.950 | 121.60 | 116.77 | 119.360 | 59.864797 | 10628700.0 |

| 2004-09-21 | 2004-09-21 | 120.200 | 120.42 | 117.51 | 117.840 | 59.102444 | 7228700.0 |

| 2004-09-22 | 2004-09-22 | 117.450 | 119.67 | 116.81 | 118.380 | 59.373280 | 7581200.0 |

| 2004-09-23 | 2004-09-23 | 118.840 | 122.63 | 117.02 | 120.820 | 60.597057 | 8535600.0 |

| 2004-09-24 | 2004-09-24 | 120.970 | 124.10 | 119.76 | 119.830 | 60.100525 | 9123400.0 |

| 2004-09-27 | 2004-09-27 | 119.560 | 120.88 | 117.80 | 118.260 | 59.313094 | 7066100.0 |

| 2004-09-28 | 2004-09-28 | 121.150 | 127.40 | 120.21 | 126.860 | 63.626409 | 16929000.0 |

| 2004-09-29 | 2004-09-29 | 126.530 | 135.02 | 126.23 | 131.080 | 65.742942 | 30516400.0 |

| 2004-09-30 | 2004-09-30 | 129.899 | 132.30 | 129.00 | 129.600 | 65.000651 | 13758000.0 |

| 2004-10-01 | 2004-10-01 | 130.800 | 134.24 | 128.90 | 132.580 | 66.495265 | 15124800.0 |

| 2004-10-04 | 2004-10-04 | 135.275 | 136.87 | 134.03 | 135.060 | 67.739104 | 13022700.0 |

| 2004-10-05 | 2004-10-05 | 134.660 | 138.53 | 132.24 | 138.370 | 69.399229 | 14973200.0 |

| 2004-10-06 | 2004-10-06 | 137.670 | 138.45 | 136.00 | 137.080 | 68.752232 | 13381400.0 |

| 2004-10-07 | 2004-10-07 | 136.560 | 139.88 | 136.55 | 138.850 | 69.639972 | 14115000.0 |

| 2004-10-08 | 2004-10-08 | 138.730 | 139.68 | 137.02 | 137.730 | 69.078238 | 11069500.0 |

| 2004-10-11 | 2004-10-11 | 137.010 | 138.86 | 133.85 | 135.260 | 67.839414 | 10472100.0 |

| 2004-10-12 | 2004-10-12 | 134.490 | 137.61 | 133.40 | 137.400 | 68.912727 | 11665500.0 |

| 2004-10-13 | 2004-10-13 | 143.240 | 143.55 | 140.08 | 140.900 | 70.668146 | 19766200.0 |

| 2004-10-14 | 2004-10-14 | 141.020 | 142.38 | 138.56 | 142.000 | 71.219849 | 10442100.0 |

| 2004-10-15 | 2004-10-15 | 144.950 | 145.50 | 141.95 | 144.110 | 72.278116 | 13194700.0 |

| 2004-10-18 | 2004-10-18 | 143.120 | 149.20 | 141.21 | 149.160 | 74.810934 | 14036300.0 |

| 2004-10-19 | 2004-10-19 | 150.500 | 152.40 | 147.35 | 147.940 | 74.199045 | 18109800.0 |

| 2004-10-20 | 2004-10-20 | 147.940 | 148.99 | 139.60 | 140.490 | 70.462511 | 22722600.0 |

| 2004-10-21 | 2004-10-21 | 144.130 | 150.13 | 141.62 | 149.380 | 74.921275 | 29149800.0 |

| 2004-10-22 | 2004-10-22 | 170.435 | 180.17 | 164.08 | 172.430 | 86.481962 | 73710000.0 |

| 2004-10-25 | 2004-10-25 | 176.280 | 194.43 | 172.55 | 187.400 | 93.990139 | 65462800.0 |

| 2004-10-26 | 2004-10-26 | 186.449 | 192.64 | 180.00 | 181.800 | 91.181468 | 44569500.0 |

| 2004-10-27 | 2004-10-27 | 182.509 | 189.52 | 181.77 | 185.970 | 93.272925 | 26686200.0 |

| 2004-10-28 | 2004-10-28 | 186.630 | 194.39 | 185.60 | 193.300 | 96.949273 | 29663900.0 |

# We'll create a separate dataset so that new features don't mess up the original data.

lr_data['Date'] = pd.to_datetime(lr_data.Date, format='%Y-%m-%d')

lr_data.index = lr_data['Date']

lr_data = lr_data.sort_index(ascending=True, axis=0)

new_data = pd.DataFrame(index=range(0, len(lr_data)), columns=['Date', 'Adj. Close'])

for i in range(0, len(lr_data)):

    new_data.loc[i, 'Date'] = lr_data['Date'].iloc[i]

    new_data.loc[i, 'Adj. Close'] = lr_data['Adj. Close'].iloc[i]

new_data.head(50)

| | Date | Adj. Close |

|---|---|---|

| 0 | 2004-08-19 00:00:00 | 50.3228 |

| 1 | 2004-08-20 00:00:00 | 54.3227 |

| 2 | 2004-08-23 00:00:00 | 54.8694 |

| 3 | 2004-08-24 00:00:00 | 52.5974 |

| 4 | 2004-08-25 00:00:00 | 53.1641 |

| 5 | 2004-08-26 00:00:00 | 54.1221 |

| 6 | 2004-08-27 00:00:00 | 53.2393 |

| 7 | 2004-08-30 00:00:00 | 51.1629 |

| 8 | 2004-08-31 00:00:00 | 51.3435 |

| 9 | 2004-09-01 00:00:00 | 50.2802 |

| 10 | 2004-09-02 00:00:00 | 50.9122 |

| 11 | 2004-09-03 00:00:00 | 50.1598 |

| 12 | 2004-09-07 00:00:00 | 50.9473 |

| 13 | 2004-09-08 00:00:00 | 51.3084 |

| 14 | 2004-09-09 00:00:00 | 51.3134 |

| 15 | 2004-09-10 00:00:00 | 52.8281 |

| 16 | 2004-09-13 00:00:00 | 53.9164 |

| 17 | 2004-09-14 00:00:00 | 55.9176 |

| 18 | 2004-09-15 00:00:00 | 56.1734 |

| 19 | 2004-09-16 00:00:00 | 57.1615 |

| 20 | 2004-09-17 00:00:00 | 58.9269 |

| 21 | 2004-09-20 00:00:00 | 59.8648 |

| 22 | 2004-09-21 00:00:00 | 59.1024 |

| 23 | 2004-09-22 00:00:00 | 59.3733 |

| 24 | 2004-09-23 00:00:00 | 60.5971 |

| 25 | 2004-09-24 00:00:00 | 60.1005 |

| 26 | 2004-09-27 00:00:00 | 59.3131 |

| 27 | 2004-09-28 00:00:00 | 63.6264 |

| 28 | 2004-09-29 00:00:00 | 65.7429 |

| 29 | 2004-09-30 00:00:00 | 65.0007 |

| 30 | 2004-10-01 00:00:00 | 66.4953 |

| 31 | 2004-10-04 00:00:00 | 67.7391 |

| 32 | 2004-10-05 00:00:00 | 69.3992 |

| 33 | 2004-10-06 00:00:00 | 68.7522 |

| 34 | 2004-10-07 00:00:00 | 69.64 |

| 35 | 2004-10-08 00:00:00 | 69.0782 |

| 36 | 2004-10-11 00:00:00 | 67.8394 |

| 37 | 2004-10-12 00:00:00 | 68.9127 |

| 38 | 2004-10-13 00:00:00 | 70.6681 |

| 39 | 2004-10-14 00:00:00 | 71.2198 |

| 40 | 2004-10-15 00:00:00 | 72.2781 |

| 41 | 2004-10-18 00:00:00 | 74.8109 |

| 42 | 2004-10-19 00:00:00 | 74.199 |

| 43 | 2004-10-20 00:00:00 | 70.4625 |

| 44 | 2004-10-21 00:00:00 | 74.9213 |

| 45 | 2004-10-22 00:00:00 | 86.482 |

| 46 | 2004-10-25 00:00:00 | 93.9901 |

| 47 | 2004-10-26 00:00:00 | 91.1815 |

| 48 | 2004-10-27 00:00:00 | 93.2729 |

| 49 | 2004-10-28 00:00:00 | 96.9493 |
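The row-by-row copy above works, but the same two-column frame can be built without a loop. Here is a minimal sketch, assuming a tiny made-up frame (`lr_data_demo`, with a throwaway `Volume` column) in place of the real `lr_data`; only the column names `Date` and `Adj. Close` come from the data above.

```python
import pandas as pd

# Hypothetical stand-in for lr_data: same column names, deliberately out of order.
lr_data_demo = pd.DataFrame({
    'Date': ['2004-08-20', '2004-08-19', '2004-08-23'],
    'Adj. Close': [54.3227, 50.3228, 54.8694],
    'Volume': [1, 2, 3],  # extra column we do not want to carry over
})
lr_data_demo['Date'] = pd.to_datetime(lr_data_demo['Date'], format='%Y-%m-%d')

# Sort chronologically, keep the two columns of interest,
# and reset to a plain 0..n-1 integer index.
new_data_demo = (
    lr_data_demo.sort_values('Date')[['Date', 'Adj. Close']]
    .reset_index(drop=True)
    .copy()
)

print(new_data_demo)
```

Because the result is an independent copy, adding feature columns to it later leaves the original frame untouched, which is the point of creating a separate dataset in the first place.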

!pip install fastai==0.7.0

from fastai.structured import add_datepart

add_datepart(new_data, 'Date')

new_data.drop('Elapsed', axis=1, inplace=True)

new_data.head(50)

| | Adj. Close | Year | Month | Week | Day | Dayofweek | Dayofyear | Is_month_end | Is_month_start | Is_quarter_end | Is_quarter_start | Is_year_end | Is_year_start |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 50.3228 | 2004 | 8 | 34 | 19 | 3 | 232 | False | False | False | False | False | False |

| 1 | 54.3227 | 2004 | 8 | 34 | 20 | 4 | 233 | False | False | False | False | False | False |

| 2 | 54.8694 | 2004 | 8 | 35 | 23 | 0 | 236 | False | False | False | False | False | False |

| 3 | 52.5974 | 2004 | 8 | 35 | 24 | 1 | 237 | False | False | False | False | False | False |

| 4 | 53.1641 | 2004 | 8 | 35 | 25 | 2 | 238 | False | False | False | False | False | False |

| 5 | 54.1221 | 2004 | 8 | 35 | 26 | 3 | 239 | False | False | False | False | False | False |

| 6 | 53.2393 | 2004 | 8 | 35 | 27 | 4 | 240 | False | False | False | False | False | False |

| 7 | 51.1629 | 2004 | 8 | 36 | 30 | 0 | 243 | False | False | False | False | False | False |

| 8 | 51.3435 | 2004 | 8 | 36 | 31 | 1 | 244 | True | False | False | False | False | False |

| 9 | 50.2802 | 2004 | 9 | 36 | 1 | 2 | 245 | False | True | False | False | False | False |

| 10 | 50.9122 | 2004 | 9 | 36 | 2 | 3 | 246 | False | False | False | False | False | False |

| 11 | 50.1598 | 2004 | 9 | 36 | 3 | 4 | 247 | False | False | False | False | False | False |

| 12 | 50.9473 | 2004 | 9 | 37 | 7 | 1 | 251 | False | False | False | False | False | False |

| 13 | 51.3084 | 2004 | 9 | 37 | 8 | 2 | 252 | False | False | False | False | False | False |

| 14 | 51.3134 | 2004 | 9 | 37 | 9 | 3 | 253 | False | False | False | False | False | False |

| 15 | 52.8281 | 2004 | 9 | 37 | 10 | 4 | 254 | False | False | False | False | False | False |

| 16 | 53.9164 | 2004 | 9 | 38 | 13 | 0 | 257 | False | False | False | False | False | False |

| 17 | 55.9176 | 2004 | 9 | 38 | 14 | 1 | 258 | False | False | False | False | False | False |

| 18 | 56.1734 | 2004 | 9 | 38 | 15 | 2 | 259 | False | False | False | False | False | False |

| 19 | 57.1615 | 2004 | 9 | 38 | 16 | 3 | 260 | False | False | False | False | False | False |

| 20 | 58.9269 | 2004 | 9 | 38 | 17 | 4 | 261 | False | False | False | False | False | False |

| 21 | 59.8648 | 2004 | 9 | 39 | 20 | 0 | 264 | False | False | False | False | False | False |

| 22 | 59.1024 | 2004 | 9 | 39 | 21 | 1 | 265 | False | False | False | False | False | False |

| 23 | 59.3733 | 2004 | 9 | 39 | 22 | 2 | 266 | False | False | False | False | False | False |

| 24 | 60.5971 | 2004 | 9 | 39 | 23 | 3 | 267 | False | False | False | False | False | False |

| 25 | 60.1005 | 2004 | 9 | 39 | 24 | 4 | 268 | False | False | False | False | False | False |

| 26 | 59.3131 | 2004 | 9 | 40 | 27 | 0 | 271 | False | False | False | False | False | False |

| 27 | 63.6264 | 2004 | 9 | 40 | 28 | 1 | 272 | False | False | False | False | False | False |

| 28 | 65.7429 | 2004 | 9 | 40 | 29 | 2 | 273 | False | False | False | False | False | False |

| 29 | 65.0007 | 2004 | 9 | 40 | 30 | 3 | 274 | True | False | True | False | False | False |

| 30 | 66.4953 | 2004 | 10 | 40 | 1 | 4 | 275 | False | True | False | True | False | False |

| 31 | 67.7391 | 2004 | 10 | 41 | 4 | 0 | 278 | False | False | False | False | False | False |

| 32 | 69.3992 | 2004 | 10 | 41 | 5 | 1 | 279 | False | False | False | False | False | False |

| 33 | 68.7522 | 2004 | 10 | 41 | 6 | 2 | 280 | False | False | False | False | False | False |

| 34 | 69.64 | 2004 | 10 | 41 | 7 | 3 | 281 | False | False | False | False | False | False |

| 35 | 69.0782 | 2004 | 10 | 41 | 8 | 4 | 282 | False | False | False | False | False | False |

| 36 | 67.8394 | 2004 | 10 | 42 | 11 | 0 | 285 | False | False | False | False | False | False |

| 37 | 68.9127 | 2004 | 10 | 42 | 12 | 1 | 286 | False | False | False | False | False | False |

| 38 | 70.6681 | 2004 | 10 | 42 | 13 | 2 | 287 | False | False | False | False | False | False |

| 39 | 71.2198 | 2004 | 10 | 42 | 14 | 3 | 288 | False | False | False | False | False | False |

| 40 | 72.2781 | 2004 | 10 | 42 | 15 | 4 | 289 | False | False | False | False | False | False |

| 41 | 74.8109 | 2004 | 10 | 43 | 18 | 0 | 292 | False | False | False | False | False | False |

| 42 | 74.199 | 2004 | 10 | 43 | 19 | 1 | 293 | False | False | False | False | False | False |

| 43 | 70.4625 | 2004 | 10 | 43 | 20 | 2 | 294 | False | False | False | False | False | False |

| 44 | 74.9213 | 2004 | 10 | 43 | 21 | 3 | 295 | False | False | False | False | False | False |

| 45 | 86.482 | 2004 | 10 | 43 | 22 | 4 | 296 | False | False | False | False | False | False |

| 46 | 93.9901 | 2004 | 10 | 44 | 25 | 0 | 299 | False | False | False | False | False | False |

| 47 | 91.1815 | 2004 | 10 | 44 | 26 | 1 | 300 | False | False | False | False | False | False |

| 48 | 93.2729 | 2004 | 10 | 44 | 27 | 2 | 301 | False | False | False | False | False | False |

| 49 | 96.9493 | 2004 | 10 | 44 | 28 | 3 | 302 | False | False | False | False | False | False |
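`add_datepart` comes from the pinned fastai 0.7.0 release. If you would rather not install that version, the columns in the table above can be approximated with plain pandas. This is only a rough sketch: the helper name `add_date_features` is made up for illustration, it is not fastai's implementation, and `dt.isocalendar()` assumes pandas 1.1 or newer.

```python
import pandas as pd

def add_date_features(df, col):
    """Expand a datetime column into calendar features, then drop the column."""
    dates = pd.to_datetime(df[col])
    out = df.drop(columns=[col]).copy()
    out['Year'] = dates.dt.year
    out['Month'] = dates.dt.month
    out['Week'] = dates.dt.isocalendar().week.astype(int)  # ISO week number
    out['Day'] = dates.dt.day
    out['Dayofweek'] = dates.dt.dayofweek  # Monday is 0
    out['Dayofyear'] = dates.dt.dayofyear
    # Boolean calendar flags, named like the columns in the table above.
    for flag in ['is_month_end', 'is_month_start', 'is_quarter_end',
                 'is_quarter_start', 'is_year_end', 'is_year_start']:
        out[flag.capitalize()] = getattr(dates.dt, flag)
    return out

# Three dates taken from the table above.
demo = pd.DataFrame({
    'Date': ['2004-08-19', '2004-08-31', '2004-09-30'],
    'Adj. Close': [50.3228, 51.3435, 65.0007],
})
demo_features = add_date_features(demo, 'Date')
print(demo_features.T)
```

None of these features know anything about price. They only describe where a row sits on the calendar, which is worth keeping in mind when judging the models that follow.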

# Train-test split

train = new_data[:2600]

test = new_data[2600:]

x_train = train.drop('Adj. Close', axis=1)

y_train = train['Adj. Close']

x_test = test.drop('Adj. Close', axis=1)

y_test = test['Adj. Close']

x_train

| | Year | Month | Week | Day | Dayofweek | Dayofyear | Is_month_end | Is_month_start | Is_quarter_end | Is_quarter_start | Is_year_end | Is_year_start |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 2004 | 8 | 34 | 19 | 3 | 232 | False | False | False | False | False | False |

| 1 | 2004 | 8 | 34 | 20 | 4 | 233 | False | False | False | False | False | False |

| 2 | 2004 | 8 | 35 | 23 | 0 | 236 | False | False | False | False | False | False |

| 3 | 2004 | 8 | 35 | 24 | 1 | 237 | False | False | False | False | False | False |

| 4 | 2004 | 8 | 35 | 25 | 2 | 238 | False | False | False | False | False | False |

| 5 | 2004 | 8 | 35 | 26 | 3 | 239 | False | False | False | False | False | False |

| 6 | 2004 | 8 | 35 | 27 | 4 | 240 | False | False | False | False | False | False |

| 7 | 2004 | 8 | 36 | 30 | 0 | 243 | False | False | False | False | False | False |

| 8 | 2004 | 8 | 36 | 31 | 1 | 244 | True | False | False | False | False | False |

| 9 | 2004 | 9 | 36 | 1 | 2 | 245 | False | True | False | False | False | False |

| 10 | 2004 | 9 | 36 | 2 | 3 | 246 | False | False | False | False | False | False |

| 11 | 2004 | 9 | 36 | 3 | 4 | 247 | False | False | False | False | False | False |

| 12 | 2004 | 9 | 37 | 7 | 1 | 251 | False | False | False | False | False | False |

| 13 | 2004 | 9 | 37 | 8 | 2 | 252 | False | False | False | False | False | False |

| 14 | 2004 | 9 | 37 | 9 | 3 | 253 | False | False | False | False | False | False |

| 15 | 2004 | 9 | 37 | 10 | 4 | 254 | False | False | False | False | False | False |

| 16 | 2004 | 9 | 38 | 13 | 0 | 257 | False | False | False | False | False | False |

| 17 | 2004 | 9 | 38 | 14 | 1 | 258 | False | False | False | False | False | False |

| 18 | 2004 | 9 | 38 | 15 | 2 | 259 | False | False | False | False | False | False |

| 19 | 2004 | 9 | 38 | 16 | 3 | 260 | False | False | False | False | False | False |

| 20 | 2004 | 9 | 38 | 17 | 4 | 261 | False | False | False | False | False | False |

| 21 | 2004 | 9 | 39 | 20 | 0 | 264 | False | False | False | False | False | False |

| 22 | 2004 | 9 | 39 | 21 | 1 | 265 | False | False | False | False | False | False |

| 23 | 2004 | 9 | 39 | 22 | 2 | 266 | False | False | False | False | False | False |

| 24 | 2004 | 9 | 39 | 23 | 3 | 267 | False | False | False | False | False | False |

| 25 | 2004 | 9 | 39 | 24 | 4 | 268 | False | False | False | False | False | False |

| 26 | 2004 | 9 | 40 | 27 | 0 | 271 | False | False | False | False | False | False |

| 27 | 2004 | 9 | 40 | 28 | 1 | 272 | False | False | False | False | False | False |

| 28 | 2004 | 9 | 40 | 29 | 2 | 273 | False | False | False | False | False | False |

| 29 | 2004 | 9 | 40 | 30 | 3 | 274 | True | False | True | False | False | False |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 2570 | 2014 | 11 | 45 | 3 | 0 | 307 | False | False | False | False | False | False |

| 2571 | 2014 | 11 | 45 | 4 | 1 | 308 | False | False | False | False | False | False |

| 2572 | 2014 | 11 | 45 | 5 | 2 | 309 | False | False | False | False | False | False |

| 2573 | 2014 | 11 | 45 | 6 | 3 | 310 | False | False | False | False | False | False |

| 2574 | 2014 | 11 | 45 | 7 | 4 | 311 | False | False | False | False | False | False |

| 2575 | 2014 | 11 | 46 | 10 | 0 | 314 | False | False | False | False | False | False |

| 2576 | 2014 | 11 | 46 | 11 | 1 | 315 | False | False | False | False | False | False |

| 2577 | 2014 | 11 | 46 | 12 | 2 | 316 | False | False | False | False | False | False |

| 2578 | 2014 | 11 | 46 | 13 | 3 | 317 | False | False | False | False | False | False |

| 2579 | 2014 | 11 | 46 | 14 | 4 | 318 | False | False | False | False | False | False |

| 2580 | 2014 | 11 | 47 | 17 | 0 | 321 | False | False | False | False | False | False |

| 2581 | 2014 | 11 | 47 | 18 | 1 | 322 | False | False | False | False | False | False |

| 2582 | 2014 | 11 | 47 | 19 | 2 | 323 | False | False | False | False | False | False |

| 2583 | 2014 | 11 | 47 | 20 | 3 | 324 | False | False | False | False | False | False |

| 2584 | 2014 | 11 | 47 | 21 | 4 | 325 | False | False | False | False | False | False |

| 2585 | 2014 | 11 | 48 | 24 | 0 | 328 | False | False | False | False | False | False |

| 2586 | 2014 | 11 | 48 | 25 | 1 | 329 | False | False | False | False | False | False |

| 2587 | 2014 | 11 | 48 | 26 | 2 | 330 | False | False | False | False | False | False |

| 2588 | 2014 | 11 | 48 | 28 | 4 | 332 | False | False | False | False | False | False |

| 2589 | 2014 | 12 | 49 | 1 | 0 | 335 | False | True | False | False | False | False |

| 2590 | 2014 | 12 | 49 | 2 | 1 | 336 | False | False | False | False | False | False |

| 2591 | 2014 | 12 | 49 | 3 | 2 | 337 | False | False | False | False | False | False |

| 2592 | 2014 | 12 | 49 | 4 | 3 | 338 | False | False | False | False | False | False |

| 2593 | 2014 | 12 | 49 | 5 | 4 | 339 | False | False | False | False | False | False |

| 2594 | 2014 | 12 | 50 | 8 | 0 | 342 | False | False | False | False | False | False |

| 2595 | 2014 | 12 | 50 | 9 | 1 | 343 | False | False | False | False | False | False |

| 2596 | 2014 | 12 | 50 | 10 | 2 | 344 | False | False | False | False | False | False |

| 2597 | 2014 | 12 | 50 | 11 | 3 | 345 | False | False | False | False | False | False |

| 2598 | 2014 | 12 | 50 | 12 | 4 | 346 | False | False | False | False | False | False |

| 2599 | 2014 | 12 | 51 | 15 | 0 | 349 | False | False | False | False | False | False |

2600 rows × 12 columns
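Note that the split above is chronological: the first 2600 rows train the model and everything after that is held out, so the model never sees the future. The same idea can be wrapped in a small helper. This is a sketch on a toy frame; `chronological_split` and the demo values are illustrative names, not part of the original notebook.

```python
import pandas as pd

def chronological_split(frame, n_train, target):
    """Split a time-ordered frame into train/test features and targets."""
    train_part = frame.iloc[:n_train]
    test_part = frame.iloc[n_train:]
    return (
        train_part.drop(columns=[target]), train_part[target],
        test_part.drop(columns=[target]), test_part[target],
    )

# Toy frame, already sorted by date (values borrowed from the first rows above).
split_demo = pd.DataFrame({
    'Year': [2004] * 6,
    'Dayofyear': [232, 233, 236, 237, 238, 239],
    'Adj. Close': [50.3228, 54.3227, 54.8694, 52.5974, 53.1641, 54.1221],
})

x_tr_demo, y_tr_demo, x_te_demo, y_te_demo = chronological_split(
    split_demo, n_train=4, target='Adj. Close'
)
print(len(x_tr_demo), len(x_te_demo))
```

A shuffled split would leak later prices into training, which makes any time-series score look better than it really is.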

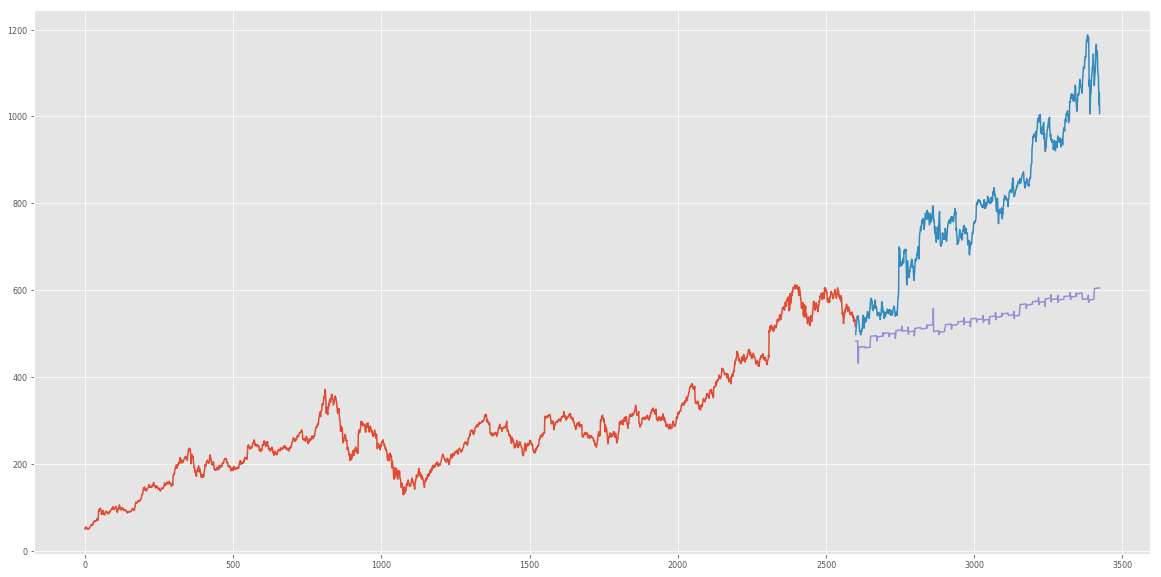

# Implementing linear regression

from sklearn.linear_model import LinearRegression

model = LinearRegression()

model.fit(x_train, y_train)

LinearRegression(copy_X=True, fit_intercept=True, n_jobs=None, normalize=False)

# Predictions

preds = model.predict(x_test)

rms = np.sqrt(np.mean(np.power((np.array(y_test)-np.array(preds)),2)))

print(rms)

292.21562094558186
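The `rms` line above computes the root mean squared error: square each prediction error, average the squares, take the square root. Since the same expression is reused for every model, it may be worth a small helper. Here is a sketch on made-up numbers (`y_true_demo` and `y_pred_demo` are illustrative, not results from this notebook), cross-checked against scikit-learn's `mean_squared_error`.

```python
import numpy as np
from sklearn.metrics import mean_squared_error

def rmse(y_true, y_pred):
    """Root mean squared error between two equally long sequences."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return float(np.sqrt(np.mean((y_true - y_pred) ** 2)))

# Illustrative values only: the errors are 2, -1 and -4.
y_true_demo = [100.0, 102.0, 101.0]
y_pred_demo = [98.0, 103.0, 105.0]

print(rmse(y_true_demo, y_pred_demo))
print(np.sqrt(mean_squared_error(y_true_demo, y_pred_demo)))
```

RMSE is in the same units as the target, so the 292.2 above is an error in dollars of adjusted close price.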

# Plot

test = test.copy()  # take an explicit copy so adding a column does not raise SettingWithCopyWarning

test['Predictions'] = preds

plt.plot(train['Adj. Close'])

plt.plot(test[['Adj. Close', 'Predictions']])

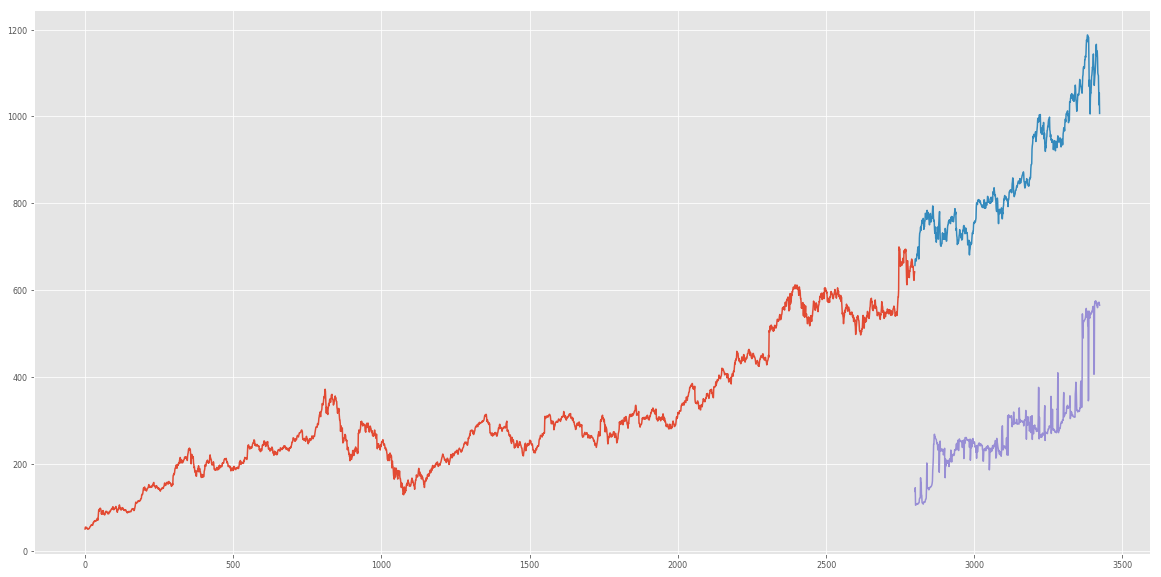

from sklearn import neighbors

from sklearn.model_selection import GridSearchCV

from sklearn.preprocessing import MinMaxScaler

scaler = MinMaxScaler(feature_range=(0, 1))

# scaling the data

x_train_scaled = scaler.fit_transform(x_train)

x_train = pd.DataFrame(x_train_scaled)

x_test_scaled = scaler.transform(x_test)  # reuse the scaler fitted on the training data

x_test = pd.DataFrame(x_test_scaled)

# using gridsearch to find the best value of k

params = {'n_neighbors': [1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15]}

knn = neighbors.KNeighborsRegressor()

model = GridSearchCV(knn, params, cv=5)

# fitting the model and predicting

model.fit(x_train, y_train)

preds = model.predict(x_test)

# Results

rms = np.sqrt(np.mean(np.power((np.array(y_test)-np.array(preds)),2)))

print(rms)

589.3454139574452
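One way to keep the scaling honest is to put the scaler and the regressor in a single scikit-learn `Pipeline`, so each cross-validation fold fits the scaler on its own training portion only. The sketch below also swaps the plain `cv=5` for `TimeSeriesSplit`, which is my substitution rather than what the notebook does. It runs on synthetic data: `X_demo` and `y_demo` are made-up stand-ins, not the GOOGL features.

```python
import numpy as np
from sklearn.model_selection import GridSearchCV, TimeSeriesSplit
from sklearn.neighbors import KNeighborsRegressor
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import MinMaxScaler

# Synthetic upward-trending series standing in for the real data.
rng = np.random.default_rng(0)
X_demo = np.arange(200, dtype=float).reshape(-1, 1)
y_demo = 0.5 * X_demo.ravel() + rng.normal(scale=1.0, size=200)

# Chronological split: first 160 points train, last 40 test.
X_tr, X_te = X_demo[:160], X_demo[160:]
y_tr, y_te = y_demo[:160], y_demo[160:]

pipe = Pipeline([
    ('scale', MinMaxScaler(feature_range=(0, 1))),
    ('knn', KNeighborsRegressor()),
])

search = GridSearchCV(
    pipe,
    {'knn__n_neighbors': list(range(1, 16))},
    cv=TimeSeriesSplit(n_splits=5),  # each fold validates on later data only
    scoring='neg_root_mean_squared_error',
)
search.fit(X_tr, y_tr)
knn_preds = search.predict(X_te)

print(search.best_params_)
print(knn_preds.max(), y_tr.max())
```

A KNN prediction is an average of training targets, so it can never exceed the highest price seen in training. On a stock that keeps climbing, that goes a long way toward explaining a large test error.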

test = test.copy()  # explicit copy avoids SettingWithCopyWarning

test['Predictions'] = preds

plt.plot(train['Adj. Close'])

plt.plot(test[['Adj. Close', 'Predictions']])

import tensorflow as tf

from tensorflow.keras import layers

model = tf.keras.models.Sequential()

model.add(tf.keras.layers.Dense(100, activation=tf.nn.relu))

model.add(tf.keras.layers.Dense(100, activation=tf.nn.relu))

model.add(tf.keras.layers.Dense(1, activation=tf.nn.relu))

model.compile(optimizer='adam', loss='mean_squared_error')

X_train = np.array(x_train)

Y_train = np.array(y_train)

X_train

array([[0. , 0.63636, 0.63462, ..., 0. , 0. , 0. ],

[0. , 0.63636, 0.63462, ..., 0. , 0. , 0. ],

[0. , 0.63636, 0.65385, ..., 0. , 0. , 0. ],

...,

[1. , 0.72727, 0.75 , ..., 0. , 0. , 0. ],

[1. , 0.72727, 0.75 , ..., 0. , 0. , 0. ],

[1. , 0.81818, 0.75 , ..., 1. , 0. , 0. ]])

X_train.shape

(2800, 12)

model.fit(X_train, Y_train, epochs=500)

Epoch 1/500

2800/2800 [==============================] - 1s 297us/sample - loss: 107042.6812

Epoch 2/500

2800/2800 [==============================] - 0s 67us/sample - loss: 41312.2483

Epoch 3/500

2800/2800 [==============================] - 0s 60us/sample - loss: 18302.2589

Epoch 4/500

2800/2800 [==============================] - 0s 66us/sample - loss: 14739.7240

Epoch 5/500

2800/2800 [==============================] - 0s 65us/sample - loss: 11421.5729

Epoch 6/500

2800/2800 [==============================] - 0s 64us/sample - loss: 8606.6912

...

Epoch 170/500

2800/2800 [==============================] - 0s 60us/sample - loss: 1247.9147

Epoch 171/500

2800/2800 [==============================] - 0s 60us/sample - loss: 1255.3040

Epoch 172/500

2800/2800 [==============================] - 0s 61us/sample - loss: 1246.4044

Epoch 173/500

2800/2800 [==============================] - 0s 60us/sample - loss: 1248.9053

Epoch 174/500

2800/2800 [==============================] - 0s 66us/sample - loss: 1235.4831

Epoch 175/500

2800/2800 [==============================] - 0s 61us/sample - loss: 1235.7729

Epoch 176/500

2800/2800 [==============================] - 0s 63us/sample - loss: 1231.1521

Epoch 177/500

2800/2800 [==============================] - 0s 62us/sample - loss: 1229.4448

Epoch 178/500

2800/2800 [==============================] - 0s 63us/sample - loss: 1235.0874

Epoch 179/500

2800/2800 [==============================] - 0s 63us/sample - loss: 1236.4246

Epoch 180/500

2800/2800 [==============================] - 0s 62us/sample - loss: 1234.3112

Epoch 181/500

2800/2800 [==============================] - 0s 60us/sample - loss: 1241.1206

Epoch 182/500

2800/2800 [==============================] - 0s 55us/sample - loss: 1228.0536

Epoch 183/500

2800/2800 [==============================] - 0s 61us/sample - loss: 1234.6207

Epoch 184/500

2800/2800 [==============================] - 0s 61us/sample - loss: 1218.8249

Epoch 185/500

2800/2800 [==============================] - 0s 55us/sample - loss: 1227.5636

Epoch 186/500

2800/2800 [==============================] - 0s 61us/sample - loss: 1231.7618

Epoch 187/500

2800/2800 [==============================] - 0s 57us/sample - loss: 1211.7460

Epoch 188/500

2800/2800 [==============================] - 0s 59us/sample - loss: 1214.7899

Epoch 189/500

2800/2800 [==============================] - 0s 64us/sample - loss: 1227.6510

Epoch 190/500

2800/2800 [==============================] - 0s 60us/sample - loss: 1221.4233

Epoch 191/500

2800/2800 [==============================] - 0s 60us/sample - loss: 1208.9139

Epoch 192/500

2800/2800 [==============================] - 0s 60us/sample - loss: 1211.7745

Epoch 193/500

2800/2800 [==============================] - 0s 59us/sample - loss: 1212.7552

Epoch 194/500

2800/2800 [==============================] - 0s 55us/sample - loss: 1225.5838

Epoch 195/500

2800/2800 [==============================] - 0s 56us/sample - loss: 1203.9864

Epoch 196/500

2800/2800 [==============================] - 0s 55us/sample - loss: 1192.8778

Epoch 197/500

2800/2800 [==============================] - 0s 60us/sample - loss: 1196.5854

Epoch 198/500

2800/2800 [==============================] - 0s 59us/sample - loss: 1195.8245

Epoch 199/500

2800/2800 [==============================] - 0s 68us/sample - loss: 1205.7381

Epoch 200/500

2800/2800 [==============================] - 0s 58us/sample - loss: 1232.1300

Epoch 201/500

2800/2800 [==============================] - 0s 56us/sample - loss: 1206.4220

Epoch 202/500

2800/2800 [==============================] - 0s 60us/sample - loss: 1208.0591

Epoch 203/500

2800/2800 [==============================] - 0s 60us/sample - loss: 1184.6516

Epoch 204/500

2800/2800 [==============================] - 0s 59us/sample - loss: 1182.5998

Epoch 205/500

2800/2800 [==============================] - 0s 56us/sample - loss: 1181.9274

Epoch 206/500

2800/2800 [==============================] - 0s 61us/sample - loss: 1192.6584

Epoch 207/500

2800/2800 [==============================] - 0s 61us/sample - loss: 1193.7265

Epoch 208/500

2800/2800 [==============================] - 0s 60us/sample - loss: 1186.7215

Epoch 209/500

2800/2800 [==============================] - 0s 59us/sample - loss: 1199.1962

Epoch 210/500

2800/2800 [==============================] - 0s 58us/sample - loss: 1173.3175

Epoch 211/500

2800/2800 [==============================] - 0s 60us/sample - loss: 1170.1261

Epoch 212/500

2800/2800 [==============================] - 0s 60us/sample - loss: 1168.3255

Epoch 213/500

2800/2800 [==============================] - 0s 61us/sample - loss: 1168.4484

Epoch 214/500

2800/2800 [==============================] - 0s 60us/sample - loss: 1177.4722

Epoch 215/500

2800/2800 [==============================] - 0s 62us/sample - loss: 1167.2033

Epoch 216/500

2800/2800 [==============================] - 0s 55us/sample - loss: 1168.7120

Epoch 217/500

2800/2800 [==============================] - 0s 59us/sample - loss: 1161.4171

Epoch 218/500

2800/2800 [==============================] - 0s 56us/sample - loss: 1179.3264

Epoch 219/500

2800/2800 [==============================] - 0s 60us/sample - loss: 1168.1229

Epoch 220/500

2800/2800 [==============================] - 0s 58us/sample - loss: 1163.9322

Epoch 221/500

2800/2800 [==============================] - 0s 57us/sample - loss: 1168.4568

Epoch 222/500

2800/2800 [==============================] - 0s 61us/sample - loss: 1155.7791

Epoch 223/500

2800/2800 [==============================] - 0s 59us/sample - loss: 1159.8121

Epoch 224/500

2800/2800 [==============================] - 0s 60us/sample - loss: 1144.4758

Epoch 225/500

2800/2800 [==============================] - 0s 65us/sample - loss: 1138.5819

Epoch 226/500

2800/2800 [==============================] - 0s 68us/sample - loss: 1150.9946

Epoch 227/500

2800/2800 [==============================] - 0s 55us/sample - loss: 1142.0983

Epoch 228/500

2800/2800 [==============================] - 0s 62us/sample - loss: 1139.8449

Epoch 229/500

2800/2800 [==============================] - 0s 56us/sample - loss: 1138.2159

Epoch 230/500

2800/2800 [==============================] - 0s 58us/sample - loss: 1132.8268

Epoch 231/500

2800/2800 [==============================] - 0s 63us/sample - loss: 1144.6047

Epoch 232/500

2800/2800 [==============================] - 0s 60us/sample - loss: 1145.6636

Epoch 233/500

2800/2800 [==============================] - 0s 56us/sample - loss: 1131.2519

Epoch 234/500

2800/2800 [==============================] - 0s 67us/sample - loss: 1163.3628

Epoch 235/500

2800/2800 [==============================] - 0s 55us/sample - loss: 1132.9240

Epoch 236/500

2800/2800 [==============================] - 0s 61us/sample - loss: 1123.2252

Epoch 237/500

2800/2800 [==============================] - 0s 62us/sample - loss: 1143.7624

Epoch 238/500

2800/2800 [==============================] - 0s 66us/sample - loss: 1131.8123

Epoch 239/500

2800/2800 [==============================] - 0s 55us/sample - loss: 1120.6352

Epoch 240/500

2800/2800 [==============================] - 0s 61us/sample - loss: 1123.3857

Epoch 241/500

2800/2800 [==============================] - 0s 66us/sample - loss: 1129.1544

Epoch 242/500

2800/2800 [==============================] - 0s 62us/sample - loss: 1121.9190

Epoch 243/500

2800/2800 [==============================] - 0s 62us/sample - loss: 1116.0550

Epoch 244/500

2800/2800 [==============================] - 0s 59us/sample - loss: 1126.5603

Epoch 245/500

2800/2800 [==============================] - 0s 59us/sample - loss: 1115.0059

Epoch 246/500

2800/2800 [==============================] - 0s 60us/sample - loss: 1123.4432

Epoch 247/500

2800/2800 [==============================] - 0s 58us/sample - loss: 1108.1146

Epoch 248/500

2800/2800 [==============================] - 0s 62us/sample - loss: 1109.9104

Epoch 249/500

2800/2800 [==============================] - 0s 56us/sample - loss: 1115.1202

Epoch 250/500

2800/2800 [==============================] - 0s 60us/sample - loss: 1116.5792

Epoch 251/500

2800/2800 [==============================] - 0s 60us/sample - loss: 1109.9456

Epoch 252/500

2800/2800 [==============================] - 0s 60us/sample - loss: 1111.4490

Epoch 253/500

2800/2800 [==============================] - 0s 60us/sample - loss: 1108.3779

Epoch 254/500

2800/2800 [==============================] - 0s 61us/sample - loss: 1104.7345

Epoch 255/500

2800/2800 [==============================] - 0s 59us/sample - loss: 1112.3371

Epoch 256/500

2800/2800 [==============================] - 0s 64us/sample - loss: 1104.8332

Epoch 257/500

2800/2800 [==============================] - 0s 61us/sample - loss: 1114.8357

Epoch 258/500