The following describes the problem, constraints, and assumptions made when modeling ATS' onboarding process using discrete event simulation (DES) -- collectively called the study. The study simulates people operating in a system that consists of two sequential services within a queueing system. It is meant to represent current conditions, not a future state or process. The study is broken into the following sections:

- Problem Formulation and Study Design

- Collecting Data and Defining the Model

- Assumptions Made in Model Definition

- Discrete Event Simulation using SimPy

- Simulation Outputs and Observations

- Recommended Actions and Next Steps

A customer wanting to use ATS (ATS.Service) must complete a multi-step sequence of tasks that may take several weeks to finalize. The onboarding process requires back-and-forth exchanges of information, and some tasks in the onboarding flow can only be performed by specialists. This study aims to use DES to identify and understand ways to improve the customer's experience during the ATS onboarding process. It does this either by identifying areas where variations in the system's processes can improve the overall system, or by improving the experience in areas where the system's processes, as they stand, cannot be improved.

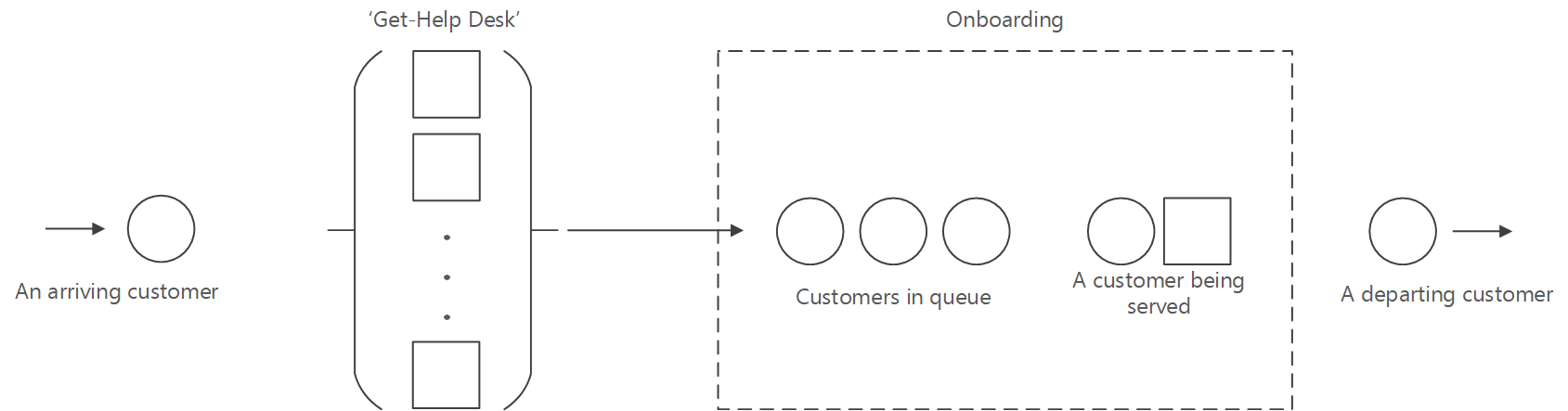

Whenever a 1P customer wants to onboard to ATS' solutions, they complete various processes within a system that can be broken down into two main subsystems:

- Getting to Know ATS -- involves a manual back-and-forth exchange of information about ATS and the customer's scenarios. This process ends when ATS has all the necessary information to onboard the customer.

- Tenant Onboarding -- involves the manual process of using the customer-provided information to deploy SF clusters containing ATS solutions on their behalf.

Note: The following terms are used throughout the document:

- Customer - a customer is any service wanting to onboard to ATS solutions.

- Server - an ATS engineer that is responsible for completing one, many, or all of the customer-onboarding processes.

- Service - the act of carrying out and completing a process, performed by a Server.

- Event - an instantaneous or temporal occurrence that may change the system state.

- Resource - the Servers, i.e., ATS engineers, that ATS makes available to its customers.

- Process - a simulation construct that encapsulates a customer using a resource that leads to a transition event.

- Arrival Rate ($\lambda$) is the number of customers arriving per unit time -- a Poisson process at a rate of $\lambda = 1/E(A)$, where $A$ is the interarrival time (e.g., 3 customers per week).

- Service Rate ($\mu$) is the number of customers that a single server can serve per unit time -- each customer requires an exponentially distributed service time with an average service rate of $\mu = 1/E(S)$, where $S$ is the time it takes to complete a service (e.g., 1 week per customer => about 4 customers per month).

- Number of servers ($c$) is the number of servers that can serve a customer -- that is, perform the necessary task(s) to move the customer through the service processes and out of the system.
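With $\lambda$, $\mu$, and $c$ defined this way, an M/M/c queue (the model used for Tenant Onboarding) has closed-form steady-state results. These can serve as a sanity check on simulation output. The following is a minimal sketch using the standard Erlang C formula. It assumes exponential interarrival and service times, FIFO service, and $\lambda < c\mu$. The function name `mmc_metrics` is hypothetical and does not come from the study's notebooks.

```python
from math import factorial


def mmc_metrics(lam: float, mu: float, c: int) -> dict:
    """Steady-state M/M/c metrics via the Erlang C formula.

    lam: arrival rate, mu: service rate per server, c: number of servers.
    Rates must share a time unit (e.g., per week); requires lam < c * mu.
    """
    a = lam / mu                     # offered load (mean number of busy servers)
    rho = a / c                      # per-server utilization
    if rho >= 1:
        raise ValueError("unstable queue: lam must be less than c * mu")
    tail = a**c / (factorial(c) * (1 - rho))
    p0 = 1.0 / (sum(a**k / factorial(k) for k in range(c)) + tail)
    p_wait = tail * p0               # Erlang C: probability an arrival has to queue
    lq = p_wait * rho / (1 - rho)    # expected number waiting in queue
    wq = lq / lam                    # expected delay in queue (Little's law)
    w = wq + 1 / mu                  # expected time in system
    return {"rho": rho, "p_wait": p_wait, "Lq": lq, "Wq": wq, "W": w, "L": lam * w}


if __name__ == "__main__":
    # 3 arrivals per week, 1 week of service per customer, 4 servers
    print(mmc_metrics(lam=3, mu=1, c=4))
```

Take the illustrative rates above: 3 arrivals per week and 1 week of service per customer. With those, `mmc_metrics(3, 1, 4)` gives a per-server utilization of 0.75 and a probability of waiting of about 0.51. Any $c \le 3$ raises an error, because arrivals would outpace service.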

The first subsystem of the ATS customer flow can be broken down into the following processes:

- START: A customer arrives at ATS' site. They may have found the site through word of mouth, by searching, or by being redirected there by an ATS team member.

- The customer spends some time familiarizing themselves with the information and actions that will be required of them. This familiarization process generally involves multiple email exchanges between the customer and ATS engineer(s).

- END: the Getting to Know ATS process ends when a customer submits the required information for an ATS engineer to onboard the customer to ATS.

For details and assumptions on the modeling approach for the Getting to Know ATS sub-process, see Modeling 'Getting to Know ATS' Subsystem Using GI/G/s Queue.

The second subsystem, Tenant Onboarding, can be broken down into the following processes:

- START: A customer arrives at the onboarding queue once they've completed the prerequisite ADO ticket. The on-call engineer then works with the customer to address any issues with the ticket.

- One or more ATS engineers communicate with the customer to understand their scenarios and to educate the customer on ATS solutions. This type of engagement occurs at least once while the customer is undergoing the onboarding process.

- END: a customer has been fully served when their tenant has been onboarded, and they have received an email notification of onboarding completion.

For details and assumptions on the modeling approach for the Tenant Onboarding sub-process, see Modeling 'Tenant Onboarding' Using M/M/c Queue.

The following section describes the process by which data for defining the model was acquired.

To understand the ATS onboarding flow, several interviews with ATS engineers were carried out. As part of the interviews, estimated processing times for each step were also collected.

Limitations: This study is a learning project carried out during Hackathon week, so there are several limitations in its data acquisition approach and process definitions. In a production-grade study, the following steps would have been carried out:

- Iteratively formulate the problem and plan the study:
    - The problem of interest would be discussed with management.
    - The problem would be stated in quantitative terms.
    - One or more kickoff meetings would be held with project management, the simulation analysts, and subject-matter experts (SMEs). The following things would be discussed:
        - Overall objectives of the study
        - Specific questions to be answered by the study (required to decide the level of model detail)
        - Performance measures that will be used to evaluate the efficacy of different system configurations
        - Scope of the model
        - System configurations to be modeled (required to decide the generality of the simulation program)
        - Time frame for the study and the required resources

- Collect data and define a model:
    - Collect information on the system structure and operating procedures:
        - No single person or document is sufficient.
        - Statistical sampling techniques should be used, and documented in the assumptions document.
        - Careful attention would be paid to the formalization of the procedures.
    - Collect data sets (if possible) that can be used to specify model parameters and input probability distributions.
    - Describe the above information and data in a written assumptions document.
    - Collect data (if possible) on the performance of the existing system (for benchmarking and validation purposes).
    - The level of model detail would be chosen based on the following:
        - Project objectives
        - Performance measures
        - Data availability
        - Credibility concerns
        - Computer constraints
        - Opinions of SMEs
        - Time and money constraints
    - Careful model analysis would be carried out to ensure there is no one-to-one correspondence between each element of the model and the corresponding element of the system.
    - Models would be iteratively improved as needed to ensure the model can be used to make effective decisions, and that it does not obscure important system factors.
    - Ongoing interactions with management (and other key project personnel) would be maintained on a regular basis.


- An assumptions document would be created and maintained:
    - As part of document maintenance, I would perform a structured walk-through of the assumptions document before an audience of managers, analysts, and SMEs. This will:
        - Help ensure that the model’s assumptions are correct and complete
        - Promote interaction among the project members
        - Promote ownership of the model
        - Take place before programming begins, to avoid significant reprogramming later


- Construct, debug, and verify a computer program (computational simulation)

- Make pilot runs:
    - Pilot runs would be executed to explore answers to some of the questions/goals defined earlier, and/or to test hypotheses about a process or a system.

- Validate the simulation model:
    - Compare the simulation to baselines and/or other systems (if they exist).
    - Perform a structured analysis of the simulation with management, the simulation analysts, and SMEs to evaluate the model results for correctness.
    - Use sensitivity analyses to determine which model factors have a significant impact on performance measures and, thus, have to be modeled carefully.

- Design experiments:
    - Specify the following for each system configuration of interest:
        - Length of each simulation run
        - Length of the warmup period, if one is appropriate
        - Number of independent simulation runs using different random numbers to construct confidence intervals


- Make production runs

- Analyze output data:
    - Two major objectives in analyzing the output data would be:
        - Determine the absolute performance of certain system configurations
        - Compare alternative system configurations in a relative sense


- Document, present, and use results:
    - Document assumptions, simulation program, and the study’s results for use in current and future projects.
    - Present the study’s results.
    - Discuss the model building and validation process to promote credibility.
    - Results are used in the decision-making process if they are both valid and credible.

As previously mentioned, the ATS onboarding flow can be broken down into two core components: (1) Getting to Know ATS, and (2) Tenant Onboarding. Getting to Know ATS is the subsystem in which a Server brings the customer to the knowledge state necessary to undergo tenant onboarding. Tenant Onboarding is the subsystem in which ATS clusters are deployed on behalf of the customer. The general modeling considerations and each subsystem's modeling approach are described below.

The simulation begins in an "empty-and-idle" state; i.e., no customers are present and the server is idle. At time 0, we begin waiting for the arrival of the first customer, which occurs after the first interarrival time.

To measure the performance of the system we will look at estimates of the following quantities:

- The expected average delay in queue of the $n$ customers completing their delays during the simulation, denoted by $d(n)$, where $d(n)$ is the average of a large, finite number of $n$-customer delays. From a single run of the simulation resulting in customer delays $D_1, D_2, \dots, D_n$, we have the estimator
  $$\hat{d}(n)=\frac{\sum_{i=1}^n D_i}{n}$$

- The expected average number of customers in the queue (not being served), denoted by $q(n)$. Let $Q(t)$ be the number of customers in queue at time $t$, for $t\ge0$, and let $P_i$ be the expected proportion of time that $Q(t)=i$. Then $q(n)=\sum_{i=0}^{\infty} iP_i$ is the weighted average of the possible values $i$ of the queue length $Q(t)$. Let $T_i$ be the total time during the simulation that the queue is of length $i$, so that $T(n) = T_0+T_1+\cdots$. Estimating $P_i$ by $\hat{P}_i=T_i/T(n)$ then gives
  $$\hat{q}(n)=\sum_{i=0}^{\infty} i\hat{P}_i=\frac{\sum_{i=0}^{\infty} iT_i}{T(n)}$$

- The expected utilization of the server, i.e., the expected proportion of time during the simulation (from time 0 to time $T(n)$) that the server is busy serving a customer, denoted by $u(n)$. From a single simulation, define the busy function $B(t)$, where $B(t)=1$ if the server is busy at time $t$ and $B(t)=0$ if the server is idle at time $t$; then
  $$\hat{u}(n) =\frac{\int_0^{T(n)}B(t)\,dt}{T(n)}$$
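The three estimators can be computed directly from a recorded trace. The following is a minimal sketch. It assumes a single-server FIFO queue that starts empty and idle at time 0 and stops at $T(n)$, the time the $n$-th customer enters service. `estimators` is a hypothetical helper, not code from the study's notebooks. It uses two facts:

- $\sum_i iT_i$ equals the area under $Q(t)$, which is the total time customers spend in queue within $[0, T(n)]$.
- The integral of $B(t)$ is the total service time within that window.

```python
def estimators(arrivals, services, n):
    """Estimate d(n), q(n), and u(n) for a single-server FIFO queue.

    arrivals: sorted arrival times; services: matching service times.
    The run starts empty-and-idle at time 0 and ends at T(n), the time the
    n-th customer finishes waiting (enters service).
    """
    starts, departures = [], []
    free_at = 0.0                        # time the server next becomes idle
    for a, s in zip(arrivals, services):
        start = max(a, free_at)          # wait while the server is busy
        free_at = start + s
        starts.append(start)
        departures.append(free_at)
    t_n = starts[n - 1]                  # T(n)

    # d_hat(n): average of the first n delays D_i
    d_hat = sum(st - a for a, st in zip(arrivals[:n], starts[:n])) / n
    # Area under Q(t): each customer is in queue on [arrival, start), clipped to T(n)
    area_q = sum(max(0.0, min(st, t_n) - min(a, t_n)) for a, st in zip(arrivals, starts))
    # Area under B(t): with one server, service intervals [start, departure) never overlap
    area_b = sum(max(0.0, min(dep, t_n) - min(st, t_n)) for st, dep in zip(starts, departures))
    return d_hat, area_q / t_n, area_b / t_n


if __name__ == "__main__":
    print(estimators([1, 2, 3, 8], [3, 2, 1, 1], n=3))
```

Customers who arrive after $T(n)$ can be included harmlessly, because their queue and busy intervals are clipped to $[0, T(n)]$.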

A customer can inquire about ATS' solutions at any point in time; this is shown in the diagram as arriving at the 'Get-Help Desk.' We assume that the interarrival times of customers are IID exponential random variables, i.e., customers arrive according to a Poisson process. Each Server, i.e., an ATS engineer (generally at most two), has a separate queue. An arriving customer joins the queue of the first server to engage them. A customer leaves the subsystem when a server completes all processes for that customer.

A common way to simulate independent queues in which a customer can switch between servers (known as jockeying) is the multiteller bank with jockeying simulation. In our case, a customer does not switch to a teller with a shorter queue. Instead, the customer switches servers when the lead's on-call rotation ends and the lead passes the customer to the new on-call lead.

- Either an on-call dev or a more senior engineer fields questions from a customer that is interested in onboarding to ATS.

- A customer can arrive at the 'Get-Help Desk' at any time of the day, but ATS only fields questions from 8am to 5pm on weekdays. As such, an arrival that happens at 5pm on a Friday will not be engaged until 8am on the following Monday (63 hrs total).

- The ATS engineers that can serve a customer during any one process are interchangeable. That is, an on_call_eng can serve as a verify_eng (details below).

- If a meeting is scheduled, one or more of the ATS engineers attend.

- For simplicity, we assume that the customer takes at least a full workday to collect the information and attain the necessary knowledge to file the onboarding ticket. The customer is assumed to be eager, so it will not take more than a full week for the customer to complete the 8 hours' worth of work required.

- If a meeting is scheduled, it is assumed to be a one-time, hour-long event that is scheduled at least 24 hrs in advance. For example, if a meeting is scheduled on Monday at 9am for the earliest available slot, the meeting would conclude at 10am on Tuesday (total of 25 hrs).
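The business-hours and meeting assumptions translate into simple calendar arithmetic. The sketch below makes two assumptions:

- Engagement is possible Monday through Friday, 8am to 5pm.
- A meeting starts at the first in-hours time at least 24 hours after it is requested.

`next_engagement` and `meeting_end` are hypothetical helpers. The sketch reproduces the two worked examples above (63 hours and 25 hours).

```python
from datetime import datetime, timedelta

OPEN_HOUR, CLOSE_HOUR = 8, 17    # engagement window: 8am-5pm, Monday-Friday


def next_engagement(t: datetime) -> datetime:
    """Earliest time at or after t that falls inside the engagement window."""
    while True:
        if t.weekday() < 5 and OPEN_HOUR <= t.hour < CLOSE_HOUR:
            return t
        if t.weekday() < 5 and t.hour < OPEN_HOUR:
            # Weekday morning before opening: wait until 8am the same day
            t = t.replace(hour=OPEN_HOUR, minute=0, second=0, microsecond=0)
        else:
            # After closing or on a weekend: try 8am the next day
            t = (t + timedelta(days=1)).replace(hour=OPEN_HOUR, minute=0,
                                                second=0, microsecond=0)


def meeting_end(requested: datetime, lead_hours: float = 24,
                length_hours: float = 1) -> datetime:
    """End of a one-time meeting booked at least lead_hours in advance."""
    start = next_engagement(requested + timedelta(hours=lead_hours))
    return start + timedelta(hours=length_hours)


if __name__ == "__main__":
    friday_5pm = datetime(2024, 1, 5, 17)            # 2024-01-05 is a Friday
    print(next_engagement(friday_5pm) - friday_5pm)  # 2 days, 15:00:00 (63 hours)
    monday_9am = datetime(2024, 1, 8, 9)
    print(meeting_end(monday_9am) - monday_9am)      # 1 day, 1:00:00 (25 hours)
```

For simplicity, `meeting_end` does not check whether an hour-long meeting that starts late in the day runs past 5pm.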

Following is a breakdown of the steps a customer might take as part of completing the Getting to Know ATS subsystem:

- Arrive at ATS and wait to be engaged.

- Ask questions and request resources.

- Wait to have question answered and requests met.

- Collect required information, or complete learning requirements

- Choose whether to schedule a meeting:
    - If they schedule a meeting, then they meet.
    - If they don't schedule a meeting, they skip to the last step.

- Complete and submit ADO ticket.

| Event description | Event type | Notes |
|---|---|---|
| Arrival of a customer to the 'Get Help Desk' | 1 | -- |
| Exchanges of information | 2 | There may be multiple exchanges that affect the system's state. For simplicity, we only consider a single transition from 'not having enough knowledge' to 'having sufficient knowledge' to onboard to ATS. |
| End of subsystem simulation | 3 | This is achieved when an ADO ticket is submitted. |
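Discrete event simulation advances the clock from one event to the next rather than in fixed time steps. The following sketch illustrates that next-event loop for the three event types in the table, using Python's `heapq` as the event calendar. The fixed `learn_hours` and `submit_hours` delays are hypothetical. Resource contention (waiting for engineers) is deliberately left out to keep the mechanism visible.

```python
import heapq

# Event types from the table above
ARRIVAL, KNOWLEDGE_GAINED, TICKET_SUBMITTED = 1, 2, 3


def run_events(arrival_times, learn_hours, submit_hours):
    """Process events in time order and return the (time, type, customer) log."""
    calendar = [(t, ARRIVAL, cid) for cid, t in enumerate(arrival_times)]
    heapq.heapify(calendar)                        # event calendar ordered by event time
    log = []
    while calendar:
        now, etype, cid = heapq.heappop(calendar)  # jump the clock to the next event
        log.append((now, etype, cid))
        if etype == ARRIVAL:
            # Type 1 schedules the knowledge transition (type 2)
            heapq.heappush(calendar, (now + learn_hours, KNOWLEDGE_GAINED, cid))
        elif etype == KNOWLEDGE_GAINED:
            # Type 2 schedules the ADO ticket submission (type 3), which ends the subsystem
            heapq.heappush(calendar, (now + submit_hours, TICKET_SUBMITTED, cid))
    return log


if __name__ == "__main__":
    for event in run_events([0, 1], learn_hours=8, submit_hours=2):
        print(event)
```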

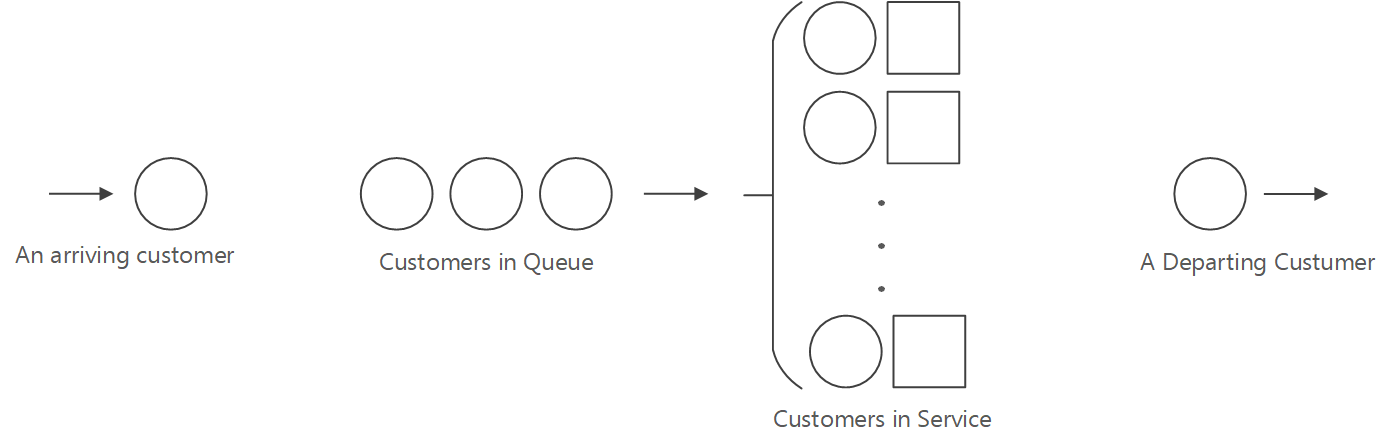

The Tenant Onboarding subsystem is essentially a linear progression that begins when a customer arrives at the queue, i.e., the customer submitted an ADO work item, and terminates when the customer's tenant is onboarded. A customer who arrives and finds the server idle enters service immediately with a service time that is independent of the interarrival times. A customer who arrives and finds the server busy joins the end of a single queue. A server can serve multiple customers in parallel. Upon completing the service for a customer, the server chooses a customer from the queue (if any) in a first-in, first-out (FIFO) manner. A customer has exited the subsystem when they are notified that their tenant onboarding has been completed.

A single-server queueing system simulation is a common way of modeling systems such as the Tenant Onboarding subsystem.

- An on-call engineer will take the ADO ticket and commence the tenant onboarding process.

- The on-call engineer may require additional information from the customer, which can lead to correspondence exchanges and/or meetings. For simplicity, the delay this introduces to the system is assumed to be negligible.

- Once they have all the necessary information and have verified that the customer has completed all prerequisite steps for tenant onboarding, the on-call engineer deploys SF clusters using safe deployment practices (SDP). SDP can take 2+ weeks to complete. The influence of any other process that introduces delay in the system is therefore assumed to be captured in the variance of the tenant deployment process, and is treated as negligible.

- An on-call engineer can tenant onboard multiple customers at the same time.

- Service times are assumed to be exponentially distributed, with a minimum equal to the time SDP takes.

- Any delay introduced into the subsystem by the 'end of onboarding process' notification is assumed to be negligible.

Following is a breakdown of the steps a customer might take as part of completing the Tenant Onboarding subsystem:

- Arrive at the ATS Tenant Onboarding queue and wait to be engaged.

- Verify the ticket and meet prerequisites (negligible time).

- Have SF cluster deployed on customer's behalf.

- Receive notification of completed tenant deployment.

| Event description | Event type | Notes |
|---|---|---|
| Arrival of a customer to 'Tenant Onboarding' | 1 | -- |
| Tenant Onboarding | 2 | SDP introduces the majority of the delay into the subsystem. For simplicity, the delay contribution of any other sub-process is assumed to be captured in the variance of the tenant onboarding process, so the delay contributed by other sub-processes is treated as negligible. |
| End of subsystem simulation | 3 | This is achieved when the customer is notified of the completion of their tenant onboarding. |

- Interarrival times $A_1, A_2, \dots$ are independent and identically distributed (IID) random variables -- that is, they are mutually independent and all have the same probability distribution.

- All servers are identical.

- All customers are identical

- A customer who arrives and finds the server idle enters service immediately, and the service times $S_1, S_2, \dots$ of successive customers are IID.

- A customer who arrives and finds the server busy joins the end of a single queue.

- One server can only serve one customer at a time, unless specified in the subsystem assumptions.

- Upon completing service for a customer, the server chooses a customer from the queue in a first-in, first-out manner.

- Customers experience more pain while waiting to be served than while being served. Pain(expected average waiting time in system) > Pain (expected average delay in queue)

- In order to use discrete events, as opposed to continuous events, we consider time in discrete units. A unit of time can be of any positive length, so long as time is counted in integer multiples of that unit.

- Any engineer who can field questions during the Getting to Know ATS stage and/or the Tenant Onboarding stage is assumed to have the knowledge of the group. For example, a meeting can include multiple ATS engineers, but the outcome of 'attaining the required knowledge to submit an ADO ticket' is the same regardless of which engineers attend.

- Each process is assumed to be sufficiently generalized for modeling purposes -- e.g., every engineer either performs the same sequence of tasks, or the tasks between 1st and nth can be interchanged without affecting the characteristics of the process.

- Each subsystem is assumed to be independent.

Following are the processes, resources, and parameters used in the 'Getting to Know ATS' simulation. See help_desk_simulation.ipynb for code.

| Process | Resource Request | Notes |
|---|---|---|
| get_help | Requests a help_desk_eng | Used to track resources used in fielding customer questions |
| collect_complete | Requests an on_call_eng | Used to track resources used in ensuring the customer has the necessary knowledge to submit a ticket; it includes the time it takes to complete prerequisites, and may include additional meeting time |
| verify_ticket | Requests a verify_eng | Used to capture when a customer exits the subsystem, which occurs when they submit an ADO ticket |

| Name | Purpose |
|---|---|
| customer | keeps track of customer(s) as they move through the system |
| num_help_desk_eng | keeps track of the allocation of ATS engineering resources to serve as help_desk_eng |
| num_on_call_eng | keeps track of the allocation of ATS engineering resources to serve as on_call_eng |
| num_verify_eng | keeps track of the allocation of ATS engineering resources to serve as verify_eng |
| wait_times | used to keep track of time spent in each Resource |
| NUM_SIMULATIONS | user-defined parameter that sets the maximum number of resource-allocation variations (and their influence on the system's flow) to be considered |
| MAX_ENGINEERS | user-defined parameter that sets the maximum number of resources, and their allocations, that can be simultaneously active across one or more processes during a simulation run |
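The 'Getting to Know ATS' flow is a tandem of three multi-server FIFO stations: get_help, collect_complete, and verify_ticket. The following is a plain-Python stand-in for help_desk_simulation.ipynb, whose SimPy code is not reproduced here. It pushes customers through the three stations in order and reports the average time in the subsystem. Several simplifications apply:

- It uses one FIFO queue per station, so it ignores the per-server queues and on-call jockeying described earlier.
- Service times are exponential, measured in hours.
- All mean values are assumptions for illustration.
- `fifo_station` and `simulate_help_desk` are hypothetical names.

```python
import heapq
import random


def fifo_station(arrivals, servers, mean_service, rng):
    """Multi-server FIFO station; returns each customer's departure time."""
    free_at = [0.0] * servers                  # heap of times each engineer is next free
    departures = [0.0] * len(arrivals)
    for i in sorted(range(len(arrivals)), key=lambda k: arrivals[k]):
        start = max(arrivals[i], heapq.heappop(free_at))  # earliest free engineer
        departures[i] = start + rng.expovariate(1.0 / mean_service)
        heapq.heappush(free_at, departures[i])
    return departures


def simulate_help_desk(num_help_desk_eng, num_on_call_eng, num_verify_eng,
                       mean_interarrival, mean_services, sim_time, seed=0):
    """Average time (same unit as the inputs) from arrival to ticket verification."""
    rng = random.Random(seed)
    arrivals = []
    t = rng.expovariate(1.0 / mean_interarrival)   # Poisson arrival process
    while t <= sim_time:
        arrivals.append(t)
        t += rng.expovariate(1.0 / mean_interarrival)
    if not arrivals:
        return 0.0
    times = arrivals
    # Stations in order: get_help, collect_complete, verify_ticket
    for servers, mean in zip((num_help_desk_eng, num_on_call_eng, num_verify_eng),
                             mean_services):
        times = fifo_station(times, servers, mean, rng)
    return sum(d - a for a, d in zip(arrivals, times)) / len(arrivals)


if __name__ == "__main__":
    # Hypothetical inputs in hours: ~3 arrivals per 168-hour week, half a year
    avg = simulate_help_desk(1, 3, 1, mean_interarrival=56.0,
                             mean_services=(4.0, 40.0, 2.0), sim_time=4380.0)
    print(f"average time in subsystem: {avg:.1f} hours")
```

Processing stations one after another works here because each station only needs its own arrival times. A customer's arrival at a station is their departure from the previous one.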

Following are the processes, resources, and parameters used in the 'Tenant Onboarding' simulation. See tenant_onboarding_simulation.ipynb for code.

| Process | Resource Request | Notes |
|---|---|---|
| request | Requests an arrival resource | Used to track customers entering the subsystem's queue |
| release | Requests a serve resource | Used to track variability in the tenant onboarding process duration, as well as the time it takes the customer to exit the simulation |

| Name | Purpose |
|---|---|
| service_time | minimum time to be considered under SDP |
| wait_times | keeps track of expected-average end-to-end wait times for the entire simulation |
| customer_times | keeps track of expected-average delays in the queue, i.e., delays not associated with carrying out the process |
| total_service_time | used to compute utilization metrics |
| ARRIVAL_TIME | user-defined parameter used to generate Poisson-distributed arrivals to the queue |
| TIME_TO_COMPLETE | user-defined parameter used to generate exponentially distributed service times, i.e., the time it takes a server to serve a customer |
| SIM_TIME | user-defined parameter used as the stopping condition for the simulation; SIM_TIME is set to half a year |
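A comparable stand-in for tenant_onboarding_simulation.ipynb treats each engineer's parallel onboarding capacity as a pool of service slots fed by one FIFO queue. The sketch makes one key assumption: each service time is the SDP minimum (service_time) plus an exponential extra with mean TIME_TO_COMPLETE. The notebook's actual combination of these parameters is not documented here. Lowercase parameter names mirror the table. The function `simulate_tenant_onboarding` itself is hypothetical.

```python
import heapq
import random


def simulate_tenant_onboarding(arrival_time, time_to_complete, service_time,
                               slots, sim_time, seed=0):
    """Return (mean delay in queue, mean time in system, utilization).

    arrival_time: mean interarrival time (Poisson arrivals)
    time_to_complete: mean of the exponential part of each service (assumption)
    service_time: SDP minimum added to every service (assumption)
    slots: total parallel onboardings (engineers x tenants per engineer)
    """
    rng = random.Random(seed)
    free_at = [0.0] * slots              # heap: when each slot next frees up
    delays, in_system = [], []
    busy = 0.0
    t = 0.0
    while True:
        t += rng.expovariate(1.0 / arrival_time)
        if t > sim_time:
            break
        # FIFO: customers are assigned in arrival order to the earliest-free slot
        start = max(t, heapq.heappop(free_at))
        end = start + service_time + rng.expovariate(1.0 / time_to_complete)
        heapq.heappush(free_at, end)
        delays.append(start - t)         # delay in queue (not associated with service)
        in_system.append(end - t)        # end-to-end time in the subsystem
        busy += max(0.0, min(end, sim_time) - min(start, sim_time))  # clip to horizon
    if not delays:
        return 0.0, 0.0, 0.0
    n = len(delays)
    return sum(delays) / n, sum(in_system) / n, busy / (slots * sim_time)


if __name__ == "__main__":
    # Illustrative only: ~3 arrivals/week, 14-day SDP floor, 3 engineers x 3 tenants
    print(simulate_tenant_onboarding(7 / 3, 3.0, 14.0, slots=9, sim_time=182.0))
```

Time is in days in this example, and half a year is taken as 182 days. The inputs are illustrative and are not the values used in the study.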

The simulation of the 'Getting to Know ATS' subsystem, help_desk_simulation.ipynb, was executed multiple times, each execution consisting of ten sequential, independent simulations. As the amount of resources available for serving customers in help_desk_simulation increases, a few observations become apparent:

- Given three processes that must be completed, there are diminishing returns in scaling past 5 resources.

- If only 5 resources can be allocated, allocate them as num_help_desk_eng=1, num_on_call_eng=3, num_verify_eng=1. This allocation resulted in the lowest average time over an entire semester of simulated operation: average_time=503.419355.

- If more than 5 resources are available, carefully consider whether to allocate them to the subsystem as simulated. Each additional resource yields a reduction of less than ten minutes in the average time over an entire semester of simulated operation.
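Comparing allocations like the one above means enumerating the ways a fixed pool of engineers can be split across the three roles. The following is a minimal sketch, assuming every role gets at least one engineer. The notebook's actual search strategy is not described here, and `allocations` is a hypothetical helper.

```python
from itertools import product


def allocations(total, roles=3):
    """All splits of `total` engineers across `roles`, with at least one per role."""
    return [combo for combo in product(range(1, total + 1), repeat=roles)
            if sum(combo) == total]


if __name__ == "__main__":
    # Each tuple is (num_help_desk_eng, num_on_call_eng, num_verify_eng)
    for help_desk, on_call, verify in allocations(5):
        print(help_desk, on_call, verify)
```

With 5 engineers there are 6 such splits, one of which is `(1, 3, 1)`. Brute force over `product` is fine at this scale. For much larger pools, generating compositions directly would avoid filtering.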

The simulation of the 'Tenant Onboarding' subsystem, tenant_onboarding_simulation.ipynb, was executed multiple times. Each execution represents a semester's worth of simulations (based on the currently estimated arrival rates). A summary of the findings follows:

- The expected-average E2E waiting time (in days) for a customer commencing the Tenant Onboarding process is ~18 days (most recent simulation).

- The same customer can expect a delay in queue of ~4 days. These days are a 'tax' imposed by the system's design. They are not associated with the actual, necessary tasks of onboarding tenants to SF clusters.

- Over the course of the semester, a server can expect an average of ~6 customers waiting to be served.

- To meet the stated expected averages, an ATS engineer is simultaneously serving 3 customers.

As described in the outputs and observations section above, there are two main areas that drive underutilization in the simulation: resource allocation and server utilization. A back-of-the-napkin recommendation would be to increase the number of engineers that handle the full onboarding flow to a minimum of 3. Moreover, two changes would greatly reduce the expected onboarding time for a customer:

- Making each server able to act as any of the three resources in the 'Getting to Know ATS' subsystem.
- Having servers simultaneously onboard as many customers as possible during the 'Tenant Onboarding' subsystem.

Budgets and strategic planning may not allow for the allocation or repurposing of resources. In that case, an inexpensive way to improve the customer experience is through engagement and communication. A couple of examples follow:

- If the time it takes to engage a customer entering the queue for the 'Getting to Know ATS' subsystem cannot be reduced, set up automated responses that detail what is needed from customers to onboard to ATS solutions. These should include links to assets, FAQs, and ways to contact ATS.

- Delays caused by SDP are unavoidable. In its current architecture/design, the best the 'Tenant Onboarding' subsystem can do is to delay only as long as SDP. Since SDP can take ~2 weeks, keeping the customer aware of progress, akin to shipping information, would be a way to inform and delight customers. For example, when the customer submits their ADO ticket, ATS could send an email containing:
    - Descriptions of the steps that will be performed
    - The expected time to complete each step
    - Links to ways they can stay updated on the status of their deployment
    - Quick links to resources

Future work should focus on iteratively improving the simulations while following proper study design methodology, as described in the Collecting Data and Defining the Model section above.