Implementation based on the following papers:

- Auto-Encoding Variational Bayes

- Variational Autoencoder for Deep Learning of Images, Labels and Captions

Types of VAEs in this project

- Vanilla VAE

- Deep Convolutional VAE (DCVAE)

The Vanilla VAE was trained on the FashionMNIST dataset while the DCVAE was trained on the Street View House Numbers (SVHN) dataset.
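For orientation, here is a minimal sketch of what a fully connected (vanilla) VAE can look like in PyTorch. It is not the code from this repository: the class name `VanillaVAE`, the function `vae_loss`, and the layer sizes (784 inputs for 28x28 FashionMNIST images, 400 hidden units, 20 latent dimensions) are assumptions chosen for illustration.

```python
import torch
from torch import nn
from torch.nn import functional as F


class VanillaVAE(nn.Module):
    """Illustrative fully connected VAE; all sizes are assumptions."""

    def __init__(self, input_dim=784, hidden_dim=400, latent_dim=20):
        super().__init__()
        self.enc = nn.Linear(input_dim, hidden_dim)
        self.enc_mu = nn.Linear(hidden_dim, latent_dim)
        self.enc_logvar = nn.Linear(hidden_dim, latent_dim)
        self.dec_hidden = nn.Linear(latent_dim, hidden_dim)
        self.dec_out = nn.Linear(hidden_dim, input_dim)

    def encode(self, x):
        # Map the input to the mean and log-variance of q(z | x)
        h = F.relu(self.enc(x))
        return self.enc_mu(h), self.enc_logvar(h)

    def reparameterize(self, mu, logvar):
        # z = mu + sigma * eps keeps sampling differentiable w.r.t. mu and logvar
        std = torch.exp(0.5 * logvar)
        eps = torch.randn_like(std)
        return mu + eps * std

    def decode(self, z):
        # Sigmoid keeps reconstructed pixel values in (0, 1)
        return torch.sigmoid(self.dec_out(F.relu(self.dec_hidden(z))))

    def forward(self, x):
        mu, logvar = self.encode(x.flatten(start_dim=1))
        z = self.reparameterize(mu, logvar)
        return self.decode(z), mu, logvar


def vae_loss(recon, x, mu, logvar):
    # Reconstruction term: binary cross-entropy summed over pixels and batch
    recon_term = F.binary_cross_entropy(
        recon, x.flatten(start_dim=1), reduction="sum"
    )
    # Closed-form KL divergence between N(mu, sigma^2) and N(0, I)
    kl_term = -0.5 * torch.sum(1 + logvar - mu.pow(2) - logvar.exp())
    return recon_term + kl_term
```

A convolutional variant would replace the linear encoder and decoder layers with convolution and transposed-convolution layers while keeping the same reparameterization step and loss.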

To run this project

pip install -r requirements.txt

python main.py

Add the -conv argument to run the DCVAE; by default, the Vanilla VAE is run. You can experiment with the model and the hyperparameters in the included Jupyter notebook.
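A boolean switch like `-conv` is commonly wired up with `argparse`. The sketch below shows one way this could be done; the function names `build_parser` and `select_model_name` and the returned strings are hypothetical, and the actual parsing in `main.py` may differ.

```python
import argparse


def build_parser():
    # Hypothetical parser; only the -conv flag is taken from this README
    parser = argparse.ArgumentParser(description="Train a VAE")
    parser.add_argument(
        "-conv",
        action="store_true",
        help="train the deep convolutional VAE instead of the vanilla VAE",
    )
    return parser


def select_model_name(argv=None):
    # With argv=None, argparse reads the arguments from sys.argv
    args = build_parser().parse_args(argv)
    return "DCVAE" if args.conv else "VanillaVAE"
```

With this layout, `select_model_name(["-conv"])` selects the DCVAE branch and an empty argument list falls back to the vanilla model.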

The dataset can be changed to any of those available in PyTorch's torchvision.datasets module (docs), or to any other dataset of your choosing, by changing the appropriate line in the code.

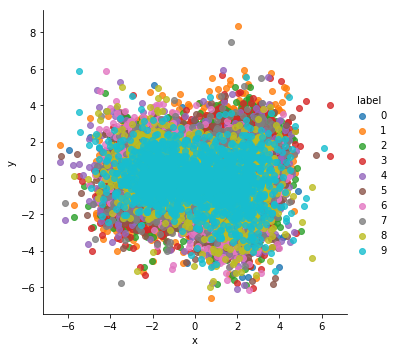

After reduction to 2 dimensions using PCA
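A 2-dimensional PCA projection like the one in the caption can be sketched with a singular value decomposition. This assumes the points being reduced are latent vectors stacked into a matrix of shape `(n_samples, latent_dim)`; the function name `pca_project` is hypothetical, and the project itself may use a different PCA implementation.

```python
import torch


def pca_project(points, n_components=2):
    # Center the data so the principal axes pass through the mean
    centered = points - points.mean(dim=0, keepdim=True)
    # Rows of vh are the principal directions, ordered by decreasing variance
    _, _, vh = torch.linalg.svd(centered, full_matrices=False)
    # Project onto the leading directions
    return centered @ vh[:n_components].T
```

For example, passing a `(100, 20)` tensor of latent means returns a `(100, 2)` tensor that can be drawn as a scatter plot, optionally colored by class label.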

For a really good explanation of how these networks work, read this article on Medium or this one by Jeremy Jordan. Both explain how these differ from traditional autoencoders as well as things to keep in mind while training such models.