This repository contains an implementation of the Linear Recurrent Unit (LRU) that uses only real numbers for computation.

Training large models requires a significant amount of computation, and automatic mixed precision (AMP) training is one way to reduce training time. To encourage research into alternative architectures for Large Language Models, it is essential to speed up training and bring the LRU on par with the training times of transformers.

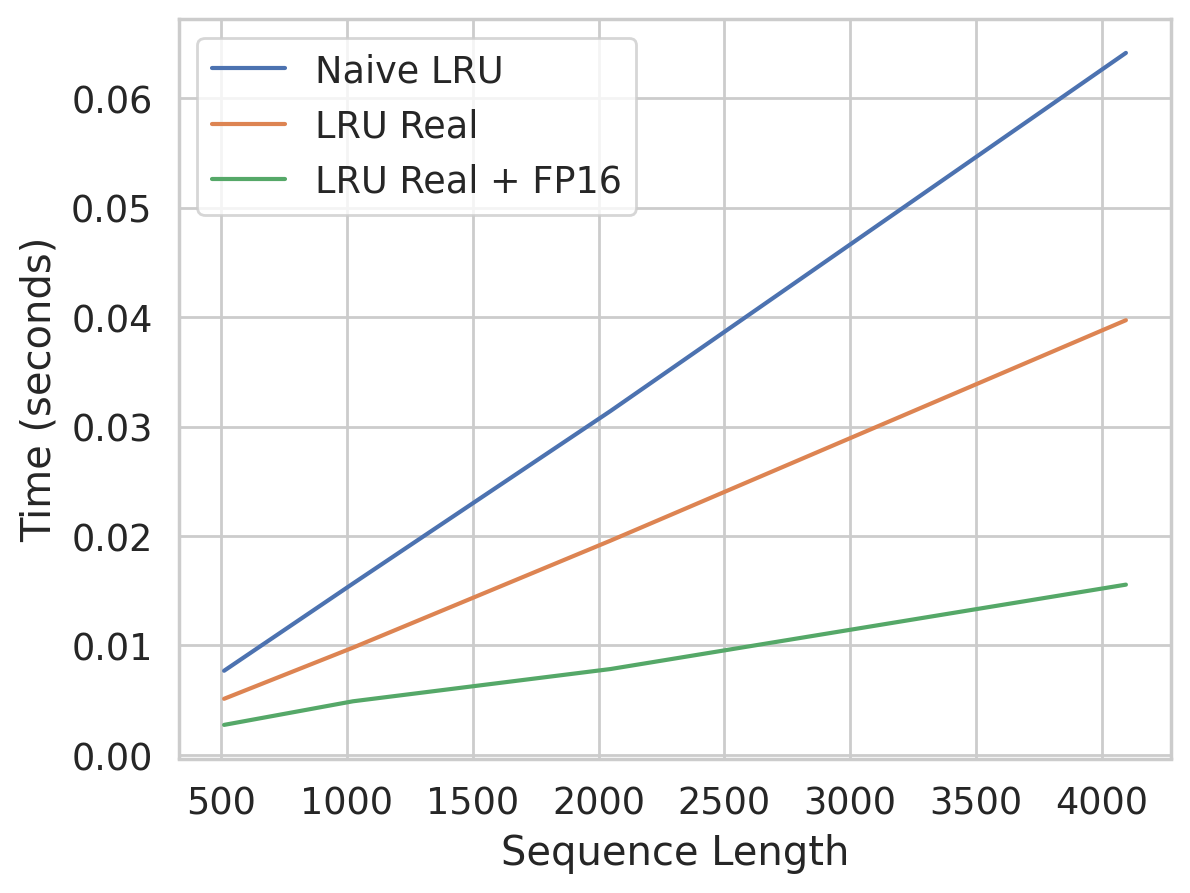

Results obtained by running `bench.py`.

- Mixed Precision computation

- Materializing $\Lambda$ through real numbers

- Simple LM with LRU

- Wikitext trained model
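
To illustrate what materializing $\Lambda$ through real numbers can look like, here is a minimal sketch in plain Python. It assumes the exponential parameterization commonly used for the LRU, $\lambda_j = \exp(-\exp(\nu_j^{\log}) + i \exp(\theta_j^{\log}))$, and stores each eigenvalue as a (real, imaginary) pair of floats. The function names `materialize_lambda_real` and `lru_step_real` and the parameter names `nu_log` and `theta_log` are hypothetical and are not taken from this repository's code, which may organize the computation differently.

```python
import cmath
import math


def materialize_lambda_real(nu_log, theta_log):
    """Return the real and imaginary parts of each eigenvalue as plain floats.

    Assumed parameterization: lambda = exp(-exp(nu_log) + i * exp(theta_log)).
    """
    lam_re, lam_im = [], []
    for nu, theta in zip(nu_log, theta_log):
        magnitude = math.exp(-math.exp(nu))  # modulus of lambda, below 1
        phase = math.exp(theta)              # angle of lambda, positive
        lam_re.append(magnitude * math.cos(phase))
        lam_im.append(magnitude * math.sin(phase))
    return lam_re, lam_im


def lru_step_real(lam_re, lam_im, x_re, x_im, u_re, u_im):
    """One diagonal recurrence step x <- lambda * x + u using real arithmetic.

    The complex product (a + ib)(xr + i*xi) is expanded into
    real part a*xr - b*xi and imaginary part a*xi + b*xr.
    """
    new_re = [a * xr - b * xi + ur
              for a, b, xr, xi, ur in zip(lam_re, lam_im, x_re, x_im, u_re)]
    new_im = [a * xi + b * xr + ui
              for a, b, xr, xi, ui in zip(lam_re, lam_im, x_re, x_im, u_im)]
    return new_re, new_im


def complex_reference(nu, theta):
    """Same eigenvalue computed with complex arithmetic, for comparison only."""
    return cmath.exp(complex(-math.exp(nu), math.exp(theta)))
```

Because every value is an ordinary float, the same arithmetic can in principle run in reduced precision, which is the motivation for avoiding complex types when combining the LRU with mixed precision training.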