This project runs multiple Spark workers on a single machine using Docker and docker-compose.

What it does:

- Spin up Spark master + multiple workers effortlessly, on a single machine

- Have a full Spark dev environment locally, with Java, Scala and Python and Jupyter

- No need to install a JDK, Python or Jupyter on the local machine. Everything runs in Docker containers

- The only things needed are Docker and docker-compose

I have adapted the really awesome bitnami/spark Docker image and expanded on it. See the Bitnami Spark Docker GitHub repository.

Please install Docker and docker-compose

$ git clone https://github.com/elephantscale/spark-in-docker

$ cd spark-in-docker

$ bash ./start-spark.sh # this will start spark cluster

$ docker-compose ps # to see docker containers

You will see startup logs

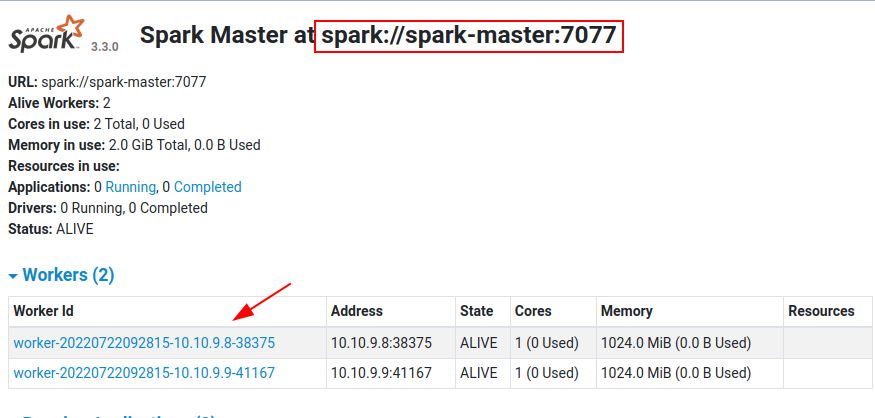

Try Spark master UI at port number 8080 (e.g. localhost:8080)

That's it!

The docker-compose.yaml file is what sets up the whole thing. I adapted it from Bitnami's docker-compose file.

My additions:

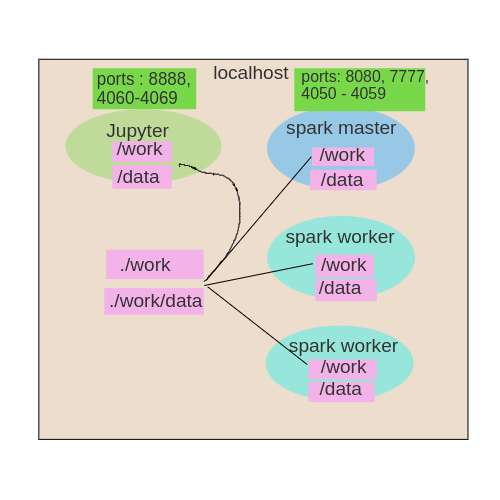

- mounting the current directory as /workspace/ in the Spark Docker containers. This is where all code goes

- mounting the ./data directory as /data/ in the containers. All data goes here

- 8080 maps to spark-master:8080 (master UI)

- 4050 maps to spark-master:4040 (this is for application UI (4040))

Here is a little graphic explaining the setup:

By default we start 1 master + 2 workers.

To start 1 master + 3 workers, pass the number of worker instances to the script

$ bash ./start-spark.sh 3

Check out the Spark master UI at port 8080 (e.g. localhost:8080). You will see 3 workers.
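The worker count can also be checked from a script instead of the browser. The sketch below assumes the standalone master UI serves its status as JSON at `/json/`, with a `workers` list whose entries carry a `state` field. Treat both the endpoint and the field names as assumptions to verify against your Spark version. The helper names `count_alive_workers` and `fetch_master_state` are made up for illustration.

```python
import json
from urllib.request import urlopen


def count_alive_workers(master_state: dict) -> int:
    """Count workers whose state is ALIVE in a master status document."""
    workers = master_state.get("workers", [])
    return sum(1 for w in workers if w.get("state") == "ALIVE")


def fetch_master_state(url: str = "http://localhost:8080/json/") -> dict:
    """Fetch the master status JSON (assumed endpoint, see the note above)."""
    with urlopen(url, timeout=5) as resp:
        return json.load(resp)
```

On the Docker host, after `bash ./start-spark.sh 3`, calling `count_alive_workers(fetch_master_state())` would be expected to report 3 once all workers have registered.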

Log in to the Spark master

# on docker host

$ cd spark-in-docker

$ docker-compose exec spark-master bash

Within the spark-master container:

# within spark-master container

$ echo $SPARK_HOME

# output: /opt/bitnami/spark

Run the Spark-Pi example:

# within spark-master container

# run spark Pi example

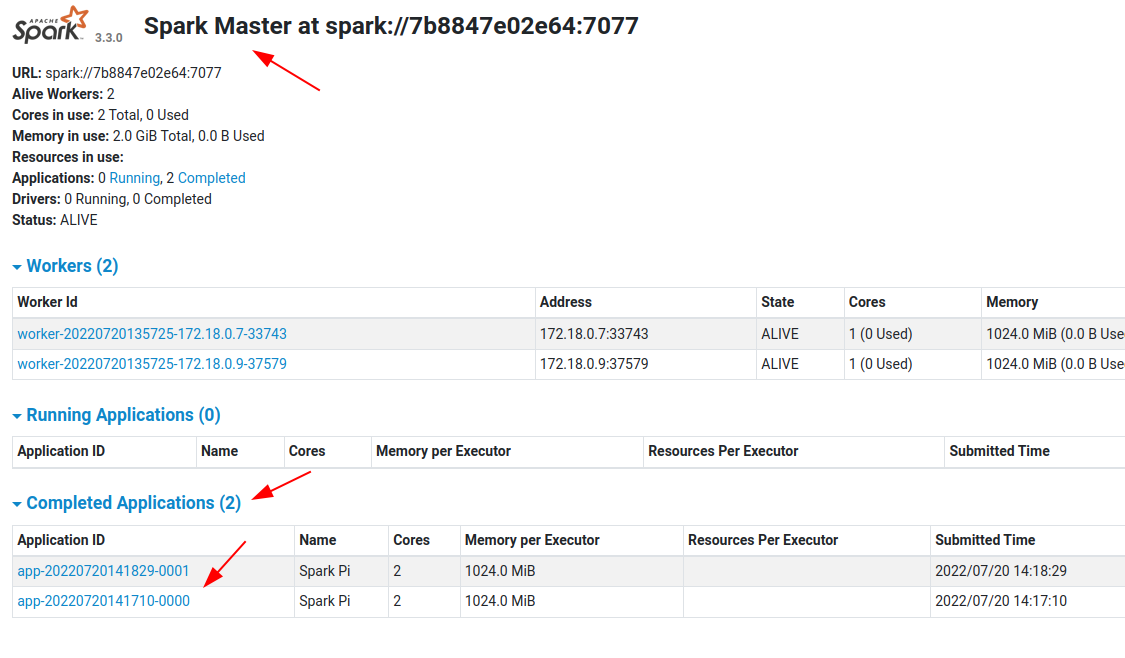

$ $SPARK_HOME/bin/spark-submit \

--master spark://spark-master:7077 \

--num-executors 2 \

--class org.apache.spark.examples.SparkPi \

$SPARK_HOME/examples/jars/spark-examples_*.jar \

100

You should get an answer like

Pi is roughly 3.141634511416345

Check the master UI on 8080. You will see applications being run!
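For intuition about what this job computes: SparkPi estimates Pi with a Monte Carlo method, sampling random points in a square and counting how many land inside the inscribed circle. The plain-Python sketch below illustrates the same idea on a single machine. It is not the Spark example's code, and the function name, seed and sample count are chosen here for illustration.

```python
import random


def estimate_pi(num_samples: int, seed: int = 42) -> float:
    """Estimate Pi by sampling points uniformly in the square [-1, 1] x [-1, 1]."""
    rng = random.Random(seed)
    inside = 0
    for _ in range(num_samples):
        x = rng.uniform(-1.0, 1.0)
        y = rng.uniform(-1.0, 1.0)
        # Count the point if it falls inside the unit circle
        if x * x + y * y <= 1.0:
            inside += 1
    # (circle area) / (square area) = pi / 4
    return 4.0 * inside / num_samples


print("Pi is roughly", estimate_pi(200_000))
```

In the Spark version the sampling is split across tasks (the trailing `100` argument is the number of partitions), and the random draws differ on each run, so the digits after the first few will vary.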

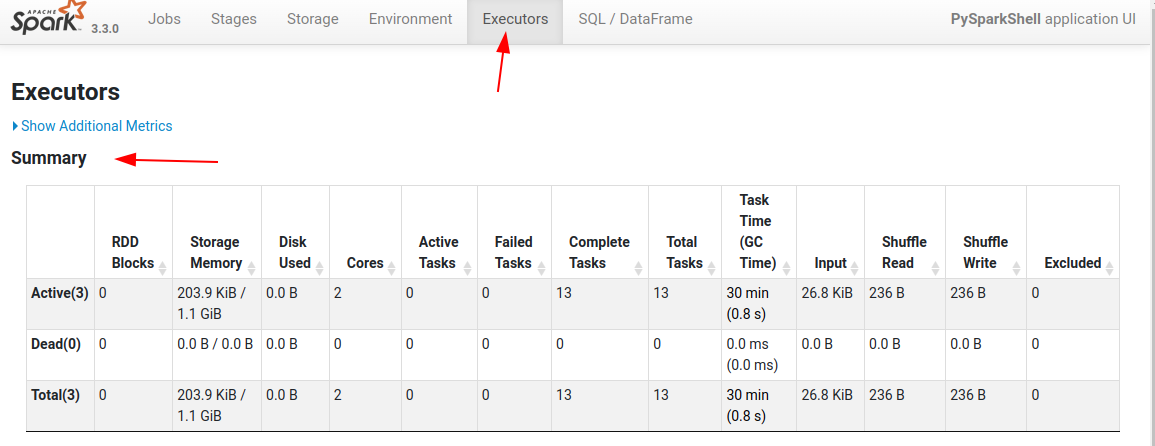

Also see the Spark application UI on port 4050

Execute the following in spark-master container

# within spark-master container

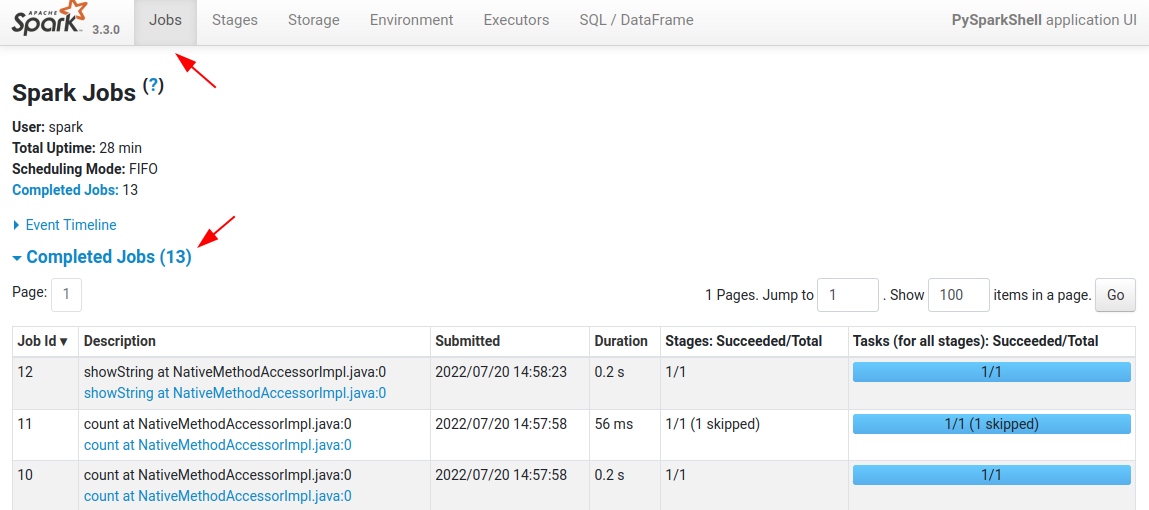

$ spark-shell --master spark://spark-master:7077

Now try to access the UI at http://localhost:4050

Note : This port is remapped to 4050

Within spark-shell:

> val a = spark.read.textFile("/etc/hosts")

> a.count

// res0: Long = 7

> a.show

// you will see contents of /etc/hosts file

// Let's read a json file

val b = spark.read.json("/data/clickstream/clickstream.json")

b.printSchema

b.count

b.show

Execute the following in the spark-master container

# within spark-master container

$ pyspark --master spark://spark-master:7077

Now try to access the UI at http://localhost:4050

Note : This port is remapped to 4050

In pyspark

a = spark.read.text("/etc/hosts")

a.count()

a.show()

# Let's read a json file from /data directory

b = spark.read.json('/data/clickstream/clickstream.json')

b.printSchema()

b.count()

b.show()

You can access the application UI on port number 4050 (this is the equivalent of the Spark UI on 4040)

For developing applications, we have a spark-dev environment that has all the necessities installed.

See spark-dev/README.md for more details.

See here for some sample applications to get you started:

- Scala app and instructions to run it

- Java app and instructions to run it

- Python app and instructions to run it

To troubleshoot connection issues, try busybox

$ docker run -it --rm --user $(id -u):$(id -g) --network bobafett-net busybox

And from within busybox, to test connectivity

$ ping spark-master

$ telnet spark-master 7077

# Press Ctrl + ] to exit
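The same connectivity check can be scripted. Below is a minimal Python sketch of a TCP reachability test, roughly what `telnet spark-master 7077` verifies. The hostname `spark-master` only resolves from a container attached to the same Docker network, and busybox does not ship Python, so this would have to run from a container that does (for example one of the Spark containers, which include Python for pyspark). The function name `can_connect` is illustrative.

```python
import socket


def can_connect(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and name-resolution failures
        return False
```

If `can_connect("spark-master", 7077)` returns `False`, the likely suspects are that the master container is not running or that the caller is on a different Docker network.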