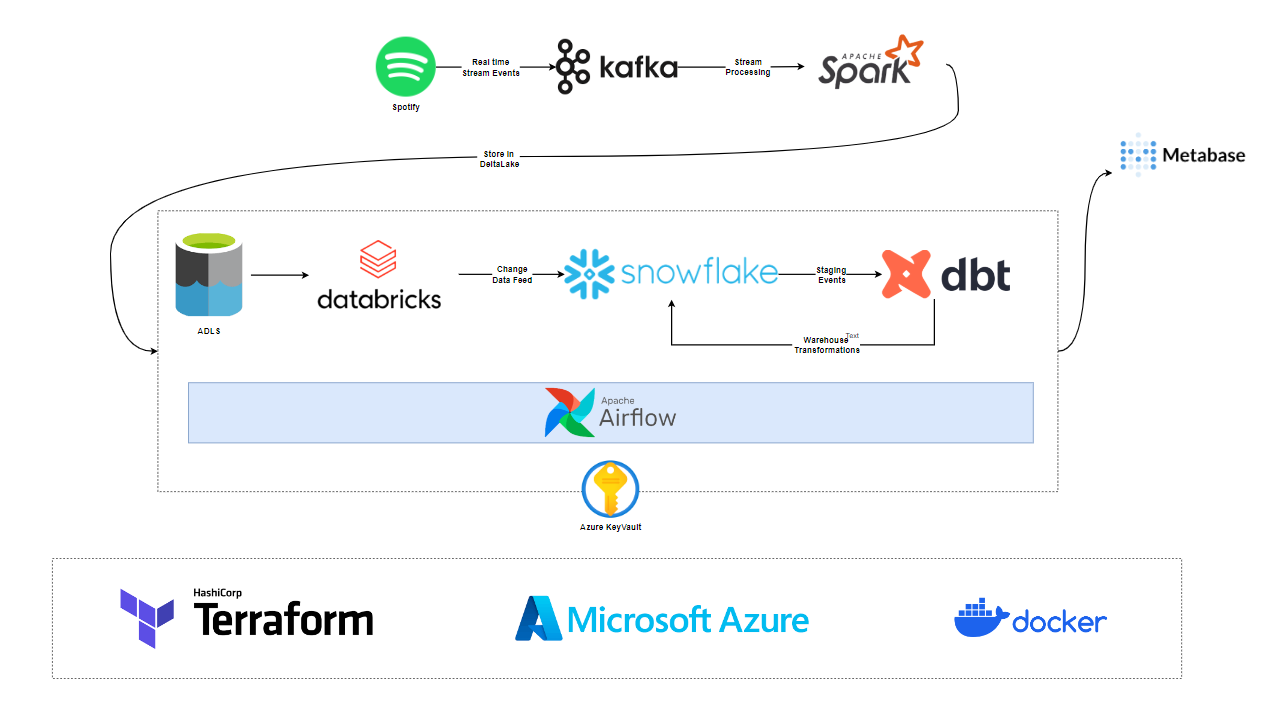

Generates a synthetic Spotify music streaming dataset for building dashboards. Track metadata from the Spotify API is used to generate fake listening events, which are emitted to Kafka. Spark consumes and processes the Kafka data and saves it to the data lake. Airflow orchestrates the pipeline. dbt moves the data to Snowflake and transforms it, and the transformed data feeds the dashboards in Metabase.
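To make the first hop of the pipeline concrete, here is a minimal sketch of how one fake listening event could be serialized before being handed to a Kafka producer. The field names, the `listen_events` topic name, and the `send` callable are assumptions for illustration, not names taken from this repository; in a real setup `send` would wrap a Kafka client's produce call.

```python
import json
import time
import uuid
from typing import Callable, Optional

TOPIC = "listen_events"  # hypothetical topic name


def build_listen_event(user_id: int, track_id: str, ts: Optional[float] = None) -> dict:
    """Build one fake listening event (field names are illustrative)."""
    return {
        "event_id": str(uuid.uuid4()),
        "user_id": user_id,
        "track_id": track_id,
        "listened_at": ts if ts is not None else time.time(),
    }


def emit(event: dict, send: Callable[[str, bytes, bytes], None]) -> None:
    """Serialize the event to JSON bytes and pass it to send(topic, key, value).

    Using user_id as the message key is an assumption; with key-based
    partitioning it keeps one user's events on the same partition.
    """
    key = str(event["user_id"]).encode("utf-8")
    value = json.dumps(event).encode("utf-8")
    send(TOPIC, key, value)
```

For a dry run without a broker, `emit(build_listen_event(1, "track-123"), lambda t, k, v: print(t, k, v))` prints what would be sent.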

- Songs: Leveraged the Spotify API to create artist and track data, extracted from a set of playlists. Each track includes title, artist, album, ID, release date, etc.

- Users: Created user demographic data with randomized first/last names, gender, and location details.

- Interactions: Real-time-like listening data linking users to the songs they "listened" to.
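The users and interactions datasets described above can be sketched roughly as follows. This is an illustration using only the standard library, not the project's actual generator: the sample names, cities, field names, and the 1 to 300 second gap between listens are all assumptions, and the track IDs would in practice come from the Spotify playlists.

```python
import random
from datetime import datetime, timedelta

# Hypothetical sample values for illustration only.
FIRST_NAMES = ["Ana", "Ben", "Chloe", "Dev"]
LAST_NAMES = ["Khan", "Lopez", "Smith", "Tanaka"]
GENDERS = ["F", "M"]
CITIES = ["Austin", "Berlin", "Lagos", "Osaka"]


def make_users(n, rng):
    """Create n users with randomized name, gender, and location."""
    return [
        {
            "user_id": i,
            "first_name": rng.choice(FIRST_NAMES),
            "last_name": rng.choice(LAST_NAMES),
            "gender": rng.choice(GENDERS),
            "city": rng.choice(CITIES),
        }
        for i in range(1, n + 1)
    ]


def make_interactions(users, track_ids, n, rng, start):
    """Create n listen events linking random users to random tracks.

    Timestamps advance by a random gap so the stream looks real-time-like.
    """
    events = []
    ts = start
    for _ in range(n):
        ts += timedelta(seconds=rng.randint(1, 300))
        events.append(
            {
                "user_id": rng.choice(users)["user_id"],
                "track_id": rng.choice(track_ids),
                "listened_at": ts.isoformat(),
            }
        )
    return events
```

Passing a seeded `random.Random` instance as `rng` makes the generated data reproducible between runs.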

Feel free to explore and analyze the datasets included in this repository to uncover patterns, trends, and valuable insights in the realm of music and user interactions. If you have any questions or need further information about the dataset, please refer to the documentation provided or reach out to the project contributors.

- Cloud - Azure

- Infrastructure as Code software - Terraform

- Containerization - Docker, Docker Compose

- Secrets Manager - Azure Key Vault

- Stream Processing - Apache Kafka, Spark Streaming

- Data Processing - Databricks

- Data Warehouse - Snowflake

- Pipeline Orchestration - Apache Airflow

- Warehouse Transformation - dbt

- Data Visualization - Metabase

- Language - Python

- Set up a free Azure account & Azure Key Vault - Setup

- Set up Terraform and create resources - Setup

- SSH into the first VM (kafka-vm)

- Set up the Snowflake warehouse - Setup

- Set up the Databricks workspace & CDC (Change Data Capture) job - Setup

- SSH into the second VM (airflow-vm)

A lot can still be done :).

- Choose managed infrastructure

  - Confluent Cloud for Kafka

- Write data quality tests

- Include CI/CD

- Add more visualizations
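As a starting point for the data quality item above, here is a minimal sketch of two checks on interaction records: required fields are not null, and every `user_id` and `track_id` refers to a known user or track. The function name and field names are hypothetical. In this stack the same checks would more naturally live in dbt as `not_null` and `relationships` tests; the Python version only illustrates the logic.

```python
def check_interactions(interactions, user_ids, track_ids):
    """Return a list of problems found; an empty list means all checks passed."""
    problems = []
    for i, row in enumerate(interactions):
        # Not-null check on the required fields.
        for field in ("user_id", "track_id", "listened_at"):
            if row.get(field) is None:
                problems.append(f"row {i}: {field} is null")
        # Referential checks against the known users and tracks.
        if row.get("user_id") is not None and row["user_id"] not in user_ids:
            problems.append(f"row {i}: unknown user_id {row['user_id']}")
        if row.get("track_id") is not None and row["track_id"] not in track_ids:
            problems.append(f"row {i}: unknown track_id {row['track_id']}")
    return problems
```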