Welcome to the GitHub repository for Enhancing Remote Sensing Vision-Language Models for Zero-Shot Scene Classification.

Authors:

K. El Khoury*, M. Zanella*, B. Gérin*, T. Godelaine*, B. Macq, S. Mahmoudi, C. De Vleeschouwer, I. Ben Ayed

*Denotes equal contribution

We introduce RS-TransCLIP, a transductive approach inspired by TransCLIP that enhances Remote Sensing Vision-Language Models without requiring any labels, adding only a negligible computational cost to the overall inference time.

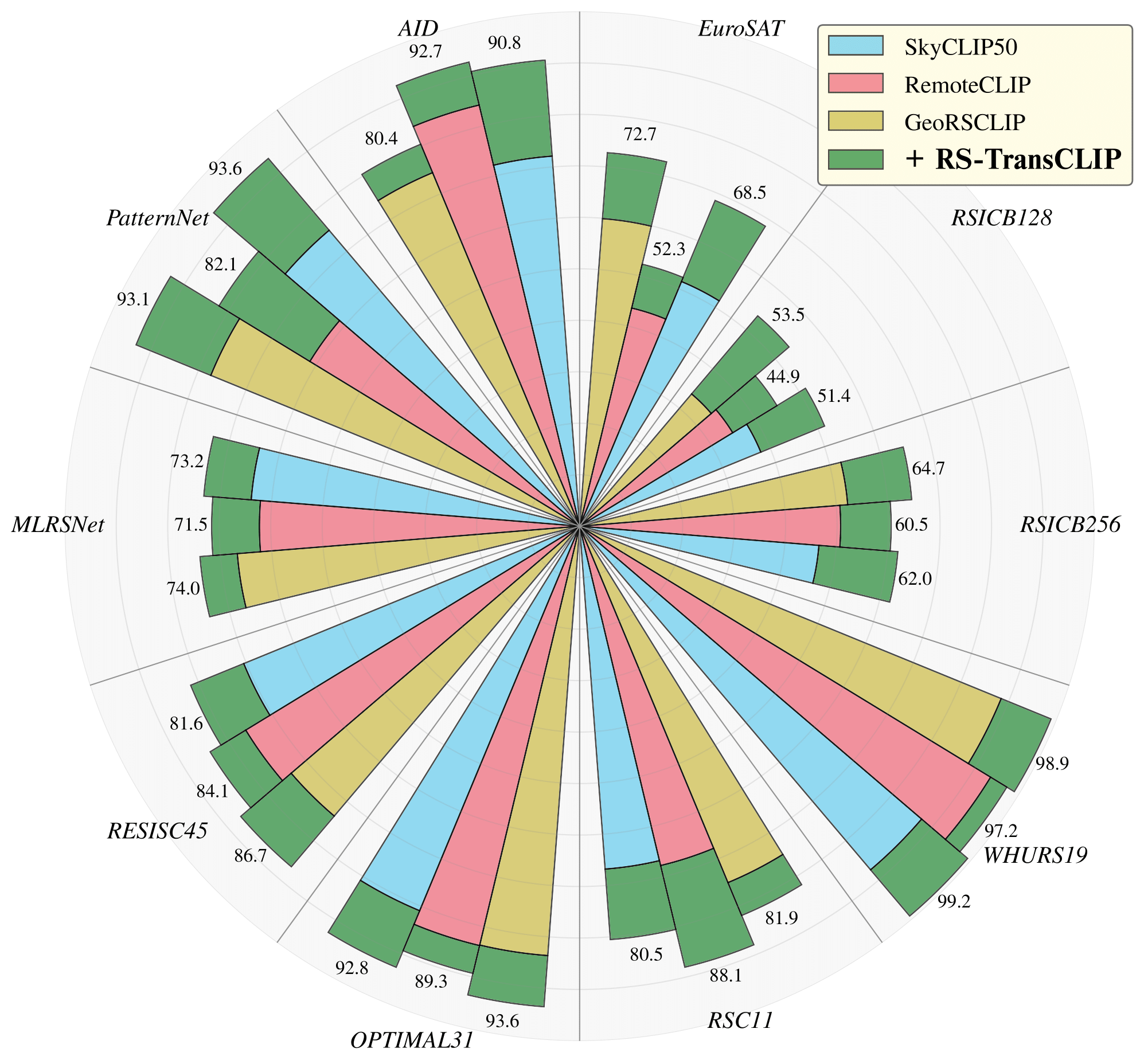

Figure 1: Top-1 accuracy of RS-TransCLIP, on ViT-L/14 Remote Sensing Vision-Language Models, for zero-shot scene classification across 10 benchmark datasets.

NB: the Python version used is 3.10.12.

Create a virtual environment and activate it:

# Example using the virtualenv package on Linux

python3 -m pip install --user virtualenv

python3 -m virtualenv RS-TransCLIP-venv

source RS-TransCLIP-venv/bin/activate.csh

Install PyTorch:

pip3 install torch==2.2.2 torchaudio==2.2.2 torchvision==0.17.2

Clone the GitHub repository and move to the appropriate directory:

git clone https://github.com/elkhouryk/RS-TransCLIP

cd RS-TransCLIP

Install the remaining Python package requirements:

pip3 install -r requirements.txt

You are ready to start! 🎉

10 Remote Sensing Scene Classification datasets are already available for evaluation:

- The WHURS19 dataset is already uploaded to the repository for reference and can be used directly.

- The following 7 datasets (AID, EuroSAT, OPTIMAL31, PatternNet, RESISC45, RSC11, RSICB256) will be automatically downloaded and formatted from Hugging Face using the run_dataset_download.py script.

# <dataset_name> can take the following values: AID, EuroSAT, OPTIMAL31, PatternNet, RESISC45, RSC11, RSICB256

python3 run_dataset_download.py --dataset_name <dataset_name>

Dataset directory structure should be as follows:

    $datasets/
    └── <dataset_name>/
        ├── classes.txt
        ├── class_changes.txt
        └── images/
            ├── <classname>_<id>.jpg
            └── ...

- You must download the MLRSNet and RSICB128 datasets manually from Kaggle and place them in the '/datasets/' directory. You can either format them manually to follow the dataset directory structure listed above, or place the .zip files from Kaggle in the '/datasets/' directory and run the run_dataset_formatting.py script.

# <dataset_name> can take the following values: MLRSNet, RSICB128

python3 run_dataset_formatting.py --dataset_name <dataset_name>

- Download links: RSICB128 | MLRSNet

NB: On the Kaggle website, click the download arrow in the center of the page instead of the Download button to preserve the data structure needed by the run_dataset_formatting.py script (see the figure below).

Notes:

- The class_changes.txt file inserts a space between combined class names. For example, the class name "railwaystation" becomes "railway station." This change is applied consistently across all datasets.

- The WHURS19 dataset is already uploaded to the repository for reference.
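The layout described above can be sanity-checked before generating embeddings. The following is a minimal sketch, not part of the repository: the helper names `class_from_filename` and `check_dataset` are hypothetical, and it assumes only the documented structure (`classes.txt`, `class_changes.txt`, and an `images/` folder of `<classname>_<id>.jpg` files).

```python
from collections import Counter
from pathlib import Path


def class_from_filename(filename: str) -> str:
    """Return the <classname> part of '<classname>_<id>.jpg' (hypothetical helper)."""
    stem = Path(filename).stem
    # Split on the last underscore so class names that contain '_' are preserved.
    classname, sep, _ = stem.rpartition("_")
    return classname if sep else stem


def check_dataset(root: Path) -> Counter:
    """Verify the documented layout and count images per class (hypothetical helper)."""
    required = ("classes.txt", "class_changes.txt", "images")
    missing = [name for name in required if not (root / name).exists()]
    if missing:
        raise FileNotFoundError(f"{root} is missing: {', '.join(missing)}")
    images = (root / "images").glob("*.jpg")
    return Counter(class_from_filename(p.name) for p in images)


# Example call (assumes the dataset folder exists at this path):
# counts = check_dataset(Path("datasets") / "WHURS19")
# print(counts.most_common(5))
```

A per-class count that is empty or far smaller than expected usually points to a download or formatting step that did not complete.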

Running RS-TransCLIP consists of three major steps:

- Generating Image and Text Embeddings

- Generating the Average Text Embedding

- Running Transductive Zero-Shot Classification

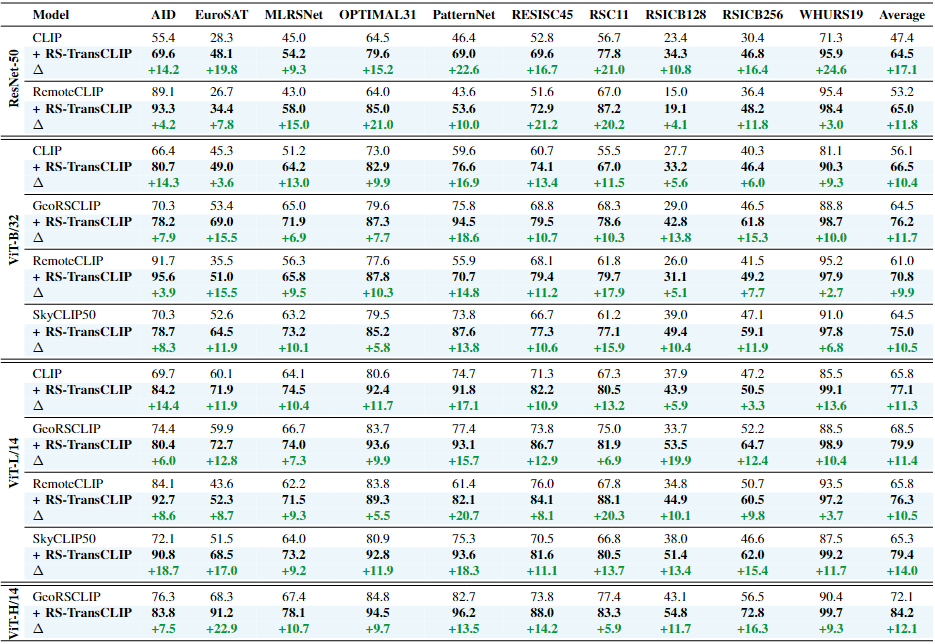

We consider 10 scene classification datasets (AID, EuroSAT, MLRSNet, OPTIMAL31, PatternNet, RESISC45, RSC11, RSICB128, RSICB256, WHURS19), 4 VLMs (CLIP, GeoRSCLIP, RemoteCLIP, SkyCLIP50) and 4 model architectures (RN50, ViT-B-32, ViT-L-14, ViT-H-14) for our experiments.
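To make the notion of a dataset/VLM/architecture trio concrete, the sketch below enumerates every combination of the names listed above. It is illustrative only: the function `all_trios` is hypothetical, and the full Cartesian product is an upper bound, since not every VLM is necessarily available in every architecture.

```python
from itertools import product

DATASETS = ["AID", "EuroSAT", "MLRSNet", "OPTIMAL31", "PatternNet",
            "RESISC45", "RSC11", "RSICB128", "RSICB256", "WHURS19"]
VLMS = ["CLIP", "GeoRSCLIP", "RemoteCLIP", "SkyCLIP50"]
ARCHITECTURES = ["RN50", "ViT-B-32", "ViT-L-14", "ViT-H-14"]


def all_trios():
    """Return every (dataset, VLM, architecture) combination (hypothetical helper)."""
    return list(product(DATASETS, VLMS, ARCHITECTURES))


# Upper bound on the number of trios; some combinations may not exist in practice.
trios = all_trios()
```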

To generate Image embeddings for each dataset/VLM/architecture trio:

python3 run_featuregeneration.py --image_fg

To generate Text embeddings for each dataset/VLM/architecture trio:

python3 run_featuregeneration.py --text_fg

All results for each dataset/VLM/architecture trio will be stored as follows:

    $results/
    └── <dataset_name>/
        └── <model_name>/
            └── <model_architecture>/
                ├── images.pt
                ├── classes.pt
                ├── texts_<prompt1>.pt
                ├── ...
                └── texts_<prompt106>.pt

Notes:

- Text embedding generation produces 106 individual text embeddings for each VLM/dataset combination. The exhaustive list of text prompts can be found in run_featuregeneration.py.

- When generating Image embeddings, the run_featuregeneration.py script will also generate the ground truth labels and store them in "classes.pt". These labels will be used for evaluation.

- Please refer to run_featuregeneration.py to control all the respective arguments.

- The embeddings for the WHURS19 dataset are already uploaded to the repository for reference.
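Once feature generation has run, the presence of the expected files can be checked for a given trio. This is a minimal sketch that assumes the results layout shown above; `check_trio` is a hypothetical helper, and the exact folder names used for models and architectures are an assumption that should be confirmed against the repository.

```python
from pathlib import Path

EXPECTED_PROMPTS = 106  # number of text prompts stated in the notes above
AVERAGE_FILE = "texts_averageprompt.pt"  # produced later by run_averageprompt.py


def check_trio(results_root, dataset, model, architecture):
    """Report which embedding files exist for one trio (hypothetical helper)."""
    trio_dir = Path(results_root) / dataset / model / architecture
    # Exclude the averaged file so that only per-prompt embeddings are counted.
    prompt_files = [p for p in trio_dir.glob("texts_*.pt") if p.name != AVERAGE_FILE]
    return {
        "has_images": (trio_dir / "images.pt").is_file(),
        "has_classes": (trio_dir / "classes.pt").is_file(),
        "num_prompts": len(prompt_files),
        "complete_prompts": len(prompt_files) == EXPECTED_PROMPTS,
    }


# Example call (folder names are assumptions):
# print(check_trio("results", "WHURS19", "CLIP", "ViT-L-14"))
```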

To generate the Average Text embedding for each dataset/VLM/architecture trio:

python3 run_averageprompt.py

Notes:

- The run_averageprompt.py script averages all embeddings whose file names match "texts_*.pt" for each dataset/VLM/architecture trio and creates a file called "texts_averageprompt.pt".

- The Average Text embeddings for the WHURS19 dataset are already uploaded to the repository for reference.
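The averaging step can be illustrated with a small sketch. It uses plain Python lists so that it runs without PyTorch, whereas the repository stores embeddings as .pt files; the function name `average_prompt_embeddings` and the assumed shape (one vector per class for each prompt) are illustrative assumptions, and whether run_averageprompt.py re-normalizes the result is not stated here.

```python
def average_prompt_embeddings(prompt_embeddings):
    """Element-wise mean over prompts (hypothetical helper).

    prompt_embeddings: list with one entry per prompt; each entry is a list of
    class vectors, i.e. shape [num_prompts][num_classes][dim] (assumed layout).
    """
    num_prompts = len(prompt_embeddings)
    if num_prompts == 0:
        raise ValueError("at least one prompt embedding is required")
    num_classes = len(prompt_embeddings[0])
    dim = len(prompt_embeddings[0][0])
    averaged = [[0.0] * dim for _ in range(num_classes)]
    for embedding in prompt_embeddings:
        for c in range(num_classes):
            for d in range(dim):
                averaged[c][d] += embedding[c][d] / num_prompts
    return averaged


# Two prompts, two classes, two dimensions.
prompt_a = [[1.0, 0.0], [0.0, 2.0]]
prompt_b = [[0.0, 1.0], [2.0, 0.0]]
average = average_prompt_embeddings([prompt_a, prompt_b])
```

With PyTorch tensors the same operation is a stack over prompts followed by a mean along the prompt dimension.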

To run Transductive zero-shot classification using RS-TransCLIP:

python3 run_TransCLIP.py

Notes:

- The run_TransCLIP.py script will use the Image embeddings "images.pt", the Average Text embedding "texts_averageprompt.pt" and the class ground truth labels "classes.pt" to run Transductive zero-shot classification using RS-TransCLIP.

- The run_TransCLIP.py script will also generate the Inductive zero-shot classification for performance comparison.

- Both Inductive and Transductive results will be stored in "results/results_averageprompt.csv".

- The results for the WHURS19 dataset are already uploaded to the repository for reference.
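For orientation, the inductive baseline mentioned above can be sketched as standard CLIP-style zero-shot classification: each image is assigned the class whose text embedding has the highest cosine similarity. This is a simplified illustration with hypothetical function names and plain lists in place of tensors; it does not reproduce the transductive RS-TransCLIP step, which is implemented in run_TransCLIP.py.

```python
import math


def l2_normalize(vector):
    """Scale a vector to unit length (left unchanged if its norm is zero)."""
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector] if norm > 0 else list(vector)


def zero_shot_predict(image_embeddings, text_embeddings):
    """Pick, for each image, the class with the highest cosine similarity."""
    texts = [l2_normalize(t) for t in text_embeddings]
    predictions = []
    for image in image_embeddings:
        image = l2_normalize(image)
        similarities = [sum(a * b for a, b in zip(image, t)) for t in texts]
        predictions.append(max(range(len(similarities)), key=similarities.__getitem__))
    return predictions


def top1_accuracy(predictions, labels):
    """Fraction of images whose predicted class matches the ground truth."""
    return sum(p == y for p, y in zip(predictions, labels)) / len(labels)


# Toy data: three images, two classes, two-dimensional embeddings.
images = [[1.0, 0.1], [0.2, 1.0], [0.9, 0.8]]
texts = [[1.0, 0.0], [0.0, 1.0]]
labels = [0, 1, 1]
predictions = zero_shot_predict(images, texts)
accuracy = top1_accuracy(predictions, labels)
```

In this toy example the third image is misclassified, which is the kind of borderline case where using the structure of the unlabeled test set can help.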

Table 1: Top-1 accuracy for zero-shot scene classification without (white) and with (blue) RS-TransCLIP on 10 RS datasets.

Support our work by citing our paper if you use this repository:

@article{elkhoury2024enhancing,

title={Enhancing Remote Sensing Vision-Language Models for Zero-Shot Scene Classification},

author={Karim El Khoury and Maxime Zanella and Beno{\^\i}t G{\'e}rin and Tiffanie Godelaine and Beno{\^\i}t Macq and Sa{\"i}d Mahmoudi and Christophe De Vleeschouwer and Ismail Ben Ayed},

journal={arXiv preprint arXiv:2409.00698},

year={2024}

}

Please also consider citing the original TransCLIP paper:

@article{zanella2024boosting,

title={Boosting Vision-Language Models with Transduction},

author={Zanella, Maxime and G{\'e}rin, Beno{\^\i}t and Ayed, Ismail Ben},

journal={arXiv preprint arXiv:2406.01837},

year={2024}

}

For more details on transductive inference in VLMs, visit the comprehensive TransCLIP repository.

Feel free to open an issue or pull request if you have any questions or suggestions. You can also contact us by email.

- Text-prompt variability study for Zero-Shot Scene Classification

- Few-shot RS-TransCLIP implementation for human-in-the-loop scenarios