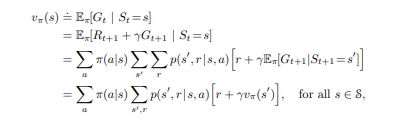

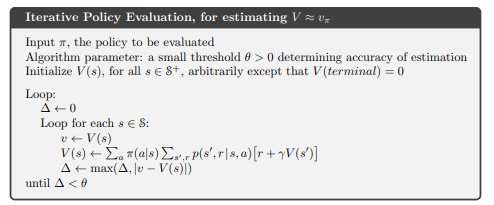

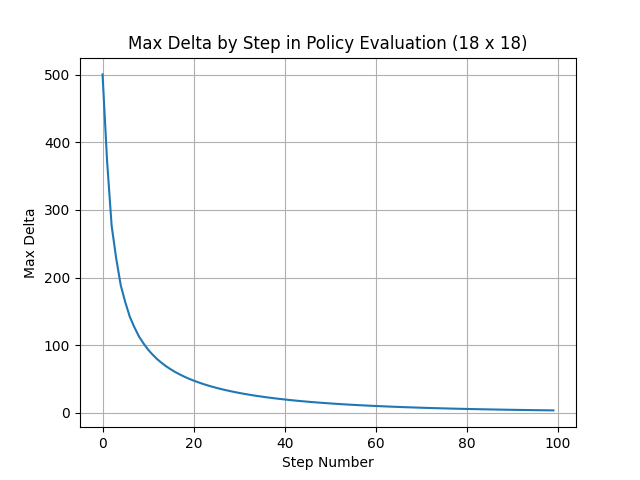

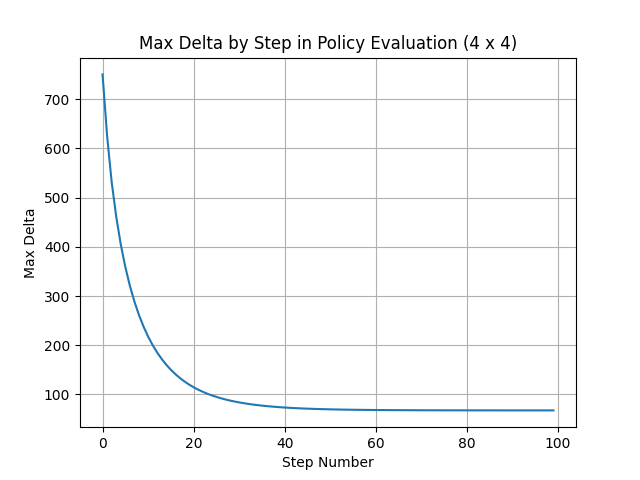

This project implements Iterative Policy Evaluation in a Grid World environment, following *Reinforcement Learning: An Introduction* by Sutton and Barto (Chapter 4). Under an equiprobable random policy, the value of every state is updated iteratively for a fixed number of steps, and the maximum change between successive value estimates, max |v - v'|, is plotted at each step to visualize convergence.
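The evaluation loop described above can be illustrated with a minimal sketch of two-array iterative policy evaluation (Sutton and Barto, Section 4.1). This is not the project's own code: it assumes a hypothetical 4x4 gridworld modeled on the book's Example 4.1 (the two corner states are terminal, every move costs -1), with transitions stored as `(prob, next_state, reward, terminated)` tuples, the same layout used by the `P` table of Gymnasium's toy-text environments such as FrozenLake. `build_gridworld` and `evaluate_policy` are illustrative names.

```python
import numpy as np


def build_gridworld(n=4):
    """Hypothetical n x n gridworld (Sutton & Barto, Example 4.1).

    States are numbered row by row and the two corner states are terminal.
    Each move costs -1; moves off the grid leave the agent in place.
    P[s][a] is a list of (prob, next_state, reward, terminated) tuples.
    """
    n_states = n * n
    terminal = {0, n_states - 1}
    moves = [(-1, 0), (0, 1), (1, 0), (0, -1)]  # up, right, down, left
    P = {}
    for s in range(n_states):
        P[s] = {}
        row, col = divmod(s, n)
        for a, (dr, dc) in enumerate(moves):
            if s in terminal:
                # Terminal states are absorbing and give no reward.
                P[s][a] = [(1.0, s, 0.0, True)]
                continue
            r, c = row + dr, col + dc
            if not (0 <= r < n and 0 <= c < n):
                r, c = row, col  # bumping into a wall keeps the agent in place
            s_next = r * n + c
            P[s][a] = [(1.0, s_next, -1.0, s_next in terminal)]
    return P


def evaluate_policy(P, policy, gamma=1.0, steps=1000):
    """Two-array iterative policy evaluation for a fixed number of sweeps.

    policy[s, a] is the probability of taking action a in state s.
    Returns the value estimates and the max |v - v'| recorded at each sweep.
    """
    n_states, n_actions = policy.shape
    v = np.zeros(n_states)
    deltas = []
    for _ in range(steps):
        v_new = np.zeros(n_states)
        for s in range(n_states):
            for a in range(n_actions):
                for prob, s_next, reward, terminated in P[s][a]:
                    # No bootstrapping past a terminal transition.
                    target = reward + (0.0 if terminated else gamma * v[s_next])
                    v_new[s] += policy[s, a] * prob * target
        deltas.append(float(np.max(np.abs(v_new - v))))
        v = v_new
    return v, deltas


if __name__ == "__main__":
    P = build_gridworld(4)
    random_policy = np.full((16, 4), 0.25)  # equiprobable random policy
    v, deltas = evaluate_policy(P, random_policy, gamma=1.0, steps=1000)
    print(np.round(v.reshape(4, 4), 1))
    print("final max delta:", deltas[-1])
```

With `gamma=1.0` and enough sweeps, the estimates should approach the converged values shown in the book's Figure 4.1 (for example, -14 next to a terminal corner and -22 in the two far corners), and `deltas` holds the per-sweep max |v - v'| that one would plot to check convergence.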

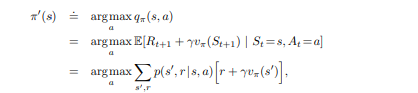

After the value function has been computed, the policy is adjusted based on it and then tested in the Gymnasium environment.
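The README does not say exactly how the policy is adjusted. One standard option is policy improvement (Sutton and Barto, Section 4.2): make the policy greedy with respect to the one-step lookahead q(s, a) = Σ p(s', r | s, a) [r + γ v(s')]. The sketch below assumes the same hypothetical `(prob, next_state, reward, terminated)` transition layout; `greedy_policy` and the tiny corridor model are illustrative, not taken from the project.

```python
import numpy as np


def greedy_policy(P, v, n_actions, gamma=1.0):
    """Return a greedy policy as an (n_states, n_actions) probability array.

    P[s][a] is a list of (prob, next_state, reward, terminated) tuples and
    v holds the state-value estimates produced by policy evaluation.
    """
    n_states = len(v)
    policy = np.zeros((n_states, n_actions))
    for s in range(n_states):
        # One-step lookahead: expected return of each action under v.
        q = np.zeros(n_actions)
        for a in range(n_actions):
            for prob, s_next, reward, terminated in P[s][a]:
                q[a] += prob * (reward + (0.0 if terminated else gamma * v[s_next]))
        # Split probability evenly among all (numerically) tied best actions.
        best = np.flatnonzero(np.isclose(q, q.max()))
        policy[s, best] = 1.0 / len(best)
    return policy


if __name__ == "__main__":
    # Hypothetical 4-state corridor: action 0 = left, 1 = right. State 3 is a
    # terminal goal, and only the move into it pays a reward of 1.
    P = {
        0: {0: [(1.0, 0, 0.0, False)], 1: [(1.0, 1, 0.0, False)]},
        1: {0: [(1.0, 0, 0.0, False)], 1: [(1.0, 2, 0.0, False)]},
        2: {0: [(1.0, 1, 0.0, False)], 1: [(1.0, 3, 1.0, True)]},
        3: {0: [(1.0, 3, 0.0, True)], 1: [(1.0, 3, 0.0, True)]},
    }
    v = np.array([0.81, 0.9, 1.0, 0.0])  # values of "always move right", gamma = 0.9
    print(greedy_policy(P, v, n_actions=2, gamma=0.9))
```

In this toy corridor the three non-terminal states all pick "right", while the terminal state, where every action is worth 0, keeps an even split. Splitting ties evenly keeps the output a valid probability distribution per state.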

- Clone the repository and navigate to the project directory.
- Install dependencies:

  ```bash
  pip install -r requirements.txt
  ```

Run the main script with default settings:

```bash
python3 main.py
```

This runs policy evaluation on the large grid and generates visualizations of the value function and of the policy execution.

To run the policy evaluation on a smaller grid, use the --map option:

```bash
python3 main.py --map='small'
```

Customize the evaluation with additional command-line options:

```bash
python3 main.py --map='small' --video_folder='./videos' --steps=1000 --episodes=1
```

- `--map`: choose a map size (`small` or `large`).
- `--video_folder`: folder in which to save video recordings of the policy execution.
- `--steps`: number of steps for iterative policy evaluation.
- `--episodes`: number of episodes to run.

This project is licensed under the MIT License.