Proximal Policy Optimization (PPO)

This repository provides a clean, working implementation of the PPO algorithm in JAX and Haiku. To see it in action, open the Colab notebook linked at the top of the repository page.

Interested readers can have a look at our report, which goes deeper into the details.

Contents

Environments

Agents

- random_agent: an agent that takes random actions.

- vanilla_ppo: our implementation of PPO.

Tricks

Networks

- Separate value and policy networks.

- The standard deviation of the policy can either be predicted by the policy network or fixed to a given value. When it is predicted, a softplus activation keeps it positive.

- Orthogonal initialization of the weights and constant initialization of the biases.

- Activation functions are tanh.
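The network tricks above can be sketched in plain JAX. This is a minimal illustration, not the repository's Haiku code: the helper names (`init_mlp`, `mlp`, `policy_dist`), the hidden sizes, and the gains (sqrt(2) for hidden layers, a small gain for the policy output) are assumptions borrowed from common PPO practice.

```python
import jax
import jax.numpy as jnp

# Hypothetical helpers illustrating the network tricks; not the repository's Haiku modules.

def init_mlp(key, sizes, hidden_scale=2.0 ** 0.5, out_scale=1.0):
    """Orthogonal weights and constant (zero) biases for each dense layer."""
    params = []
    keys = jax.random.split(key, len(sizes) - 1)
    for i, (k, n_in, n_out) in enumerate(zip(keys, sizes[:-1], sizes[1:])):
        # Assumed gains: hidden_scale for hidden layers, out_scale for the last layer.
        scale = out_scale if i == len(sizes) - 2 else hidden_scale
        w = jax.nn.initializers.orthogonal(scale)(k, (n_in, n_out))
        b = jax.nn.initializers.constant(0.0)(k, (n_out,))
        params.append((w, b))
    return params

def mlp(params, x):
    """tanh hidden activations followed by a linear output layer."""
    for w, b in params[:-1]:
        x = jnp.tanh(x @ w + b)
    w, b = params[-1]
    return x @ w + b

def policy_dist(params, obs, action_dim, fixed_std=None):
    """Return the mean and std of a diagonal Gaussian policy."""
    out = mlp(params, obs)
    mean = out[..., :action_dim]
    if fixed_std is None:
        # The network predicts the std as extra outputs; softplus keeps it positive.
        std = jax.nn.softplus(out[..., action_dim:])
    else:
        # Fixed std: the network only needs action_dim outputs.
        std = jnp.full_like(mean, fixed_std)
    return mean, std

# Separate policy and value networks (illustrative sizes).
key = jax.random.PRNGKey(0)
k_pi, k_v, k_obs = jax.random.split(key, 3)
obs_dim, act_dim = 3, 2
policy_params = init_mlp(k_pi, [obs_dim, 64, 64, 2 * act_dim], out_scale=0.01)
value_params = init_mlp(k_v, [obs_dim, 64, 64, 1], out_scale=1.0)
obs = jax.random.normal(k_obs, (5, obs_dim))
mean, std = policy_dist(policy_params, obs, act_dim)
value = mlp(value_params, obs)[..., 0]
```

Keeping the two parameter sets separate means the policy and value losses never share gradients, which matches the "separate networks" choice listed above.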

Training

- Linear annealing of the learning rates, with different learning rates for the policy and value networks.

- Minibatch updates, with advantages normalized at the minibatch level.
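A minimal sketch of these two training tricks, assuming a schedule that decays to zero over a fixed number of updates and advantages normalized independently inside each minibatch. The helper names and the learning-rate values are hypothetical, chosen only to show separate policy and value schedules.

```python
import jax
import jax.numpy as jnp

def linear_schedule(init_lr, total_updates):
    """Learning rate that decays linearly from init_lr to 0 (hypothetical helper)."""
    def lr_at(update):
        frac = 1.0 - update / total_updates
        return init_lr * jnp.clip(frac, 0.0, 1.0)
    return lr_at

# Separate (illustrative) learning rates for the policy and value networks.
policy_lr = linear_schedule(3e-4, total_updates=1000)
value_lr = linear_schedule(1e-3, total_updates=1000)

def minibatch_indices(key, batch_size, minibatch_size):
    """Shuffle the batch and split it into equally sized minibatches."""
    perm = jax.random.permutation(key, batch_size)
    num_minibatches = batch_size // minibatch_size
    return perm[: num_minibatches * minibatch_size].reshape(num_minibatches, minibatch_size)

def normalize_advantages(adv, eps=1e-8):
    """Zero-mean, unit-std advantages, computed on a single minibatch."""
    return (adv - adv.mean()) / (adv.std() + eps)

# Usage: normalize inside the minibatch loop, not over the whole batch.
advantages = jax.random.normal(jax.random.PRNGKey(1), (64,)) * 5.0 + 3.0
for mb_idx in minibatch_indices(jax.random.PRNGKey(0), 64, 16):
    mb_adv = normalize_advantages(advantages[mb_idx])
    # The PPO loss for this minibatch would use mb_adv and the current policy_lr / value_lr.
```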

Loss

- Using Generalized Advantage Estimation (GAE).

- Clipped probability ratio between the new and old policies.

- Minimum between ratio × GAE and clipped_ratio × GAE.

- Clipped gradient norm
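The loss components above can be sketched as follows. This is an illustrative JAX version, not the repository's code: `compute_gae`, `ppo_policy_loss`, and `clip_by_global_norm` are hypothetical names, and the defaults (gamma=0.99, lambda=0.95, clip_eps=0.2, max_norm=0.5) are common PPO values assumed here. In practice, Optax's `optax.clip_by_global_norm(max_norm)` transformation provides the gradient clipping step.

```python
import jax
import jax.numpy as jnp

def compute_gae(rewards, values, dones, last_value, gamma=0.99, lam=0.95):
    """Generalized Advantage Estimation over one rollout of length T.

    rewards, values, dones have shape [T]; dones[t] = 1.0 if the episode ended
    at step t, so the value of the next state is not bootstrapped.
    """
    next_values = jnp.append(values[1:], last_value)
    # TD residuals: delta_t = r_t + gamma * V(s_{t+1}) * (1 - done_t) - V(s_t)
    deltas = rewards + gamma * next_values * (1.0 - dones) - values

    def step(gae, inputs):
        delta, done = inputs
        gae = delta + gamma * lam * (1.0 - done) * gae
        return gae, gae

    # Scan backwards in time; outputs come back in the original time order.
    _, advantages = jax.lax.scan(step, jnp.zeros(()), (deltas, dones), reverse=True)
    returns = advantages + values  # regression targets for the value network
    return advantages, returns

def ppo_policy_loss(logp_new, logp_old, adv, clip_eps=0.2):
    """Clipped surrogate objective, negated so that it can be minimized."""
    ratio = jnp.exp(logp_new - logp_old)
    clipped_ratio = jnp.clip(ratio, 1.0 - clip_eps, 1.0 + clip_eps)
    return -jnp.mean(jnp.minimum(ratio * adv, clipped_ratio * adv))

def clip_by_global_norm(grads, max_norm=0.5):
    """Rescale a gradient pytree so that its global L2 norm is at most max_norm."""
    leaves = jax.tree_util.tree_leaves(grads)
    global_norm = jnp.sqrt(sum(jnp.sum(g ** 2) for g in leaves))
    scale = jnp.minimum(1.0, max_norm / (global_norm + 1e-6))
    return jax.tree_util.tree_map(lambda g: g * scale, grads)
```

Taking the minimum makes the objective pessimistic: with a positive advantage the ratio's benefit is capped at 1 + clip_eps, while with a negative advantage the unclipped (larger) penalty is kept.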

Environment wrappers

- Normalization and clipping of the observations

- Normalization and clipping of the rewards

- Action normalization: the agent predicts actions in [-1, 1], and the wrapper scales them back to the environment's action range.
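The wrappers can be sketched with a running mean/variance estimate. This assumes the statistics are merged batch by batch with the parallel variance update used by OpenAI Baselines' `RunningMeanStd`; the helper names, the clip value of 10, and the example `low`/`high` bounds are assumptions. Baselines-style reward normalization divides rewards by the running std of the discounted return rather than subtracting a mean, so the repository's exact reward scheme may differ from this observation-style sketch.

```python
from typing import NamedTuple

import jax.numpy as jnp

class RunningStats(NamedTuple):
    mean: jnp.ndarray
    var: jnp.ndarray
    count: float

def init_stats(shape):
    # A small initial count avoids division by zero (assumed, as in Baselines).
    return RunningStats(jnp.zeros(shape), jnp.ones(shape), 1e-4)

def update_stats(stats, batch):
    """Merge a batch of shape [N, ...] into the running mean and variance."""
    b_mean = batch.mean(axis=0)
    b_var = batch.var(axis=0)
    b_count = batch.shape[0]
    delta = b_mean - stats.mean
    total = stats.count + b_count
    new_mean = stats.mean + delta * b_count / total
    m2 = stats.var * stats.count + b_var * b_count + delta ** 2 * stats.count * b_count / total
    return RunningStats(new_mean, m2 / total, total)

def normalize_and_clip(x, stats, clip=10.0, eps=1e-8):
    """Standardize with the running statistics, then clip to [-clip, clip]."""
    return jnp.clip((x - stats.mean) / jnp.sqrt(stats.var + eps), -clip, clip)

def rescale_action(action, low, high):
    """Map an action from [-1, 1] to the environment range [low, high]."""
    action = jnp.clip(action, -1.0, 1.0)
    return low + 0.5 * (action + 1.0) * (high - low)

# Usage with hypothetical data: 3-dimensional observations, 2-dimensional actions.
obs_stats = update_stats(init_stats((3,)), jnp.array([[0.0, 1.0, 2.0], [2.0, 3.0, 4.0]]))
norm_obs = normalize_and_clip(jnp.array([1.0, 2.0, 3.0]), obs_stats)
env_action = rescale_action(jnp.array([0.0, 1.0]), low=jnp.array([-2.0, 0.0]), high=jnp.array([2.0, 10.0]))
```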

How to run it

The training loop is implemented in the ppo notebook, which contains instances of the agents tuned for each environment. Training metrics (losses, actions, rewards, etc.) are logged to a TensorBoard file that you can monitor separately or from within the notebook. Once training is complete, a video of the agent is generated.

Fast and easy

Run it locally

First, clone the repository:

git clone git@github.com:emasquil/ppo.git

We then recommend using a virtual environment, which you can create and activate as follows:

python3 -m venv env

source env/bin/activate

Finally, install the package:

pip install -e .

If you plan on developing the package, add [dev] at the end instead:

pip install -e .[dev]

This package uses MuJoCo environments, so please install MuJoCo by following its installation instructions.

You might also need to install the following system packages:

sudo apt-get install -y xvfb ffmpeg freeglut3-dev libosmesa6-dev patchelf libglew-dev

After all the installs you should be ready to run the notebook locally.

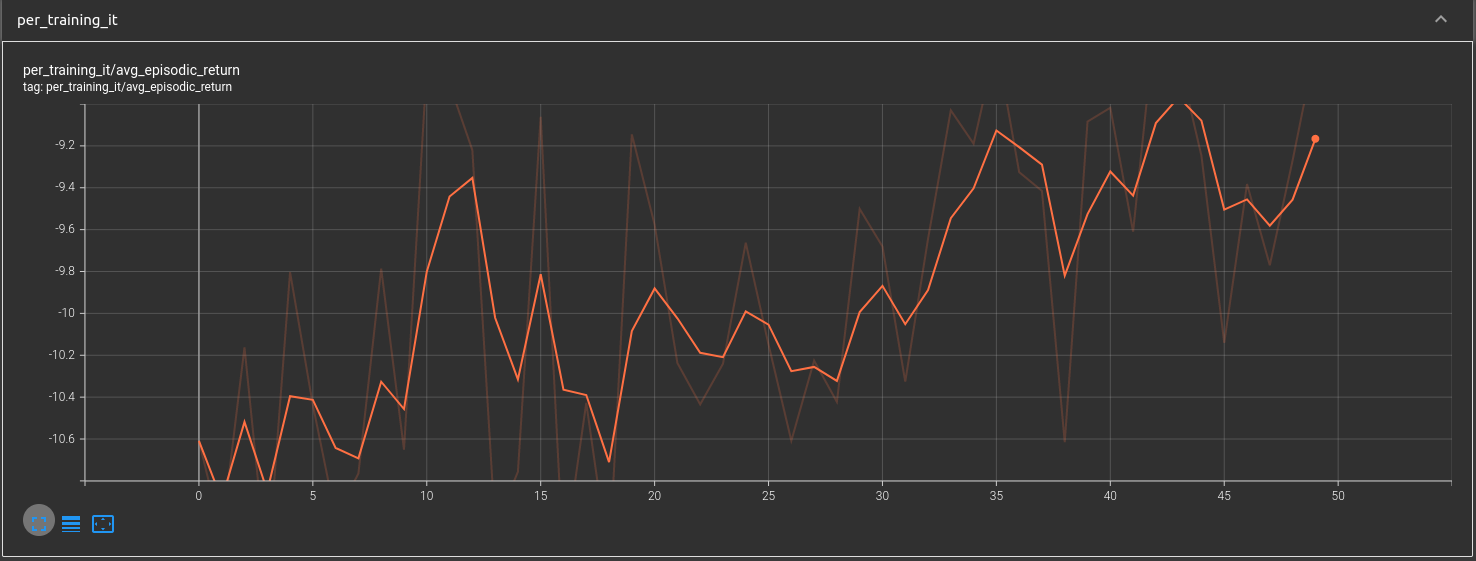

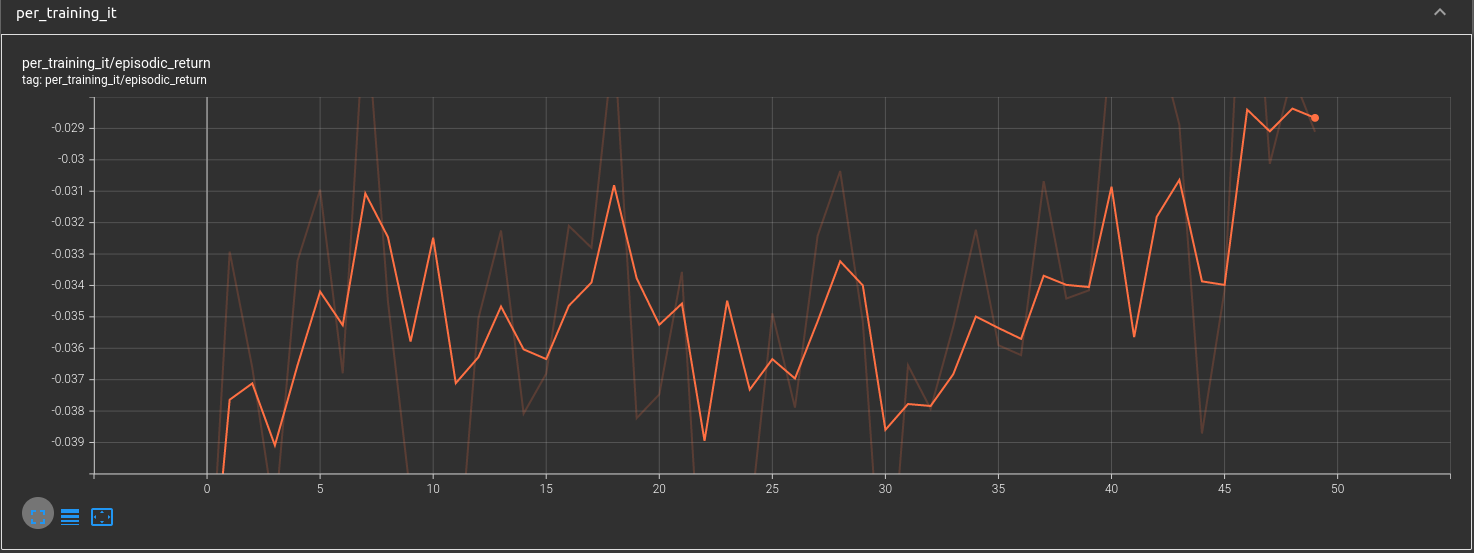

Results

The results directory contains plots, logs, and videos of the agents trained on the environments mentioned above.

Contributing

Before opening a pull request, please format your code with:

black -l 120 ./

Inspirations

vwxyzjn/cleanrl

openai/baselines

DLR-RM/stable-baselines3

openai/spinningup

Costa Huang's blogpost

deepmind/acme