Xuehai He†,1, Weixi Feng*2, Kaizhi Zheng*1, Yujie Lu*2, Wanrong Zhu*2, Jiachen Li*2, Yue Fan*1, Jianfeng Wang3, Linjie Li3, Zhengyuan Yang3, Kevin Lin3, William Yang Wang2, Xin Eric Wang†,1

1UCSC, 2UCSB, 3Microsoft

*Equal contribution

- Release dataset

- Release evaluation code

- EvalAI server setup

- Hugging Face server setup

- Support evaluation with lmms-eval

- [2024.09.21] We integrate the benchmark into lmms-eval.

- [2024.09.17] We set up the Hugging Face server.

- [2024.08.9] We set up the EvalAI server. The portal will open for submissions soon.

- [2024.07.1] We add the evaluation toolkit.

- [2024.06.12] We release our dataset.

The dataset can be downloaded from Hugging Face. Each entry in the dataset contains the following fields:

- video_id: Unique identifier for the video. Same as the relative path of the downloaded video

- video_url: URL of the video

- discipline: Main discipline of the video content

- subdiscipline: Sub-discipline of the video content

- captions: List of captions describing the video content

- questions: List of questions related to the video content, each with options and correct answer

    {
      "video_id": "eng_vid1",
      "video_url": "https://youtu.be/-e1_QhJ1EhQ",
      "discipline": "Tech & Engineering",
      "subdiscipline": "Robotics",
      "captions": [
        "The humanoid robot Atlas interacts with objects and modifies the course to reach its goal."
      ],
      "questions": [
        {
          "type": "Explanation",
          "question": "Why is the engineer included at the beginning of the video?",
          "options": {
            "a": "The reason might be to imply the practical uses of Atlas in a commercial setting, to be an assistant who can perform complex tasks",
            "b": "To show how professional engineers can be forgetful sometimes",
            "c": "The engineer is controlling the robot manually",
            "d": "The engineer is instructing Atlas to build a house"
          },
          "answer": "The reason might be to imply the practical uses of Atlas in a commercial setting, to be an assistant who can perform complex tasks",
          "requires_domain_knowledge": false,
          "requires_audio": false,
          "requires_visual": true,
          "question_only": false,
          "correct_answer_label": "a"
        }
      ]
    }

You can do evaluation by running our evaluation code eval.py. Note that access to the GPT-4 API is required, as defined in line 387 of eval.py.
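Before running any evaluation, it can help to check that entries parse as expected. The following is a minimal sketch, not part of eval.py, that assumes the entry format shown above. The helper names `format_prompt` and `find_label_mismatches` are hypothetical, and the option texts are shortened for brevity.

```python
import json

# One entry in the format shown above; option texts are shortened for brevity
# and a few boolean flags are omitted.
ENTRY = json.loads("""
{
  "video_id": "eng_vid1",
  "video_url": "https://youtu.be/-e1_QhJ1EhQ",
  "discipline": "Tech & Engineering",
  "subdiscipline": "Robotics",
  "captions": [
    "The humanoid robot Atlas interacts with objects and modifies the course to reach its goal."
  ],
  "questions": [
    {
      "type": "Explanation",
      "question": "Why is the engineer included at the beginning of the video?",
      "options": {
        "a": "To imply practical uses of Atlas",
        "b": "To show how engineers can be forgetful",
        "c": "The engineer is controlling the robot manually",
        "d": "The engineer is instructing Atlas to build a house"
      },
      "answer": "To imply practical uses of Atlas",
      "requires_visual": true,
      "correct_answer_label": "a"
    }
  ]
}
""")


def format_prompt(question):
    """Render a question and its lettered options as a plain-text prompt."""
    lines = [question["question"]]
    for label in sorted(question["options"]):
        lines.append(f"({label}) {question['options'][label]}")
    return "\n".join(lines)


def find_label_mismatches(entry):
    """Return indices of questions whose answer text differs from the labeled option."""
    bad = []
    for i, q in enumerate(entry["questions"]):
        if q["options"].get(q["correct_answer_label"]) != q["answer"]:
            bad.append(i)
    return bad


if __name__ == "__main__":
    print(format_prompt(ENTRY["questions"][0]))
    print("mismatches:", find_label_mismatches(ENTRY))
```

The consistency check relies only on the `answer`, `options`, and `correct_answer_label` fields, so it should apply to any entry that follows the same layout.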

To use our example evaluation code, you need to define your model initialization function, such as modelname_init() at line 357 of eval.py, and the model answer function, such as modelname_answer() at line 226 of eval.py.
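As a rough illustration of what these two hooks might look like, here is a placeholder baseline. The argument lists and return types are assumptions made for this sketch; the actual signatures expected by eval.py may differ, so check the referenced lines before adapting it.

```python
import re


def modelname_init():
    """Hypothetical initializer: return whatever state the answer function needs.

    A real implementation would load model weights and a processor here.
    """
    return {"name": "first-option-baseline"}


def modelname_answer(model, video_path, prompt):
    """Hypothetical answer function (signature is an assumption, not eval.py's).

    This placeholder ignores the video and returns the first option label
    of the form "(a)" found in the prompt, or an empty string if none exists.
    """
    match = re.search(r"\(([a-d])\)", prompt)
    return match.group(1) if match else ""


if __name__ == "__main__":
    model = modelname_init()
    print(modelname_answer(model, "eng_vid1.mp4", "Why?\n(a) first\n(b) second"))
```

A trivial baseline like this is mainly useful for confirming that the evaluation loop runs end to end before plugging in a real model.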

Alternatively, you may prepare your model results and submit them to the EvalAI server. The model results format should be as follows:

    {
      "detailed_results": [
        {
          "video_id": "eng_vid1",
          "model_answer": "a</s>"
        },
        ...
      ]
    }

Please refer to LICENSE. All videos of the MMWorld benchmark are obtained from the Internet and are not the property of our institutions. The copyright remains with the original owners of the videos. Should you encounter any data samples violating the copyright or licensing regulations of any site, please contact us. Upon verification, those samples will be promptly removed.
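For the EvalAI results format described earlier, a results file can be assembled with a short script. This is a minimal sketch under stated assumptions: `build_submission` and `write_submission` are hypothetical helper names, and the output path is up to you; only the `detailed_results`, `video_id`, and `model_answer` keys come from the format above.

```python
import json


def build_submission(predictions):
    """Build the results dict from an iterable of (video_id, model_answer) pairs."""
    return {
        "detailed_results": [
            {"video_id": video_id, "model_answer": answer}
            for video_id, answer in predictions
        ]
    }


def write_submission(predictions, path):
    """Serialize the results dict to a JSON file at the given path."""
    with open(path, "w", encoding="utf-8") as f:
        json.dump(build_submission(predictions), f, indent=2)


if __name__ == "__main__":
    # The sample answer mirrors the raw model output shown in the format above.
    print(json.dumps(build_submission([("eng_vid1", "a</s>")]), indent=2))
```

Using `json.dump` rather than hand-written strings avoids syntax slips such as trailing commas, which strict JSON parsers reject.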

@misc{he2024mmworld,

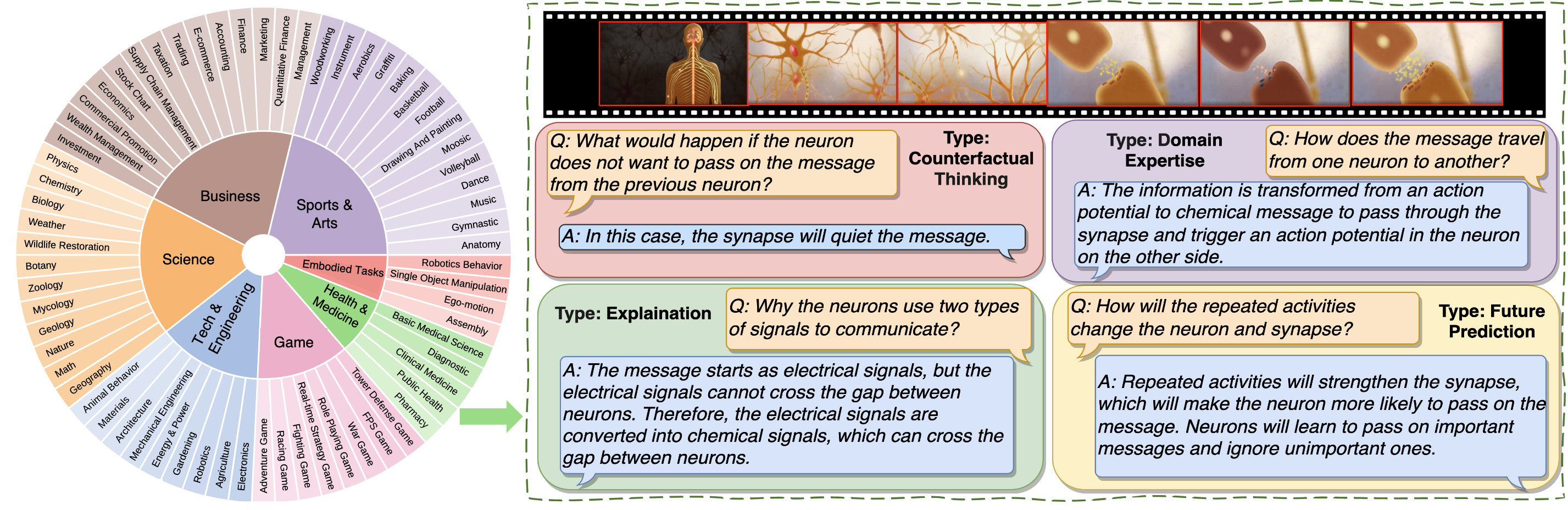

title={MMWorld: Towards Multi-discipline Multi-faceted World Model Evaluation in Videos},

author={Xuehai He and Weixi Feng and Kaizhi Zheng and Yujie Lu and Wanrong Zhu and Jiachen Li and Yue Fan and Jianfeng Wang and Linjie Li and Zhengyuan Yang and Kevin Lin and William Yang Wang and Lijuan Wang and Xin Eric Wang},

year={2024},

eprint={2406.08407},

archivePrefix={arXiv},

primaryClass={cs.CV}

}