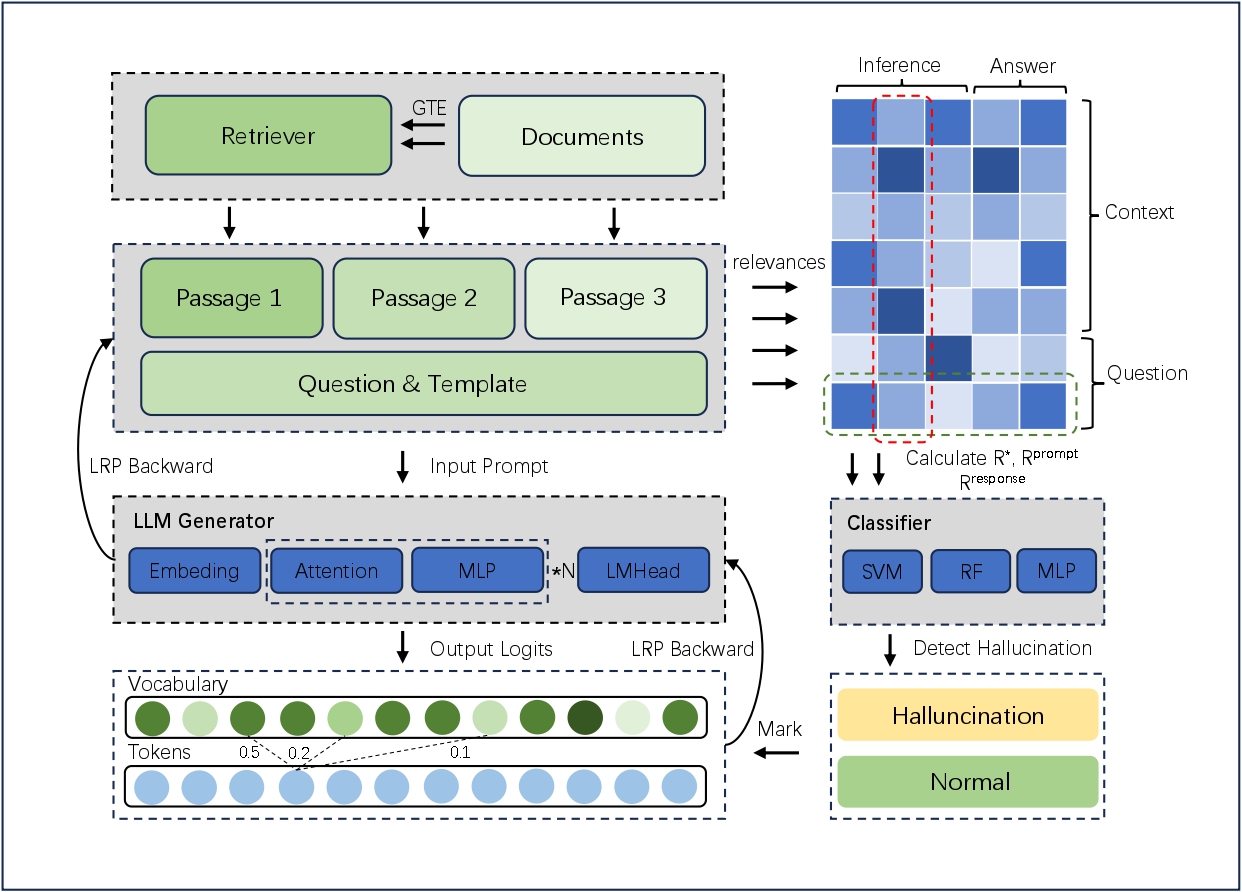

This is the code repository for "LRP4RAG: Detecting Hallucinations in Retrieval-Augmented Generation via Layer-wise Relevance Propagation", which uses LRP-based methods to detect hallucinations in retrieval-augmented generation (RAG).

Layer-wise Relevance Propagation (LRP) is an explainability technique that scales to potentially highly complex deep neural networks. It propagates a model's output backward through its layers to assign a relevance score to each input.
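To make the idea concrete, here is a minimal sketch of one common LRP variant, the epsilon rule, applied to a single bias-free linear layer in plain Python. It is an illustration only and is not the implementation in `core/llama_lrp.py`; the function name `lrp_epsilon` and the toy numbers are assumptions chosen for this example.

```python
def lrp_epsilon(activations, weights, relevance_out, eps=1e-6):
    """Redistribute output relevance to the inputs of a bias-free linear layer.

    activations:   list of n input activations a_i
    weights:       n x m nested list, weights[i][j] connects input i to output j
    relevance_out: list of m relevance scores R_j assigned to the outputs
    """
    n, m = len(activations), len(relevance_out)
    # Pre-activations z_j = sum_i a_i * w_ij
    z = [sum(activations[i] * weights[i][j] for i in range(n)) for j in range(m)]
    # Stabilise the denominator by pushing z_j away from zero along its sign
    z_stab = [zj + (eps if zj >= 0 else -eps) for zj in z]
    # R_i = sum_j (a_i * w_ij / z_stab_j) * R_j
    return [
        sum(activations[i] * weights[i][j] / z_stab[j] * relevance_out[j]
            for j in range(m))
        for i in range(n)
    ]


# Toy example (hypothetical values): 3 inputs, 2 outputs
a = [1.0, 2.0, 0.5]
W = [[0.5, -1.0], [0.25, 0.5], [1.0, 2.0]]
R_out = [0.6, 0.4]

R_in = lrp_epsilon(a, W, R_out)
print(R_in, sum(R_in))
```

With a small `eps` and no bias term, the input relevances sum to approximately the total output relevance, which is the conservation property LRP is built around. Applying a rule like this layer by layer, from the output back to the input tokens, yields per-token relevance scores.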

- `baseline`: code to run the three baselines (SelfCheckGPT, Prompt LLaMA/GPT, Finetune)
- `baseline_output`: intermediate results from running the baselines
- `core`: code for our approach, plus data analytics and visualization
- `data`: raw data from RAG-Truth
- `lrp_result_llama_7b`: LRP results for llama-2-7b-chat
- `lrp_result_llama_13b`: LRP results for llama-2-13b-chat
- `pdf`: visualization PDFs

Download `baseline_output.zip`, `lrp_result_llama_7b.zip`, and `lrp_result_llama_13b.zip` from NJUBox.
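Once the archives are downloaded, they can be unpacked with Python's standard `zipfile` module. The sketch below assumes the three archives were placed in the repository root and that each one unpacks into a folder of the same name; neither assumption is confirmed by this README. The helper name `extract_archives` is hypothetical.

```python
import zipfile
from pathlib import Path

# Archive names as listed in this README
ARCHIVES = [
    "baseline_output.zip",
    "lrp_result_llama_7b.zip",
    "lrp_result_llama_13b.zip",
]


def extract_archives(repo_root=".", archives=ARCHIVES):
    """Extract every listed archive found in repo_root.

    Returns the names of the archives that were extracted. Archives that
    are not present are reported and skipped rather than raising an error.
    """
    root = Path(repo_root)
    extracted = []
    for name in archives:
        path = root / name
        if not path.is_file():
            print(f"missing: {path} (download it from NJUBox first)")
            continue
        with zipfile.ZipFile(path) as zf:
            # Assumption: the archive contents belong in the repository root
            zf.extractall(root)
        extracted.append(name)
    return extracted
```

Calling `extract_archives()` from the repository root unpacks whichever archives are present and lists the ones still missing.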

- run `python core/llama_lrp.py` to get LRP results, which will be saved in `lrp_result_llama_7b`
- run `python core/classifier.py --datasets ../lrp_result_llama_7b --classifier SVM` to get the classification result of our approach

- SelfCheckGPT:
  - run `python baseline/self_checkgpt.py`
  - run `python baseline/self_check_result.py`

- Prompt LLaMA/GPT:
  - run `python baseline/prompt_llama.py` or `python baseline/prompt_gpt.py`
  - run `python baseline/prompt_llm_result.py`

- Finetune:
  - run `python baseline/llm_finetune.py`
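The commands listed above can be collected into a small helper that runs each pipeline in order. This is only a convenience sketch: the command strings are copied verbatim from this README, while the grouping keys (`lrp4rag`, `selfcheckgpt`, and so on) and the function names are labels invented for this example. It defaults to a dry run that prints the commands without executing them.

```python
import shlex
import subprocess

# Command strings copied from the README; the dictionary keys are
# hypothetical labels used only by this sketch.
PIPELINES = {
    "lrp4rag": [
        "python core/llama_lrp.py",
        "python core/classifier.py --datasets ../lrp_result_llama_7b --classifier SVM",
    ],
    "selfcheckgpt": [
        "python baseline/self_checkgpt.py",
        "python baseline/self_check_result.py",
    ],
    "prompt_llama": [
        "python baseline/prompt_llama.py",
        "python baseline/prompt_llm_result.py",
    ],
    "prompt_gpt": [
        "python baseline/prompt_gpt.py",
        "python baseline/prompt_llm_result.py",
    ],
    "finetune": [
        "python baseline/llm_finetune.py",
    ],
}


def build_commands(name):
    """Return the argv lists for one pipeline, in execution order."""
    return [shlex.split(cmd) for cmd in PIPELINES[name]]


def run_pipeline(name, dry_run=True):
    """Print each command of a pipeline and, unless dry_run, execute it."""
    for argv in build_commands(name):
        print(" ".join(argv))
        if not dry_run:
            # check=True stops the pipeline at the first failing step
            subprocess.run(argv, check=True)
```

For example, `run_pipeline("selfcheckgpt")` prints the two SelfCheckGPT commands, and `run_pipeline("selfcheckgpt", dry_run=False)` executes them from the current working directory.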