Name: Erin May Gunawan

Matric No.: A0200765L

GitHub Repo: Task D


Copy the contents of the docker-compose.yml file and place it in your chosen directory.

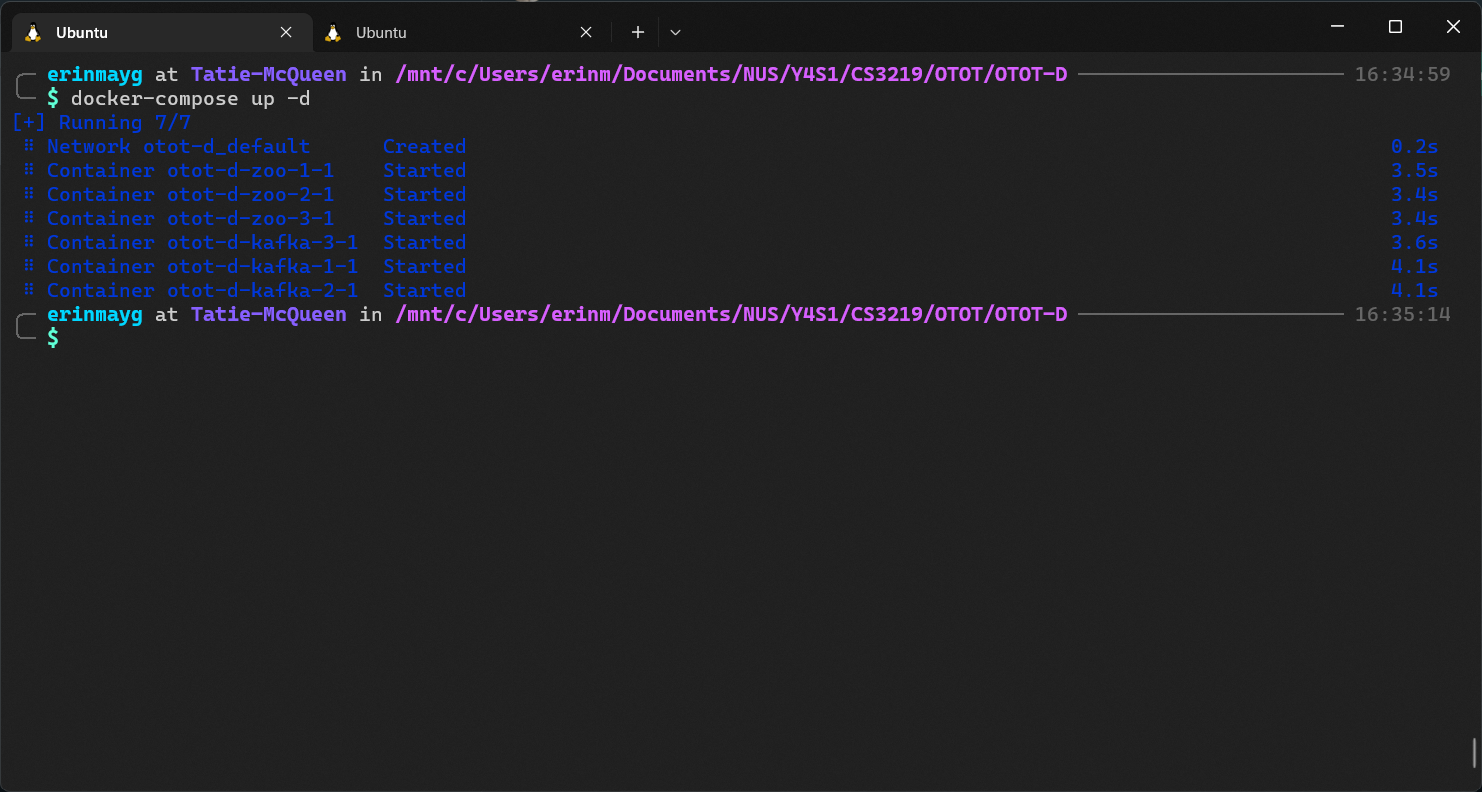

Run the following command in your terminal to start up the containers. Make sure you are in the same directory as your docker-compose.yml file.

docker-compose up

Note: You may use docker-compose up -d if you want the process to run in the background.

Take note of the network used by the containers. By default, it is named after your current directory.

For example, if your docker-compose.yml file path is ./otot-d/docker-compose.yml, your network will be otot-d_default.
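The naming rule above can be sketched as a small shell helper. This is only an illustration: the function name `default_network_name` is hypothetical, and it assumes the project name is simply the lowercased parent directory name, which holds for simple names such as otot-d but may not match Compose's normalisation for unusual directory names.

```shell
# Print the default Compose network name for a given docker-compose.yml path.
# Assumption: the project name is the lowercased name of the parent directory.
default_network_name() {
  compose_dir=$(dirname "$1")
  if [ "$compose_dir" = "." ]; then
    compose_dir=$PWD            # the file sits in the current directory
  fi
  project=$(basename "$compose_dir" | tr '[:upper:]' '[:lower:]')
  printf '%s_default\n' "$project"
}

default_network_name ./otot-d/docker-compose.yml   # prints: otot-d_default
```

Once the containers are up, `docker network ls` lists the networks that actually exist, so the result can be compared against it.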

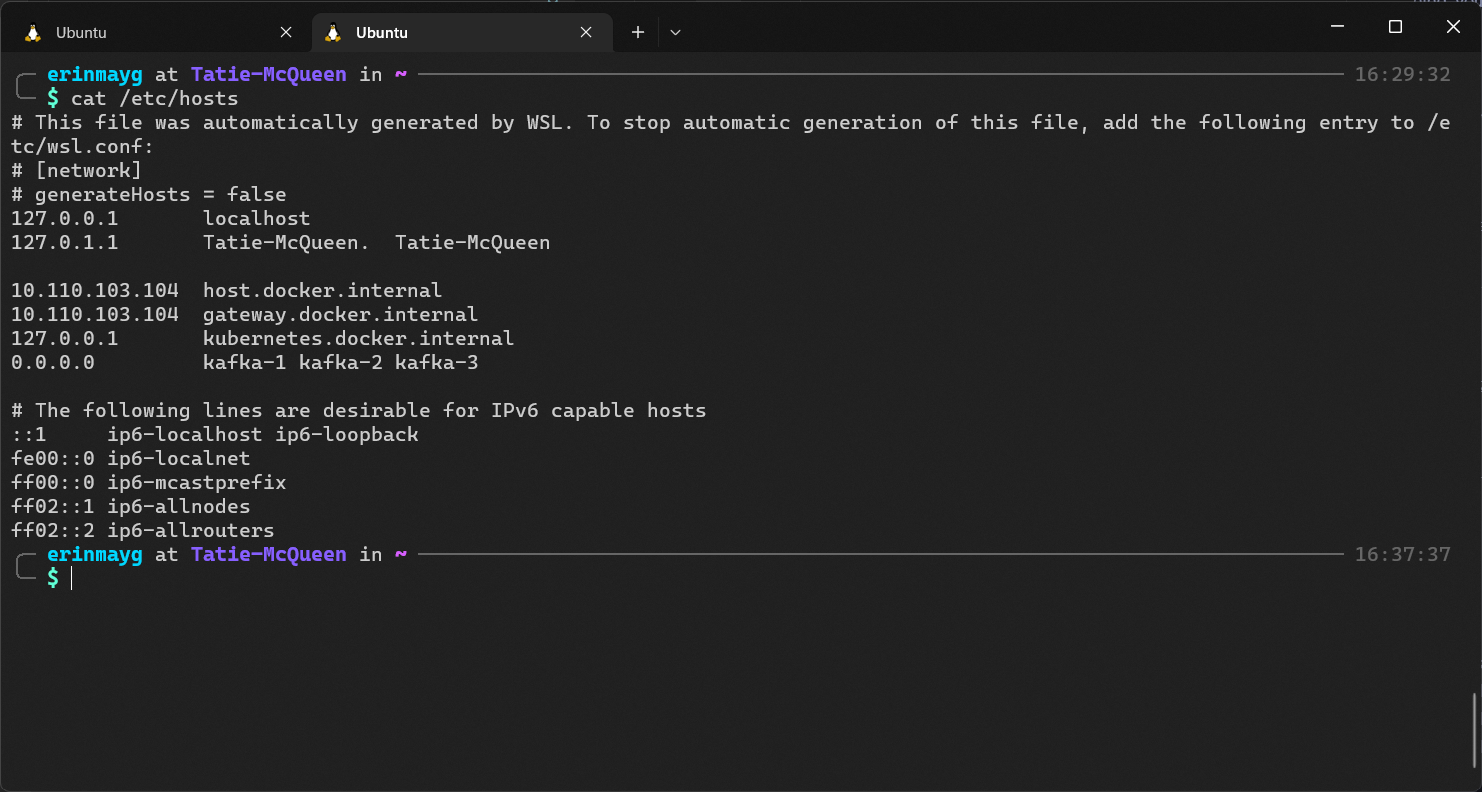

Change the /etc/hosts file on your machine to include the hostnames of the kafka containers.

sudo vim /etc/hosts

Add the following content:

0.0.0.0 kafka-1 kafka-2 kafka-3

kafka-* is the hostname set in your docker-compose.yml file (i.e. kafka-*.hostname).

It should output something similar to the following


Once the containers are running, you may test whether or not it is working by using Kafkacat or Docker Commands.

Install kafkacat if you don't have it already. For Ubuntu users, you may do so by running the commands:

sudo apt-get update

sudo apt-get install kafkacat

For more details on installation, refer to this link.
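To confirm the installation worked, a check along these lines may help. It is a minimal sketch: the function name `find_kafkacat` is hypothetical, and looking for `kcat` as a fallback assumes a newer package where the tool goes by its renamed binary.

```shell
# Print the name of the first available kafkacat binary, or fail if none is found.
# Assumption: newer installations may expose the tool as "kcat" instead.
find_kafkacat() {
  for candidate in kafkacat kcat; do
    if command -v "$candidate" >/dev/null 2>&1; then
      printf '%s\n' "$candidate"
      return 0
    fi
  done
  echo "kafkacat is not installed" >&2
  return 1
}
```

If this prints `kcat`, the commands in the rest of this guide should work the same way with `kcat` in place of `kafkacat`.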


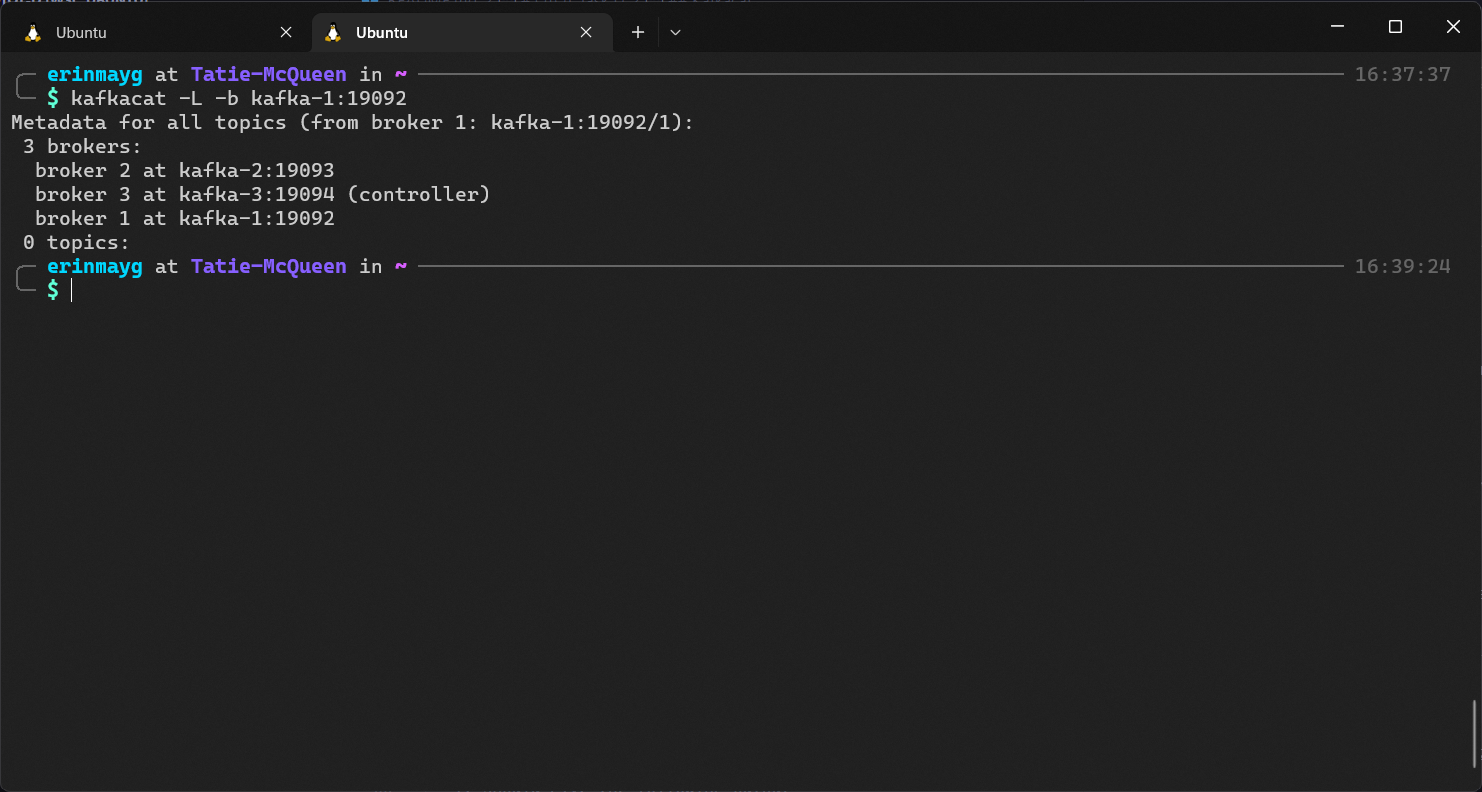

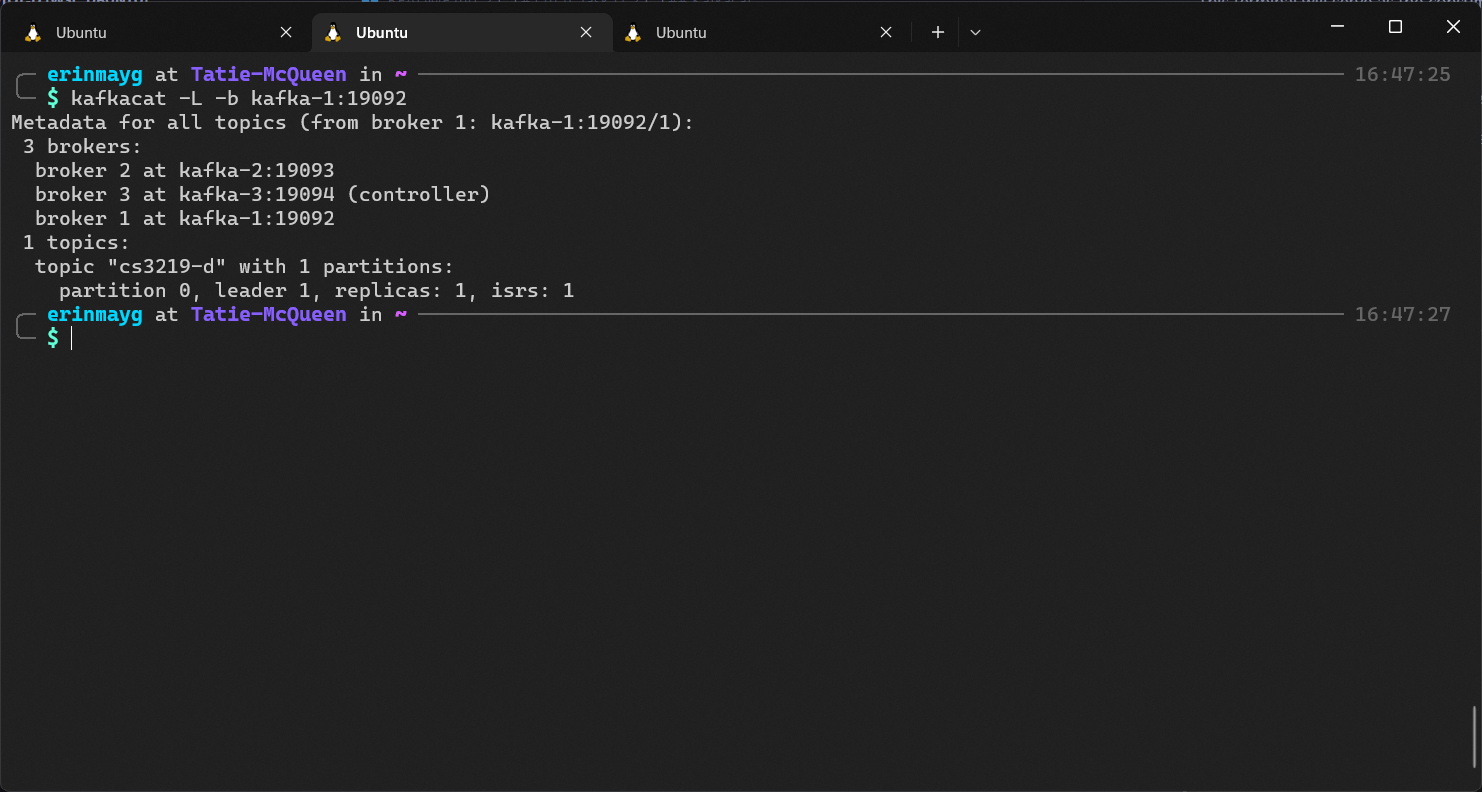

To list out all brokers run

kafkacat -L -b kafka-1:19092

You may replace kafka-1:19092 with any other kafka node, provided you give the correct port number (refer to kafka-*.ports in your docker-compose.yml).

It should give the following output

Take note of the controller node (in this case, it's broker 3).
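Rather than reading the controller off the listing by eye, it can be extracted with a short filter. This is a sketch that assumes the broker lines have the form `broker <id> at <host>:<port> (controller)`; the function name `controller_id` is hypothetical.

```shell
# Read `kafkacat -L` output on stdin and print the id of the controller broker.
# Assumption: the controller line looks like "broker <id> at <host>:<port> (controller)".
controller_id() {
  awk 'index($0, "(controller)") && $1 == "broker" { print $2; exit }'
}
```

A possible use is `kafkacat -L -b kafka-1:19092 | controller_id`, which prints only the broker id.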

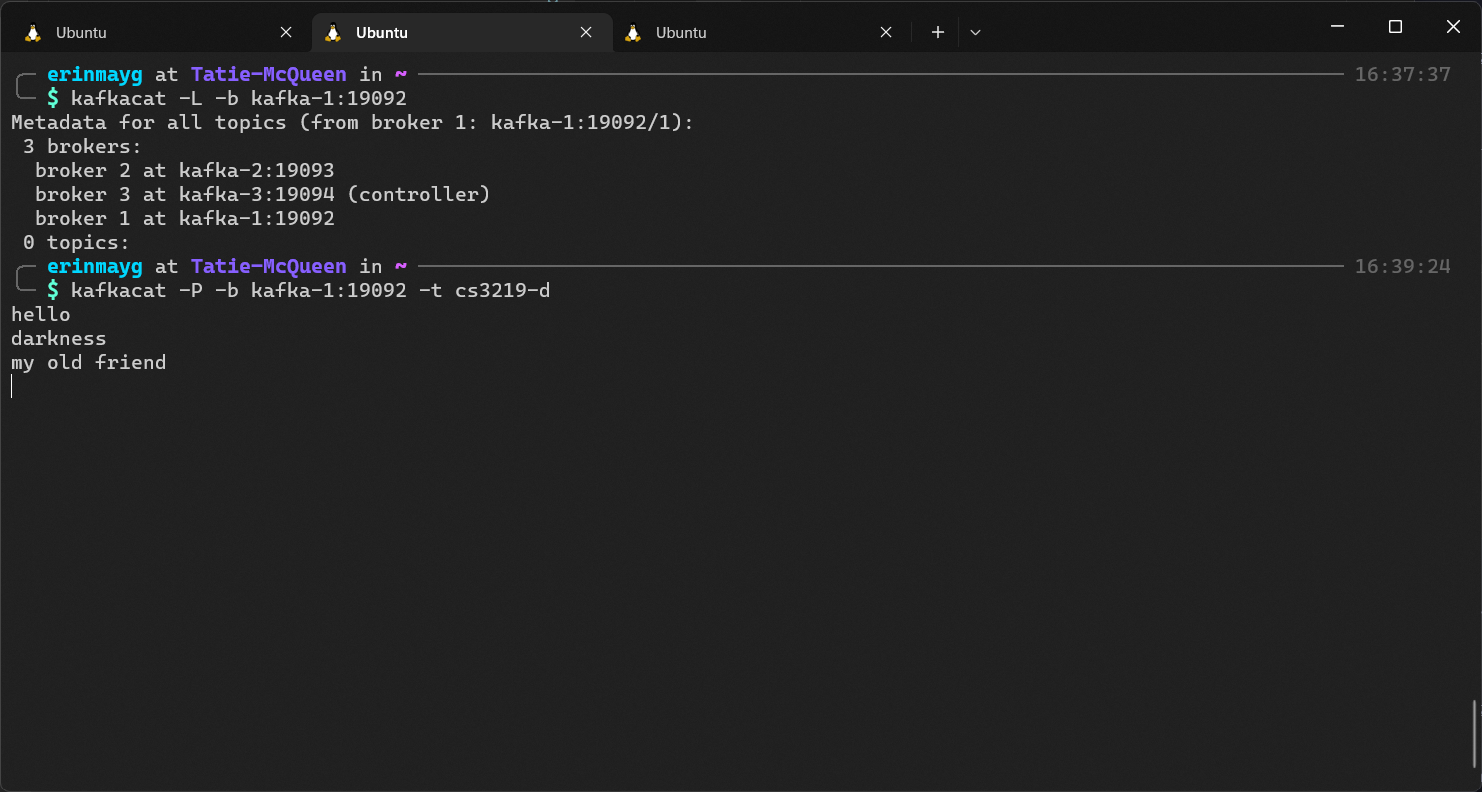

Try sending messages and receiving messages.

In one terminal run

kafkacat -P -b kafka-1:19092 -t cs3219-d

This terminal will serve as the publisher (denoted by the -P flag) with topic cs3219-d (denoted by the -t flag).

The process doesn't end immediately as it's waiting for input messages from the user via stdin.

In another terminal run

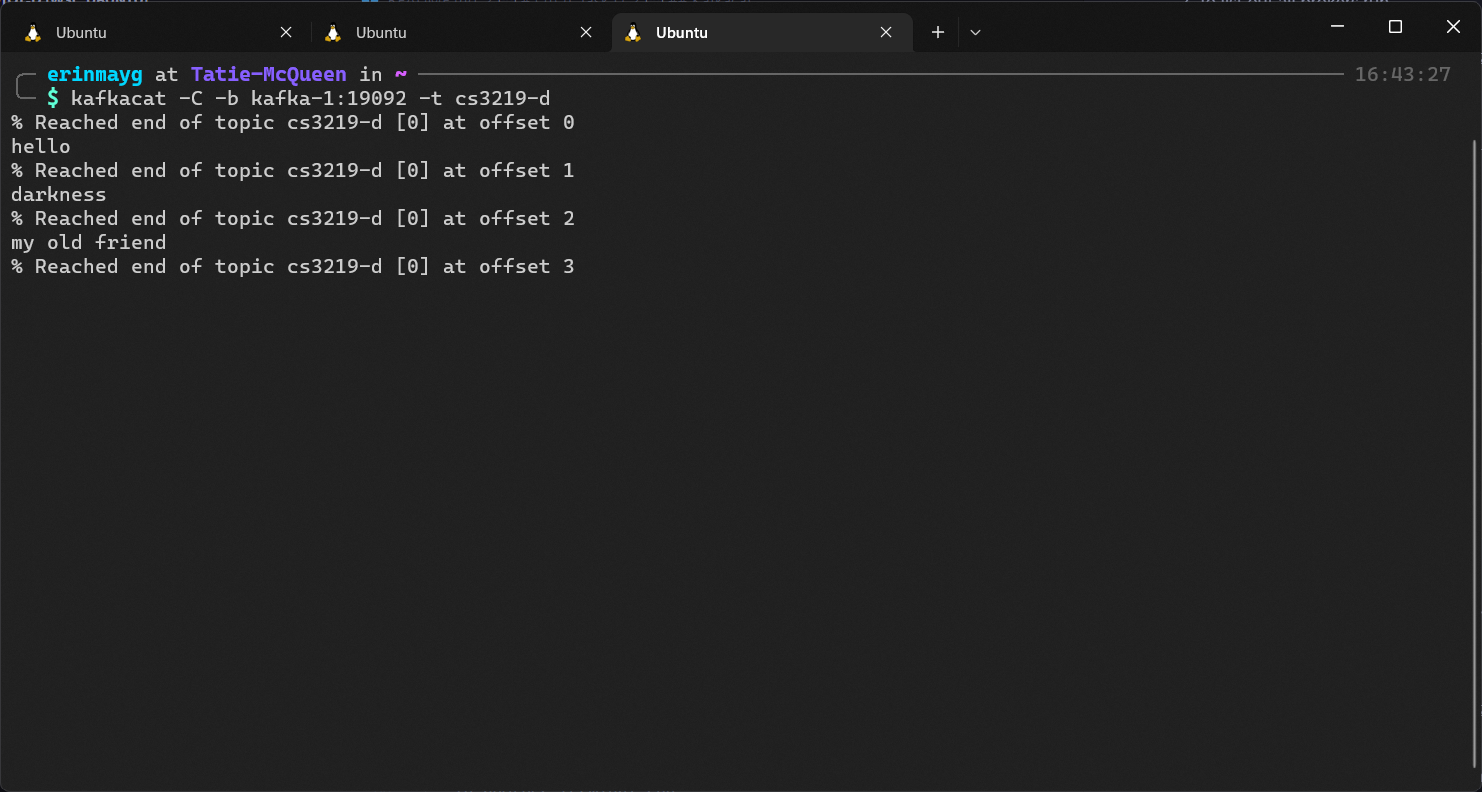

kafkacat -C -b kafka-1:19092 -t cs3219-d

This terminal will serve as the consumer (denoted by the -C flag) listening to the topic cs3219-d.

In the Publisher terminal, you can start typing in messages, and the Consumer terminal should reflect the changes.

To stop the Publisher terminal, press Ctrl + D, and for the Consumer press Ctrl + C.

The output should be the following

And when you relist all the brokers via the command

kafkacat -L -b kafka-1:19092

It should display the new topic cs3219-d.
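The same publish and consume round trip can also be run without typing interactively. The sketch below wraps the two commands in hypothetical helper functions, `publish_messages` and `consume_all`. It relies on kafkacat's `-o beginning` option to read from the start of the topic and `-e` to exit once the last message has been received.

```shell
# Publish every argument after the broker and topic as one message each.
publish_messages() {
  pub_broker=$1
  pub_topic=$2
  shift 2
  printf '%s\n' "$@" | kafkacat -P -b "$pub_broker" -t "$pub_topic"
}

# Read the topic from the beginning and exit at the end of the partition.
consume_all() {
  kafkacat -C -b "$1" -t "$2" -o beginning -e
}
```

For example, `publish_messages kafka-1:19092 cs3219-d hello world` followed by `consume_all kafka-1:19092 cs3219-d` should print the two messages and return to the prompt.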

Test Controller node failure.

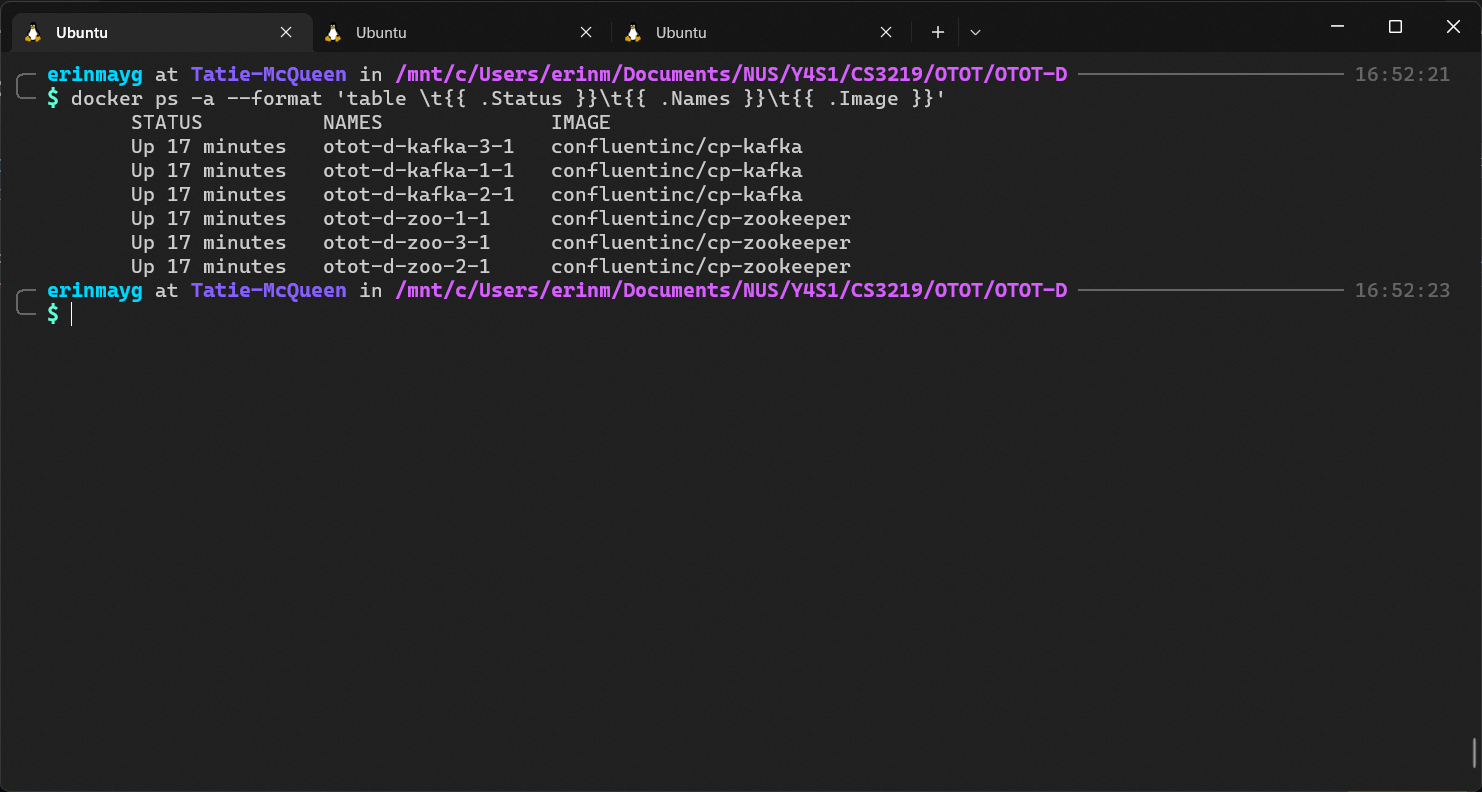

To test this failure, stop the container of the controller node (in this case kafka-3).

You can check the container name via the command

docker ps -a

Then stop it by running

docker stop otot-d-kafka-3-1

This will stop the container (not delete it).


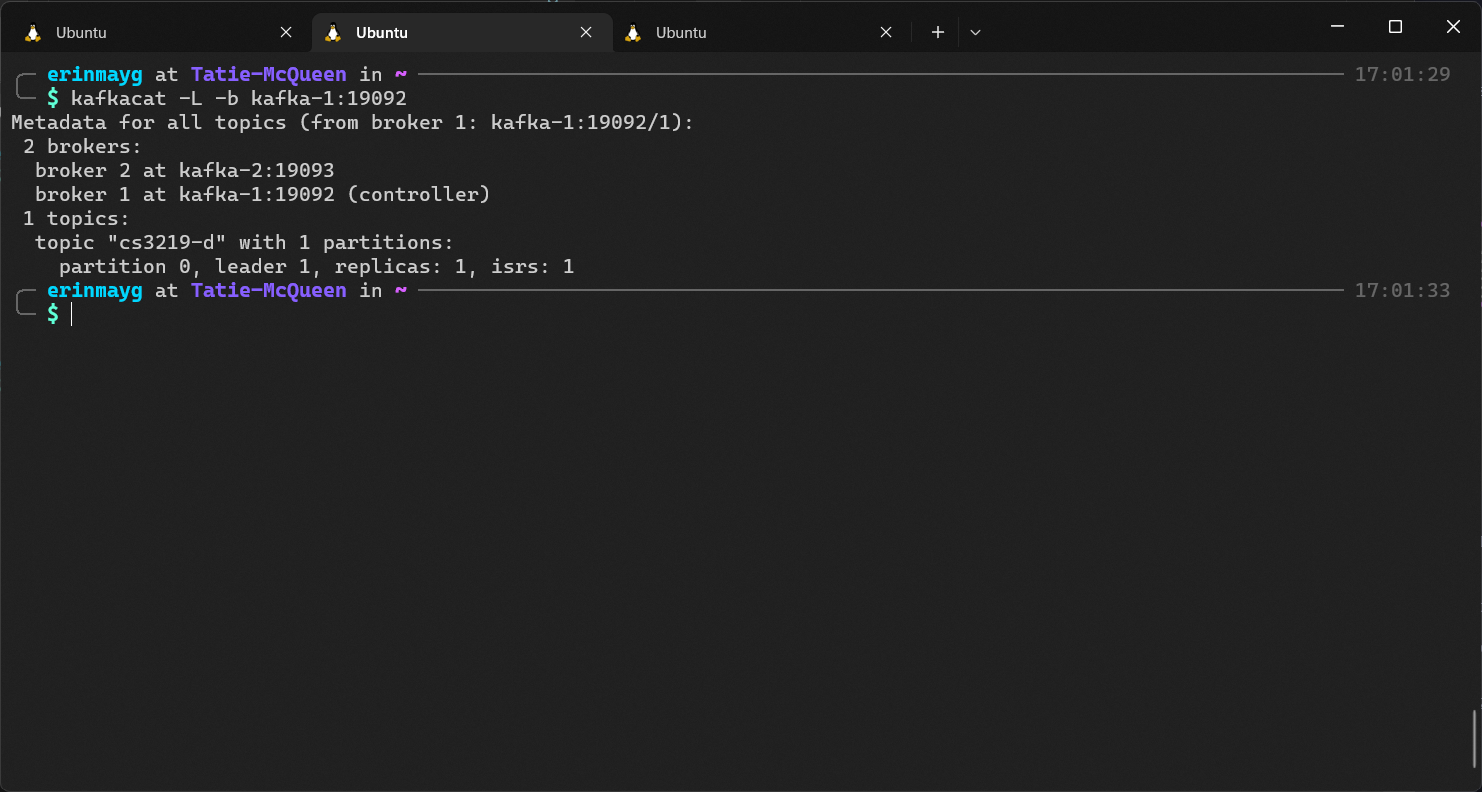

After a couple of moments, run the broker list command again

kafkacat -L -b kafka-1:19092

The controller should have changed to a new broker.

In this case, it was changed to broker 1.
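Because the re-election takes a moment, the check can be repeated in a loop instead of being rerun by hand. The following is a minimal sketch under the same assumption about the `(controller)` marker in the `kafkacat -L` output. The function name `wait_for_new_controller`, the 2-second delay and the default of 10 attempts are all arbitrary choices made for illustration.

```shell
# Poll the broker list until a controller other than the old one is reported.
# $1: old controller id, $2: broker to query, $3: max attempts (default 10)
wait_for_new_controller() {
  old_id=$1
  bootstrap=$2
  attempts=${3:-10}
  while [ "$attempts" -gt 0 ]; do
    new_id=$(kafkacat -L -b "$bootstrap" 2>/dev/null |
      awk 'index($0, "(controller)") && $1 == "broker" { print $2; exit }')
    if [ -n "$new_id" ] && [ "$new_id" != "$old_id" ]; then
      printf '%s\n' "$new_id"     # a different broker is now the controller
      return 0
    fi
    attempts=$((attempts - 1))
    sleep 2
  done
  return 1                        # no new controller seen within the attempts
}
```

After stopping kafka-3, running `wait_for_new_controller 3 kafka-1:19092` would print the id of the newly elected controller, or return a non-zero status if none appears in time.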


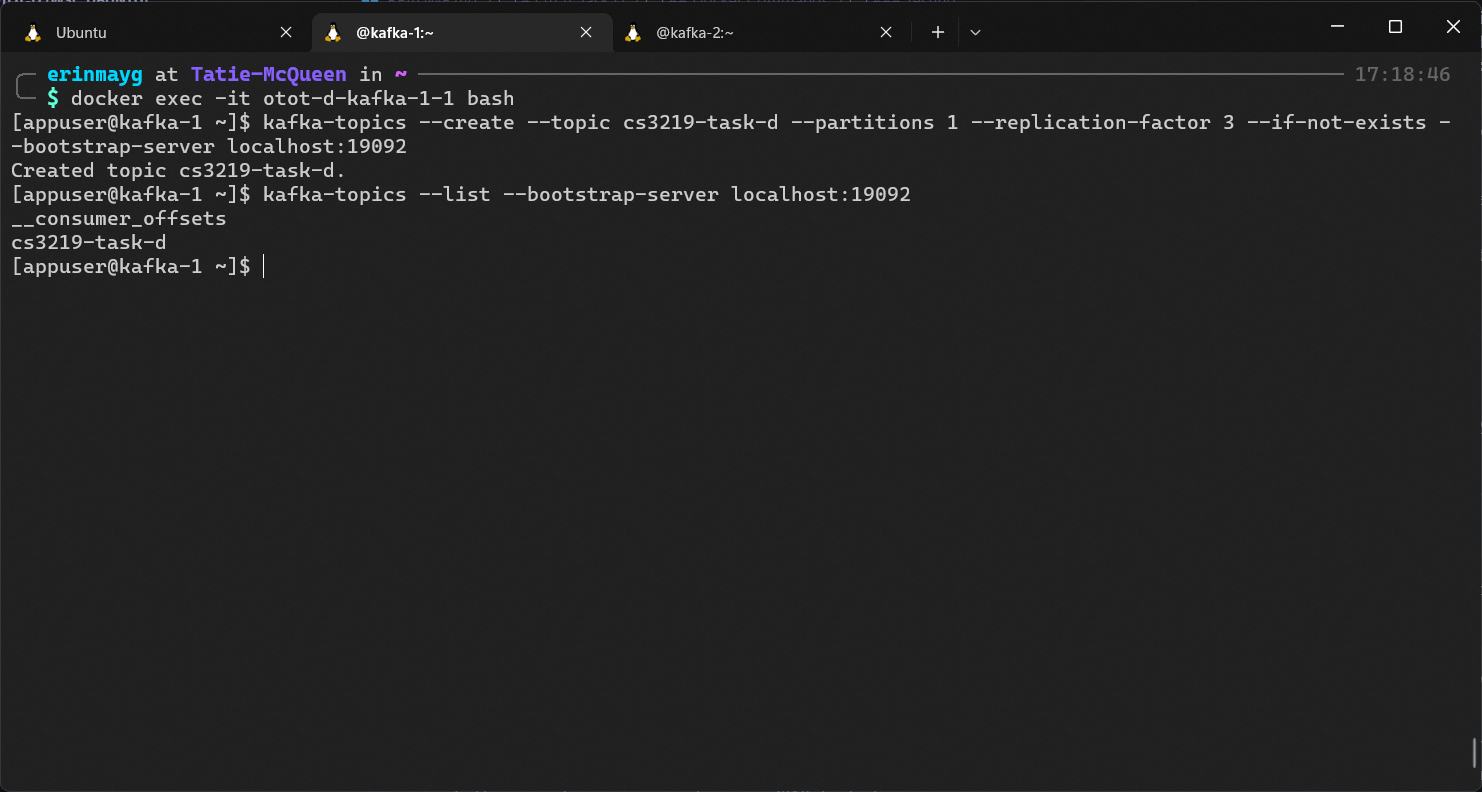

To run kafka commands, you need to enter the broker terminal

List out all container names by running

docker ps -a

It should give an output similar to the following

Enter the terminal of any of the kafka containers

docker exec -it otot-d-kafka-1-1 bash

In another terminal, do the same with a different broker, for example:

docker exec -it otot-d-kafka-2-1 bash

One container will serve as the publisher, the other as a consumer.
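To pick out the broker containers from a longer listing, the names can be filtered. This sketch assumes the `<project>-kafka-<n>-<index>` naming pattern seen in the container names above; the function name `kafka_containers` is hypothetical.

```shell
# Keep only names that look like kafka broker containers created by Compose.
# Assumption: names follow the "<project>-kafka-<n>-<index>" pattern.
kafka_containers() {
  grep -E -- '-kafka-[0-9]+-[0-9]+$'
}
```

It is meant to be fed container names only, for example with `docker ps -a --format '{{.Names}}' | kafka_containers`.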


Start testing sending and receiving messages

One container will serve as the publisher. To create a topic, run

kafka-topics --create --topic cs3219-task-d --partitions 1 --replication-factor 3 --if-not-exists --bootstrap-server localhost:19092

Note that 19092 is the port number of the kafka container (refer to kafka-*.ports). In this case, kafka-1 is used.

Check if the topic cs3219-task-d has been created by running:

kafka-topics --list --bootstrap-server localhost:19092

It should output the following

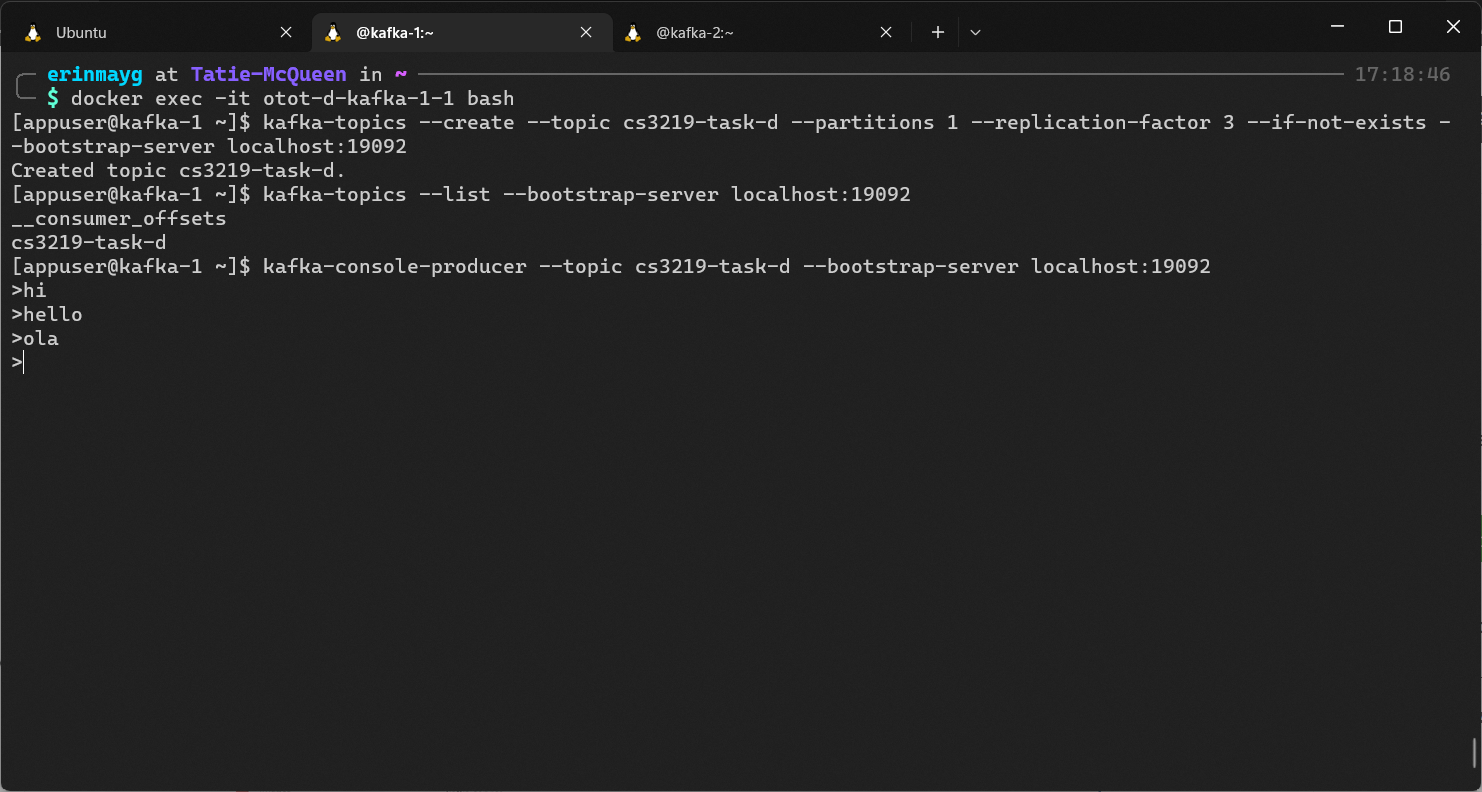
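The topic check can also be turned into a yes or no answer for use in a script. This is a small sketch with a hypothetical function name, `topic_exists`, which assumes the list command prints one topic name per line.

```shell
# Succeed if the topic named $1 appears as a whole line on stdin.
# Assumption: the input has one topic name per line.
topic_exists() {
  grep -qxF -- "$1"
}
```

Inside the kafka-1 container this could be used as `kafka-topics --list --bootstrap-server localhost:19092 | topic_exists cs3219-task-d && echo "topic found"`.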

To type in messages, run:

kafka-console-producer --topic cs3219-task-d --bootstrap-server localhost:19092

The command won't end immediately as it's waiting for input messages from stdin.

Try typing in a couple of messages. Once finished, press Ctrl + D.

The other container will serve as the consumer. Run

kafka-console-consumer --topic cs3219-task-d --bootstrap-server localhost:19093

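Note that 19093 is the port number of the kafka container (refer to kafka-*.ports). In this case, kafka-2 is used.

It should display the messages. If the messages were sent before the consumer was started, add the --from-beginning flag to read them.

To stop listening press Ctrl + C.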

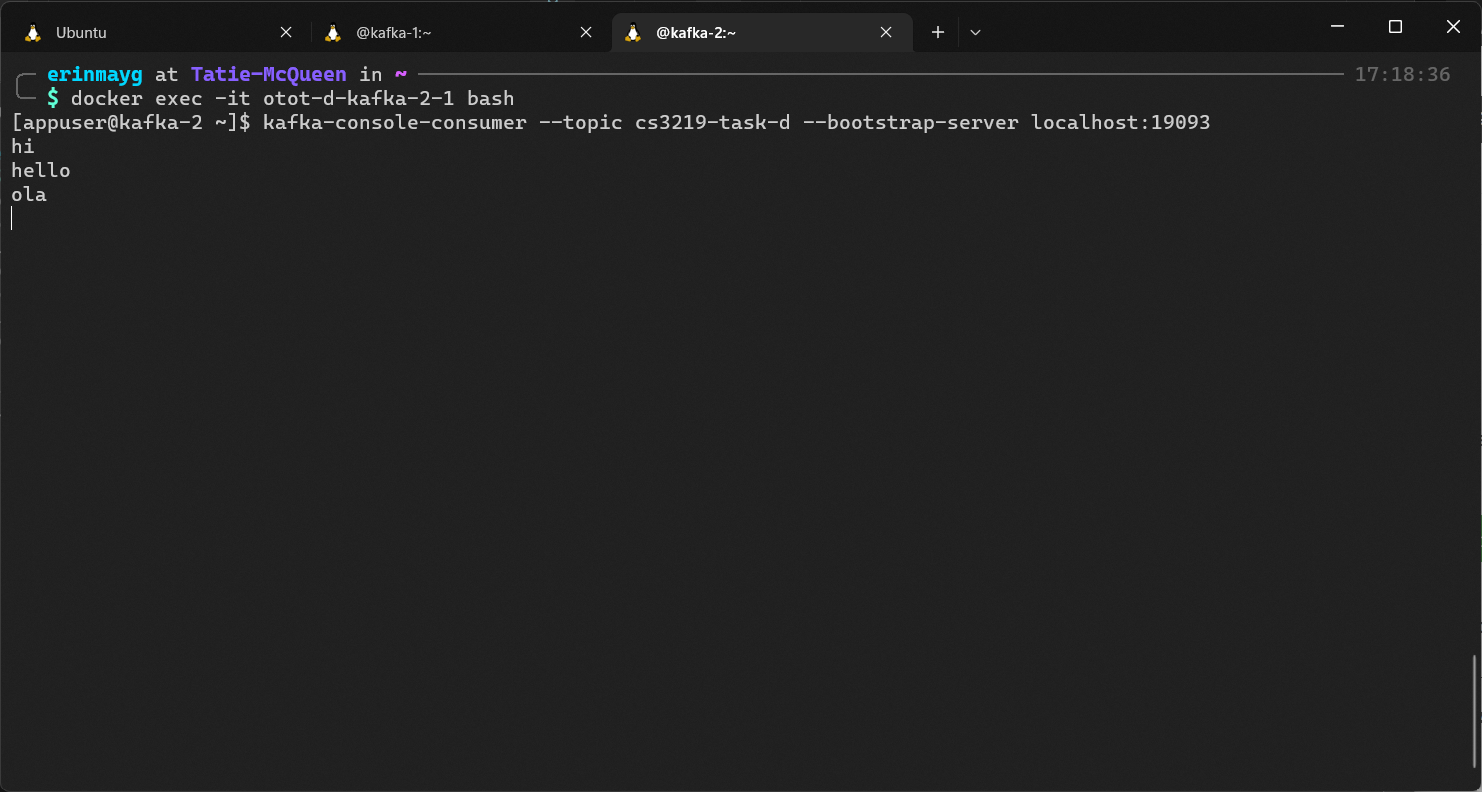

Test Controller Node failure

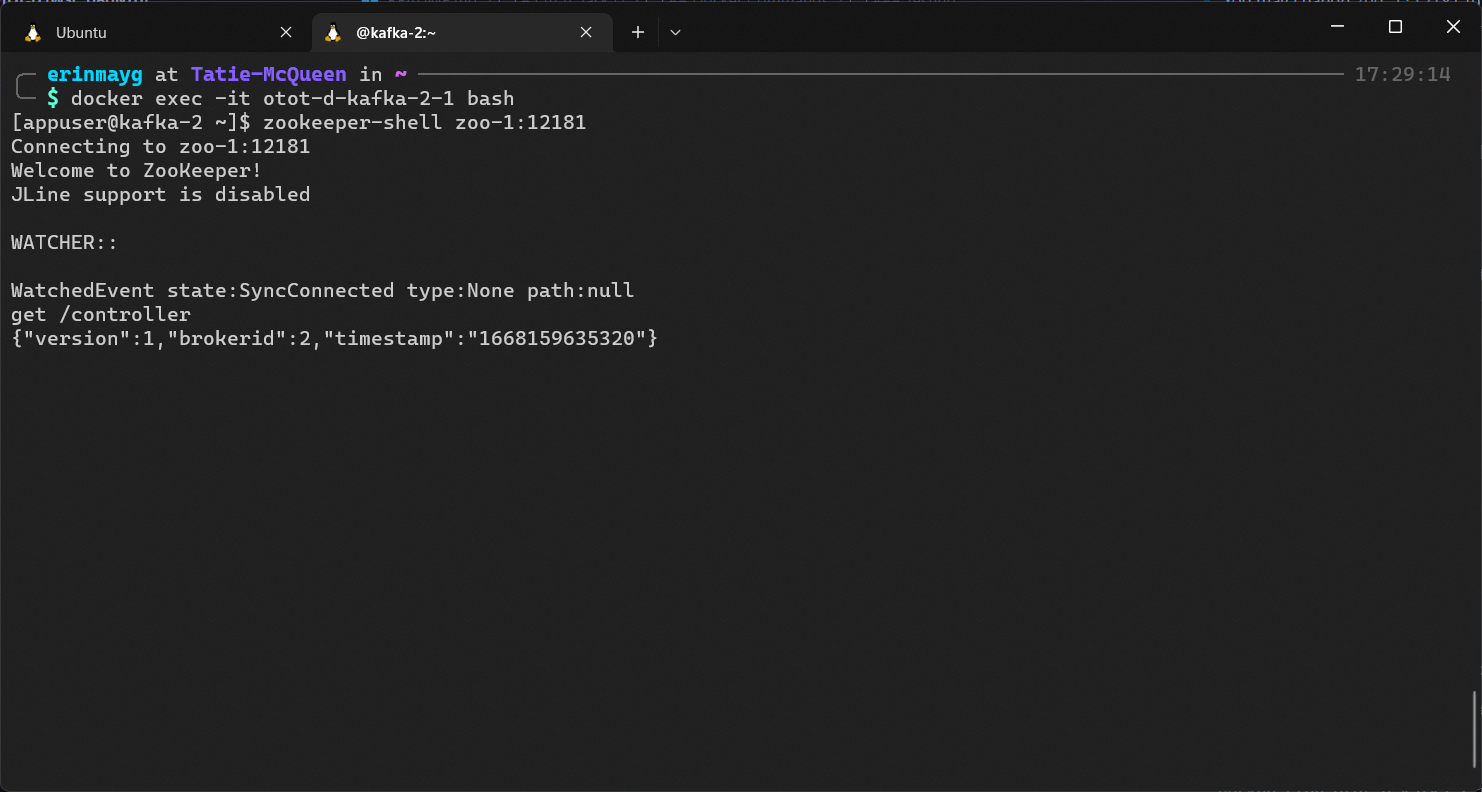

On one of the kafka containers, run

zookeeper-shell zoo-1:12181

You may change zoo-1:12181 to any of the zookeeper containers and their respective port, in the format <hostname>:<port> (refer to zoo-*.hostname and zoo-*.environment.ZOOKEEPER_CLIENT_PORT in the docker-compose.yml file respectively).

You should now be in the zookeeper shell, where you can type

get /controller

and it should return the broker id of the controller

In this case, the controller is

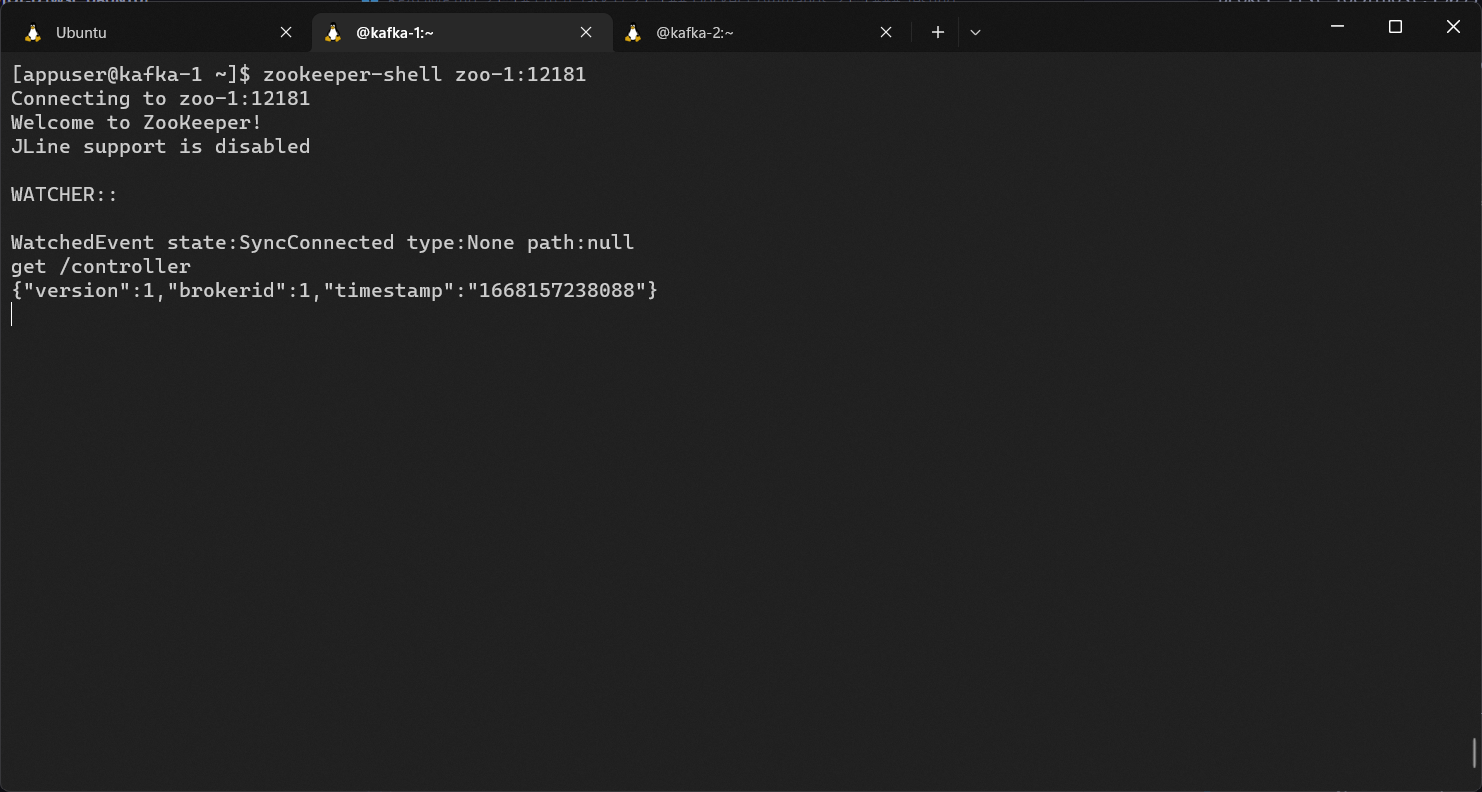

broker 1.Ctrl + Cto exit zookeeper-shell andexitto exit the kafka container.To stop the controller node, take note of the name of the kafka container from step 1. and run

docker stop otot-d-kafka-1-1

On a different kafka container, run the commands in step 3 again and it should output a different broker id as the controller.

Now, broker 2 is the new controller.

Exit the shell with Ctrl + C and exit the kafka container using exit.
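The broker id can also be pulled out of the get /controller output with a short filter. This is a sketch that assumes the znode holds a JSON object with a numeric `brokerid` field, as in the output shown above; the function name `controller_broker_id` is hypothetical.

```shell
# Extract the numeric "brokerid" field from the znode JSON read on stdin.
# Assumption: the data looks like {"version":1,"brokerid":1,"timestamp":"..."}.
controller_broker_id() {
  sed -n 's/.*"brokerid":[[:space:]]*\([0-9][0-9]*\).*/\1/p'
}
```

Assuming zookeeper-shell accepts a command after the address, `zookeeper-shell zoo-1:12181 get /controller | controller_broker_id` would print just the id, which makes the before and after comparison easier.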

To stop the containers, navigate to the directory of docker-compose.yml and run

docker-compose down

It should stop and remove all the containers.