This is a PyTorch implementation of the STGAN model from the paper "Cloud Removal in Satellite Images Using Spatiotemporal Generative Networks" by Sarukkai*, Jain*, Uzkent, and Ermon (https://arxiv.org/abs/1912.06838). STGAN was accepted to IEEE WACV 2020.

This implementation is built on a clone of the Pix2Pix implementation from https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix. This readme is also based on the readme from the CycleGAN/pix2pix repo. That repo contains the code corresponding to the following two papers:

Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks.

Jun-Yan Zhu*, Taesung Park*, Phillip Isola, Alexei A. Efros. In ICCV 2017. (* equal contributions)

Image-to-Image Translation with Conditional Adversarial Networks.

Phillip Isola, Jun-Yan Zhu, Tinghui Zhou, Alexei A. Efros. In CVPR 2017.

- Linux or macOS

- Python 3

- CPU or NVIDIA GPU + CUDA CuDNN

- Clone this repo:

git clone https://github.com/ermongroup/STGAN.git

cd STGAN

- Install PyTorch 0.4+ and other dependencies (e.g., torchvision, visdom, and dominate).

- For pip users, please type the command

pip install -r requirements.txt

Download the STGAN dataset from https://doi.org/10.7910/DVN/BSETKZ. This download includes two datasets (singleImage and multipleImage), both described in the paper and on the linked page. Before training a model, split these images into train/val/test partitions. For instance, for both singleImage/clear and singleImage/cloudy, create three subfolders called "train", "val", and "test", and move the images from the main directory into the corresponding partitions. Assign splits either at random or in a way that keeps images from the same "tile" together, since crops from the same tile may be correlated. We used an 80-10-10 train-val-test split while keeping image crops from the same "tile" together. Make sure that corresponding clear and cloudy images are assigned to the same split.
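The tile-aware split described above could be scripted roughly as follows. This is a minimal sketch, not the script we used: it assumes that paired cloudy and clear images share a filename, and the `tile_id` function is a hypothetical placeholder that treats everything before the last underscore as the tile key. Adjust it to match how tiles are actually encoded in the downloaded filenames. Note that the 80-10-10 fractions are applied to tiles, so the per-image proportions are only approximate.

```python
import random
import shutil
from collections import defaultdict
from pathlib import Path


def tile_id(filename):
    """Hypothetical tile key: the filename stem up to the last underscore."""
    return Path(filename).stem.rsplit("_", 1)[0]


def assign_splits(filenames, fractions=(0.8, 0.1, 0.1), seed=0):
    """Map each filename to 'train', 'val', or 'test', keeping tiles together."""
    tiles = defaultdict(list)
    for name in filenames:
        tiles[tile_id(name)].append(name)

    # Shuffle tile keys (not individual images) with a fixed seed.
    keys = sorted(tiles)
    random.Random(seed).shuffle(keys)

    n_train = int(round(fractions[0] * len(keys)))
    n_val = int(round(fractions[1] * len(keys)))
    groups = {
        "train": keys[:n_train],
        "val": keys[n_train:n_train + n_val],
        "test": keys[n_train + n_val:],
    }
    return {
        name: split
        for split, split_keys in groups.items()
        for key in split_keys
        for name in tiles[key]
    }


def split_pairs(root, seed=0):
    """Move files under root/cloudy and root/clear into train/val/test subfolders.

    The assignment is computed once from the cloudy filenames and reused for
    the clear folder, so each pair lands in the same split.
    """
    root = Path(root)
    names = sorted(p.name for p in (root / "cloudy").iterdir() if p.is_file())
    assignment = assign_splits(names, seed=seed)
    for side in ("cloudy", "clear"):
        for name, split in assignment.items():
            src = root / side / name
            if not src.exists():
                continue  # no partner with this name; left in place
            dest = root / side / split
            dest.mkdir(exist_ok=True)
            shutil.move(str(src), str(dest / name))
    return assignment
```

Calling `split_pairs("/path/to/data")` would then leave `cloudy/train`, `cloudy/val`, `cloudy/test` and the matching `clear/` subfolders in place.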

Once the data is formatted this way, call:

python datasets/combine_A_and_B.py --fold_A /path/to/data/cloudy --fold_B /path/to/data/clear --fold_AB /path/to/data/combined

This will combine each pair of images (cloudy, clear) into a single image file, ready for training.
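Since the combine step relies on every cloudy image having a clear partner in the same split, it can help to check the folders first. The sketch below is an optional sanity check, not part of this repo. It assumes the layout described above (`cloudy/<split>` and `clear/<split>` under one data root) and that paired images share a filename. The function name `unpaired_files` is made up for illustration.

```python
from pathlib import Path


def _names(folder):
    """Filenames directly inside folder, or an empty set if it does not exist."""
    if not folder.is_dir():
        return set()
    return {p.name for p in folder.iterdir() if p.is_file()}


def unpaired_files(root, splits=("train", "val", "test")):
    """Return {split: (only_in_cloudy, only_in_clear)} for splits that mismatch."""
    root = Path(root)
    problems = {}
    for split in splits:
        cloudy = _names(root / "cloudy" / split)
        clear = _names(root / "clear" / split)
        if cloudy != clear:
            problems[split] = (sorted(cloudy - clear), sorted(clear - cloudy))
    return problems
```

An empty dictionary from `unpaired_files("/path/to/data")` means every split has matching filenames on both sides.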

- Train a model:

python train.py --dataroot ./path/to/data/combined --name stgan --model temporal_branched_ir --netG unet_256_independent --input_nc 4

Options include temporal_branched and temporal_branched_ir for "model" (depending on whether infrared data is used), 3 or 4 for "input_nc" (again depending on whether infrared data is used), unet_256_independent and independent_resnet_9blocks for "netG", and further options such as "batch_size", "preprocess", and "norm". See train.py for detailed training options.

- Our best models often used a larger batch_size and norm=batch. Both options for netG worked well, with the resnet generator taking longer to train than the unet but sometimes providing improvements in accuracy.

- To view training results and loss plots, run

python -m visdom.server

and click the URL http://localhost:8097.

- Test the model (bash ./scripts/test_pix2pix.sh):

python test.py --dataroot ./path/to/data/combined --name stgan --model temporal_branched_ir --netG unet_256_independent --input_nc 4

- The test results will be saved to an HTML file here: ./results/stgan/test_latest/index.html.
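The train and test commands above share the same options, and the "model" and "input_nc" values both depend on whether infrared data is used. A small helper can keep them consistent. This is only a sketch: `build_command` is a hypothetical name, the pairing of infrared data with temporal_branched_ir and 4 input channels is inferred from the example commands above, and passing further options as `--<name> <value>` is an assumption. The batch size in the usage example is an arbitrary illustrative value.

```python
import shlex

# Generator choices named in this readme.
GENERATORS = ("unet_256_independent", "independent_resnet_9blocks")


def build_command(script, dataroot, name="stgan", use_infrared=True,
                  netG="unet_256_independent", extra=None):
    """Return the argument list for train.py or test.py."""
    if script not in ("train.py", "test.py"):
        raise ValueError(f"unexpected script: {script}")
    if netG not in GENERATORS:
        raise ValueError(f"unknown netG: {netG}")
    args = [
        "python", script,
        "--dataroot", dataroot,
        "--name", name,
        # Infrared data is assumed to use the "_ir" model with 4 channels.
        "--model", "temporal_branched_ir" if use_infrared else "temporal_branched",
        "--netG", netG,
        "--input_nc", "4" if use_infrared else "3",
    ]
    # Assumption: further options are passed as --<name> <value>.
    for key, value in (extra or {}).items():
        args += [f"--{key}", str(value)]
    return args


if __name__ == "__main__":
    # Illustrative values only; 8 is not a recommended batch size.
    cmd = build_command("train.py", "./path/to/data/combined",
                        extra={"batch_size": 8, "norm": "batch"})
    print(shlex.join(cmd))
```

The returned list can be passed directly to `subprocess.run`, or joined with `shlex.join` to paste into a shell.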

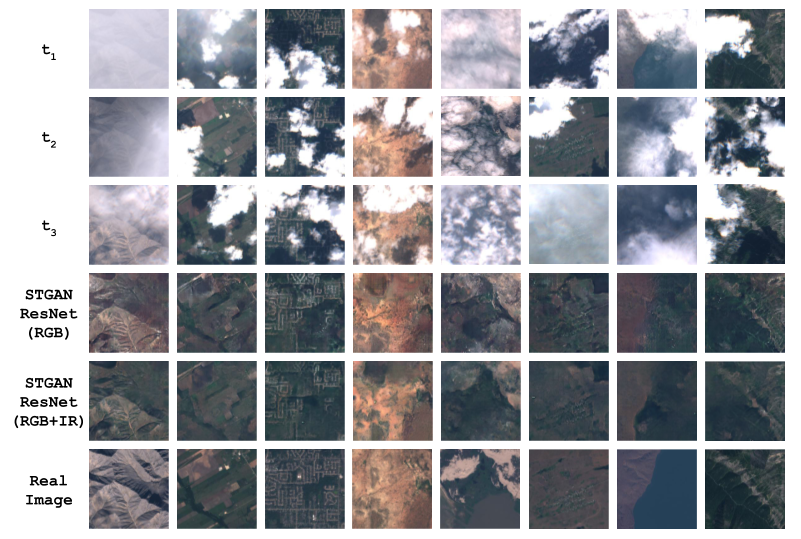

Some examples of cloud removal with STGAN can be seen in the figure below.

@article{sarukkai2019cloud,

title={Cloud Removal in Satellite Images Using Spatiotemporal Generative Networks},

author={Sarukkai, Vishnu and Jain, Anirudh and Uzkent, Burak and Ermon, Stefano},

journal={arXiv preprint arXiv:1912.06838},

year={2019}

}