Our paper examines whether state-of-the-art bias mitigation methods perform well in more realistic settings: with multiple sources of bias, with hidden biases, and without access to test distributions. This repository contains implementations and re-implementations of seven popular techniques.

```bash
conda create -n bias_mitigator python=3.7
source activate bias_mitigator
conda install pytorch==1.4.0 torchvision==0.5.0 cudatoolkit=10.1 -c pytorch
conda install tqdm opencv pandas
```

- Edit the `ROOT` variable in `common.sh`. This directory will contain the datasets and the experimental results.

- For each dataset, we evaluate on the train, val, and test splits. Each dataset file contains a function that creates dataloaders for all of these splits.
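As a dependency-free illustration of the per-split loader pattern (the repository itself uses PyTorch dataloaders), here is a minimal sketch. The names `SimpleLoader` and `create_dataloaders` are hypothetical, and shuffling only the train split is a common convention assumed here, not something this README specifies.

```python
import random
from typing import Dict, Iterator, List, Sequence, Tuple

Sample = Tuple[int, int]  # hypothetical (input, label) pair


class SimpleLoader:
    """Minimal stand-in for a PyTorch DataLoader: yields lists of samples."""

    def __init__(self, samples: Sequence[Sample], batch_size: int, shuffle: bool, seed: int = 0):
        self.samples = list(samples)
        self.batch_size = batch_size
        self.shuffle = shuffle
        self.rng = random.Random(seed)

    def __iter__(self) -> Iterator[List[Sample]]:
        order = list(range(len(self.samples)))
        if self.shuffle:
            self.rng.shuffle(order)  # new order on every pass, as for training
        for start in range(0, len(order), self.batch_size):
            yield [self.samples[i] for i in order[start:start + self.batch_size]]

    def __len__(self) -> int:
        # Number of batches, counting a final partial batch.
        return (len(self.samples) + self.batch_size - 1) // self.batch_size


def create_dataloaders(splits: Dict[str, Sequence[Sample]], batch_size: int) -> Dict[str, SimpleLoader]:
    """Build one loader per split (train/val/test); only 'train' is shuffled."""
    return {
        name: SimpleLoader(samples, batch_size, shuffle=(name == "train"))
        for name, samples in splits.items()
    }


if __name__ == "__main__":
    toy = {
        "train": [(i, i % 2) for i in range(10)],
        "val": [(i, i % 2) for i in range(4)],
        "test": [(i, i % 2) for i in range(5)],
    }
    for split, loader in create_dataloaders(toy, batch_size=4).items():
        print(split, len(loader))
```

Keeping evaluation splits unshuffled makes per-sample predictions line up across runs, which helps when comparing methods.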

Since the publication, we created BiasedMNISTv2, a more challenging version of the dataset. Version 2 features larger images, spuriously correlated digit scales, distracting letters in place of simple geometric shapes, and updated background textures.
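To make "spuriously correlated digit scales" concrete, the sketch below assumes a scheme in which each class has an associated bias value (here, a scale) that is used with probability `p_bias` and otherwise replaced by a different value chosen uniformly. The function names and the `SCALES` table are hypothetical, and the actual v2 generation procedure may differ.

```python
import random
from typing import List


def sample_bias_value(label: int, p_bias: float, num_values: int, rng: random.Random) -> int:
    """With probability p_bias return the value tied to `label`, else a different value uniformly."""
    aligned = label % num_values
    if rng.random() < p_bias:
        return aligned  # bias-aligned (majority) sample
    others = [v for v in range(num_values) if v != aligned]
    return rng.choice(others)  # bias-conflicting (minority) sample


# Hypothetical per-class digit scales: class k is associated with SCALES[k].
SCALES: List[float] = [0.5 + 0.05 * k for k in range(10)]


def sample_scale(label: int, p_bias: float, rng: random.Random) -> float:
    """Pick a digit scale that is spuriously correlated with the label."""
    return SCALES[sample_bias_value(label, p_bias, len(SCALES), rng)]


if __name__ == "__main__":
    rng = random.Random(0)
    labels = [rng.randrange(10) for _ in range(10000)]
    aligned = sum(sample_bias_value(y, 0.95, 10, rng) == y for y in labels)
    # The aligned fraction should be close to p_bias.
    print(f"fraction of bias-aligned samples: {aligned / len(labels):.3f}")
```

With several such factors sampled independently, a model can reach high training accuracy by relying on any one of them instead of the digit shape.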

We encourage the community to use BiasedMNISTv2.

You can download BiasedMNISTv1 (WACV 2021) from here.

Both BiasedMNISTv1 and v2 are released under the Creative Commons Attribution 4.0 International (CC BY 4.0) license.

- Download the CelebA dataset from here and extract the data to `${ROOT}`.
- We adapted the data loader from https://github.com/kohpangwei/group_DRO.
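In group_DRO-style loaders, each sample belongs to a group formed by combining its target label with its spurious attribute. The sketch below illustrates that indexing in plain Python with hypothetical helpers `group_index` and `group_counts`; it is not the adapted loader itself, and treating blond hair as the target and "male" as the binary bias attribute is an assumption for illustration.

```python
from collections import Counter
from typing import Sequence


def group_index(y: int, bias: int, n_bias_values: int = 2) -> int:
    """Map a (target, bias) pair to one group id: y * n_bias_values + bias."""
    return y * n_bias_values + bias


def group_counts(ys: Sequence[int], biases: Sequence[int], n_bias_values: int = 2) -> Counter:
    """Count how many samples fall into each (target, bias) group."""
    return Counter(group_index(y, b, n_bias_values) for y, b in zip(ys, biases))


if __name__ == "__main__":
    # Toy labels: y = 1 for blond hair, bias = 1 for male (hypothetical values).
    ys = [0, 0, 0, 1, 1, 0, 1, 0]
    males = [1, 0, 1, 0, 0, 0, 1, 1]
    print(sorted(group_counts(ys, males).items()))  # [(0, 2), (1, 3), (2, 2), (3, 1)]
```

Here group 3 (blond, male) is the smallest, which is the kind of minority group that per-group evaluation is meant to expose.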

- Download GQA (object features, spatial features and questions) from here.
- Build GQA-OOD by following these instructions.
- Download the embeddings: `python -m spacy download en_vectors_web_lg`
- Preprocess the visual/spatial features: `./scripts/gqa-ood/preprocess_gqa.sh`

We provide a separate bash file for running each method on each dataset in the `scripts` directory. Here is a sample script:

```bash
source activate bias_mitigator

# Assumes ROOT is already set (see common.sh).
TRAINER_NAME='BaseTrainer'
lr=1e-3
wd=0

python main.py \
  --expt_type celebA_experiments \
  --trainer_name ${TRAINER_NAME} \
  --lr ${lr} \
  --weight_decay ${wd} \
  --expt_name ${TRAINER_NAME} \
  --root_dir ${ROOT}
```

- If you want to add more methods, simply follow one of the implementations inside the `trainers` directory.
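The sample script picks a method through `--trainer_name`. The following is a hypothetical sketch of how a name-to-class registry and a new trainer subclass could fit together; `BaseTrainer.compute_loss`, `GroupBalancedTrainer`, `TRAINERS`, `parse_args`, and `build_trainer` are illustrative names, not the repository's actual API. The flags mirror the sample script, while the real `main.py` likely defines many more. The example method averages losses within each group and then across groups, a simple group-balancing scheme chosen only for illustration.

```python
import argparse
from typing import Dict, List, Optional, Type


class BaseTrainer:
    """Hypothetical base class: plain empirical risk minimization (mean loss)."""

    def __init__(self, args: argparse.Namespace):
        self.args = args

    def compute_loss(self, per_sample_losses: List[float], groups: List[int]) -> float:
        return sum(per_sample_losses) / len(per_sample_losses)


class GroupBalancedTrainer(BaseTrainer):
    """Hypothetical new method: mean loss per group, then mean over groups."""

    def compute_loss(self, per_sample_losses: List[float], groups: List[int]) -> float:
        by_group: Dict[int, List[float]] = {}
        for loss, g in zip(per_sample_losses, groups):
            by_group.setdefault(g, []).append(loss)
        group_means = [sum(v) / len(v) for v in by_group.values()]
        return sum(group_means) / len(group_means)


# Hypothetical registry mapping --trainer_name values to trainer classes.
TRAINERS: Dict[str, Type[BaseTrainer]] = {
    "BaseTrainer": BaseTrainer,
    "GroupBalancedTrainer": GroupBalancedTrainer,
}


def parse_args(argv: Optional[List[str]] = None) -> argparse.Namespace:
    # Only the flags used in the sample script.
    parser = argparse.ArgumentParser()
    parser.add_argument("--expt_type", required=True)
    parser.add_argument("--trainer_name", default="BaseTrainer", choices=sorted(TRAINERS))
    parser.add_argument("--lr", type=float, default=1e-3)
    parser.add_argument("--weight_decay", type=float, default=0.0)
    parser.add_argument("--expt_name", default=None)
    parser.add_argument("--root_dir", required=True)
    return parser.parse_args(argv)


def build_trainer(args: argparse.Namespace) -> BaseTrainer:
    """Instantiate the trainer class registered under args.trainer_name."""
    return TRAINERS[args.trainer_name](args)


if __name__ == "__main__":
    args = parse_args(["--expt_type", "celebA_experiments",
                       "--trainer_name", "GroupBalancedTrainer",
                       "--root_dir", "/tmp/data"])
    trainer = build_trainer(args)
    # Group means are 1.0 and 4.0, so the balanced loss is 2.5 (plain mean would be 1.75).
    print(trainer.compute_loss([1.0, 1.0, 1.0, 4.0], [0, 0, 0, 1]))
```

With a registry like this, adding a method means writing one subclass and one dictionary entry, and the bash scripts only change the `TRAINER_NAME` value.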

-

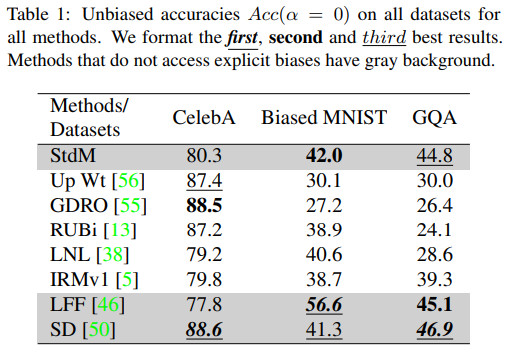

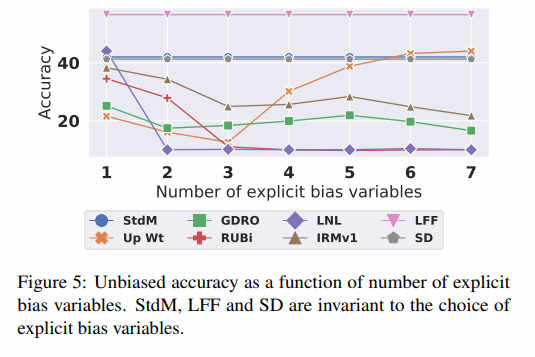

Overall, methods fail when datasets contain multiple sources of bias, even if they excel on smaller settings with one or two sources of bias (e.g., CelebA).

-

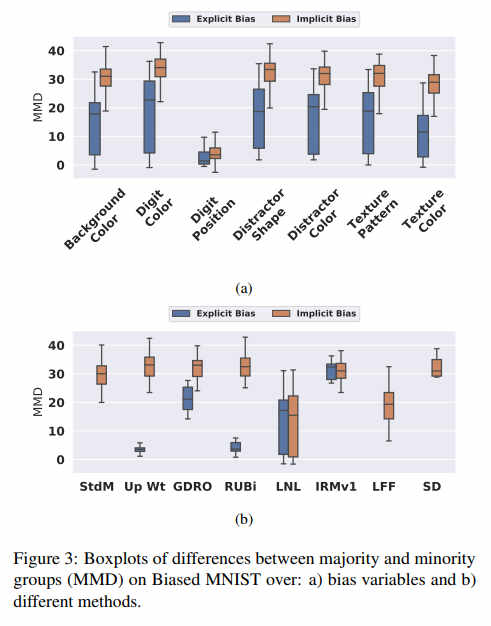

Methods can exploit both implicit (hidden) and explicit biases.

-

Methods cannot handle multiple sources of bias even when they are explicitly labeled.

-

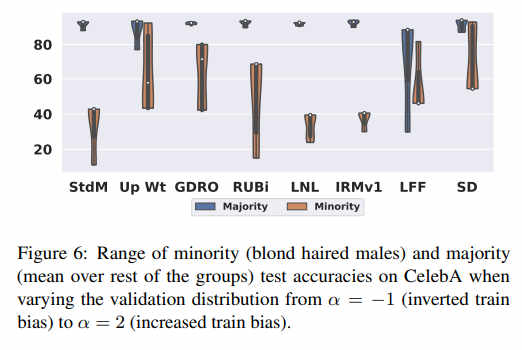

Most methods show high sensitivity to the tuning distribution especially for minority groups
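Because minority groups are small, overall accuracy can mask poor minority-group performance. The sketch below shows one way to report per-group, worst-group, mean-per-group, and minority-group accuracy; the function names and the choice of summary statistics are illustrative assumptions, and the paper's exact evaluation protocol may differ.

```python
from collections import defaultdict
from typing import Dict, Sequence, Set


def per_group_accuracy(preds: Sequence[int], labels: Sequence[int], groups: Sequence[int]) -> Dict[int, float]:
    """Accuracy computed separately within each group id."""
    correct: Dict[int, int] = defaultdict(int)
    total: Dict[int, int] = defaultdict(int)
    for p, y, g in zip(preds, labels, groups):
        total[g] += 1
        correct[g] += int(p == y)
    return {g: correct[g] / total[g] for g in total}


def summarize(preds: Sequence[int], labels: Sequence[int], groups: Sequence[int],
              minority_groups: Set[int]) -> Dict[str, float]:
    """Overall accuracy alongside group-aware summaries."""
    accs = per_group_accuracy(preds, labels, groups)
    minority = [acc for g, acc in accs.items() if g in minority_groups]
    return {
        "overall": sum(int(p == y) for p, y in zip(preds, labels)) / len(labels),
        "mean_per_group": sum(accs.values()) / len(accs),
        "worst_group": min(accs.values()),
        "minority_mean": sum(minority) / len(minority) if minority else float("nan"),
    }


if __name__ == "__main__":
    # Toy data: group 0 is the majority (6 samples), group 1 the minority (2 samples).
    preds = [0, 0, 0, 1, 1, 1, 1, 1]
    labels = [0, 0, 0, 1, 1, 1, 0, 1]
    groups = [0, 0, 0, 0, 0, 0, 1, 1]
    print(summarize(preds, labels, groups, minority_groups={1}))
```

In this toy example overall accuracy is 0.875 while minority-group accuracy is 0.5, so choosing hyperparameters on overall accuracy from a majority-dominated tuning set could overlook exactly the groups where methods differ most.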

```bibtex
@article{shrestha2021investigation,
  title={An investigation of critical issues in bias mitigation techniques},
  author={Shrestha, Robik and Kafle, Kushal and Kanan, Christopher},
  journal={Workshop on Applications of Computer Vision},
  year={2021}
}
```

This work was supported in part by the DARPA/SRI Lifelong Learning Machines program [HR0011-18-C-0051], AFOSR grant [FA9550-18-1-0121], and NSF award #1909696.