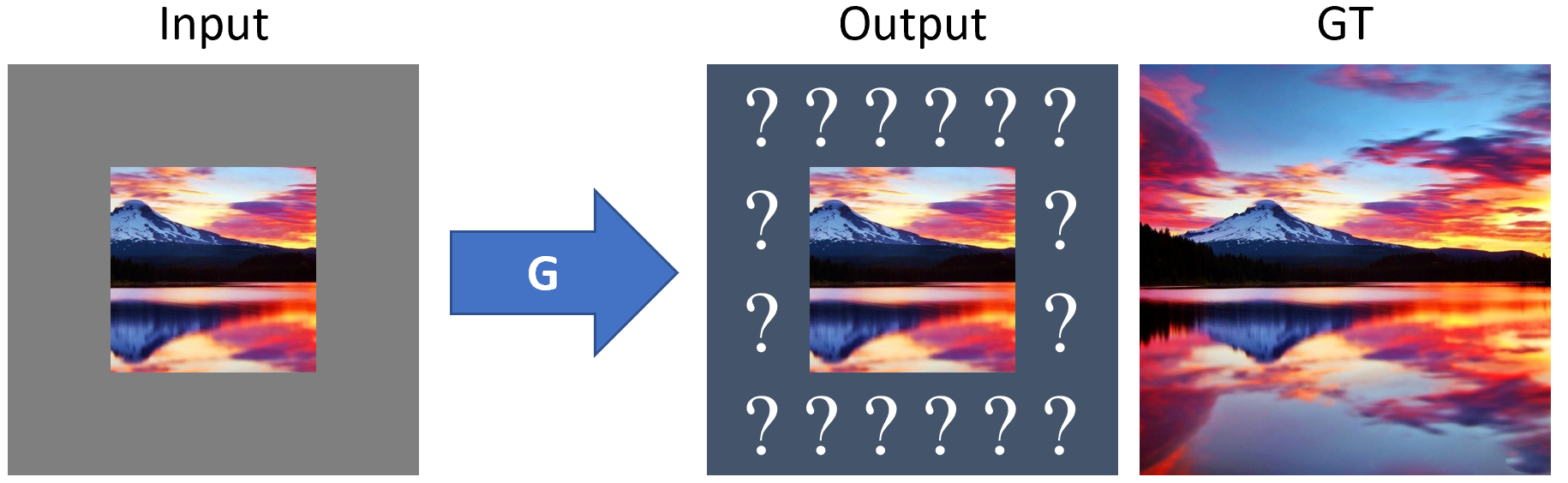

This is some example code for my paper on Image Outpainting and Harmonization using Generative Adversarial Networks, which was written for the class Deep Learning for Computer Vision, taught by Prof. Peter Belhumeur at Columbia University. Essentially, we extrapolate 128x128 RGB images into a 192x192 output by attempting to draw a reasonable hallucination of what could reside beyond every border of the input photo. The architecture is inspired by Context Encoders: Feature Learning by Inpainting. Part of my work explores SinGAN for style harmonization, but this post-processing step was done mostly manually, so it is not included in this repository for now.
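
To make the geometry concrete: if the 128x128 input sits in the center of the 192x192 output, the network has to invent a 32-pixel band on every side, which is 5/9 of all output pixels. The sketch below computes that band and a binary mask of the region to generate. The names `outpaint_border` and `border_mask` are hypothetical helpers written for illustration, not functions from this repository, and centering the input is an assumption.

```python
def outpaint_border(in_size: int = 128, out_size: int = 192) -> int:
    """Width in pixels of the band to generate on each side (assumes a centered input)."""
    extra = out_size - in_size
    if extra < 0 or extra % 2:
        raise ValueError("out_size must be >= in_size and differ from it by an even amount")
    return extra // 2


def border_mask(in_size: int = 128, out_size: int = 192) -> list[list[int]]:
    """Return an out_size x out_size grid: 1 = pixel to generate, 0 = known input pixel."""
    b = outpaint_border(in_size, out_size)
    known = range(b, b + in_size)  # rows/columns covered by the input image
    return [
        [0 if (y in known and x in known) else 1 for x in range(out_size)]
        for y in range(out_size)
    ]


border = outpaint_border()
mask = border_mask()
generated = sum(map(sum, mask))  # number of pixels the generator must invent
print(border, generated, generated / (192 * 192))
```

In a real pipeline the same mask would typically be built as a tensor and fed to the generator alongside the padded image; the nested-list version here only illustrates the layout.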

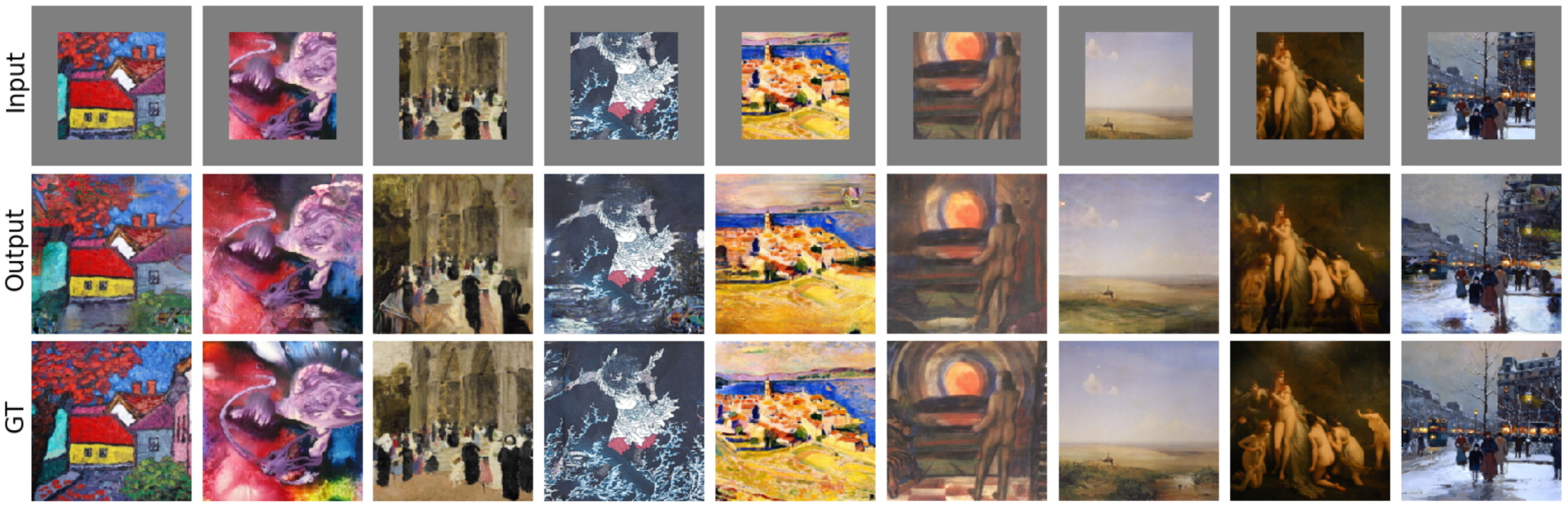

Both figures shown here were generated from images in either the test or the validation set.

Natural photography:

Artwork:

- Prepare a dataset split into the following folders: `train`, `val`, and `test`. I used MIT Places365-Standard, which can be downloaded here: train, val, test. Another section of my work uses an artwork dataset scraped from WikiArt; I might post a link here later.

- Edit and run `train.py`.

- Run `forward.py input.jpg output.jpg` to evaluate custom images. This script uses `generator_final.pt` to load network weights and accepts arbitrary image resolutions.

- The adversarial loss weight follows a specific schedule in order to prevent the generator from collapsing to a simple autoencoder, i.e. a pure reconstruction loss minimizer. However, weighting this term too heavily produces sharper but noticeably glitchy visuals, so there is room for improvement in finding a better balance.
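
The exact schedule is defined in the training code and is not reproduced here. As a rough illustration of the idea only, the sketch below keeps the adversarial weight at zero for a few epochs and then ramps it up linearly to a cap. Every name and number in it (`adversarial_weight`, `generator_loss`, `warmup_epochs`, `ramp_epochs`, `max_weight`) is a hypothetical placeholder, not a value taken from `train.py`.

```python
def adversarial_weight(
    epoch: int,
    warmup_epochs: int = 10,   # hypothetical: epochs trained on reconstruction loss only
    ramp_epochs: int = 20,     # hypothetical: epochs over which the weight grows linearly
    max_weight: float = 0.01,  # hypothetical: cap on the adversarial term
) -> float:
    """Weight of the adversarial loss at a given epoch (illustrative schedule)."""
    if epoch < warmup_epochs:
        return 0.0
    progress = min(1.0, (epoch - warmup_epochs) / ramp_epochs)
    return max_weight * progress


def generator_loss(rec_loss: float, adv_loss: float, epoch: int) -> float:
    """Combine reconstruction and adversarial losses using the scheduled weight."""
    return rec_loss + adversarial_weight(epoch) * adv_loss


for epoch in (0, 10, 20, 30, 40):
    print(epoch, adversarial_weight(epoch))
```

Raising `max_weight` in a schedule like this is the knob that trades smooth, blurry outputs for sharper but more artifact-prone ones.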

Enjoy!