Work with local large language models through Ollama: Llama as well as other models that Ollama supports, such as Mistral or Phi (https://ollama.com/library)

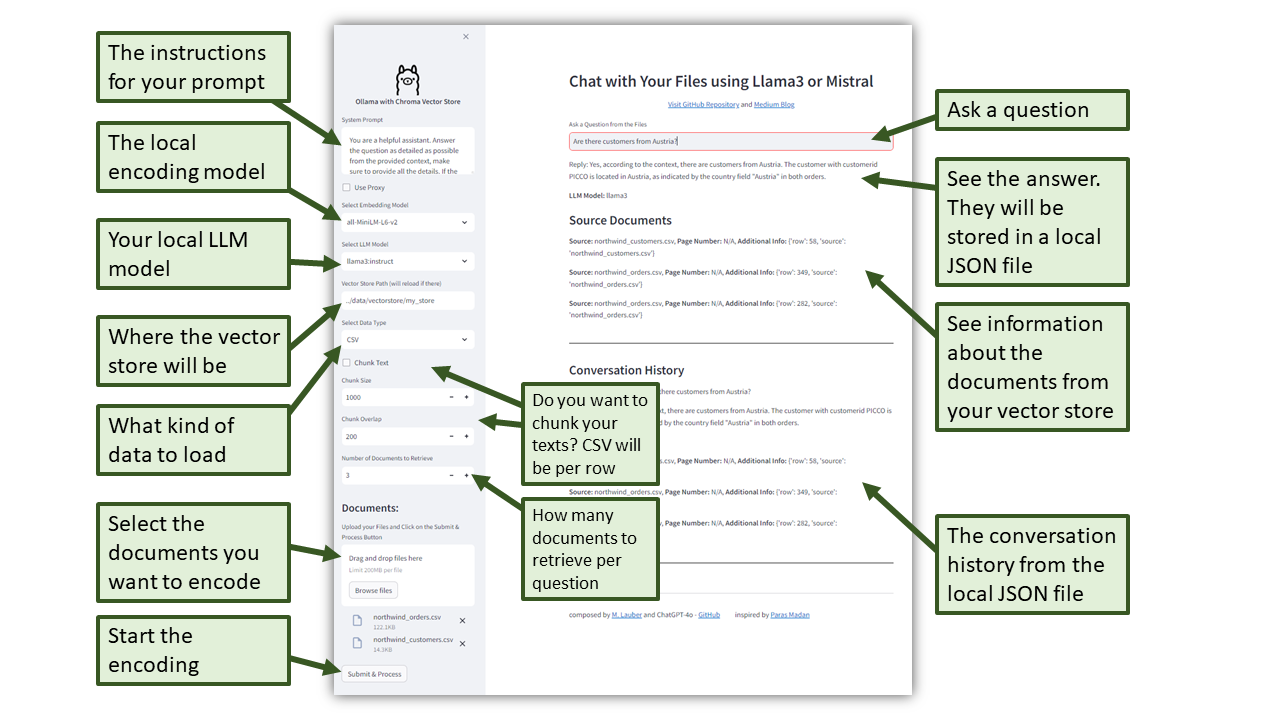

Ollama and Llama3 — A Streamlit App to convert your files into local Vector Stores and chat with them using the latest LLMs

https://medium.com/p/c5340fcd6ad0

https://github.com/ml-score/ollama/tree/main/script

https://medium.com/p/237eda761c1c

https://medium.com/p/aca61e4a690a

https://medium.com/p/311bf61dd20e

https://medium.com/p/cef650fc142b

In the subfolder /notebooks/ you will find sample code for working with local large language models and your own files:

- Ollama - Chat with your Logs.ipynb

- Ollama - Chat with your PDF.ipynb

- Ollama - Chat with your Unstructured CSVs.ipynb

- Ollama - Chat with your Unstructured Log Files.ipynb

- Ollama - Chat with your Unstructured Text Files.ipynb
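The general idea behind "chat with your files" can be sketched in a few lines. The example below is not the code from the notebooks; it is a minimal illustration that talks to Ollama's local REST API directly, assuming Ollama runs on its default address `http://localhost:11434` and that the models `llama3` and `nomic-embed-text` have been pulled. The helper names (`chunk_text`, `top_chunks`, `build_chat_payload`, `answer_from_file`) and the chunk sizes are made up for illustration.

```python
import json
import math
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434"


def chunk_text(text, size=500, overlap=50):
    """Split text into overlapping character chunks."""
    if size <= overlap:
        raise ValueError("size must be larger than overlap")
    step = size - overlap
    return [text[i:i + size] for i in range(0, len(text), step)]


def cosine(a, b):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_chunks(query_vec, chunk_vecs, chunks, k=3):
    """Return the k chunks whose vectors are most similar to the query vector."""
    ranked = sorted(zip(chunk_vecs, chunks),
                    key=lambda pair: cosine(query_vec, pair[0]),
                    reverse=True)
    return [chunk for _, chunk in ranked[:k]]


def build_chat_payload(question, context_chunks, model="llama3"):
    """Build the JSON body for Ollama's /api/chat endpoint."""
    context = "\n---\n".join(context_chunks)
    prompt = f"Answer using only this context:\n{context}\n\nQuestion: {question}"
    return {"model": model, "stream": False,
            "messages": [{"role": "user", "content": prompt}]}


def post_json(path, payload):
    """POST a JSON payload to the local Ollama server and decode the JSON reply."""
    req = urllib.request.Request(OLLAMA_URL + path,
                                 data=json.dumps(payload).encode("utf-8"),
                                 headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read().decode("utf-8"))


def embed(text, model="nomic-embed-text"):
    """Get an embedding vector; the embedding model name is an assumption."""
    return post_json("/api/embeddings", {"model": model, "prompt": text})["embedding"]


def answer_from_file(path, question):
    """Chunk a text file, retrieve the most relevant chunks, and ask the model."""
    with open(path, encoding="utf-8") as f:
        chunks = chunk_text(f.read())
    chunk_vecs = [embed(c) for c in chunks]
    context = top_chunks(embed(question), chunk_vecs, chunks)
    reply = post_json("/api/chat", build_chat_payload(question, context))
    return reply["message"]["content"]
```

With Ollama running, a call such as `answer_from_file("server.log", "Which errors occur most often?")` would return the model's answer as a string (`server.log` is a placeholder file name). A real setup would typically persist the embeddings in a vector store instead of recomputing them on every call.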

You can find an example of how to use this code within KNIME to chat with or process your files in this KNIME workflow (it is probably best to download the whole workflow group):

https://hub.knime.com/-/spaces/-/~5s39Yth4NbkUIj0q/current-state/

More articles that might be interesting: