This ResNet takes about 3 hours to train on a modern CPU and reaches 63% accuracy on the CIFAR-100 coarse-label task (20 superclasses).

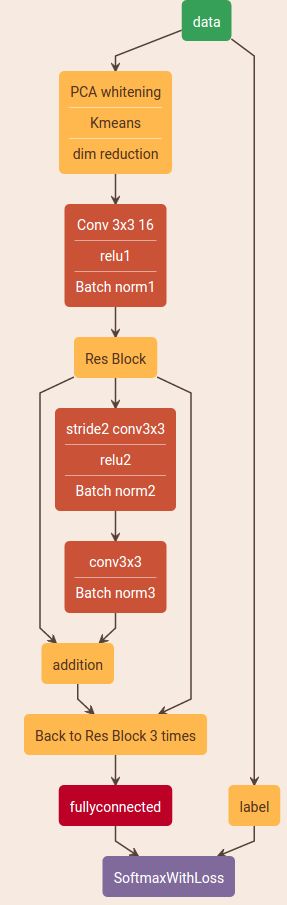

This residual neural network differs from the original paper in three ways:

- It has only 13 layers, a depth the original paper did not study.

- There is no ReLU before the subsampling convolutional layer, which improves accuracy by 3%.

- Batch normalization is applied before the residual addition, which improves accuracy slightly.
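To make the two block-level changes concrete, here is a minimal tf.keras sketch of a basic two-convolution residual block. It is an interpretation, not this repo's code: it reads "no ReLU before the subsampling convolutional layer" as dropping the post-addition ReLU of the block that feeds a stride-2 block, and it assumes 1×1 projection shortcuts. The helper names `residual_block` and `toy_model`, and all layer widths, are hypothetical; this toy stack is not the repo's ResNet-13.

```python
import numpy as np
import tensorflow as tf
from tensorflow.keras import layers


def residual_block(x, filters, downsample=False, final_relu=True):
    """Basic two-conv residual block (hypothetical sketch, not the repo's code)."""
    stride = 2 if downsample else 1
    shortcut = x

    y = layers.Conv2D(filters, 3, strides=stride, padding="same", use_bias=False)(x)
    y = layers.BatchNormalization()(y)
    y = layers.ReLU()(y)
    y = layers.Conv2D(filters, 3, padding="same", use_bias=False)(y)
    # Batch normalization on the residual branch is applied *before* the addition.
    y = layers.BatchNormalization()(y)

    if downsample or x.shape[-1] != filters:
        # Assumed 1x1 projection so the shortcut matches the branch's shape.
        shortcut = layers.Conv2D(filters, 1, strides=stride, use_bias=False)(x)
        shortcut = layers.BatchNormalization()(shortcut)

    out = layers.Add()([y, shortcut])
    # Assumption: the trailing ReLU is skipped when the next block subsamples.
    if final_relu:
        out = layers.ReLU()(out)
    return out


def toy_model(num_classes=20):
    """Tiny hypothetical stack showing how the blocks chain together."""
    inputs = tf.keras.Input(shape=(32, 32, 3))
    x = layers.Conv2D(16, 3, padding="same", use_bias=False)(inputs)
    x = layers.BatchNormalization()(x)
    x = layers.ReLU()(x)
    # The next block is a stride-2 (subsampling) block, so no ReLU here.
    x = residual_block(x, 16, final_relu=False)
    x = residual_block(x, 32, downsample=True)
    x = layers.GlobalAveragePooling2D()(x)
    outputs = layers.Dense(num_classes)(x)  # 20 coarse-label logits
    return tf.keras.Model(inputs, outputs)


model = toy_model()
logits = model(np.zeros((2, 32, 32, 3), dtype="float32"))
```

Because `final_relu` is a per-block argument, the caller decides where the ReLU is dropped; in this toy stack only the block in front of the stride-2 block omits it.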

ResNet-13 cannot beat deeper residual nets (18, 34, 150 or 1000 layers) in the long run. Interestingly, however, it is more efficient when training time is limited.

Details are shown below; the architecture is shown at the bottom.

Accuracy after 3 hours of training on a CPU:

| Model | CIFAR-10 accuracy | CIFAR-100 accuracy |
|---|---|---|
| AlexNet | 82% | - |
| Mimic Learning | - | 50% |
| 2-layer NN with PCA and k-means | 78% | 56% |
| ResNet-13 (this repo) | 84% | 63% |

Usage: `python redo.py /path/to/CIFAR100python/`
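`redo.py` takes the directory of the python version of CIFAR-100. As a sketch only (the repo's own loader is not shown here), the coarse labels can be read from the `train` and `test` pickle files of the official python distribution, which store a `data` array of 3072-byte rows (the red, green and blue planes of a 32×32 image, in that order) alongside `fine_labels` and `coarse_labels` lists. The function name `load_cifar100_coarse` is hypothetical.

```python
import os
import pickle

import numpy as np


def load_cifar100_coarse(root, split="train"):
    """Hypothetical loader: images as (N, 32, 32, 3) uint8 and 20-class coarse labels."""
    with open(os.path.join(root, split), "rb") as f:
        # The official files were pickled under Python 2, hence encoding="bytes";
        # dictionary keys therefore come back as bytes (b"data", b"coarse_labels").
        batch = pickle.load(f, encoding="bytes")
    # Each row holds 1024 red, then 1024 green, then 1024 blue values (row-major 32x32).
    images = batch[b"data"].reshape(-1, 3, 32, 32).transpose(0, 2, 3, 1)
    labels = np.array(batch[b"coarse_labels"], dtype=np.int64)  # values 0..19
    return images, labels
```

Using the coarse labels rather than the fine ones is what gives the 20-way output described below.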

- The output layer has 20 units, one per coarse label.

- Implemented in TensorFlow.

Hyperparameters and design choices:

- number of filters

- iterations

- learning rate

- batch size

- regularization strength

- number of layers

- optimization methods

- dropout

- initialization:

  - LSUV initialization

  - Kaiming He initialization

- number of hidden neurons

- filter size of convolutional layers

- window size of pooling layers
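Two of the initialization options listed can be sketched in NumPy. Kaiming He initialization draws weights from a zero-mean Gaussian with variance 2/fan_in. LSUV (layer-sequential unit-variance) initialization starts from orthogonal weights and then, layer by layer, rescales each weight matrix until that layer's output on a mini-batch has unit variance. This is a simplified sketch on bias-free dense layers with ReLU in between (LSUV as published also covers convolutional layers). The function names, layer sizes, and the `tol` and `max_iter` values are assumptions, not taken from this repo.

```python
import numpy as np


def he_normal(fan_in, fan_out, rng):
    """Kaiming He init: zero-mean Gaussian with variance 2 / fan_in (suited to ReLU)."""
    return rng.normal(0.0, np.sqrt(2.0 / fan_in), size=(fan_in, fan_out))


def orthogonal(fan_in, fan_out, rng):
    """Random (semi-)orthogonal matrix of shape (fan_in, fan_out), via QR."""
    a = rng.normal(size=(max(fan_in, fan_out), min(fan_in, fan_out)))
    q, r = np.linalg.qr(a)
    q *= np.sign(np.diag(r))  # fix column signs so the result is uniformly distributed
    return q if fan_in >= fan_out else q.T


def preactivation(x, weights, layer):
    """Output of dense layer `layer` before its ReLU (ReLU between layers, no biases)."""
    h = x
    for w in weights[:layer]:
        h = np.maximum(h @ w, 0.0)
    return h @ weights[layer]


def lsuv(weights, x, tol=0.1, max_iter=10):
    """Rescale each layer in order until its output variance on `x` is close to 1."""
    for i in range(len(weights)):
        for _ in range(max_iter):
            var = preactivation(x, weights, i).var()
            if abs(var - 1.0) < tol:
                break
            weights[i] = weights[i] / np.sqrt(var)
    return weights


rng = np.random.default_rng(0)
sizes = [64, 128, 128, 20]  # hypothetical layer widths, 20 outputs
x = rng.normal(size=(256, sizes[0]))  # stand-in for a mini-batch of inputs
weights = lsuv([orthogonal(a, b, rng) for a, b in zip(sizes[:-1], sizes[1:])], x)
he = he_normal(256, 512, rng)  # alternative: He init, no data-driven rescaling
```

With bias-free linear layers a single rescaling brings each layer to unit variance, so the inner loop usually exits after one correction; the loop only matters when a layer's output variance does not scale exactly with its weights, for example when biases are present.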