Note: This is part of the Enabling Hadoop Migrations to Azure reference implementation. For more information, check out the [readme file in the root](https://github.com/Azure/Hadoop-Migrations/blob/main/README.md).

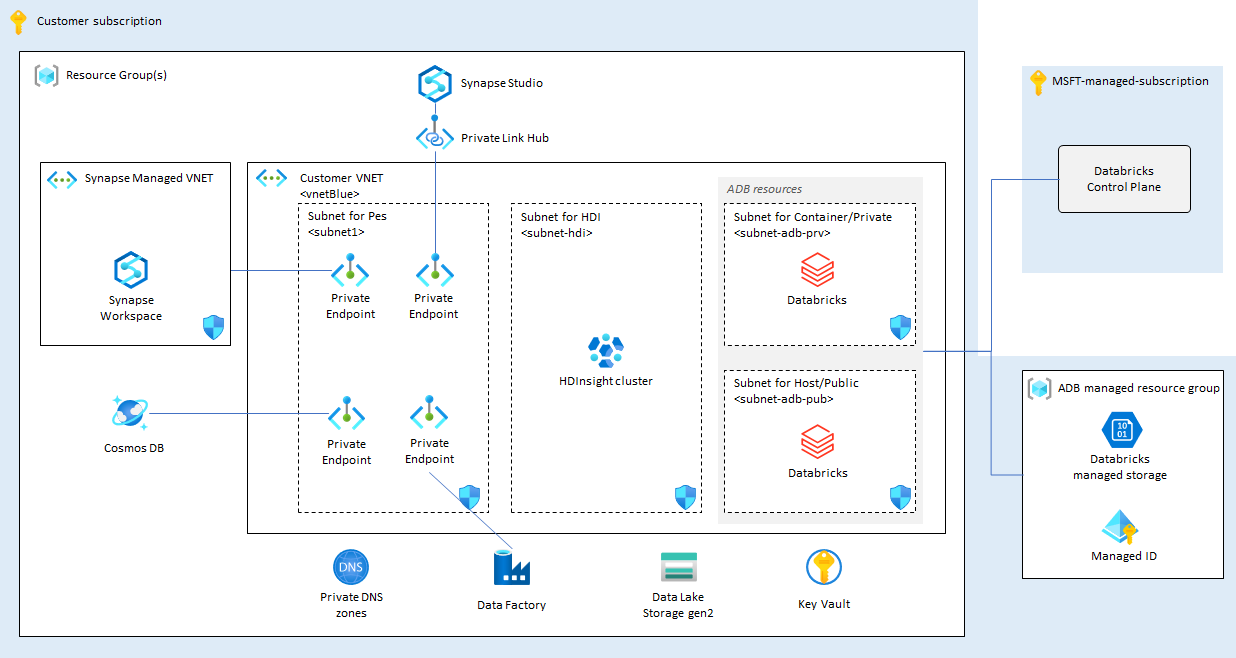

One of the challenges in migrating workloads from on-premises Hadoop to Azure is producing a deployment that aligns with both the desired end-state architecture and the application. With this Bicep project, we aim to reduce the significant effort that goes into deploying the PaaS services on Azure and getting a production-ready architecture up and running.

We will look at the end-state architecture for big data workloads on Azure PaaS and list all the components deployed as part of the Bicep template deployment. Bicep also gives us the advantage of deploying only the modules we want, for a customized architecture. Later sections cover the prerequisites for the template and the different methods of deploying the resources on Azure: one-click, Azure CLI, GitHub Actions, and Azure DevOps Pipelines.

By default, all the services in the reference architecture are enabled. You must explicitly disable the services you don't want to deploy, either through the parameters prompted on the ARM deployment screen in the Azure portal, in the template files `*.parameters.json`, or directly in `*.bicep`.
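For CLI deployments, a service can also be switched off without editing any file by passing inline parameters after the parameter file, so that they override the file's values for those parameters. A minimal sketch: `enableHDInsight` and `enableCosmosDB` are hypothetical parameter names used only for illustration, and `deploy_without_hdi_and_cosmos` is a hypothetical helper; look up the actual switches in `main/main-service-all-at-once.parameters.json` or the corresponding `.bicep` file.

```bash
# Sketch: deploy the all-at-once template with some modules switched off.
# enableHDInsight / enableCosmosDB are HYPOTHETICAL parameter names;
# replace them with the switches defined in the repo's parameter file.
deploy_without_hdi_and_cosmos() {
  local resource_group="$1"
  az deployment group create \
    --resource-group "$resource_group" \
    --template-file main/main-service-all-at-once.bicep \
    --parameters main/main-service-all-at-once.parameters.json \
    --parameters enableHDInsight=false enableCosmosDB=false
}
```

Usage: `deploy_without_hdi_and_cosmos <Your Resource Group Name>`.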

Note: Before deploying the resources, we recommend checking the registration status of the required resource providers in your subscription. For more information, see Resource providers for Azure services.
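The registration check can be scripted with `az provider show`. A minimal sketch, assuming the namespace list below covers the services in this reference architecture (it is derived from the service list in this README, not from the templates, so adjust it to the modules you deploy); `check_providers` and the `REGISTER` switch are hypothetical names.

```bash
# Sketch: report (and optionally register) the resource providers used by the
# reference architecture. The namespace list is an ASSUMPTION based on the
# services listed in this README.
PROVIDERS=(
  Microsoft.HDInsight Microsoft.Synapse Microsoft.Databricks
  Microsoft.DataFactory Microsoft.DocumentDB Microsoft.KeyVault
  Microsoft.Network Microsoft.Compute Microsoft.Storage
)

check_providers() {
  local ns state
  for ns in "$@"; do
    state=$(az provider show --namespace "$ns" --query registrationState --output tsv)
    echo "$ns: $state"
    # Only register when explicitly asked to (REGISTER=true).
    # Registration is asynchronous; re-run the check later to confirm "Registered".
    if [[ "$state" != "Registered" && "${REGISTER:-false}" == "true" ]]; then
      az provider register --namespace "$ns"
    fi
  done
}
```

Usage: `check_providers "${PROVIDERS[@]}"` only reports the state; `REGISTER=true check_providers "${PROVIDERS[@]}"` also registers any provider that is not yet registered.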

Based on the detailed architecture above, the end-state deployment is simplified below for better understanding.

For the reference architecture, the following services are created:

- Azure HDInsight

- Azure Synapse Analytics

- Azure Databricks

- Azure Data Factory

- Azure Cosmos DB

- Infrastructure

- Azure Key Vault

- VNet

- VM

- Private DNS Zone

For more details about the services that will be deployed, please read the Domains guide in the Hadoop Migration documentation.

If you don't have an Azure subscription, create your Azure free account today. You also need the following tools installed:

- Azure CLI

- Bicep

In this quickstart, you will create:

- Resource Group

- Service Principal and access

- Public Key for SSH (Optional)

The Azure CLI's default authentication method uses a web browser and an access token to sign in.

Run the login command:

```bash
az login
```

Once the authentication is successful, you should see output similar to the following:

```json
[
  {
    "cloudName": "AzureCloud",
    "homeTenantId": "xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx",
    "id": "xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx",
    "isDefault": true,
    "managedByTenants": [],
    "name": "xxxxxxxxxxxx",
    "state": "Enabled",
    "tenantId": "xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx",
    "user": {
      "name": "xxxxxxx@xxxxxxxxx.com",
      "type": "user"
    }
  }
]
```

Copy the subscription `id` from the output above; you will need it to create more resources.
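Instead of copying the id by hand, it can be captured from the CLI and set as the active context for the commands that follow. A minimal sketch; `use_default_subscription` and the `SUBSCRIPTION_ID` variable are hypothetical names, and the function picks the subscription marked `"isDefault": true`.

```bash
# Sketch: capture the default subscription id and make it the active context.
# Call it directly (not inside $(...)) so SUBSCRIPTION_ID stays set in your shell.
use_default_subscription() {
  SUBSCRIPTION_ID=$(az account show --query id --output tsv) || return 1
  az account set --subscription "$SUBSCRIPTION_ID"
  echo "$SUBSCRIPTION_ID"
}
```

If you have several subscriptions, `az account list --output table` shows them all, and you can pass the one you want to `az account set --subscription` instead.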

Create a resource group with the following command:

```bash
az group create -l <Your Region> -n <Resource Group Name> --subscription <Your Subscription Id>
```
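Later steps need the resource group's full resource id (for example, the `--scopes <Your Resource Group Id>` argument of the GitHub Actions service principal). A minimal sketch that creates the group and keeps that id; `create_resource_group` and `RESOURCE_GROUP_ID` are hypothetical names.

```bash
# Sketch: create the resource group and keep its resource id
# (/subscriptions/<id>/resourceGroups/<name>) for later --scopes arguments.
create_resource_group() {
  local location="$1" name="$2" subscription="$3"
  RESOURCE_GROUP_ID=$(az group create \
    --location "$location" \
    --name "$name" \
    --subscription "$subscription" \
    --query id --output tsv) || return 1
  echo "$RESOURCE_GROUP_ID"
}
```

Usage: `create_resource_group <Your Region> <Resource Group Name> <Your Subscription Id>`; for an existing group, `az group show --name <Resource Group Name> --query id --output tsv` returns the same id.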

An Azure service principal is an identity created for use with applications, hosted services, and automated tools to access Azure resources programmatically. It needs to be generated for authentication and authorization by Key Vault. Get the subscription id from the output saved earlier. Open Cloud Shell or the Azure CLI, set the Azure context, and execute the following commands to generate the required credentials:

Note: The purpose of this new Service Principal is to assign least-privilege rights. Therefore, it requires the Contributor role at a resource group scope in order to deploy the resources inside the resource group dedicated to a specific data domain. The Network Contributor role assignment is required as well in this repository in order to assign the resources to the dedicated subnet.

**** to-be-updated ****

```bash
az ad sp create-for-rbac -n <Your App Name>
```

You should see output similar to the following:

```json
{
  "appId": "xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx",
  "displayName": "<Your App Name>",
  "name": "http://<Your App Name>",
  "password": "xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx",
  "tenant": "xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx"
}
```

Save the `appId` and `password` for the upcoming steps.
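The note above asks for least-privilege rights: Contributor scoped to the resource group, plus Network Contributor. A minimal sketch of creating the principal that way, assuming you have the resource group's full id and that the display name is unique in your tenant (the `appId` is looked up by name); `create_scoped_sp` is a hypothetical helper.

```bash
# Sketch: create the Key Vault service principal with Contributor scoped to the
# resource group only, then add Network Contributor on the same scope.
create_scoped_sp() {
  local app_name="$1" resource_group_id="$2" app_id
  # Prints the appId/password JSON once; store the password securely.
  az ad sp create-for-rbac --name "$app_name" \
    --role Contributor --scopes "$resource_group_id" || return 1
  # Look up the appId of the principal just created (assumes a unique name).
  app_id=$(az ad sp list --display-name "$app_name" --query "[0].appId" --output tsv)
  [[ -n "$app_id" ]] || return 1
  az role assignment create --assignee "$app_id" \
    --role "Network Contributor" --scope "$resource_group_id"
}
```

Usage: `create_scoped_sp <Your App Name> <Your Resource Group Id>`.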

This step is optional; follow it only if you want to deploy VMs into the VNets for testing purposes. To quickly generate an SSH public-private key pair, see the Azure article "Create and use an SSH public-private key pair for Linux VMs in Azure". Then print the public key:

```bash
cat ~/.ssh/id_rsa.pub
```

Save the output of this command as the public key and store it in a safe location; you will use it in the upcoming commands.
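If no key pair exists yet, one can be generated non-interactively. A minimal sketch using `ssh-keygen`; `ensure_ssh_key` is a hypothetical helper, and `-N ""` creates a key without a passphrase, which is convenient for throwaway test VMs but weaker than a protected key.

```bash
# Sketch: generate an RSA key pair only if none exists yet, then print the
# public half (the value to save for the upcoming commands).
# -N "" means NO passphrase; drop it to be prompted for one instead.
ensure_ssh_key() {
  local key_file="${1:-$HOME/.ssh/id_rsa}"
  if [[ ! -f "$key_file" ]]; then
    mkdir -p -m 700 "$(dirname "$key_file")"
    ssh-keygen -m PEM -t rsa -b 4096 -N "" -f "$key_file" -q || return 1
  fi
  cat "${key_file}.pub"
}
```

Running it twice prints the same public key, because an existing key is never overwritten.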

Most Azure regions offer the majority of the data and analytics services; some of them are listed below:

- Canada Central

- Canada East

- Central US

- East US

- East US 2

- North Central US

- South Central US

- West Central US

- West US

- West US 2
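Service availability differs by region and changes over time, so it can be worth checking your chosen region against the provider metadata before deploying. A minimal sketch; `regions_for` and `region_supports` are hypothetical helpers, and `Microsoft.HDInsight`/`clusters` is only an example namespace and resource type. The locations come back as display names such as "East US".

```bash
# Sketch: list the regions where a resource type is offered.
regions_for() {
  local namespace="$1" resource_type="$2"
  az provider show --namespace "$namespace" \
    --query "resourceTypes[?resourceType=='${resource_type}'].locations | [0]" \
    --output tsv
}

# Succeeds if the region (display name) appears in that list.
region_supports() {
  local namespace="$1" resource_type="$2" region="$3"
  regions_for "$namespace" "$resource_type" | grep -Fxq "$region"
}
```

Usage: `region_supports Microsoft.HDInsight clusters "East US" && echo available`.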

There are four methods for deploying this reference architecture; let's look at each one individually:

- One-click button to Quickstart

- CLI

- GitHub Actions

- Azure DevOps Pipeline

The reference architecture is deployed as three components, in this order:

- Infrastructure

- Key Vault

- Services all-at-once

Double-check that you're logged in:

```bash
az login
```

Clone this repo to your environment:

```bash
git clone https://github.com/nudbeach/data-platform-migration.git
cd data-platform-migration
```

Run all of the following commands from the root directory of `data-platform-migration`.

Create a resource group with a location, using your subscription id from the previous step:

```bash
az group create -l koreacentral -n <Your Resource Group Name> \
  --subscription xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx
```

Deploy the components by running these commands sequentially:

```bash
az deployment group create -g <Your Resource Group Name> -f main/main-infra.bicep
az deployment group create -g <Your Resource Group Name> -f main/main-keyvault.bicep
az deployment group create -g <Your Resource Group Name> -f main/main-service-all-at-once.bicep
```

Or, with parameter files:

```bash
az deployment group create -g <Your Resource Group Name> \
  -f main/main-infra.bicep \
  --parameters main/main-service-infra.json

az deployment group create -g <Your Resource Group Name> \
  -f main/main-service-keyvault.bicep \
  --parameters main/main-service-keyvault.json

az deployment group create -g <Your Resource Group Name> \
  -f main/main-service-all-at-once.bicep \
  --parameters main/main-service-all-at-once.parameters.json
```

The `--parameters <parameter filename>` argument is optional.
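The sequential deployment above can be wrapped in a small loop that stops at the first failed template. A minimal sketch, assuming the file paths from the first set of commands; `deploy_all` is a hypothetical helper. `--confirm-with-what-if` makes the CLI show a what-if preview and ask for confirmation before each deployment, and because no parameter file is passed, the CLI prompts for any parameter without a default value.

```bash
# Sketch: preview and deploy the three templates in order, stopping at the
# first failure so later templates never run against a broken base.
deploy_all() {
  local rg="$1" template
  for template in main/main-infra.bicep main/main-keyvault.bicep main/main-service-all-at-once.bicep; do
    echo "Deploying $template"
    # --confirm-with-what-if shows the planned changes and asks before applying.
    az deployment group create --resource-group "$rg" \
      --template-file "$template" --confirm-with-what-if || return 1
  done
}
```

Usage: `deploy_all <Your Resource Group Name>`.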

Alternatively, after you run `./build.sh` from the command line, deploy the generated JSON templates from the `build` folder:

```bash
az deployment group create -g <Your Resource Group Name> \
  -f build/main-infra.json \
  --parameters build/main-service-infra.json

az deployment group create -g <Your Resource Group Name> \
  -f build/main-service-keyvault.json \
  --parameters build/main-service-keyvault.json

az deployment group create -g <Your Resource Group Name> \
  -f build/main-service-all-at-once.json \
  --parameters build/main-service-all-at-once.parameters.json
```

The GitHub Actions option consists of four steps:

- Role assignments to Service Principal

- Setting up AZURE_CREDENTIAL

- Pipeline implementation

- Running Workflow

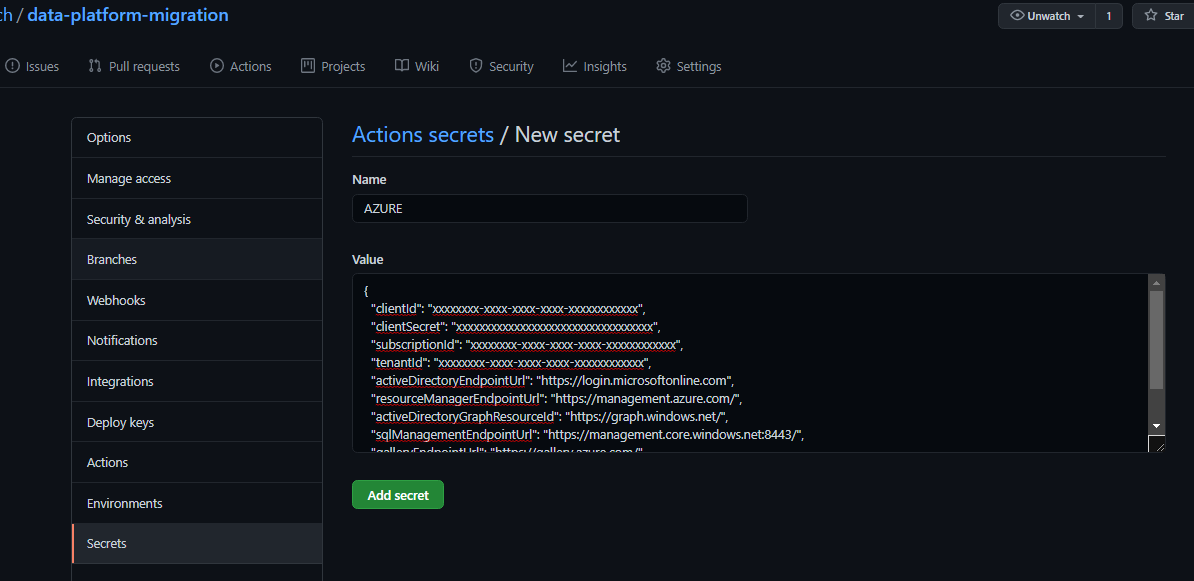

In a previous step, you already created a service principal for Key Vault. Now create another one for client authentication, backed by Azure AD and dedicated to GitHub Actions and Azure DevOps Pipelines:

```bash
az ad sp create-for-rbac --name <Your App Name -2> --role contributor \
  --scopes <Your Resource Group Id> \
  --sdk-auth
```

`<Your App Name -2>` must be a different name from the previous one. The output looks something like this:

```json
{
  "clientId": "xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx",
  "clientSecret": "xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx",
  "subscriptionId": "xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx",
  "tenantId": "xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx",
  "activeDirectoryEndpointUrl": "https://login.microsoftonline.com",
  "resourceManagerEndpointUrl": "https://management.azure.com/",
  "activeDirectoryGraphResourceId": "https://graph.windows.net/",
  "sqlManagementEndpointUrl": "https://management.core.windows.net:8443/",
  "galleryEndpointUrl": "https://gallery.azure.com/",
  "managementEndpointUrl": "https://management.core.windows.net/"
}
```

Keep the entire JSON document for the next step. This new service principal must also be assigned all of the following roles:

- Contributor

- Private DNS Zone Contributor

- Network Contributor

- User Access Administrator

User Access Administrator is needed to assign roles on the storage account to the data platform services.

```bash
az role assignment create \
  --assignee "xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx" \
  --role "<Role Name>" \
  --resource-group <Your Resource Group>
```

Run this command separately for each required role listed above, against your resource group. Alternatively, run this script from your command line:

```bash
./roleassign.sh <Your App Name -2> <Your Resource Group Name>
```
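The per-role commands can also be run as one loop. This is an illustrative stand-in, not the repository's `roleassign.sh`: `assign_deployment_roles` is a hypothetical helper that takes the service principal's `clientId` (appId) rather than its display name, and it reports a failed assignment and moves on instead of stopping.

```bash
# Sketch: assign the four roles listed above to the GitHub Actions / DevOps
# service principal on one resource group.
assign_deployment_roles() {
  local app_id="$1" resource_group="$2" role
  local roles=(
    "Contributor"
    "Private DNS Zone Contributor"
    "Network Contributor"
    "User Access Administrator"
  )
  for role in "${roles[@]}"; do
    az role assignment create \
      --assignee "$app_id" \
      --role "$role" \
      --resource-group "$resource_group" \
      || echo "failed to assign: $role" >&2
  done
}
```

Usage: `assign_deployment_roles <clientId> <Your Resource Group Name>`.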

Next, let GitHub Actions authenticate for all access to your resources. This is simple. Before you do this, I recommend forking the repo under your GitHub account so that you can easily update the actions. From the 'Settings' menu of the repo, go to 'Secrets' and click 'New repository secret'. Enter AZURE_CREDENTIAL as the name, paste the entire JSON document you got from the previous step as the value, and click the 'Add secret' button below. From now on, all access to your resources on Azure will be authenticated using this credential.

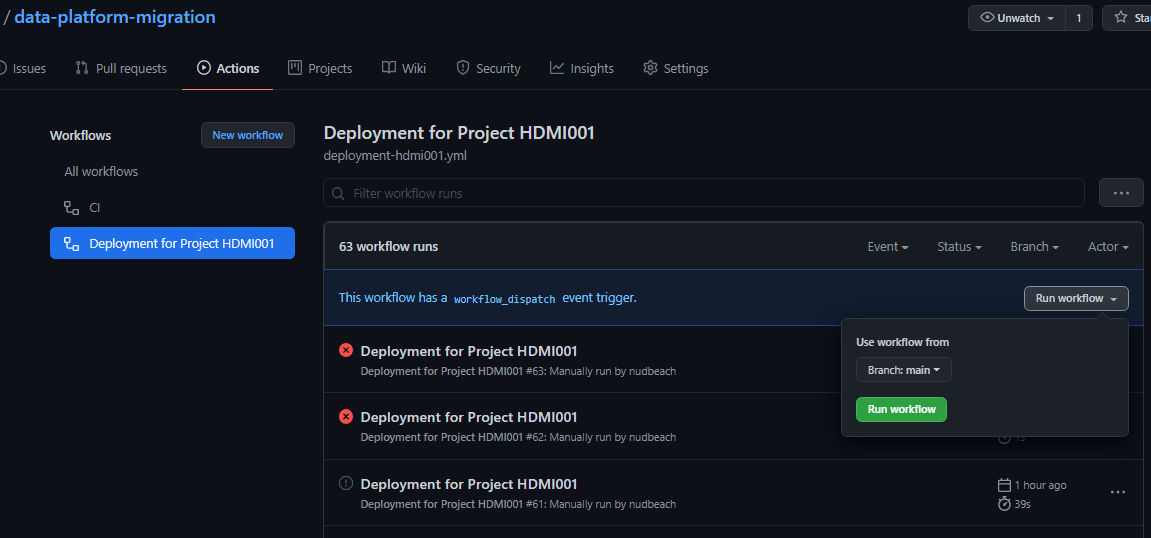
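The same secret can be created from the terminal with the GitHub CLI. A minimal sketch, assuming `gh` is installed and authenticated (`gh auth login`) and that the `--sdk-auth` JSON was saved to a file; `sp-credential.json` and `set_azure_credential_secret` are hypothetical names. `gh secret set` reads the value from standard input when no `--body` is given.

```bash
# Sketch: store the --sdk-auth JSON as the AZURE_CREDENTIAL repository secret.
set_azure_credential_secret() {
  local repo="$1" json_file="${2:-sp-credential.json}"
  [[ -f "$json_file" ]] || { echo "missing $json_file" >&2; return 1; }
  # The secret value is read from stdin.
  gh secret set AZURE_CREDENTIAL --repo "$repo" < "$json_file"
}
```

Usage: `set_azure_credential_secret <your-account>/data-platform-migration sp-credential.json`. Delete the local JSON file afterwards, since it contains the client secret.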

Check out your fork of data-platform-migration and go to `.github/workflows`. Open the workflow file `deployment-hdmi001.yml` and update the following environment variables with your own values:

- AZURE_SUBSCRIPTION_ID

- AZURE_RESOURCE_GROUP_NAME

- AZURE_LOCATION

You can also externalize these environment variables to an env file. See this for further details.

Run these commands from your command line to push the changes to your repo:

```bash
git add . && git commit -m "my first commit" && git push
```

In this example, the workflow only runs manually, through the `workflow_dispatch` event; it never runs automatically on push or pull request events. To enable automatic runs, uncomment the initial part of the workflow like this:

```yaml
# Controls when the action will run.
on:
  # Triggers the workflow on push or pull request events but only for the main branch
  push:
    branches: [ main ]
  pull_request:
    branches: [ main ]
  # Keeps the manual 'Run workflow' button available
  workflow_dispatch:
```

Go to the 'Actions' tab of your repo and click 'Deployment for Project HDMI001'. Then click 'Run workflow' on the right.

Now you can see the workflow running.
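The manual run can also be started and followed from the terminal with the GitHub CLI, as an alternative to the 'Run workflow' button. A minimal sketch, assuming `gh` is authenticated and the workflow keeps its `workflow_dispatch` trigger; `run_deployment_workflow` is a hypothetical helper.

```bash
# Sketch: trigger the workflow_dispatch run and list the latest runs.
run_deployment_workflow() {
  local repo="$1"
  gh workflow run deployment-hdmi001.yml --repo "$repo" || return 1
  # Pass a run id from this list to `gh run watch` to follow it live.
  gh run list --workflow deployment-hdmi001.yml --repo "$repo" --limit 5
}
```

Usage: `run_deployment_workflow <your-account>/data-platform-migration`.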

GitHub Actions and Azure DevOps look similar in structure and concepts, but they are not 100% the same. Compared with the GitHub Actions instructions, you can reuse most of what you created in the initial steps, 'Role assignments to Service Principal' and 'Setting up AZURE_CREDENTIAL'. For the 'Pipeline implementation' step, there are a few differences in workflow syntax; for example, `on` and `env` are not supported in Azure DevOps, so you can remove them and externalize their values to "Environment" and "Variables".

So I recommend making a copy of the repo in Azure DevOps before you start. The steps are:

- Create an Azure ARM connection

- Configure your pipeline

- Run the pipeline

First, create a project (or use an existing one) and select it. From "Project Settings" at the bottom left, go to "Service Connection" and click "New Service Connection" at the top right.

In the "New Service Connection" tab, select "Azure Resource Manager" and click "Next".

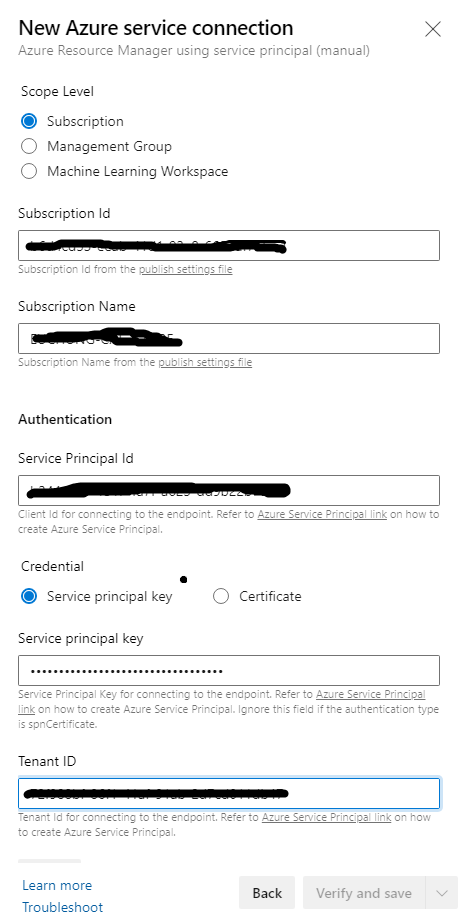

Select "Service principal (manual)" and click "Next".

In the "New Azure service connection" tab, select "Subscription", enter your Subscription Id and Subscription Name, and then enter the details of the service principal that we generated for the GitHub Actions configuration:

- Service Principal Id = clientId

- Service Principal Key = clientSecret

- Tenant ID = tenantId

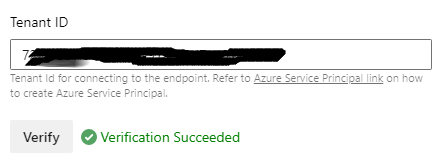

Click "Verify" to make sure that the connection works.

Enter a user-friendly connection name to use when referring to this service connection, and a description if you want. Take note of the name, because it will be required in the parameter update process.

That's it.

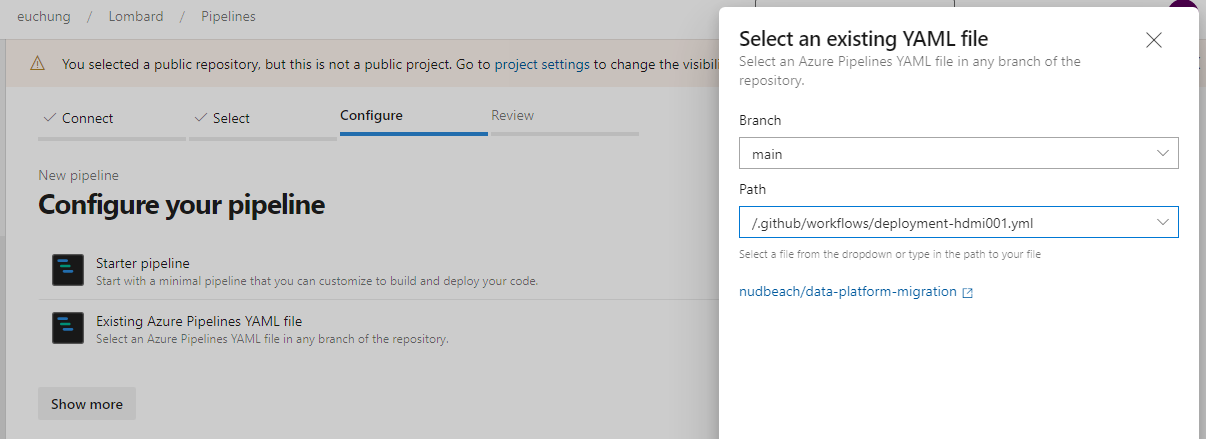
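The same service connection can also be created with the Azure DevOps extension for the Azure CLI (`az extension add --name azure-devops`). A minimal sketch: `create_arm_service_connection` and the `CLIENT_ID`, `CLIENT_SECRET`, `TENANT_ID`, `SUBSCRIPTION_ID`, and `SUBSCRIPTION_NAME` variables are hypothetical names mapped from the `--sdk-auth` JSON and the `az login` output. The extension reads the service principal key from the `AZURE_DEVOPS_EXT_AZURE_RM_SERVICE_PRINCIPAL_KEY` environment variable, so the secret is not passed as a command-line argument.

```bash
# Sketch: create an Azure Resource Manager service connection from the CLI.
# Inputs come from shell variables (hypothetical names, see the lead-in).
create_arm_service_connection() {
  local org_url="$1" project="$2" name="$3"
  # The key is scoped to this one command via a prefix assignment.
  AZURE_DEVOPS_EXT_AZURE_RM_SERVICE_PRINCIPAL_KEY="$CLIENT_SECRET" \
  az devops service-endpoint azurerm create \
    --organization "$org_url" --project "$project" --name "$name" \
    --azure-rm-service-principal-id "$CLIENT_ID" \
    --azure-rm-tenant-id "$TENANT_ID" \
    --azure-rm-subscription-id "$SUBSCRIPTION_ID" \
    --azure-rm-subscription-name "$SUBSCRIPTION_NAME"
}
```

Usage: `create_arm_service_connection https://dev.azure.com/<your-org> <your-project> <connection-name>`.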

Go to Pipelines and click the "New Pipeline" button at the top right.

Select "Azure Repos Git" if you've already copied the repo into Azure DevOps, or select "GitHub" to connect to your fork of this repo.

Select "Existing Azure Pipelines YAML file" and choose the GitHub Actions workflow file under `.github/workflows`. In the next step, you can edit it directly in the web UI to configure your pipeline and test it.

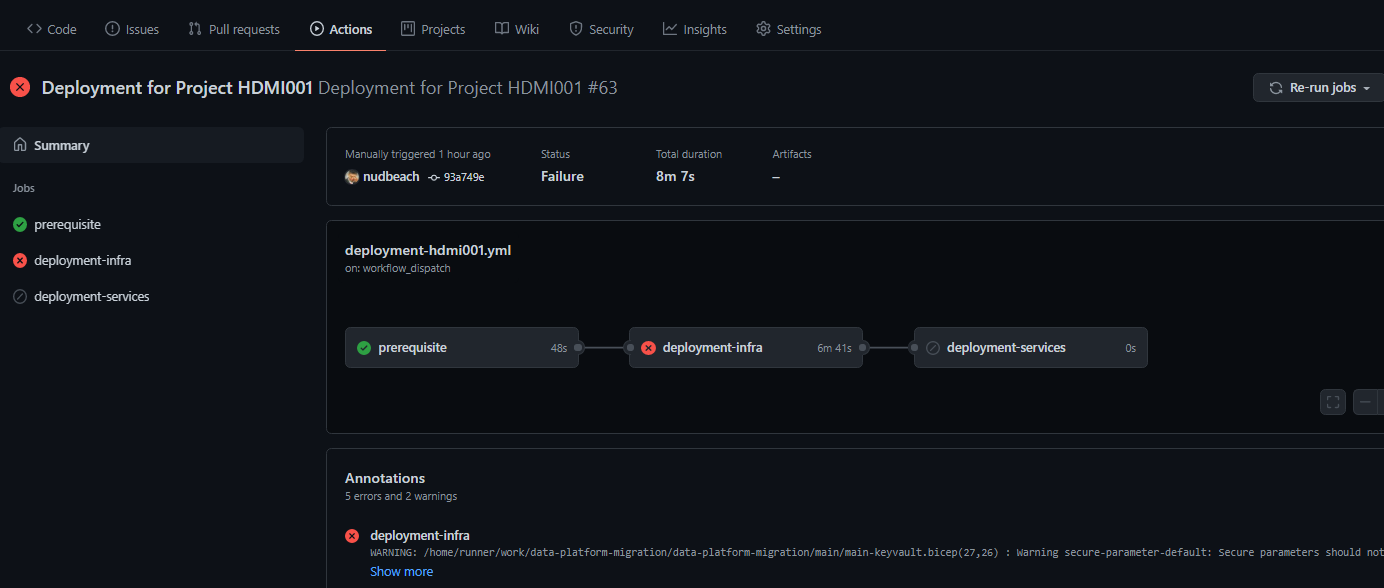

Warning Message:

```text
data-platform-migration/modules/create-vnets-with-peering/azuredeploy.bicep(25,7) : Warning no-unused-params: Parameter is declared but never used. [https://aka.ms/bicep/linter/no-unused-params]
data-platform-migration/modules/create-vnets-with-peering/azuredeploy.bicep(28,7) : Warning no-unused-params: Parameter is declared but never used. [https://aka.ms/bicep/linter/no-unused-params]
data-platform-migration/modules/create-private-dns-zone/azuredeploy.bicep(11,7) : Warning no-unused-params: Parameter is declared but never used. [https://aka.ms/bicep/linter/no-unused-params]
data-platform-migration/modules/create-private-dns-zone/azuredeploy.bicep(14,7) : Warning no-unused-params: Parameter is declared but never used. [https://aka.ms/bicep/linter/no-unused-params]
data-platform-migration/modules/create-vm-simple-linux/azuredeploy.bicep(19,7) : Warning no-unused-params: Parameter is declared but never used. [https://aka.ms/bicep/linter/no-unused-params]
```

Solution:

Simply ignore these warnings. They appear because of the optional settings for VM, HDInsight, Synapse, and so on: if you set these to false, the corresponding parameters for those resources are never used. You can ignore them when you deploy with the CLI or the one-click button, but in GitHub Actions or Azure DevOps Pipelines you need to skip these warnings by adding `continue-on-error: true` to the jobs or steps. That's because the current version of the deployment agent's Azure CLI treats them as errors rather than warnings. The Bicep CLI version at the time of writing is 0.3.255 (589f0375df).

The warning is meant to reduce confusion in your template: the linter rule finds any parameters that are defined but not used anywhere in the template, and recommends deleting them. Eliminating unused parameters also makes your template easier to deploy, because you don't have to provide unnecessary values. You can find further details at https://aka.ms/bicep/linter/no-unused-params.
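If you prefer to keep the optional parameters, Bicep releases that support a `bicepconfig.json` file can turn this linter rule off instead. A minimal sketch, assuming the file is placed at the repository root (Bicep uses the nearest `bicepconfig.json` found in the `.bicep` file's folder or its parent folders); `write_bicepconfig` is a hypothetical helper. Check that your Bicep CLI version supports this file before relying on it.

```bash
# Sketch: disable the no-unused-params linter rule for the whole repository.
write_bicepconfig() {
  local repo_root="${1:-.}"
  cat > "$repo_root/bicepconfig.json" <<'EOF'
{
  "analyzers": {
    "core": {
      "rules": {
        "no-unused-params": {
          "level": "off"
        }
      }
    }
  }
}
EOF
}
```

Usage: run `write_bicepconfig` from the root of `data-platform-migration`. Setting `"level"` to `"warning"` instead keeps the message visible.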

This project welcomes contributions and suggestions. Most contributions require you to agree to a Contributor License Agreement (CLA) declaring that you have the right to, and actually do, grant us the rights to use your contribution. For details, visit https://cla.opensource.microsoft.com.

When you submit a pull request, a CLA bot will automatically determine whether you need to provide a CLA and decorate the PR appropriately (e.g., status check, comment). Simply follow the instructions provided by the bot. You will only need to do this once across all repositories using our CLA.

[//]: # (This project has adopted the Microsoft Open Source Code of Conduct. For more information see the Code of Conduct FAQ or contact opencode@microsoft.com with any additional questions or comments.)