This repository contains a Conformer speech recognition model with Rotary Positional Embeddings (RoPE), along with code to train and evaluate it on the LibriSpeech dataset.

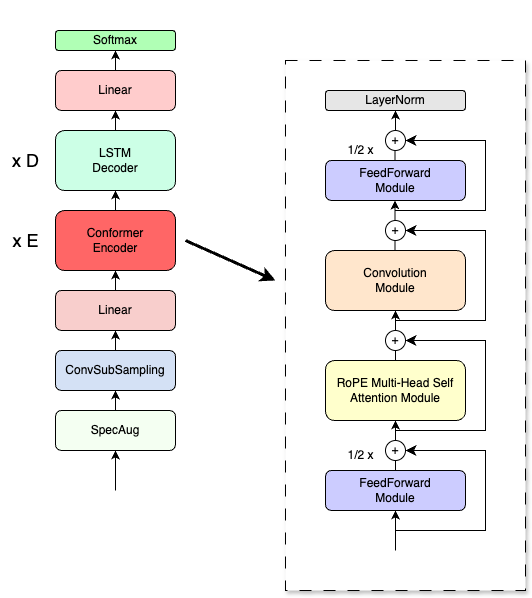

This repository implements a Conformer model, a widely used architecture for speech recognition. The Conformer combines convolution modules, which capture local acoustic patterns, with Transformer self-attention, which models global context. This implementation keeps the core Conformer architecture but incorporates the Rotary Positional Embeddings introduced in RoFormer into its self-attention. RoPE rotates the query and key vectors by position-dependent angles, so each attention score depends on the relative distance between frames. The aim is to improve the model's ability to capture long-range dependencies and to represent sequential data more robustly.
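To make the rotary idea concrete, here is a minimal pure-Python sketch of RoPE applied to a single query or key vector. It follows the RoFormer formulation, in which adjacent elements are paired and each pair is rotated by an angle proportional to its position. It is not this repository's implementation: tensor-based versions, such as the GPT-NeoX one cited below, typically vectorize over heads and positions and pair the two halves of the vector instead of adjacent elements, which preserves the same relative-position property. The function names are illustrative.

```python
import math


def apply_rope(x, pos, base=10000.0):
    """Rotate each pair (x[2i], x[2i+1]) by pos * theta_i, theta_i = base ** (-2i / d)."""
    d = len(x)
    if d % 2 != 0:
        raise ValueError("RoPE needs an even head dimension")
    out = []
    for i in range(0, d, 2):  # i is the element index, i.e. 2 * pair index
        angle = pos * base ** (-i / d)
        c, s = math.cos(angle), math.sin(angle)
        a, b = x[i], x[i + 1]
        out.extend([a * c - b * s, a * s + b * c])  # 2-D rotation of the pair
    return out


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


q = [0.3, -1.2, 0.8, 0.5]
k = [1.0, 0.4, -0.7, 0.2]

# Query at position 5 attending to key at 3, versus 12 attending to 10: same offset.
s1 = dot(apply_rope(q, 5), apply_rope(k, 3))
s2 = dot(apply_rope(q, 12), apply_rope(k, 10))
print(abs(s1 - s2) < 1e-9)  # True
```

The final check shows why RoPE encodes relative position: shifting both positions by the same amount leaves the query-key dot product unchanged, because the two rotations cancel except for their difference.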

To get started, clone the repository and install the necessary dependencies:

```bash
git clone https://github.com/eufouria/RoPEConformer_s2t.git
```

For dataset preparation, download the LibriSpeech dataset and extract the archives into a `data/` directory:

```bash
wget -P data/ https://us.openslr.org/resources/12/train-clean-100.tar.gz
...
```

The configuration file (`config.json`) includes settings for:

- Dataset paths

- Model architecture

- Training parameters (learning rate, batch size, epochs, etc.)

- Audio processing settings

Check that all paths and parameters are set correctly before running the training or evaluation scripts.
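As a rough illustration of catching configuration mistakes before a long training run, the sketch below loads a JSON file and checks it. The section names (`data`, `model`, `training`, `audio`) and the `*_path` key convention are hypothetical, chosen to mirror the four groups listed above; the actual keys in this repository's `config.json` may differ.

```python
import json
from pathlib import Path

# Hypothetical section names mirroring the groups listed above;
# the real config.json in this repository may use different keys.
REQUIRED_SECTIONS = ("data", "model", "training", "audio")


def validate_config(cfg):
    """Raise early if a section is missing or a data path does not exist."""
    missing = [name for name in REQUIRED_SECTIONS if name not in cfg]
    if missing:
        raise KeyError(f"config.json is missing sections: {missing}")
    # Assumption: keys under "data" that end in "_path" hold filesystem paths.
    for key, value in cfg["data"].items():
        if key.endswith("_path") and not Path(value).exists():
            raise FileNotFoundError(f"{key} points to a missing path: {value}")
    return cfg


def load_config(path):
    """Read a JSON config file and validate it before any training starts."""
    with open(path, encoding="utf-8") as f:
        return validate_config(json.load(f))
```

With this check, a hypothetical entry such as `"train_path": "data/LibriSpeech/train-clean-100"` would only pass once the downloaded archive has actually been extracted.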

To train the model on the LibriSpeech dataset, run the following command:

```bash
python train.py --config config.json
```

To evaluate the trained model on the test set, use:

```bash
python evaluate.py --config config.json --checkpoint checkpoints/checkpoint.pth
```

The evaluation script reports the model's word error rate (WER).
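A model trained with Connectionist Temporal Classification (listed among the sources below) is commonly evaluated by greedy CTC decoding followed by a word-level edit distance. The sketch below assumes that setup; the function names are hypothetical, and `evaluate.py` may instead use beam search or a library such as jiwer to compute WER.

```python
def ctc_greedy_decode(frame_ids, blank=0):
    """Best-path CTC decoding: collapse repeated frame labels, then drop blanks."""
    out, prev = [], None
    for t in frame_ids:
        if t != prev and t != blank:
            out.append(t)
        prev = t
    return out


def word_error_rate(reference, hypothesis):
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref, hyp = reference.split(), hypothesis.split()
    # prev[j] = edit distance between the first i reference words and first j hypothesis words
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        cur = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            cur[j] = min(prev[j] + 1,         # deletion
                         cur[j - 1] + 1,      # insertion
                         prev[j - 1] + cost)  # substitution or match
        prev = cur
    return prev[-1] / max(len(ref), 1)


# A blank (0) between two 3s keeps them as separate labels.
print(ctc_greedy_decode([0, 3, 3, 0, 3, 5, 5, 0]))  # [3, 3, 5]
# One substitution (sat -> sit) and one deletion (the) over 6 reference words.
print(word_error_rate("the cat sat on the mat", "the cat sit on mat"))  # 0.3333333333333333
```

For a whole test set, WER is usually computed by summing the edit distances over all utterances and dividing by the total number of reference words, rather than averaging per-utterance scores.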

This code is licensed under the BSD license. See the LICENSE file for more details.

This implementation is based on the work from the following sources:

- xFormers Repo

- GPT-NeoX Repo

- RoFormer Paper

- Conformer Paper

- Unofficial Conformer Repo

- Connectionist Temporal Classification

If you have any questions or need further assistance, feel free to open an issue or contact me.

Happy coding!