This repository is the official implementation of Incomplete Multimodal Industrial Anomaly Detection via Cross-Modal Distillation.

We implement this repo with the following environment:

- Ubuntu 22.04

- CUDA 12.1

- Python 3.11

- PyTorch 2.2.0
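Before installing the remaining packages, it can help to confirm which interpreter is active and whether PyTorch is already importable. The following is a minimal sketch using only the standard library; the helper name `describe_environment` is hypothetical and not part of this repo, and the versions listed above are treated as the tested configuration rather than hard requirements.

```python
import importlib.util
import sys

# Python version listed above (tested configuration, not a hard requirement).
TESTED_PYTHON = (3, 11)


def describe_environment(packages=("torch",)):
    """Report the interpreter version and which packages can be located."""
    report = {
        "python": f"{sys.version_info.major}.{sys.version_info.minor}",
        "matches_tested_python": sys.version_info[:2] == TESTED_PYTHON,
    }
    for name in packages:
        # find_spec only locates the package; it does not import it.
        report[name] = importlib.util.find_spec(name) is not None
    return report


if __name__ == "__main__":
    for key, value in describe_environment().items():
        print(f"{key}: {value}")
```

If `torch` is reported as `False`, install PyTorch first, as the installation notes request.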

To install requirements:

# Please install PyTorch first before other packages

# Install KNN_CUDA

pip install --upgrade https://github.com/unlimblue/KNN_CUDA/releases/download/0.2/KNN_CUDA-0.2-py3-none-any.whl

# Install Pointnet2_PyTorch(pointnet2_ops)

git clone https://github.com/erikwijmans/Pointnet2_PyTorch.git

cd Pointnet2_PyTorch

pip install -r requirements.txt

# You may encounter compilation issues for Pointnet2_PyTorch (see attached note).

# Now you can go back and install other packages for CMDIAD :)

pip install -r requirements.txt

📋 Sometimes conda's version control causes installation failures. We recommend using venv or conda to create a virtual environment and then using pip to install all packages. If you encounter compilation issues for Pointnet2_PyTorch, please modify `pointnet2_ops_lib/setup.py` as in my attempts (see the Pull request).

The MVTec 3D-AD dataset can be downloaded from MVTec3D-AD.

It should be unzipped and placed under the datasets folder.

python utils/preprocessing.py --dataset_path datasets/mvtec_3d/

📋 It is recommended to use the default value for the path to the dataset to prevent problems in subsequent training and evaluation, but you can change the number of threads used according to your configuration. Please note that the pre-processing is performed in place.
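Because the pre-processing is performed in place, it can be worth confirming the dataset layout before running it. The sketch below assumes that each of the ten MVTec 3D-AD object categories sits in its own folder directly under the dataset path; the helper name `missing_categories` is hypothetical and not part of this repo.

```python
from pathlib import Path

# The ten object categories of MVTec 3D-AD.
CATEGORIES = [
    "bagel", "cable_gland", "carrot", "cookie", "dowel",
    "foam", "peach", "potato", "rope", "tire",
]


def missing_categories(dataset_path="datasets/mvtec_3d"):
    """Return the categories that have no folder under dataset_path."""
    root = Path(dataset_path)
    # Assumption: one sub-folder per category directly under the root.
    return [name for name in CATEGORIES if not (root / name).is_dir()]


if __name__ == "__main__":
    missing = missing_categories()
    if missing:
        print("Missing category folders:", ", ".join(missing))
    else:
        print("All category folders found.")
```

Keeping a copy of the unzipped archive elsewhere is a simple safeguard, since the in-place pre-processing modifies the files under the dataset path.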

| Purpose | Checkpoint |
|---|---|
| Point Clouds (PCs) feature extractor | Point-MAE |
| RGB Images feature extractor | DINO |
| Feature-to-Feature network (main PCs) | MTFI_FtoF_PCs |
| Feature-to-Input network (main PCs) | MTFI_FtoI_PCs |
| Input-to-Feature network (main PCs) | MTFI_ItoF_PCs |
| Feature-to-Feature network (main RGB) | MTFI_FtoF_RGB |
| Feature-to-Input network (main RGB) | MTFI_FtoI_RGB |
| Input-to-Feature network (main RGB) | MTFI_ItoF_RGB |

📋 Please put all checkpoints in the `checkpoints` folder.
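A quick way to confirm the checkpoint folder is complete is sketched below. It assumes that the six MTFI checkpoints are stored under the names from the table with a `.pth` suffix, as the evaluation commands in this README suggest; the file names of the Point-MAE and DINO checkpoints are not stated here, so they are left out. The helper name `missing_checkpoints` is hypothetical.

```python
from pathlib import Path

# Assumption: file name = table entry + ".pth", e.g. MTFI_FtoF_PCs.pth.
MTFI_CHECKPOINTS = [
    f"MTFI_{kind}_{modality}.pth"
    for kind in ("FtoF", "FtoI", "ItoF")
    for modality in ("PCs", "RGB")
]


def missing_checkpoints(folder="checkpoints"):
    """Return the expected MTFI checkpoint files that are not in folder."""
    root = Path(folder)
    return [name for name in MTFI_CHECKPOINTS if not (root / name).is_file()]


if __name__ == "__main__":
    missing = missing_checkpoints()
    if missing:
        print("Missing checkpoints:", ", ".join(missing))
    else:
        print("All MTFI checkpoints found.")
```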

To train the models in the paper, run these commands:

To save the features for distillation network training:

python main.py \
    --method_name DINO+Point_MAE \
    --experiment_note <your_note> \
    --save_feature_for_fusion \
    --save_path datasets/patch_lib

The results are saved in the

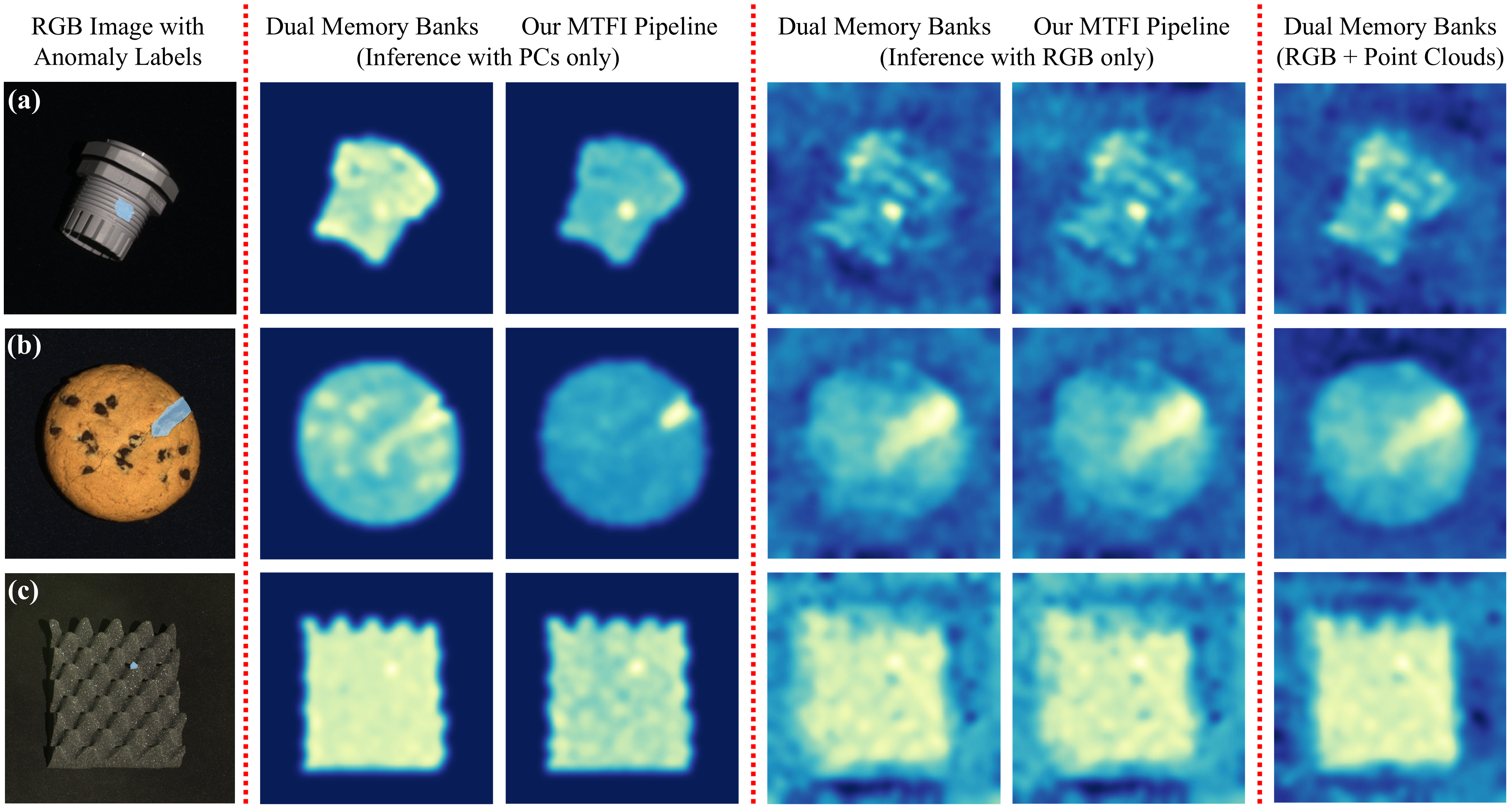

resultsfolder. If you need to output the raw anomaly scores at image or pixel level to a file, add--save_raw_resultsor--save_seg_results. You can useutils/heatmapto generate similar visualized results. You can define the maximum number of threads with--cpu_core_numand leave your note through--experiment_note.

To train the MTFI pipeline with the Feature-to-Feature distillation network:

python hallucination_network_pretrain.py \
    --lr 0.0005 \
    --batch_size 32 \
    --data_path datasets/patch_lib \
    --output_dir <your_output_dir_path> \
    --train_method HallucinationCrossModality \
    --num_workers 2

📋 For the MTFI pipeline with the Feature-to-Feature distillation network, the networks for PCs and for RGB images as the main modality are trained simultaneously. If GPU memory is insufficient, try `--accum_iter 2` for gradient accumulation and change to `--batch_size 16` correspondingly. The data is loaded into GPU memory in advance to speed up training; you can change this behavior in the dataset and dataloader.
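The reason `--accum_iter 2` pairs with `--batch_size 16` is that the effective batch size stays at 16 × 2 = 32. The sketch below illustrates the general technique in plain Python on a toy one-parameter model: summing the gradients of two micro-batches, each scaled by `1 / accum_iter`, reproduces the full-batch gradient of a mean loss. Whether the training script in this repo scales the loss in exactly this way is an assumption; all names here are illustrative.

```python
def mse_grad(w, xs, ys):
    """Gradient of mean((w * x - y) ** 2) with respect to w."""
    return sum(2.0 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)


def accumulated_grad(w, xs, ys, micro_batch, accum_iter):
    """Sum the micro-batch gradients, each scaled by 1 / accum_iter."""
    total = 0.0
    for i in range(accum_iter):
        lo, hi = i * micro_batch, (i + 1) * micro_batch
        total += mse_grad(w, xs[lo:hi], ys[lo:hi]) / accum_iter
    return total


# Toy data: 32 samples from y = 3x + 1.
xs = [0.1 * i for i in range(32)]
ys = [3.0 * x + 1.0 for x in xs]

full = mse_grad(0.5, xs, ys)                                   # batch size 32
accum = accumulated_grad(0.5, xs, ys, micro_batch=16, accum_iter=2)

# The two gradients agree up to floating-point rounding.
print(abs(full - accum) < 1e-9)
```

The equivalence holds for losses averaged over the batch and equally sized micro-batches; layers whose behavior depends on batch statistics, such as batch normalization, can still differ between the two settings.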

To save the features for Feature-to-Input distillation network training:

python main.py \
    --method_name DINO+Point_MAE \
    --experiment_note <your_note> \
    --save_frgb_xyz \
    --save_path_frgb_xyz datasets/frgb_xyz \
    --save_rgb_fxyz \
    --save_path_rgb_fxyz datasets/rgb_fxyz

For PCs as main modality.

python hallucination_network_pretrain.py \
    --lr 0.0005 \
    --batch_size 32 \
    --data_path datasets/rgb_fxyz \
    --output_dir <your_output_dir_path> \
    --train_method XYZFeatureToRGBInputConv

For RGB images as main modality.

python hallucination_network_pretrain.py \
    --lr 0.0005 \
    --batch_size 32 \
    --data_path datasets/frgb_xyz \
    --output_dir <your_output_dir_path> \
    --train_method RGBFeatureToXYZInputConv
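The two commands above differ only in `--data_path` and `--train_method`, so both runs can be generated from one small table. The following is a hedged sketch: the helper `build_command`, the dictionary `FTOI_SETTINGS`, and the output directory names are hypothetical, while the flag values are copied from the commands above. It only prints the commands and does not launch training.

```python
import shlex

# (data_path, train_method) per main modality, copied from the commands above.
FTOI_SETTINGS = {
    "PCs": ("datasets/rgb_fxyz", "XYZFeatureToRGBInputConv"),
    "RGB": ("datasets/frgb_xyz", "RGBFeatureToXYZInputConv"),
}


def build_command(main_modality, output_dir, lr=0.0005, batch_size=32):
    """Build the argument list for one training run."""
    data_path, train_method = FTOI_SETTINGS[main_modality]
    return [
        "python", "hallucination_network_pretrain.py",
        "--lr", str(lr),
        "--batch_size", str(batch_size),
        "--data_path", data_path,
        "--output_dir", output_dir,
        "--train_method", train_method,
    ]


if __name__ == "__main__":
    for modality in FTOI_SETTINGS:
        # Hypothetical output directory; choose your own path.
        command = build_command(modality, f"output/ftoi_{modality.lower()}")
        print(shlex.join(command))
```

The argument list returned by `build_command` can be passed to `subprocess.run` to start a run from a script.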

Similarly, for the Input-to-Feature distillation network, you need to store the features for training:

python main.py \
    --method_name DINO+Point_MAE \
    --experiment_note <your_note> \
    --save_frgb_xyz \
    --save_path_frgb_xyz datasets/frgb_xyz \
    --save_rgb_fxyz \
    --save_path_rgb_fxyz datasets/rgb_fxyz

For PCs as main modality.

python -u hallucination_network_pretrain.py \
    --lr 0.0003 \
    --batch_size 32 \
    --data_path datasets/frgb_xyz \
    --output_dir <your_output_dir_path> \
    --train_method XYZInputToRGBFeatureHRNET \
    --c_hrnet 128 \
    --pin_mem

For RGB images as main modality.

python -u hallucination_network_pretrain.py \
    --lr 0.0002 \
    --batch_size 32 \
    --data_path datasets/rgb_fxyz \
    --output_dir <your_output_dir_path> \
    --train_method RGBInputToXYZFeatureHRNET \
    --c_hrnet 192 \
    --pin_mem

For single PCs memory bank:

python main.py \
    --method_name Point_MAE \
    --experiment_note <your_note>

📋 For single RGB memory bank and dual memory bank, please replace `Point_MAE` with `DINO` and `DINO+Point_MAE`, respectively.

For PCs as main modality.

python main.py \
    --method_name WithHallucination \
    --use_hn \
    --main_modality xyz \
    --fusion_module_path checkpoints/MTFI_FtoF_PCs.pth \
    --experiment_note <your_note>

📋 For RGB images as main modality, please replace `xyz` with `rgb` for `--main_modality` and give the new checkpoint path `checkpoints/MTFI_FtoF_RGB.pth` to the model.

For PCs as main modality.

python main.py \
    --method_name WithHallucinationFromFeature \
    --use_hn_from_rgb_conv \
    --main_modality xyz \
    --fusion_module_path checkpoints/MTFI_FtoI_PCs.pth \
    --experiment_note <your_note>

📋 For RGB images as main modality, replace `xyz` with `rgb` and give the model the new checkpoint path.

For PCs as main modality.

python main.py \
    --method_name WithHallucination \
    --use_hrnet \
    --main_modality xyz \
    --c_hrnet 128 \
    --fusion_module_path checkpoints/MTFI_ItoF_PCs.pth \
    --experiment_note <your_note>

For RGB images as main modality.

python main.py \
    --method_name WithHallucination \
    --use_hrnet \
    --main_modality rgb \
    --c_hrnet 192 \
    --fusion_module_path checkpoints/MTFI_ItoF_RGB.pth \
    --experiment_note <your_note>

If you find this repository helpful for your project, please cite the following:

@misc{sui2024crossmodal,

title={Cross-Modal Distillation in Industrial Anomaly Detection: Exploring Efficient Multi-Modal IAD},

author={Wenbo Sui and Daniel Lichau and Josselin Lefèvre and Harold Phelippeau},

year={2024},

eprint={2405.13571},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

We appreciate the following GitHub repos for their valuable code: