Unofficial implementation of Liu et al., 2018. Image Inpainting for Irregular Holes Using Partial Convolutions.

This implementation was inspired by and is partially based on an early version of this repository. Several ideas, such as the random mask generator built on OpenCV, were taken from it.

- Python 3.6

- TensorFlow 1.13

- Keras 2.2.4

- OpenCV and NumPy (for mask generator)

First, set the paths to your datasets (IMG_DIR_TRAIN and IMG_DIR_VAL in the inpainter_main.py file). The code uses the ImageDataGenerator class from Keras, so each path must point one level above the directory that holds the images. For example, if your training images are stored in path/to/train/images/dir/subdir/, set IMG_DIR_TRAIN = path/to/train/images/dir/. If path/to/train/images/dir/ contains more than one subdirectory (e.g. one per class), all of them will be used in training.
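To check the layout before training, a small helper like the one below can list what sits under the directory you are about to use. This is only a sketch: count_images_per_subdir and the list of extensions are hypothetical and not part of this repository.

```python
import os

# Assumption: these are the image types in your dataset; adjust as needed.
IMAGE_EXTENSIONS = (".jpg", ".jpeg", ".png", ".bmp")


def count_images_per_subdir(root):
    """Return {subdirectory name: number of image files found beneath it}."""
    counts = {}
    for entry in sorted(os.listdir(root)):
        subdir = os.path.join(root, entry)
        if not os.path.isdir(subdir):
            continue  # loose files directly in root are not counted
        n = 0
        for _, _, files in os.walk(subdir):
            n += sum(f.lower().endswith(IMAGE_EXTENSIONS) for f in files)
        counts[entry] = n
    return counts
```

Calling count_images_per_subdir("path/to/train/images/dir/") should report at least one subdirectory with a non-zero count. An empty result suggests the path points at the image folder itself rather than one level above it.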

Second, download the VGG16 weights ported from PyTorch here and set VGG16_WEIGHTS in the inpainter_main.py file to be the path to these weights.

When the paths are set, run the code:

    python inpainter_main.py

This starts the initial training (stage 1). When it is complete, set STAGE_1 to False and set LAST_CHECKPOINT to the path of the checkpoint from the last epoch of stage 1. Then run the code again to start the fine-tuning (stage 2).
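If you do not want to look up the checkpoint path by hand, something like the following could find it. It is a sketch under assumptions: latest_checkpoint is a hypothetical helper, and it assumes the checkpoints are .h5 files written to a single directory, which may not match how the code names or stores them.

```python
import glob
import os


def latest_checkpoint(checkpoint_dir, pattern="*.h5"):
    """Return the most recently modified file matching pattern, or None."""
    candidates = glob.glob(os.path.join(checkpoint_dir, pattern))
    if not candidates:
        return None
    # Assumption: the newest file on disk is the last epoch of stage 1.
    return max(candidates, key=os.path.getmtime)
```

The returned path is what you would assign to LAST_CHECKPOINT before setting STAGE_1 to False.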

You can also do all of this in the Jupyter notebook provided.

The authors of the paper used PyTorch to implement the model. The VGG16 model was chosen for feature extraction. The VGG16 model in PyTorch was trained with the following image pre-processing:

- Divide the image by 255,

- Subtract [0.485, 0.456, 0.406] from the RGB channels, respectively,

- Divide the RGB channels by [0.229, 0.224, 0.225], respectively.
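In NumPy, these three steps can be sketched as below. The function names are illustrative and are not necessarily the ones used in this repository; the input is assumed to be RGB with values in [0, 255] and channels last.

```python
import numpy as np

# Per-channel constants in RGB order, as listed above.
MEAN = np.array([0.485, 0.456, 0.406], dtype=np.float32)
STD = np.array([0.229, 0.224, 0.225], dtype=np.float32)


def preprocess_torch_style(images):
    """Normalize RGB images of shape (..., H, W, 3) with values in [0, 255]."""
    x = np.asarray(images, dtype=np.float32) / 255.0  # scale to [0, 1]
    return (x - MEAN) / STD  # broadcast over the last (channel) axis


def deprocess_torch_style(x):
    """Invert preprocess_torch_style, returning floats in the [0, 255] range."""
    return (np.asarray(x, dtype=np.float32) * STD + MEAN) * 255.0
```

The inverse is handy when you want to display images that have already been normalized.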

The same pre-processing scheme was used in the paper. The VGG16 model in Keras comes with weights ported from the original Caffe implementation and expects another image pre-processing:

- Convert the images from RGB to BGR,

- Subtract [103.939, 116.779, 123.68] from the BGR channels, respectively.
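For comparison, the Caffe-style scheme can be sketched in NumPy as follows. The function name is illustrative; the input is assumed to be RGB with values in [0, 255] and channels last.

```python
import numpy as np

# Per-channel means in BGR order, as listed above.
BGR_MEAN = np.array([103.939, 116.779, 123.68], dtype=np.float32)


def preprocess_caffe_style(images):
    """Convert RGB images of shape (..., H, W, 3) to mean-centered BGR."""
    x = np.asarray(images, dtype=np.float32)
    x = x[..., ::-1]  # reverse the channel axis: RGB -> BGR
    return x - BGR_MEAN  # no division, so values stay on the 0-255 scale
```

With this scheme the inputs span roughly [-124, 151], whereas the PyTorch-style scheme maps the same pixels to roughly [-2.1, 2.6].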

Due to the different pre-processing, the scales of the features extracted by the PyTorch and Keras VGG16 models are different. If we were to use the built-in VGG16 model in Keras, we would need to modify the loss term normalizations in Eq. 7 of the paper. To avoid this, the weights of the VGG16 model were ported from PyTorch using this script and are provided in the file data/vgg16_weights/vgg16_pytorch2keras.h5. The PyTorch-style image pre-processing is used in the code.

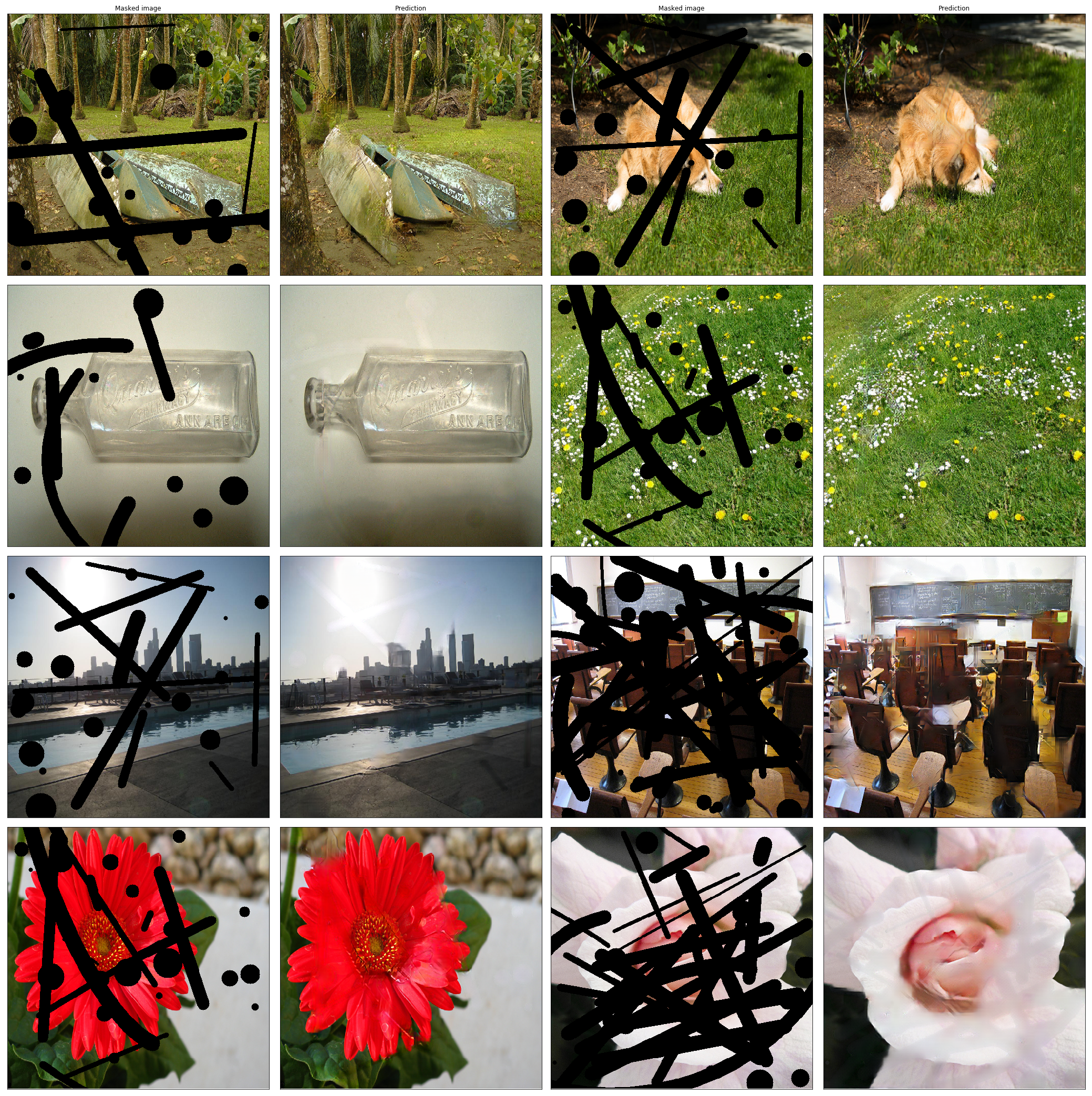

Random masks consisting of circles and lines are generated using the OpenCV library. The mask generator is a modified version of the one used here. It was modified to generate reproducible masks for validation images.
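The idea behind reproducible masks can be sketched as follows. This is not the generator from this repository: to stay self-contained it draws the shapes with plain NumPy instead of OpenCV, and the function names, shape counts, size limits and the convention 1 = valid pixel, 0 = hole are all assumptions made for illustration.

```python
import numpy as np


def random_mask(height, width, rng, n_lines=5, n_circles=5):
    """Return a (height, width) uint8 mask: 1 = valid pixel, 0 = hole."""
    yy, xx = np.mgrid[0:height, 0:width]
    mask = np.ones((height, width), dtype=np.uint8)
    max_size = max(2, min(height, width) // 16)

    for _ in range(n_circles):
        cx, cy = rng.randint(0, width), rng.randint(0, height)
        radius = rng.randint(1, max_size + 1)
        mask[(xx - cx) ** 2 + (yy - cy) ** 2 <= radius ** 2] = 0

    for _ in range(n_lines):
        x1, x2 = rng.randint(0, width, size=2)
        y1, y2 = rng.randint(0, height, size=2)
        half_thickness = rng.randint(1, max_size + 1) / 2.0
        # Squared distance from every pixel to the segment (x1, y1)-(x2, y2).
        dx, dy = x2 - x1, y2 - y1
        length_sq = max(dx * dx + dy * dy, 1)
        t = np.clip(((xx - x1) * dx + (yy - y1) * dy) / length_sq, 0.0, 1.0)
        dist_sq = (xx - (x1 + t * dx)) ** 2 + (yy - (y1 + t * dy)) ** 2
        mask[dist_sq <= half_thickness ** 2] = 0

    return mask


def validation_masks(n_masks, height, width, seed=0):
    """Return the same stack of masks on every call with the same seed."""
    rng = np.random.RandomState(seed)  # fresh, seeded generator per call
    return np.stack([random_mask(height, width, rng) for _ in range(n_masks)])
```

Training masks would use an unseeded generator, while validation masks are re-created from a fixed seed at the start of each validation pass, so every epoch is scored on identical holes.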

To generate consistent masks for validation images, i.e. the same set of masks after each epoch, make sure the number of images in your validation set is equal to the product of STEPS_VAL and BATCH_SIZE_VAL. I used 400 validation images with STEPS_VAL = 100 and BATCH_SIZE_VAL = 4. You will need to change these parameters if you want to use more or fewer validation images with consistent masks between epochs.
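The constraint is plain arithmetic, and the numbers quoted above satisfy it with 100 × 4 = 400. A small check like this one can catch a mismatch before training starts; both helper names are hypothetical and not part of this repository.

```python
STEPS_VAL = 100
BATCH_SIZE_VAL = 4


def validation_size_matches(n_images, steps, batch_size):
    """Return True when steps * batch_size equals the number of images."""
    return n_images == steps * batch_size


def steps_for(n_images, batch_size):
    """Return the step count that covers n_images exactly, or raise."""
    if n_images % batch_size != 0:
        raise ValueError(
            "%d images cannot be split into batches of %d" % (n_images, batch_size)
        )
    return n_images // batch_size
```

For example, steps_for(1000, 4) gives the STEPS_VAL to use with 1000 validation images and the same batch size.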

The examples shown below were generated using the model trained on the Open Images Dataset (subset with bounding boxes, partitions 1 to 5). You can train the model using other datasets.

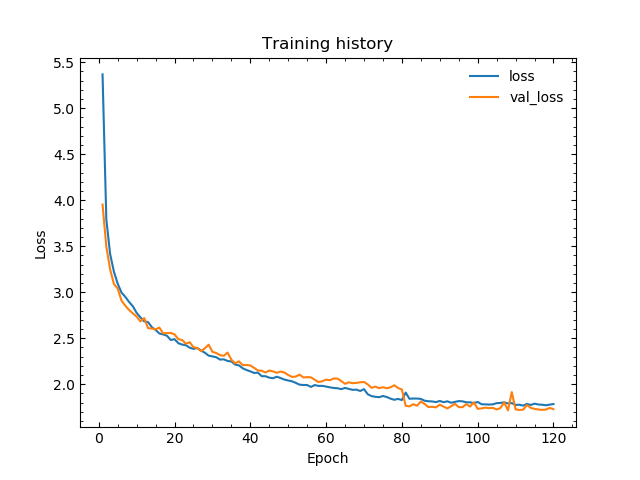

The model was trained in two steps:

- Initial training (BatchNorm enabled): 70 epochs with learning rate 0.0002, then 10 epochs with learning rate 0.0001.

- Fine-tuning (BatchNorm disabled in encoder): 40 epochs with learning rate 0.00005.

Both steps used a batch size of 5 and 2500 steps per epoch.
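The schedule above can be written down as a small lookup table, which also makes the total training budget easy to compute. This is only an illustration: the names are hypothetical, and counting epochs continuously across both steps is a convention chosen here, not necessarily how the code numbers them.

```python
# (step name, number of epochs, learning rate), in training order
SCHEDULE = [
    ("initial training", 70, 2e-4),
    ("initial training", 10, 1e-4),
    ("fine-tuning", 40, 5e-5),
]
BATCH_SIZE = 5
STEPS_PER_EPOCH = 2500


def learning_rate_at(epoch):
    """Return (step name, learning rate) for a 0-based global epoch index."""
    if epoch < 0:
        raise ValueError("epoch must be non-negative")
    start = 0
    for name, n_epochs, lr in SCHEDULE:
        if epoch < start + n_epochs:
            return name, lr
        start += n_epochs
    raise ValueError("epoch is beyond the end of the schedule")


def total_images_seen():
    """Return the number of training samples drawn over the whole schedule."""
    total_epochs = sum(n_epochs for _, n_epochs, _ in SCHEDULE)
    return total_epochs * STEPS_PER_EPOCH * BATCH_SIZE
```

With these settings the schedule covers 120 epochs of 12,500 samples each, i.e. 1.5 million samples drawn in total.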

The weights of the trained model can be downloaded via this link.

I have not been able to reach the inpainting quality demonstrated in the paper. Suggestions and bug reports are welcome.

A big thank you goes to Mathias Gruber for making his repository public and to Guilin Liu for his feedback on the losses and the image pre-processing scheme used in the paper.