MXNet implementation for:

Drop an Octave: Reducing Spatial Redundancy in Convolutional Neural Networks with Octave Convolution

- Loss: Softmax

- Learning rate: Cosine (warm-up: 5 epochs, lr: 0.4)

- MXNet API: Symbol API
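The cosine schedule with warm-up listed above can be sketched as follows. This is a minimal, hypothetical sketch, not the code used for these experiments. It assumes a linear warm-up from 0 to the peak learning rate of 0.4 over the first 5 epochs, followed by a half-cosine decay. The function name `cosine_lr_with_warmup`, the final learning rate of 0, and the 100-epoch total in the usage example are all assumptions; this README does not state them.

```python
import math


def cosine_lr_with_warmup(epoch, total_epochs, warmup_epochs=5, base_lr=0.4, final_lr=0.0):
    """Hypothetical cosine learning-rate schedule with linear warm-up.

    `epoch` may be fractional (e.g. iteration / iters_per_epoch), so the
    schedule can also be evaluated once per iteration.
    """
    if warmup_epochs > 0 and epoch < warmup_epochs:
        # Linear warm-up: ramp from 0 up to base_lr.
        return base_lr * epoch / warmup_epochs
    # Half-cosine decay from base_lr to final_lr over the remaining epochs.
    decay_epochs = max(total_epochs - warmup_epochs, 1)
    progress = min((epoch - warmup_epochs) / decay_epochs, 1.0)
    return final_lr + 0.5 * (base_lr - final_lr) * (1.0 + math.cos(math.pi * progress))


if __name__ == "__main__":
    # Assumed 100-epoch run; the real total is not given here.
    for epoch in (0, 2.5, 5, 52.5, 100):
        print(epoch, round(cosine_lr_with_warmup(epoch, total_epochs=100), 4))
```

Evaluating the schedule per iteration (fractional epochs) avoids a visible jump in learning rate at each epoch boundary. MXNet 1.x also provides `mx.lr_scheduler.CosineScheduler`, which counts in updates rather than epochs; check its documentation for the exact warm-up arguments.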

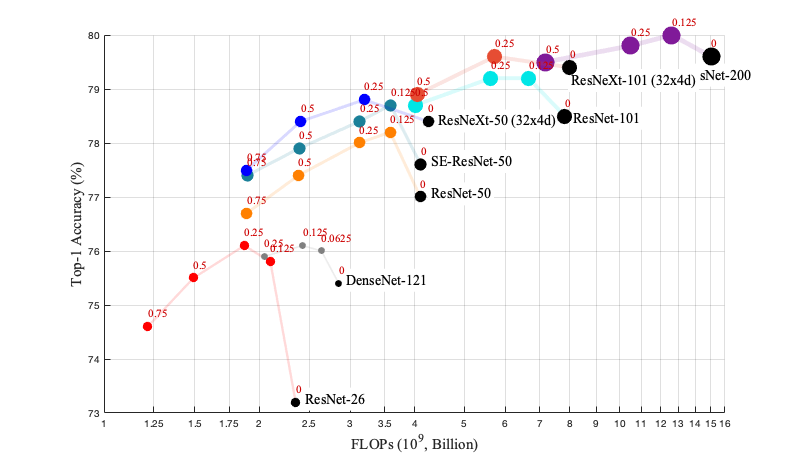

| Model | baseline | alpha = 0.125 | alpha = 0.25 | alpha = 0.5 | alpha = 0.75 |
|---|---|---|---|---|---|
| DenseNet-121 | 75.4 / 92.7 | 76.1 / 93.0 | 75.9 / 93.1 | -- | -- |
| ResNet-26 | 73.2 / 91.3 | 75.8 / 92.6 | 76.1 / 92.6 | 75.5 / 92.5 | 74.6 / 92.1 |
| ResNet-50 | 77.0 / 93.4 | 78.2 / 93.9 | 78.0 / 93.8 | 77.4 / 93.6 | 76.7 / 93.0 |
| SE-ResNet-50 | 77.6 / 93.6 | 78.7 / 94.1 | 78.4 / 94.0 | 77.9 / 93.8 | 77.4 / 93.5 |
| ResNeXt-50 | 78.4 / 94.0 | -- | 78.8 / 94.2 | 78.4 / 94.0 | 77.5 / 93.6 |
| ResNet-101 | 78.5 / 94.1 | 79.2 / 94.4 | 79.2 / 94.4 | 78.7 / 94.1 | -- |
| ResNeXt-101 | 79.4 / 94.6 | -- | 79.6 / 94.5 | 78.9 / 94.4 | -- |
| ResNet-200 | 79.6 / 94.7 | 80.0 / 94.9 | 79.8 / 94.8 | 79.5 / 94.7 | -- |

Note:

- Top-1 / Top-5 single center-crop accuracy is shown in the table (see the testing script).

- All residual networks in the ablation study adopt the pre-activation version [1] for convenience.
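In the table, alpha is the fraction of channels assigned to the low-frequency group, whose feature maps are kept at half the spatial resolution. As a rough illustration of why larger alpha values trade accuracy for compute, the sketch below estimates the multiply-add cost of a single octave convolution relative to a vanilla convolution with the same channel counts and kernel size. It is a back-of-the-envelope model, not the FLOPs-counting code used for these results: it assumes the same alpha for input and output, counts only the four convolution paths (high→high, high→low, low→high, low→low), and ignores pooling, upsampling, and all non-convolution layers. The function name `octconv_cost_ratio` is hypothetical.

```python
def octconv_cost_ratio(alpha):
    """Estimated cost of one octave convolution relative to a vanilla convolution.

    Assumes alpha_in == alpha_out == alpha and that low-frequency feature maps
    have half the height and width (1/4 of the spatial positions) of the
    high-frequency ones. Pooling and upsampling costs are ignored.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be in [0, 1]")
    high, low = 1.0 - alpha, alpha
    high_to_high = high * high          # computed at full resolution
    high_to_low = high * low / 4.0      # computed after pooling to low resolution
    low_to_high = low * high / 4.0      # computed at low resolution, then upsampled
    low_to_low = low * low / 4.0        # computed at low resolution
    return high_to_high + high_to_low + low_to_high + low_to_low


if __name__ == "__main__":
    for alpha in (0.0, 0.125, 0.25, 0.5, 0.75):
        print(f"alpha = {alpha}: ~{octconv_cost_ratio(alpha):.0%} of the vanilla cost")
```

The sum simplifies to 1 - (3/4)·alpha·(2 - alpha), e.g. 0.4375 (about 44%) for alpha = 0.5. Whole-network savings are smaller than this per-layer estimate, because layers that are not converted to octave convolutions keep their full cost.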

- Learning rate: Cosine (warm-up: 5 epochs, lr: 0.4)

- MXNet API: Gluon API

| Model | alpha | label smoothing [2] | mixup [3] | #Params | #FLOPs | Top-1 / Top-5 |
|---|---|---|---|---|---|---|
| 0.75 MobileNet (v1) | .375 | | | 2.6 M | 213 M | 70.5 / 89.5 |
| 1.0 MobileNet (v1) | .5 | | | 4.2 M | 321 M | 72.5 / 90.6 |
| 1.0 MobileNet (v2) | .375 | Yes | | 3.5 M | 256 M | 72.0 / 90.7 |
| 1.125 MobileNet (v2) | .5 | Yes | | 4.2 M | 295 M | 73.0 / 91.2 |
| Oct-ResNet-152 | .125 | Yes | Yes | 60.2 M | 10.9 G | 81.4 / 95.4 |
| Oct-ResNet-152 + SE | .125 | Yes | Yes | 66.8 M | 10.9 G | 81.6 / 95.7 |
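Label smoothing [2] and mixup [3] only change the training targets and inputs, so both fit in a few lines. The sketch below is a minimal, framework-free illustration on plain Python lists, not the Gluon training code behind this table. The smoothing factor `eps = 0.1` and the mixup Beta parameter `mixup_alpha = 0.2` are assumed values that this README does not state (`mixup_alpha` is unrelated to the OctConv alpha column), and the function names are hypothetical.

```python
import random


def smooth_one_hot(label, num_classes, eps=0.1):
    """Label smoothing [2]: target = (1 - eps) * one_hot + eps / num_classes."""
    off_value = eps / num_classes
    target = [off_value] * num_classes
    target[label] += 1.0 - eps
    return target


def mixup(x1, y1, x2, y2, mixup_alpha=0.2, rng=random):
    """mixup [3]: convex combination of two examples and of their targets.

    The mixing weight lam is drawn from Beta(mixup_alpha, mixup_alpha);
    inputs and targets are flat lists of floats for illustration.
    """
    lam = rng.betavariate(mixup_alpha, mixup_alpha)
    x = [lam * a + (1.0 - lam) * b for a, b in zip(x1, x2)]
    y = [lam * a + (1.0 - lam) * b for a, b in zip(y1, y2)]
    return x, y, lam


if __name__ == "__main__":
    rng = random.Random(0)  # seeded for reproducibility
    y_cat, y_dog = smooth_one_hot(0, 2), smooth_one_hot(1, 2)
    x, y, lam = mixup([1.0, 0.0], y_cat, [0.0, 1.0], y_dog, rng=rng)
    print(f"lam={lam:.3f} x={x} y={y}")
```

In a real training loop, mixup is usually applied across a batch by pairing each example with another one from a shuffled copy of the same batch, and the loss is computed as cross-entropy against the mixed soft targets.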

```bibtex
@article{chen2019drop,
  title={Drop an Octave: Reducing Spatial Redundancy in Convolutional Neural Networks with Octave Convolution},
  author={Chen, Yunpeng and Fan, Haoqi and Xu, Bing and Yan, Zhicheng and Kalantidis, Yannis and Rohrbach, Marcus and Yan, Shuicheng and Feng, Jiashi},
  journal={Proceedings of the IEEE International Conference on Computer Vision},
  year={2019}
}
```

- PyTorch implementation with ImageNet training log and pre-trained model by d-li14

- MXNet implementation with ImageNet training log by terrychenism

- Keras implementation with CIFAR-10 results by koshian2

- Thanks to MXNet, Gluon-CV, and TVM!

- Thanks to @Ldpe2G for sharing the code for calculating #FLOPs.

- Thanks to Min Lin (Mila), Xin Zhao (Qihoo Inc.), and Tao Wang (NUS) for helpful discussions on the code development.

[1] He K, et al. "Identity Mappings in Deep Residual Networks."

[2] Szegedy C, et al. "Rethinking the Inception Architecture for Computer Vision."

[3] Zhang H, et al. "mixup: Beyond Empirical Risk Minimization."

The code and the models are MIT licensed, as found in the LICENSE file.