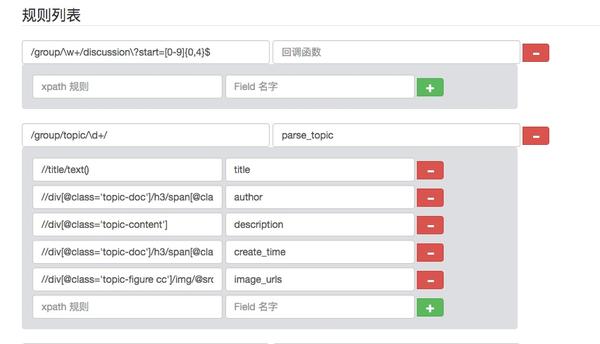

Dynamically configurable crawler

```shell
git clone git@github.com:facert/scrapy_helper.git && cd scrapy_helper
virtualenv .env
source .env/bin/activate
pip install -r requirements.txt
python manage.py migrate
python manage.py runserver
```

- open http://127.0.0.1:8000/ in your browser

- log in with the test account (username: `demo`, password: `demo`)
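If you script these steps, opening the browser too early will fail while `runserver` is still starting. Below is a minimal sketch, using only the Python standard library, that polls the development server until it answers. `wait_for_server` is a hypothetical helper and is not part of scrapy_helper. It assumes the default Django development address http://127.0.0.1:8000/.

```python
import time
import urllib.error
import urllib.request


def wait_for_server(url: str = "http://127.0.0.1:8000/",
                    timeout: float = 30.0,
                    interval: float = 0.5) -> bool:
    """Poll `url` until it answers an HTTP request or `timeout` seconds pass.

    Hypothetical helper (not part of scrapy_helper). Returns True as soon as
    the server responds with any HTTP status, and False if it never does.
    """
    deadline = time.monotonic() + timeout
    while True:
        try:
            with urllib.request.urlopen(url, timeout=interval):
                return True
        except urllib.error.HTTPError:
            # HTTPError subclasses URLError, so it must be caught first.
            # An error status (e.g. 404) still means something is listening.
            return True
        except (urllib.error.URLError, OSError):
            # Connection refused or timed out: the server is not ready yet.
            pass
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)
```

Once `wait_for_server()` returns `True`, you can pass the same URL to the standard-library `webbrowser.open()` to open the login page.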