White Pages Application

Functionality

- Third party service, socket at fixed address/port, one connection at a time

- Multiple seq requests/replies can be sent over active connection

- Third party suggests keeping the socket open all day and pouring traffic through it

- White page service - look up phone number given name, or name given phone number

- Requests can be generated by anyone in our company

- Users enter request on workstation and see response when available

Questions

- How would software be structured

- What are classes and responsibilities of each

- Is design biased towards performance, maintainability, extensibility, or ease of development

- What would alternative design look like, which is better

- Code up a few classes and methods (50 - 200 lines of code)

Initial thoughts on the problem

Some initial thoughts, literally, in no particular order

- We have a single point of failure and serialized access to an external service

- What happens when the machine where the external service is running fails?

- If connection fails we need to bring it up again, quickly

- Consider cache to reduce load on service and improve performance

- Getting a very fast reply does not seem important - we are not talking ms timings here

- Blocking versus non-blocking/asynchronous call

- Design question is biased towards class design. I'd also like to focus on system design, which will drive what functionality our Java code will need to provide

Some initial questions

Questions I'd take to relevant stakeholders

Application

- What type of application do we want to present to users - e.g. web page

- No mention about security, is authentication required

- What sort of volume do we expect from users - if this is in some sort of call centre, it is going to be high; if used for corporate directory type searches then relatively low

- What is SLA in terms of throughput, latency, up-time. e.g. max latency that would be acceptable?

- See next section for more questions around functionality

Third Party Service

- Seriously discuss getting additional connection(s) to external services running on different hosts, different data centres, even different regions. We need better resiliency to failures on their end, otherwise our clients are going to be hit hard as service availability degrades/vanishes

- At a minimum, can we get a failover URI, and would it be hot

- What SLA is in place with third party service? This will constrain our SLA

- Need more details on API, is there a client lib for example

Runtime environment

- What HW is available, how many servers, and specs

- Do we have a container based infrastructure in place, any cloud accounts (on or off premise)

Costs/Time

- What budget do we have for overall project - will influence some of the architectural decisions and determine size of team

- Timeline for project

A deeper look into White page service

Some clarification needed around requirements...

Searching By Name

- Do we require exact match or fuzzy match. e.g. will searching for "Nik Stehr" yield same result as "Nikolas Stehr", and how would it handle middle names like "Nikolas Friedrich Stehr", what about wildcard style searches like "Stehr"

- Ideally all of above searches would be supported, but the question should be posed to stakeholders

- API has to indicate when multiple possible matches

- Does API support distinction between first and last name

- Ideally these features are supported by external service, if not, we could implement something ourselves, depends on API of external service - if it can give us all of the names, we can build relevant data structures with a one to many relationship between user entered name and possible matching names, and for each of these call the external service for the number (we could even start to pre-cache)
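The one-to-many structure in the last point could be sketched as below. This is only a sketch under assumptions: the external service can hand us the full list of names (not confirmed), and `NameIndex` is a hypothetical class of mine. It matches whole name tokens only, so "Stehr" finds every Stehr, but "Nik" will not find "Nikolas" without extra nickname or prefix handling.

```java
import java.util.Collections;
import java.util.HashMap;
import java.util.Locale;
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

/** Hypothetical index from a name token (e.g. "stehr") to every full name containing it. */
final class NameIndex {
    private final Map<String, Set<String>> namesByToken = new HashMap<>();

    /** Registers a full name under each of its whitespace-separated tokens. */
    void add(String fullName) {
        for (String token : tokens(fullName)) {
            namesByToken.computeIfAbsent(token, t -> new TreeSet<>()).add(fullName);
        }
    }

    /** Returns the full names that contain every token of the query (case-insensitive). */
    Set<String> candidates(String query) {
        Set<String> result = null;
        for (String token : tokens(query)) {
            Set<String> matches = namesByToken.getOrDefault(token, Collections.<String>emptySet());
            if (result == null) {
                result = new TreeSet<>(matches);
            } else {
                result.retainAll(matches);
            }
        }
        return result == null ? Collections.<String>emptySet() : result;
    }

    private static String[] tokens(String text) {
        String trimmed = text.trim().toLowerCase(Locale.ROOT);
        return trimmed.isEmpty() ? new String[0] : trimmed.split("\\s+");
    }
}
```

Each candidate returned would then be sent to the external service for its number, or served from a cache if we have one.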

Searching by number

- How to take into account formatting convention around telephone numbers

- Convention varies by country, see https://en.wikipedia.org/wiki/National_conventions_for_writing_telephone_numbers

- Given the country, application could apply formatting rules and indicate if error was made entering number

- Anything built into service to validate numbers
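A minimal sketch of normalising and validating a number before it goes anywhere near the external service. The rules here are assumptions for illustration only: UK-style national numbers (like the 07976376509 used in the examples), `+44` as the only international prefix handled, and a hypothetical `PhoneNumbers` helper. Real rules are per country and would need confirming.

```java
import java.util.Optional;

/** Hypothetical helper: strips common formatting and applies a simple UK-style rule. */
final class PhoneNumbers {
    private PhoneNumbers() {}

    /** Returns the number as digits in national format, or empty if it cannot be valid. */
    static Optional<String> normalise(String raw) {
        if (raw == null) {
            return Optional.empty();
        }
        // Remove spaces, dashes, dots and brackets that users commonly type.
        String digits = raw.trim().replaceAll("[\\s\\-.()]", "");
        // Assumption: +44 is the only international prefix we convert.
        if (digits.startsWith("+44")) {
            digits = "0" + digits.substring(3);
        }
        // Assumption: a national number is a 0 followed by 9 or 10 digits.
        return digits.matches("0\\d{9,10}") ? Optional.of(digits) : Optional.empty();
    }
}
```

An empty result is the point at which the application can tell the user the number was entered incorrectly, without spending a call on the single connection.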

Bulk/batch API

- Does the external service handle bulk searches - some of use cases above would benefit from this feature to reduce network traffic/latency

Patterns

Some patterns I think are important here, several from world of cloud, but equally applicable in non-cloud distributed application. They capture main concerns around:

- Availability

- Resiliency

- Management and Monitoring

- Performance

Availability Patterns

Availability - proportion of time system is functional and working (usually measured as % of uptime)

This is a service with users who will be sensitive to high latency - determine Service level agreement (SLA), design to maximize availability wrt SLA.

Throttling

Control the consumption of resources used by an instance of an application

The load on application will vary over time based on the number of active users, for example, more users are likely to be active during business hours. There might also be sudden and unanticipated bursts in activity. If the processing requirements of the system exceed the capacity of the resources that are available, it'll suffer from poor performance and could even fail. Strategies available for handling varying load:

- Auto scaling to match the provisioned resources to the user needs - if we're going down the container route this is an option

- Throttling - allow applications to use resources only up to a limit, and then throttle them when this limit is reached.

How to implement throttling?

- Reject requests from an individual user who's already accessed system APIs more than n times per second over a given period of time.

- Using load levelling to smooth the volume of activity

- Others...

Is this overkill for our application? Not necessarily. If someone hooks up the API call into a script or their own application that generates a very high volume of requests in a short period of time, or even if they just sit there and hit refresh lots of times, we don't want other users to be impacted as a result - they would effectively be starved until all the other requests had gone through.

(This is a Scalability pattern as well)
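One way to implement the per-user limit is a token bucket, sketched below. The class name `UserRateLimiter`, the capacity and the refill rate are all illustrative assumptions, not part of the requirements. A caller that gets `false` back would be answered with a "Too Many Requests" error.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

/** Hypothetical per-user token bucket: bursts up to capacity, refilled at a steady rate. */
final class UserRateLimiter {
    private static final class Bucket {
        double tokens;
        long lastRefillNanos;

        Bucket(double tokens, long nowNanos) {
            this.tokens = tokens;
            this.lastRefillNanos = nowNanos;
        }
    }

    private final int capacity;
    private final double refillPerSecond;
    private final Map<String, Bucket> buckets = new ConcurrentHashMap<>();

    UserRateLimiter(int capacity, double refillPerSecond) {
        this.capacity = capacity;
        this.refillPerSecond = refillPerSecond;
    }

    /** Returns true if the request may proceed, false if the user should be throttled. */
    boolean tryAcquire(String userId) {
        return tryAcquire(userId, System.nanoTime());
    }

    /** Same as above with an explicit clock reading, which makes the behaviour testable. */
    boolean tryAcquire(String userId, long nowNanos) {
        Bucket bucket = buckets.computeIfAbsent(userId, id -> new Bucket(capacity, nowNanos));
        synchronized (bucket) {
            // Top the bucket up for the time elapsed since the last request.
            double elapsedSeconds = (nowNanos - bucket.lastRefillNanos) / 1_000_000_000.0;
            bucket.tokens = Math.min(capacity, bucket.tokens + elapsedSeconds * refillPerSecond);
            bucket.lastRefillNanos = nowNanos;
            if (bucket.tokens >= 1.0) {
                bucket.tokens -= 1.0;
                return true;
            }
            return false;
        }
    }
}
```

Because each user has their own bucket, one user hammering refresh runs out of tokens without affecting anyone else.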

Load balance

Distribute traffic that arrives from client requests to 'backend' service(s). Typically multiple instances of a service will be running, with requests sent to them from a load balancer using some sort of algorithm (simple - round robin). Balancer will use health checks to determine whether to route to given instance or not.

In our case, we need to start to think about application architecture, and what instances of what will be running. Do we even need a load balancer? It depends; ideally we have some sort of redundancy built into our architecture. However, the external service is effectively a singleton resource: the app that owns the connection cannot be scaled out, otherwise we'd open multiple connections when we have been informed only one is permitted.

Our application needs to do more than just call the external API though, it will also (as discussed in Performance Patterns section) apply caching techniques, perform input validation, and so on. So a given request may not even require the external service if result already cached (or user enters invalid search). This is an important observation.

Let's separate out the concerns here. We can have multiple instances of an application running that handle incoming client requests and do things like caching, and that share the single connection to the external service - but how do they share the connection across JVMs? We have to extract out another type of service that acts as a proxy to the external service.

Feels a bit like over-architecting, but all good things to consider.

(This is a Resiliency Pattern as well)

Resiliency Patterns

Gracefully handle and recover from failures. Given we are dependent on an external service and have a single connection, this is particularly important

Retry

Enable application to handle temporary failures when it attempts to connect to a service or network resource by transparently retrying an operation that has previously failed a certain number of times, or until a configured time-out period elapses in the expectation that the cause of the failure is transient

We may get such a failure if we get an error from the external service, or it could be a network issue such as a corrupt packet.

We may need to retry after a delay, e.g. connectivity issues with the external service which can be corrected by recreating our connection.
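A minimal sketch of retry with a fixed delay. `Retry.withRetry` is a hypothetical helper, and the attempt count and delay are assumptions to be tuned against the SLA. It assumes failures surface as unchecked exceptions (e.g. an `IOException` wrapped in `UncheckedIOException`). In code we would more likely reach for an existing library than write our own.

```java
import java.util.function.Supplier;

/** Hypothetical helper: retries an operation a fixed number of times with a fixed delay. */
final class Retry {
    private Retry() {}

    static <T> T withRetry(Supplier<T> operation, int maxAttempts, long delayMillis) {
        if (maxAttempts < 1) {
            throw new IllegalArgumentException("maxAttempts must be at least 1");
        }
        RuntimeException last = null;
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            try {
                return operation.get();
            } catch (RuntimeException e) {
                last = e; // remember the failure, it may be transient
            }
            if (attempt < maxAttempts) {
                try {
                    Thread.sleep(delayMillis); // give the connection time to be recreated
                } catch (InterruptedException ie) {
                    Thread.currentThread().interrupt();
                    throw new IllegalStateException("Interrupted while waiting to retry", ie);
                }
            }
        }
        throw last; // every attempt failed, report the most recent cause
    }
}
```

Retrying blindly against a service that is really down just adds load, which is where the next pattern comes in.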

Circuit Breaker

Handle faults that might take a variable amount of time to recover from, when connecting to a remote service or resource

Too many failed requests can cause a bottleneck, as pending requests accumulate in the queue. These blocked requests might hold system resources such as memory, threads, and so on, which can cause cascading failures. This pattern can prevent a service from repeatedly trying an operation that is likely to fail.

We may get such a failure if the external service is unavailable (we get an IOException and can't open the socket again). We have no alternative (unless the result is in a cache), so all we can do is report the exception to the user and ask them to try again soon.
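A stripped-down sketch of the idea, assuming a failure threshold and cool-down period that we would need to agree on. `CircuitBreaker` and `CircuitOpenException` are hypothetical names of mine; a library such as resilience4j offers a production-grade version. The time is passed in explicitly purely to keep the sketch easy to test.

```java
import java.util.function.Supplier;

/** Thrown instead of calling the external service while the circuit is open. */
final class CircuitOpenException extends RuntimeException {
    CircuitOpenException(String message) {
        super(message);
    }
}

/** Hypothetical breaker: opens after N consecutive failures, allows a trial call after a cool-down. */
final class CircuitBreaker {
    private final int failureThreshold;
    private final long coolDownMillis;
    private int consecutiveFailures;
    private boolean open;
    private long openedAtMillis;

    CircuitBreaker(int failureThreshold, long coolDownMillis) {
        this.failureThreshold = failureThreshold;
        this.coolDownMillis = coolDownMillis;
    }

    /** Calls are serialized here, which matches the one-connection constraint. */
    synchronized <T> T call(Supplier<T> operation, long nowMillis) {
        if (open && nowMillis - openedAtMillis < coolDownMillis) {
            // Fail fast: do not queue up behind a service we believe is down.
            throw new CircuitOpenException("External service unavailable, try again soon");
        }
        try {
            T result = operation.get(); // closed, or half-open trial call
            consecutiveFailures = 0;
            open = false;
            return result;
        } catch (RuntimeException e) {
            consecutiveFailures++;
            if (consecutiveFailures >= failureThreshold) {
                open = true;
                openedAtMillis = nowMillis;
            }
            throw e;
        }
    }
}
```

While the circuit is open, callers fail immediately rather than piling up threads and memory behind a socket that is not coming back.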

Performance Patterns

The external service introduces a point of serialized access, whereby multiple simultaneous requests may result in a queue developing as individual requests are processed. It's not known what the throughput of the external service is (yet), and the network hop alone will add a chunk of time to each request.

Introducing a cache will improve overall performance - if the same requests are generated. That’s a big if actually: assuming our cache is relatively short lived, what is the probability of users requesting the same thing? Some names will be searched more, e.g. the company CEO, but consideration needs to be given to the increased complexity of adding a cache.

Cache-Aside

- Load data on demand into a cache from external service

- Implement strategy to ensure that data in the cache is as up-to-date as possible

- Read-through and write-through/write-behind operations

- Consider: lifetime of cached data and when to evict, priming the cache, consistency, and local (in-memory) caching

Adding a cache does seem like a no-brainer. It also protects us if the external service is temporarily unavailable and the result of a request is available in the cache - it addresses availability concerns.

(this is a data management pattern as well)
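A minimal in-memory sketch of cache-aside with a time-to-live. `TtlCache` is a hypothetical class, the TTL value is an assumption, and the `loader` function stands in for the call to the external service. It has no size bound or eviction beyond expiry, which a library like Caffeine would give us.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Function;

/** Hypothetical cache-aside helper: load on miss, treat entries older than the TTL as stale. */
final class TtlCache<K, V> {
    private static final class Entry<V> {
        final V value;
        final long expiresAtMillis;

        Entry(V value, long expiresAtMillis) {
            this.value = value;
            this.expiresAtMillis = expiresAtMillis;
        }
    }

    private final Map<K, Entry<V>> entries = new ConcurrentHashMap<>();
    private final long ttlMillis;
    private final Function<K, V> loader;

    TtlCache(long ttlMillis, Function<K, V> loader) {
        this.ttlMillis = ttlMillis;
        this.loader = loader;
    }

    V get(K key) {
        return get(key, System.currentTimeMillis());
    }

    /** Same as above with an explicit clock reading, which makes expiry testable. */
    V get(K key, long nowMillis) {
        Entry<V> cached = entries.get(key);
        if (cached != null && nowMillis < cached.expiresAtMillis) {
            return cached.value; // hit: no call to the external service
        }
        V loaded = loader.apply(key); // miss or stale: go to the external service
        entries.put(key, new Entry<>(loaded, nowMillis + ttlMillis));
        return loaded;
    }
}
```

Two concurrent misses for the same key may both call the loader; that is acceptable for a sketch but worth tightening given the serialized connection.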

Management and Monitoring Patterns

Health endpoint monitoring

Implement functional checks that external tools can access through exposed endpoints at regular intervals

Healthy means available and not performing poorly. If our application/service or the external service becomes unhealthy and can therefore no longer service requests adequately, we need to know about it so we can do something, e.g. restart the process (ideally in an automated manner).

Performance Monitoring

Not a pattern as such. Monitor application performance and behaviour under load to give real-time insight into the application, and use it to diagnose issues and perform root-cause analysis of failures. Tools like Prometheus and Grafana can help provide time series metrics and dashboards.

So Just How would software be structured architecturally?

A reminder of the gist of the application - requirement that 'Users enter request on workstation and see response when available' and an external remote service with a single connection that provides the result. So we have a client part to the application and some sort of 'back end' service that will communicate with the third party external service.

We've looked at the important patterns that address main concerns around availability and so on, so any solution has to make it easy to apply these.

Separation of concerns and clean architecture are important to support maintainability and extensibility. The question asked whether the design is biased towards these two goals. I'd say that maintainability is something that should never be compromised, whilst extensibility is preferred to avoid changes rippling out across the system (same principle applies to any code).

Let's look at the client and the service in turn.

Client

The client will need to connect to something else on the network that maintains the single connection to the external service. We also need somewhere to apply input validation (e.g. format of telephone number entered into search), and start to think about some of other concerns like caching and retry. Possible options:

- Fat client, installed on the user workstation

- Thin client, in the web browser

- Plugin, e.g. into Excel

- Client library integrated into an existing application installed on the user's workstation

We don't know right now which of the above solutions will be required. My guidance to stakeholders would be to avoid a fat client, for lots of reasons, not least to avoid the hassle of installing and maintaining client software (and finding a developer with experience of Swing or similar, as opposed to web dev).

We can abstract away specific details of the client and provide a solution that could be easily used by current and future clients by using a microservice based approach.

A microservice based service approach

https://12factor.net/ summarises some of the benefits of microservices nicely - goal is to provide robust microservices based apps optimized for continuous delivery.

The microservice would encapsulate logic to connect to the external service and expose HTTP end points like below, with some example responses:

Search by number

/white-pages/v1/?number=07976376509

Example response:

    {
      "data": {
        "name": "Nik Stehr",
        "number": "07976376509"
      },
      "links": {
        "self": "http://internal.address.com/white-pages/v1/?number=07976376509"
      }
    }

Search by name

/white-pages/v1/?name=Stehr

Example response - assumes one number per name here; in reality a person may have more than one number:

    {
      "data": [
        {
          "name": "Nik Stehr",
          "number": "07976376509",
          "links": {
            "self": "http://internal.address.com/white-pages/v1/?name=Nik+Stehr"
          }
        },
        {
          "name": "Marlies Stehr",
          "number": "07982132456",
          "links": {
            "self": "http://internal.address.com/white-pages/v1/?name=Marlies+Stehr"
          }
        }
      ],
      "links": {
        "self": "http://internal.address.com/white-pages/v1/?name=Stehr"
      }
    }

Alternative Search by name

Pending info on external API

/white-pages/v1/?first_name=Nik&last_name=Stehr

Error Responses

Number not found:

    {
      "error": {
        "code": 404,
        "message": "Number not found"
      }
    }

Something went seriously wrong:

    {
      "error": {
        "code": 503,
        "message": "External Service Unavailable <some more info on what to do next>"
      }
    }

Throttling:

    {
      "error": {
        "code": 429,
        "message": "Too Many Requests"
      }
    }

Health

/white-pages/v1/health

Response:

    {
      "data": {
        "code": 200,
        "message": "OK"
      }
    }

How to apply our patterns?

Reverse Proxy

Some of these concerns can be handed off to a separate reverse proxy such as nginx (https://www.nginx.com/). I've picked nginx here, though there are alternatives (e.g. Apache, Jetty). Everything except Circuit Breaker is supported in the open source (free) version of nginx.

- Availability/Throttling - see https://www.nginx.com/blog/rate-limiting-nginx/

- Resiliency/Load balance - see http://nginx.org/en/docs/http/load_balancing.html

- Resiliency/Retry - see http://nginx.org/en/docs/http/load_balancing.html#nginx_load_balancing_health_checks

- Resiliency/Circuit Breaker - doable in NGINX Plus, which will incur a licensing cost.

- Performance/Cache - setting up a disk cache lets nginx serve repeated requests for the same content without proxying each one: https://docs.nginx.com/nginx/admin-guide/content-cache/content-caching/.

So, for example, a call to /white-pages/v1/?name=Stehr will be cached after the 1st call, with nginx delivering the cached value for subsequent requests to the same end-point.

Other approaches

Availability and Resiliency patterns can be implemented in code via Java APIs such as https://github.com/resilience4j/resilience4j, whilst many app frameworks support these features as well, e.g. vert.x https://vertx.io/docs/vertx-circuit-breaker/java/

Performance/Cache could be implemented in a few other ways, e.g.:

- In-process/JVM-based cache that sits with the microservice (e.g. Guava or its replacement Caffeine, or just a good old HashMap). Good: simple. Bad: if the Java process dies we lose the cache, and if we have multiple Java processes, each will have its own copy of the cache

- External cache (in-memory data grid like Hazelcast or distributed key/value store like Redis) that is referenced in Java code. Good: cache is durable across JVM restarts. Bad: complicates architecture

Management and Monitoring/Health endpoint monitoring

Built into microservice as a REST endpoint

Asynchronous versus blocking

Should we have a blocking request/response, or something more asynchronous where a request is fired off and a message is sent back to the client when available? The latter approach is generally more complex and will require some sort of messaging or event-bus layer. Given our point of serialisation and the potential for a high number of blocked requests, an asynchronous approach may be a more practical design here. We can find a middle ground: keep request/response but introduce a callback for the response when the result is ready. The main benefit here is that we won't block the thread(s) handling incoming requests. This approach also means we don't need to introduce a separate messaging/event-bus layer.
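The middle ground can be sketched with plain JDK classes: a single worker thread owns the connection, and callers get a `CompletableFuture` to attach a callback to. `SerializedLookup` is a hypothetical name, and the `blockingCall` function stands in for the real socket round trip. With vert.x the same shape would be expressed with its own future/handler types.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.function.Function;

/** Hypothetical wrapper: funnels all lookups through one thread, callers never block. */
final class SerializedLookup implements AutoCloseable {
    // One thread means at most one request is on the external connection at a time.
    private final ExecutorService connectionThread = Executors.newSingleThreadExecutor(runnable -> {
        Thread thread = new Thread(runnable, "external-connection");
        thread.setDaemon(true);
        return thread;
    });
    private final Function<String, String> blockingCall;

    SerializedLookup(Function<String, String> blockingCall) {
        this.blockingCall = blockingCall;
    }

    /** Queues the lookup and returns immediately; the future completes when the reply arrives. */
    CompletableFuture<String> lookup(String query) {
        return CompletableFuture.supplyAsync(() -> blockingCall.apply(query), connectionThread);
    }

    @Override
    public void close() {
        connectionThread.shutdown();
    }
}
```

A request handler would call `lookup(query).thenAccept(...)` to write the response when ready. The executor's queue doubles as the load-levelling buffer mentioned under throttling, so it probably wants a bound in a real implementation.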

Containerised versus non-containerised

If containerised environment available, we should definitely use it, if not, we should put forward a compelling case for why it should be used.

Some good things (from my direct experience):

- Self service envs - super quick for anyone to carve out isolated env

- Single concern - similar to 'S' in SOLID, containers generally do one thing, with cloud patterns like sidecar and ambassador to handle multiple concerns

- Image immutability - once built it's not changed between different envs (dev/int/prod), which means no surprises when deploying to prod because someone made a manual edit to the version in int!

- Self containment - a container contains everything it needs at build time; runtime should only be adding config and storage, so no surprises when an app fires up because we have a different version of a lib!

- Declarative application topology - describe how services should be deployed, dependencies on other services, and so on

- Declarative runtime confinement - declare resource requirements (CPU, Mem, Volumes) up front

- Declarative deployment - encapsulated upgrade and rollback process - I love this, as makes deployment super simple, and easy to change strategy (rolling, blue/green, canary)

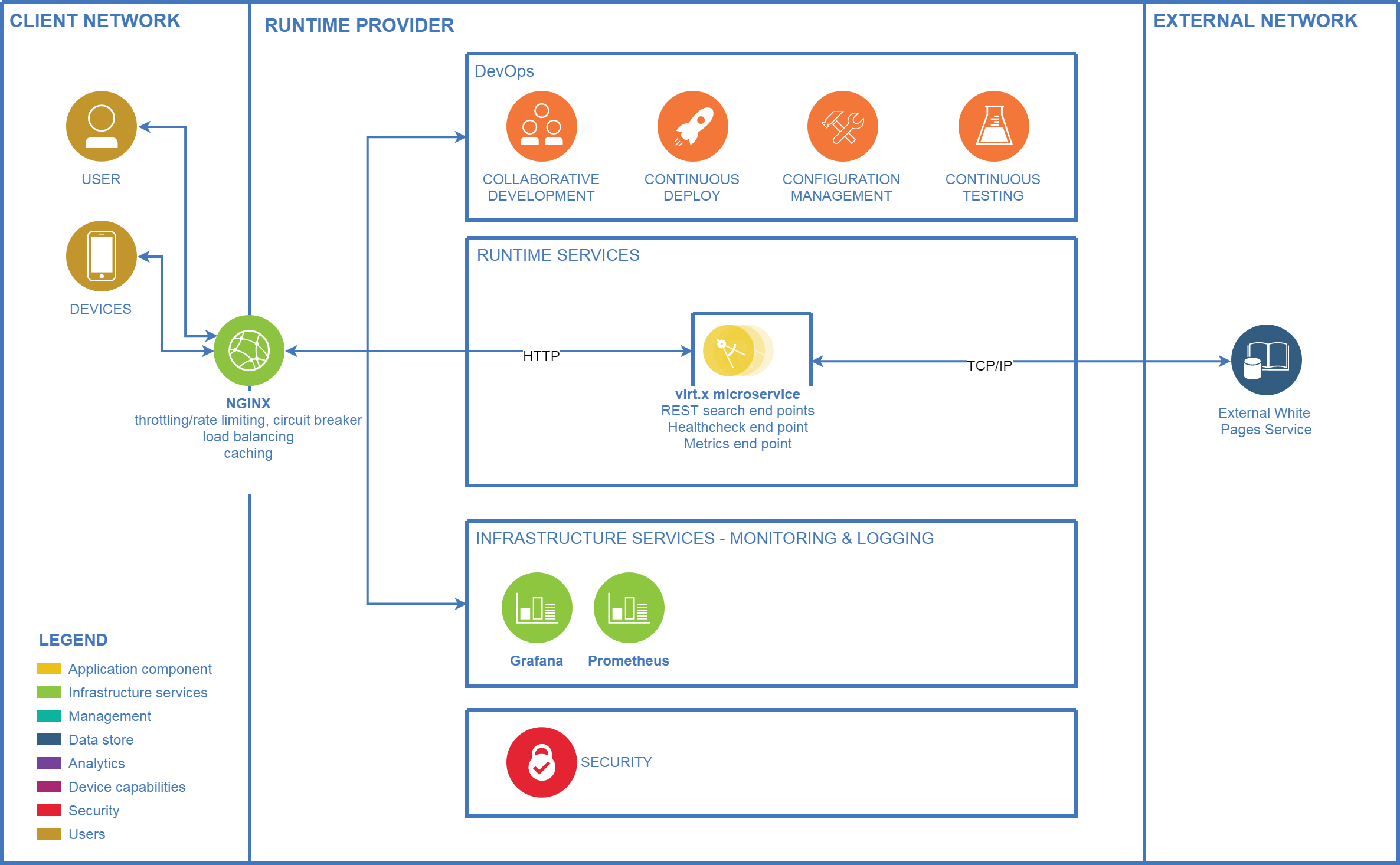

System Diagram

One possible design open for discussion:

- use nginx to handle availability & resiliency concerns, note we only have a single instance of the microservice so no load balancing

- nginx also handles caching to improve performance (and resiliency to failure)

- single vert.x instance of the microservice which exposes (RESTful) search endpoints and owns the connection to the external service

- some sort of orchestration needed to ensure a healthy instance of the microservice is always available - the /healthcheck end-point can be used to determine this; this is the sort of thing a container-orchestration system like kubernetes does out of the tin

- prometheus ingests data from the /metrics end-point on the microservice and stores it in its timeseries database

- grafana provides analytics and monitoring on top of prometheus

- consider adding a log aggregation app like fluentd to stream logs from nginx and vert.x

(derived from AWS draw.io library)

Class design/OO

Make sure good principles of sw design are applied. KISS, DRY, YAGNI, SoC, SOLID.

Let's model things around the domain.

- This is a search application, we can search by name or number, so need a Name and Number interface to represent these

- A factory (possibly via a factory method?) will be required to create concrete instances from user provided String

- We will need to validate these, so some sort of validator will be needed, a NameValidator and NumberValidator, obtained via a ValidatorFactory

- A name or number may be able to validate itself if it improves the design, be careful not to break the 'O' of SOLID though, and inject a relevant validator in

- We should have something to represent our search request, a DirectoryLookupRequest, it will have a handle on a (validated) Name or Number

- Send DirectoryLookupRequest to something that is going to help execute the query and return a result (via callback): a DirectoryLookup

- We'll get back a DirectoryLookupResponse, it will have references to Names and Numbers, or something representing an error

- We'll need something to proxy the external service, an ExternalDirectoryLookup, it will need to consider itself as a singleton to ensure only one connection opened

- A DirectoryLookup will need to have a ref to the ExternalDirectoryLookup

- TODO - continue....
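A first, deliberately small sketch of how these classes might hang together, open for discussion rather than a finished design. Assumptions of mine: `SearchKey` and `DirectoryEntry` are extra types not in the list above, validation lives in the factory methods rather than separate validator classes, the number type is called `PhoneNumber` to avoid shadowing `java.lang.Number`, and the callback is invoked synchronously for simplicity.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.function.Consumer;

/** A validated search key: either a Name or a PhoneNumber. */
interface SearchKey {
    String value();
}

final class Name implements SearchKey {
    private final String value;
    private Name(String value) { this.value = value; }

    /** Factory method: a Name is always valid once created. */
    static Name of(String raw) {
        if (raw == null || raw.trim().isEmpty()) {
            throw new IllegalArgumentException("Name must not be blank");
        }
        return new Name(raw.trim());
    }

    @Override public String value() { return value; }
}

final class PhoneNumber implements SearchKey {
    private final String value;
    private PhoneNumber(String value) { this.value = value; }

    /** Factory method: keeps digits only; the minimum length is a placeholder rule. */
    static PhoneNumber of(String raw) {
        String digits = raw == null ? "" : raw.replaceAll("\\D", "");
        if (digits.length() < 7) {
            throw new IllegalArgumentException("Not a phone number: " + raw);
        }
        return new PhoneNumber(digits);
    }

    @Override public String value() { return value; }
}

final class DirectoryEntry {
    final Name name;
    final PhoneNumber number;
    DirectoryEntry(Name name, PhoneNumber number) { this.name = name; this.number = number; }
}

final class DirectoryLookupRequest {
    final SearchKey key;
    DirectoryLookupRequest(SearchKey key) { this.key = key; }
}

final class DirectoryLookupResponse {
    final List<DirectoryEntry> entries; // empty on error or when nothing matched
    final String error;                 // null on success

    private DirectoryLookupResponse(List<DirectoryEntry> entries, String error) {
        this.entries = Collections.unmodifiableList(new ArrayList<>(entries));
        this.error = error;
    }

    static DirectoryLookupResponse success(List<DirectoryEntry> entries) {
        return new DirectoryLookupResponse(entries, null);
    }

    static DirectoryLookupResponse failure(String error) {
        return new DirectoryLookupResponse(Collections.<DirectoryEntry>emptyList(), error);
    }
}

/** Proxy to the third party service; the real implementation owns the single socket. */
interface ExternalDirectoryLookup {
    List<DirectoryEntry> query(SearchKey key);
}

final class DirectoryLookup {
    private final ExternalDirectoryLookup external;
    DirectoryLookup(ExternalDirectoryLookup external) { this.external = external; }

    /** Executes the request and hands exactly one response to the callback. */
    void lookup(DirectoryLookupRequest request, Consumer<DirectoryLookupResponse> callback) {
        DirectoryLookupResponse response;
        try {
            response = DirectoryLookupResponse.success(external.query(request.key));
        } catch (RuntimeException e) {
            response = DirectoryLookupResponse.failure("External service unavailable");
        }
        callback.accept(response);
    }
}
```

Injecting `ExternalDirectoryLookup` as an interface keeps the "only one connection" rule in the wiring (create one instance, share it) rather than in a hard-coded singleton, and lets tests substitute a fake.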