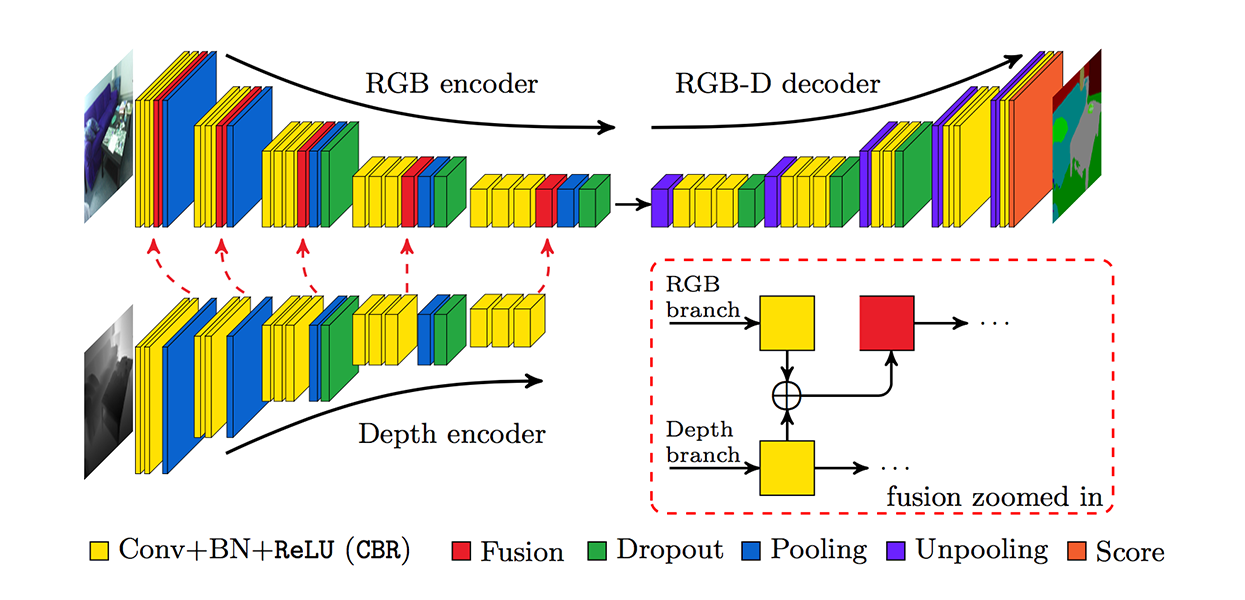

Joint scene classification and semantic segmentation using the FuseNet architecture from "FuseNet: Incorporating Depth into Semantic Segmentation via Fusion-based CNN Architecture". The repository tests how an additional scene classification loss affects overall semantic segmentation quality.
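As a rough illustration of what "additional scene classification loss" can mean, the sketch below sums a per-pixel segmentation cross-entropy and a per-image classification cross-entropy. It is a minimal NumPy sketch, not the code used in this repository: the function names and the `cls_weight` balancing factor are assumptions made for the example.

```python
import numpy as np


def cross_entropy(logits, targets):
    """Mean cross-entropy; logits has shape (N, C), targets has shape (N,)."""
    shifted = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    return -log_probs[np.arange(len(targets)), targets].mean()


def joint_loss(seg_logits, seg_labels, cls_logits, cls_labels, cls_weight=1.0):
    """Segmentation loss plus a weighted scene classification loss.

    seg_logits: (B, C_seg, H, W), seg_labels: (B, H, W)
    cls_logits: (B, C_cls),       cls_labels: (B,)
    cls_weight is a hypothetical balancing factor, not taken from the repo.
    """
    c_seg = seg_logits.shape[1]
    # Flatten the pixels so that every pixel counts as one sample.
    pixel_logits = seg_logits.transpose(0, 2, 3, 1).reshape(-1, c_seg)
    seg_loss = cross_entropy(pixel_logits, seg_labels.reshape(-1))
    cls_loss = cross_entropy(cls_logits, cls_labels)
    return seg_loss + cls_weight * cls_loss
```

Setting `cls_weight=0.0` recovers the plain segmentation loss, which gives a natural baseline when comparing segmentation quality with and without the classification term.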

- Python 2.7

- PyTorch 0.1.12 (CUDA 8.0 version)

- In addition, please run `pip install -r requirements.txt` to install the following packages:
  - TODO

1. Download the processed .h5py dataset with 40 annotations and 10 classes here: train + test set. (TODO: download the dataset automatically when running `Train_FuseNet.py` if it is not found.)

- To train FuseNet, run `Train_FuseNet.py`. The dataset choice is currently hard-coded in the script. The dataset is loaded and prepared by `utils/data_utils_class.py`, so make sure to set the correct path in the script. (TODO: pass these as arguments instead of editing the script manually.)

- Note: VGG weights are downloaded automatically at the beginning of training. The weights of the depth layers are also initialized from their VGG16 equivalents. For 'conv1_1', however, the weights are averaged over the three input channels to fit the one-channel depth input: (3, 3, 3, 64) -> (3, 3, 1, 64).

- To evaluate FuseNet results, locate the trained model file and use `FuseNet_Class_Plots.ipynb`.
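The 'conv1_1' averaging mentioned in the note above can be sketched as follows. This is a NumPy illustration that uses the (3, 3, 3, 64) kernel layout quoted in the note, with random values standing in for the pretrained VGG16 weights. The helper name `average_input_channels` is hypothetical, and the training script may implement this step differently.

```python
import numpy as np


def average_input_channels(rgb_weights, channel_axis=2):
    """Collapse the input-channel axis of a conv kernel to a single channel.

    With the (height, width, in_channels, out_channels) layout quoted in the
    note, the input channels sit on axis 2. PyTorch stores Conv2d weights as
    (out_channels, in_channels, height, width), where channel_axis=1 applies.
    """
    return rgb_weights.mean(axis=channel_axis, keepdims=True)


rng = np.random.RandomState(0)
conv1_1_rgb = rng.randn(3, 3, 3, 64)  # stand-in for pretrained conv1_1 weights
conv1_1_depth = average_input_channels(conv1_1_rgb)
print(conv1_1_depth.shape)  # (3, 3, 1, 64)
```

Averaging rather than summing keeps the magnitude of the depth filter responses comparable to those of the RGB filters.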

- Modularize the code
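The TODO about passing arguments to `Train_FuseNet.py` instead of editing the script could be addressed along the following lines. This is only a sketch with the standard `argparse` module: the flag names `--dataset` and `--data-path`, and the example values passed to them, are hypothetical and do not exist in the current script.

```python
import argparse


def parse_args(argv=None):
    """Parse hypothetical training options (none of these flags exist yet)."""
    parser = argparse.ArgumentParser(
        description="Hypothetical command-line interface for Train_FuseNet.py")
    parser.add_argument("--dataset", required=True,
                        help="name of the dataset to train on")
    parser.add_argument("--data-path", required=True,
                        help="path to the processed .h5py dataset file")
    return parser.parse_args(argv)


# Placeholder values, shown only to illustrate the call.
args = parse_args(["--dataset", "my_dataset", "--data-path", "data/train.h5"])
print(args.dataset, args.data_path)
```

The parsed path could then be handed to the loader in `utils/data_utils_class.py` in place of the hard-coded value.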

Caner Hazirbas, Lingni Ma, Csaba Domokos and Daniel Cremers, "FuseNet: Incorporating Depth into Semantic Segmentation via Fusion-based CNN Architecture", in Proceedings of the 13th Asian Conference on Computer Vision, 2016. (pdf)

@inproceedings{fusenet2016accv,
  author = "C. Hazirbas and L. Ma and C. Domokos and D. Cremers",
  title = "FuseNet: incorporating depth into semantic segmentation via fusion-based CNN architecture",
  booktitle = "Asian Conference on Computer Vision",
  year = "2016",
  month = "November",
}