Universal Adaptor: Converting Mel-Spectrograms Between Different Configurations for Speech Synthesis

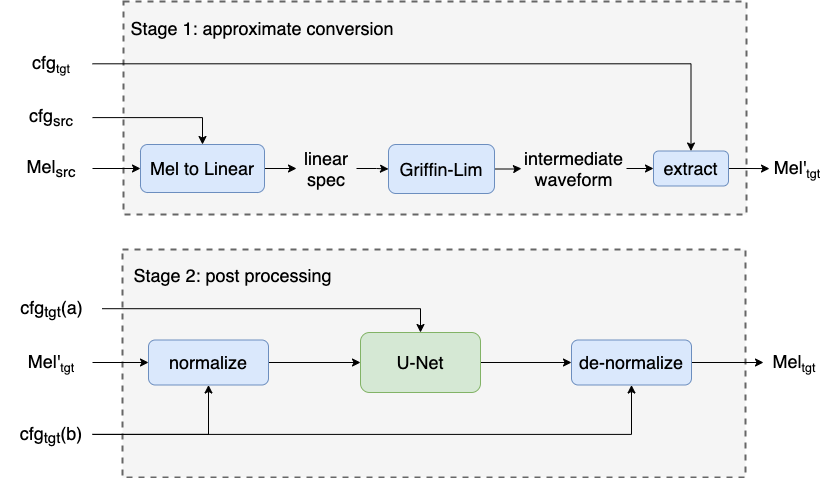

Abstract: Most recent TTS systems are composed of a synthesizer and a vocoder. However, existing synthesizers and vocoders only work with acoustic features extracted under a specific configuration. Hence, we cannot combine arbitrary synthesizers and vocoders into a complete TTS system, let alone apply them to newly developed models. In this paper, we propose a universal adaptor that takes a Mel-spectrogram parametrized by a source configuration and converts it into a Mel-spectrogram parametrized by a target configuration, given both the source and the target configurations as input. Experiments show that the quality of speech synthesized from the output of our universal adaptor is comparable to that of speech synthesized from ground-truth Mel-spectrograms. Moreover, our universal adaptor can be applied to recent TTS systems and to multi-speaker speech synthesis without degrading quality.
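A "configuration" here is the set of feature-extraction parameters behind a Mel-spectrogram. The sketch below uses a hypothetical `MelConfig` class, a hypothetical `mismatched_fields` helper, and illustrative values that are not taken from this repository's cfg files. It shows how two configurations can disagree on several parameters at once, which is why a synthesizer's output cannot simply be fed to an arbitrary vocoder.

```python
from dataclasses import dataclass, fields
from typing import List


@dataclass(frozen=True)
class MelConfig:
    """Hypothetical container for the parameters that define a Mel-spectrogram."""
    sample_rate: int
    n_fft: int
    hop_length: int
    win_length: int
    n_mels: int
    fmin: float
    fmax: float


def mismatched_fields(source: MelConfig, target: MelConfig) -> List[str]:
    """Return the names of the parameters on which two configurations disagree."""
    return [f.name for f in fields(MelConfig)
            if getattr(source, f.name) != getattr(target, f.name)]


# Illustrative values only; the repository's cfg1-cfg4 may use different settings.
synth_cfg = MelConfig(22050, 1024, 256, 1024, 80, 0.0, 8000.0)
vocoder_cfg = MelConfig(22050, 2048, 275, 1100, 80, 40.0, 11025.0)

print(mismatched_fields(synth_cfg, vocoder_cfg))
# ['n_fft', 'hop_length', 'win_length', 'fmin', 'fmax']
```

Any non-empty result means the vocoder would receive features it was not trained on.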

Visit our demo website for audio samples.

Installation

Install the requirements with pip:

pip install -r requirements.txt

How To Use

Train Your Own Model

All procedures are written in run.sh. Fill in the expected folder names and run:

bash run.sh

Inference with Pretrained Models

To skip training and run inference directly with the pretrained models, follow these two steps:

- Run Stage 1 with transform.py:

python3 transform.py -sc source_config -tc target_config -d data_dir -o out_dir

- Run Stage 2 with the Stage 2 part of run.sh. Fill in the expected folder names and run:

bash run.sh
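Stage 1 is run by transform.py from a source and a target configuration. For intuition only, the following is a minimal, purely signal-processing sketch of one way to map a log-Mel-spectrogram from one configuration to another; it is not necessarily what transform.py does. It assumes both configurations share the sample rate and FFT size, uses an HTK-style Mel scale, and the dictionary keys and function names (`mel_filterbank`, `convert_mel`) are hypothetical.

```python
import numpy as np


def hz_to_mel(f):
    # HTK-style Mel scale (an assumption of this sketch).
    return 2595.0 * np.log10(1.0 + np.asarray(f, dtype=float) / 700.0)


def mel_to_hz(m):
    return 700.0 * (10.0 ** (np.asarray(m, dtype=float) / 2595.0) - 1.0)


def mel_filterbank(sample_rate, n_fft, n_mels, fmin, fmax):
    """Triangular Mel filters with shape (n_mels, n_fft // 2 + 1)."""
    fft_freqs = np.linspace(0.0, sample_rate / 2.0, n_fft // 2 + 1)
    hz = mel_to_hz(np.linspace(hz_to_mel(fmin), hz_to_mel(fmax), n_mels + 2))
    left, center, right = hz[:-2, None], hz[1:-1, None], hz[2:, None]
    rising = (fft_freqs - left) / (center - left)
    falling = (right - fft_freqs) / (right - center)
    return np.maximum(0.0, np.minimum(rising, falling))


def _bank(cfg):
    return mel_filterbank(cfg["sample_rate"], cfg["n_fft"], cfg["n_mels"],
                          cfg["fmin"], cfg["fmax"])


def convert_mel(log_mel, src, tgt, eps=1e-5):
    """Map a natural-log Mel-spectrogram from `src` settings to `tgt` settings.

    Hypothetical restriction: both configs must share sample_rate and n_fft.
    """
    if (src["sample_rate"], src["n_fft"]) != (tgt["sample_rate"], tgt["n_fft"]):
        raise ValueError("this sketch only handles a shared sample_rate and n_fft")
    # 1) Approximate the linear magnitude spectrogram with the pseudo-inverse.
    linear = np.maximum(np.linalg.pinv(_bank(src)) @ np.exp(log_mel), 0.0)
    # 2) Project it onto the target Mel filters.
    mel = _bank(tgt) @ linear
    # 3) Resample frames from the source hop to the target hop (linear interpolation).
    src_times = np.arange(mel.shape[1]) * src["hop_length"]
    n_tgt = src_times[-1] // tgt["hop_length"] + 1
    tgt_times = np.arange(n_tgt) * tgt["hop_length"]
    mel = np.stack([np.interp(tgt_times, src_times, row) for row in mel])
    return np.log(np.maximum(mel, eps))


src = dict(sample_rate=22050, n_fft=1024, hop_length=256, n_mels=80, fmin=0.0, fmax=8000.0)
tgt = dict(sample_rate=22050, n_fft=1024, hop_length=275, n_mels=80, fmin=40.0, fmax=11025.0)
log_mel = np.log(np.random.default_rng(0).uniform(0.01, 1.0, size=(80, 87)))
converted = convert_mel(log_mel, src, tgt)
print(converted.shape)  # (80, 81)
```

The pseudo-inverse only approximately recovers the linear spectrogram, so a conversion like this loses spectral detail.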

Then, you can find the results in your output directory.

Other Files

generate.py: converts waveforms into acoustic features extracted with the configuration you choose.
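The kind of feature extraction generate.py performs can be illustrated with a self-contained NumPy sketch. The function names and parameter set are assumptions, not generate.py's actual interface; the Mel scale is HTK-style and the window is a symmetric Hann window, whereas common libraries such as librosa default to other variants.

```python
import numpy as np


def hz_to_mel(f):
    # HTK-style Mel scale (an assumption of this sketch).
    return 2595.0 * np.log10(1.0 + np.asarray(f, dtype=float) / 700.0)


def mel_to_hz(m):
    return 700.0 * (10.0 ** (np.asarray(m, dtype=float) / 2595.0) - 1.0)


def mel_filterbank(sample_rate, n_fft, n_mels, fmin, fmax):
    """Triangular filters with shape (n_mels, n_fft // 2 + 1)."""
    fft_freqs = np.linspace(0.0, sample_rate / 2.0, n_fft // 2 + 1)
    hz = mel_to_hz(np.linspace(hz_to_mel(fmin), hz_to_mel(fmax), n_mels + 2))
    bank = np.zeros((n_mels, fft_freqs.size))
    for i in range(n_mels):
        left, center, right = hz[i], hz[i + 1], hz[i + 2]
        rising = (fft_freqs - left) / (center - left)
        falling = (right - fft_freqs) / (right - center)
        bank[i] = np.maximum(0.0, np.minimum(rising, falling))
    return bank


def log_mel_spectrogram(wave, sample_rate, n_fft, hop_length, win_length,
                        n_mels, fmin, fmax, eps=1e-5):
    """Return a (n_mels, n_frames) natural-log Mel-spectrogram of a 1-D waveform."""
    # Center a Hann window of win_length samples inside an n_fft-long frame.
    pad = (n_fft - win_length) // 2
    window = np.pad(np.hanning(win_length), (pad, n_fft - win_length - pad))
    # Reflect-pad so that frame t is centered on sample t * hop_length.
    wave = np.pad(wave, n_fft // 2, mode="reflect")
    n_frames = 1 + (len(wave) - n_fft) // hop_length
    frames = np.stack([wave[t * hop_length:t * hop_length + n_fft] * window
                       for t in range(n_frames)])
    magnitude = np.abs(np.fft.rfft(frames, axis=1)).T  # (n_fft // 2 + 1, n_frames)
    mel = mel_filterbank(sample_rate, n_fft, n_mels, fmin, fmax) @ magnitude
    return np.log(np.maximum(mel, eps))


sr = 22050
wave = 0.5 * np.sin(2 * np.pi * 440.0 * np.arange(sr) / sr)  # one second of a 440 Hz tone
mel_a = log_mel_spectrogram(wave, sr, 1024, 256, 1024, 80, 0.0, 8000.0)
mel_b = log_mel_spectrogram(wave, sr, 2048, 275, 1100, 80, 40.0, 11025.0)
print(mel_a.shape, mel_b.shape)  # (80, 87) (80, 81)
```

With the same one second of audio, a hop length of 256 yields 87 frames while a hop length of 275 yields 81, so even the time axis differs between configurations.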

Configuration Tables

- LJSpeech

Source Configuration: cfg1, cfg2, cfg3, cfg4

Target Configuration (Vocoder): cfg1(WaveRNN), cfg2(WaveGlow), cfg3(HiFiGAN), cfg4(MelGAN)

- Text-to-Speech

Source Configuration (TTS): cfg1(Tacotron), cfg2(Tacotron 2), cfg3(FastSpeech 2)

Target Configuration (Vocoder): cfg1(WaveRNN), cfg2(WaveGlow), cfg3(HiFiGAN), cfg4(MelGAN)

- CMU_ARCTIC

Source Configuration: cfg1, cfg2, cfg3, cfg4

Target Configuration (Vocoder): cfg3(HiFiGAN)
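The configuration tables can also be written down as data, for example to script a sweep over conversion directions. The sketch below assumes, which the tables do not state, that every listed source configuration is paired with every listed target configuration; the variable and function names are hypothetical.

```python
from itertools import product

VOCODERS = {"cfg1": "WaveRNN", "cfg2": "WaveGlow", "cfg3": "HiFiGAN", "cfg4": "MelGAN"}
SYNTHESIZERS = {"cfg1": "Tacotron", "cfg2": "Tacotron 2", "cfg3": "FastSpeech 2"}

# Per table: (source configuration labels, target configuration labels).
TABLES = {
    "LJSpeech": (["cfg1", "cfg2", "cfg3", "cfg4"], list(VOCODERS.values())),
    "Text-to-Speech": (list(SYNTHESIZERS.values()), list(VOCODERS.values())),
    "CMU_ARCTIC": (["cfg1", "cfg2", "cfg3", "cfg4"], [VOCODERS["cfg3"]]),
}


def conversion_pairs(table):
    """All (source, target) combinations for one table, assuming a full cross product."""
    sources, targets = TABLES[table]
    return list(product(sources, targets))


for name in TABLES:
    print(name, len(conversion_pairs(name)))
# LJSpeech 16
# Text-to-Speech 12
# CMU_ARCTIC 4
```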

Reference Repositories for Configurations

- Efficient Neural Audio Synthesis (WaveRNN)

- WaveGlow: A Flow-based Generative Network for Speech Synthesis

- MelGAN: Generative Adversarial Networks for Conditional Waveform Synthesis

- HiFi-GAN: Generative Adversarial Networks for Efficient and High Fidelity Speech Synthesis

- Parallel WaveGAN: A fast waveform generation model based on generative adversarial networks with multi-resolution spectrogram

- WaveNet: A Generative Model for Raw Audio