This repository contains code for deep face forgery detection in video frames. It is a student project from the Advanced Deep Learning for Computer Vision course at TUM.

Using transfer learning, we achieved new state-of-the-art performance on the FaceForensics benchmark.

To detect video frame forgeries, we use the FaceForensics++ dataset of pristine and manipulated videos. As a preprocessing step, we extract faces from all frames using MTCNN. The full dataset is ~507 GB and contains 7 million frames. The code for downloading the dataset and extracting frames is located in the `dataset` directory. For model training, we use the split from the FaceForensics++ repository.
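As a rough illustration of how a split can be turned into training labels, the sketch below assumes that each split file (for example a hypothetical `splits/train.json`) is a JSON list of `[id_a, id_b]` pairs, that the two pristine videos are named `id_a` and `id_b`, and that the corresponding manipulated videos are named `id_a_id_b` and `id_b_id_a`. Please check these assumptions against the FaceForensics++ repository before relying on them; the `0 = pristine, 1 = manipulated` label convention is also just a choice made for this sketch.

```python
import json
from typing import List, Tuple

# Label convention assumed for this sketch only.
PRISTINE, MANIPULATED = 0, 1


def label_videos(pairs: List[List[str]]) -> List[Tuple[str, int]]:
    """Turn split pairs into (video_name, label) samples.

    Assumed naming: pair [a, b] covers the pristine videos "a" and "b"
    and the manipulated videos "a_b" and "b_a".
    """
    samples = []
    for a, b in pairs:
        samples += [(a, PRISTINE), (b, PRISTINE)]
        samples += [(f"{a}_{b}", MANIPULATED), (f"{b}_{a}", MANIPULATED)]
    return samples


def load_split(path: str) -> List[Tuple[str, int]]:
    # Read one split file (hypothetical path, e.g. "splits/train.json").
    with open(path) as f:
        return label_videos(json.load(f))
```

Under this naming scheme, each pair contributes two pristine videos but two manipulated videos per manipulation method, so the fake class becomes over-represented when several methods are used unless it is subsampled or weighted.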

Main technologies:

- Python as the main programming language
- PyTorch as the deep learning library
- pip for dependency management

All training and evaluation code, together with the various models, is stored in the `src` directory. All scripts are self-documenting: run any of them with the `--help` option to see its usage.

The scripts automatically use a GPU when one is available; they also run on a CPU, but unfortunately very slowly.
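For readers writing their own scripts in the same style, here is a minimal sketch of the two conventions described above: `argparse` generates the `--help` text from the argument descriptions, and the device is chosen with `torch.cuda.is_available()`. The flags `--data-dir`, `--epochs`, and `--cpu` are hypothetical and do not necessarily match the scripts in `src`.

```python
import argparse
from typing import List, Optional

import torch


def parse_args(argv: Optional[List[str]] = None) -> argparse.Namespace:
    # argparse builds the --help output from these descriptions automatically.
    parser = argparse.ArgumentParser(description="Train a face forgery classifier (sketch).")
    parser.add_argument("--data-dir", required=True, help="directory with extracted face crops")
    parser.add_argument("--epochs", type=int, default=10, help="number of training epochs")
    parser.add_argument("--cpu", action="store_true", help="force CPU even if a GPU is available")
    return parser.parse_args(argv)


def select_device(force_cpu: bool = False) -> torch.device:
    # Prefer the GPU when CUDA is available; otherwise fall back to the (slow) CPU.
    if not force_cpu and torch.cuda.is_available():
        return torch.device("cuda")
    return torch.device("cpu")
```

A training script would call `select_device(parse_args().cpu)` once and then move both the model and every batch to the returned device with `.to(device)`.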

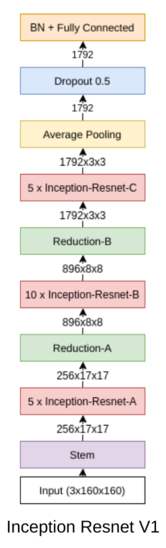

We obtained the best single-frame classification accuracy with a version of the Inception-ResNet-V1 model pretrained on the VGGFace2 face recognition dataset.
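One common way to set up this kind of transfer learning is to keep a pretrained face-embedding network as a feature extractor and train only a small binary head on top of it. The sketch below assumes the backbone maps a batch of face crops to 512-dimensional embeddings, which is what `InceptionResnetV1(pretrained="vggface2")` from the `facenet-pytorch` package returns. This README does not say which implementation the project uses, nor whether the backbone was frozen or fine-tuned, so `FrameForgeryClassifier` and its `freeze_backbone` option are hypothetical.

```python
import torch
from torch import nn


class FrameForgeryClassifier(nn.Module):
    """Binary real/fake classifier on top of a pretrained face-embedding backbone."""

    def __init__(self, backbone: nn.Module, embedding_dim: int = 512, freeze_backbone: bool = True):
        super().__init__()
        self.backbone = backbone
        self.freeze_backbone = freeze_backbone
        if freeze_backbone:
            # Keep the face-recognition features fixed; only the new head is trained.
            for p in self.backbone.parameters():
                p.requires_grad = False
            self.backbone.eval()
        # One logit per face; positive means "manipulated" under the assumed 1 = fake convention.
        self.head = nn.Linear(embedding_dim, 1)

    def train(self, mode: bool = True) -> "FrameForgeryClassifier":
        super().train(mode)
        if self.freeze_backbone:
            # A frozen backbone stays in eval mode so its batch-norm statistics do not drift.
            self.backbone.eval()
        return self

    def forward(self, faces: torch.Tensor) -> torch.Tensor:
        # faces: (batch, 3, H, W) face crops -> (batch,) logits
        return self.head(self.backbone(faces)).squeeze(1)
```

The single logit pairs naturally with `nn.BCEWithLogitsLoss`. Setting `freeze_backbone=False` turns the same module into full fine-tuning, which usually calls for a smaller learning rate on the backbone.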

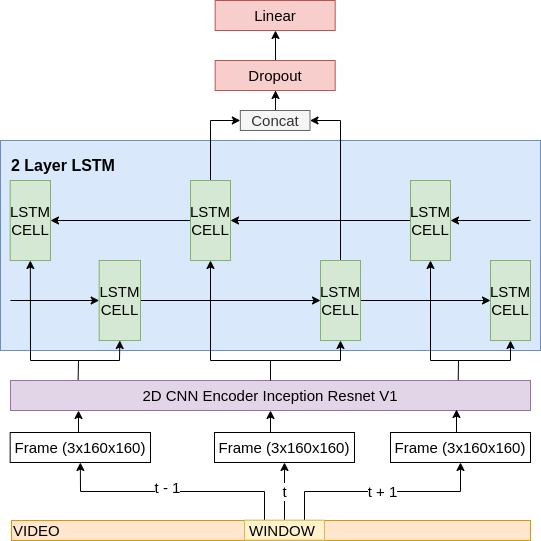

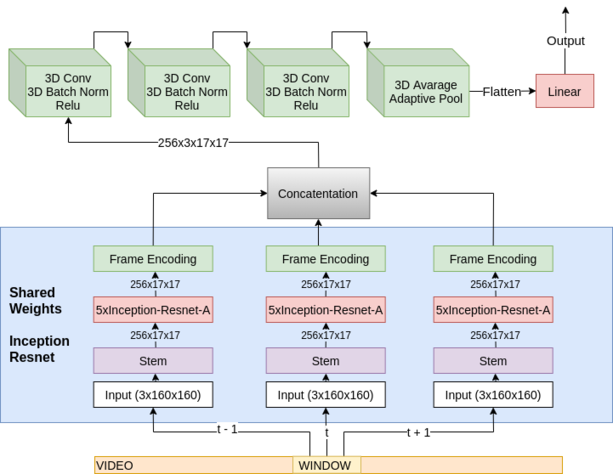

We also evaluated how much performance improves when temporal information is incorporated. In this setting, the task changes from single-frame classification to frame-sequence classification. We used two different models for this approach: a 3D convolutional network and a Bi-LSTM.
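A minimal sketch of the Bi-LSTM variant, assuming each frame has already been mapped to an embedding (for example by a pretrained single-frame model). `sliding_windows` cuts a video's frame embeddings into fixed-length, possibly overlapping windows, and `BiLSTMSequenceClassifier` reads each window in both directions and predicts one real/fake logit per window. Both names, as well as the window length, step, and hidden size, are hypothetical and not taken from the project.

```python
import torch
from torch import nn


def sliding_windows(frame_embeddings: torch.Tensor, window: int, step: int) -> torch.Tensor:
    # (num_frames, dim) -> (num_windows, window, dim); unfold appends the window axis last.
    return frame_embeddings.unfold(0, window, step).permute(0, 2, 1).contiguous()


class BiLSTMSequenceClassifier(nn.Module):
    """Classify a window of frames from their per-frame embeddings (sketch)."""

    def __init__(self, embedding_dim: int = 512, hidden_dim: int = 128):
        super().__init__()
        self.lstm = nn.LSTM(embedding_dim, hidden_dim, batch_first=True, bidirectional=True)
        # Final forward and backward states are concatenated -> 2 * hidden_dim features.
        self.head = nn.Linear(2 * hidden_dim, 1)

    def forward(self, frame_embeddings: torch.Tensor) -> torch.Tensor:
        # frame_embeddings: (batch, window, embedding_dim)
        _, (h_n, _) = self.lstm(frame_embeddings)
        # With one layer, h_n is (2, batch, hidden_dim): index 0 is forward, 1 is backward.
        features = torch.cat([h_n[0], h_n[1]], dim=1)
        return self.head(features).squeeze(1)
```

For a video with `N` frames, `sliding_windows` yields `(N - window) // step + 1` windows; if a video-level score is needed, the per-window predictions can, for instance, be averaged.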

The temporal feature locality assumption built into the 3D convolutional model seems reasonable in this case, but the model is very slow to train for large window sizes.
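For comparison, a small 3D convolutional classifier over raw face crops can be sketched as follows; the class name and layer sizes are hypothetical. Each `3x3x3` kernel only looks at three neighbouring frames, which is the temporal locality assumption, and the temporal receptive field grows only as layers and pooling are stacked.

```python
import torch
from torch import nn


class Conv3dSequenceClassifier(nn.Module):
    """Small 3D CNN over a window of face crops (sketch)."""

    def __init__(self, channels: int = 32):
        super().__init__()
        self.features = nn.Sequential(
            # Each 3x3x3 kernel sees only 3 neighbouring frames (temporal locality).
            nn.Conv3d(3, channels, kernel_size=3, padding=1),
            nn.ReLU(inplace=True),
            # Halves time, height and width; the window needs at least 2 frames.
            nn.MaxPool3d(kernel_size=2),
            nn.Conv3d(channels, 2 * channels, kernel_size=3, padding=1),
            nn.ReLU(inplace=True),
            # Collapse time, height and width so any window size is accepted.
            nn.AdaptiveAvgPool3d(1),
        )
        self.head = nn.Linear(2 * channels, 1)

    def forward(self, clips: torch.Tensor) -> torch.Tensor:
        # clips: (batch, 3, window, H, W) -> (batch,) logits
        return self.head(self.features(clips).flatten(1)).squeeze(1)
```

Because every convolution is evaluated at each time step, the activations and compute of each layer grow roughly linearly with the window length, which is one reason large windows make training slow.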